Kubernetes success stories in production. Part 3: GitHub

We continue to talk about successful examples of using Kubernetes in production. New case - quite fresh. Details about him appeared only yesterday. And what is even more significant, it will be about a major online service, which every reader of the Habra will probably work in one way or another - GitHub.

The fact that GitHub is engaged in the implementation of Kubernetes in its production, was first publicly known a month ago from the Twitter account of the SRE-engineer of the Aaron Brown company. Then he briefly reported:

')

That is: “if you go through the pages of GitHub today, then you may be interested in the fact that from this day all web content is delivered using Kubernetes”. The following answers clarified that the traffic to the Docker containers managed by Kubernetes was switched for the web frontend and the Gist service, and the API applications were in the process of migration. Containerization in GitHub has affected only stateless applications, because the situation with stateful products is more complicated and “[for maintenance] MySQL, Redis and Git have already been extensively automated [in GitHub]”. The choice for Kubernetes was called optimal for GitHub employees with a note that "Mesos / Nomad is neither worse nor better - they are just different."

There was little information, but GitHub engineers promised to talk about the details soon. And yesterday Jesse Newland, SRE senior in the company, published the long-awaited article “ Kubernetes at GitHub ”, and just 8 hours before this publication on Habré, the already mentioned Aaron Brown spoke at the belatedly celebration of Kubernetes 2 anniversary in Apprenda with the corresponding report:

Quote from the report of Aaron: "" I dream to spend more time setting up hosts ", - no engineer, never"

Until recent events, the main GitHub application, written in Ruby on Rails, has changed little over the past 8 years since its creation:

As GitHub grew (employees, the number of features and services, user requests), there were difficulties, and in particular:

Engineers and developers started a joint project to solve these problems, which led to the study and comparison of existing container orchestration platforms. Evaluating Kubernetes, they identified several advantages:

To organize the deployment of the main Ruby GitHub application using the Kubernetes infrastructure, a so-called “Assessment Lab” was created. It consisted of the following projects:

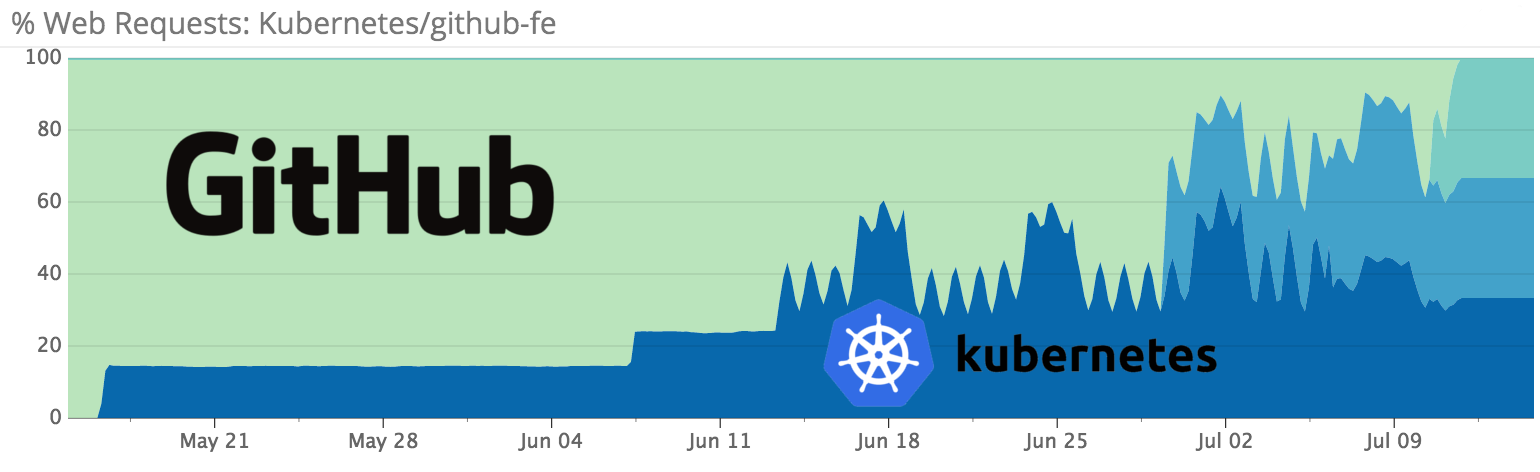

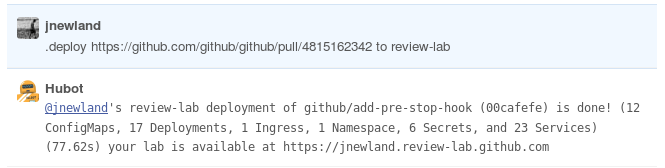

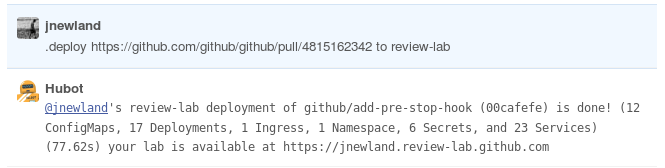

The bottom line is a chat-based interface for deploying a GitHub application at any pull request:

The implementation of the laboratory proved to be excellent, and by the beginning of June, the entire GitHub deployed to the new scheme.

The next stage in the implementation of Kubernetes was the construction of a very demanding infrastructure performance and reliability for the company's main service in production - github.com.

The basic infrastructure of GitHub is the so-called metal cloud (a cloud running on physical servers in its own data centers). Of course, Kubernetes was required to run, given the existing specifics. To this end, the company's engineers again implemented a number of auxiliary projects:

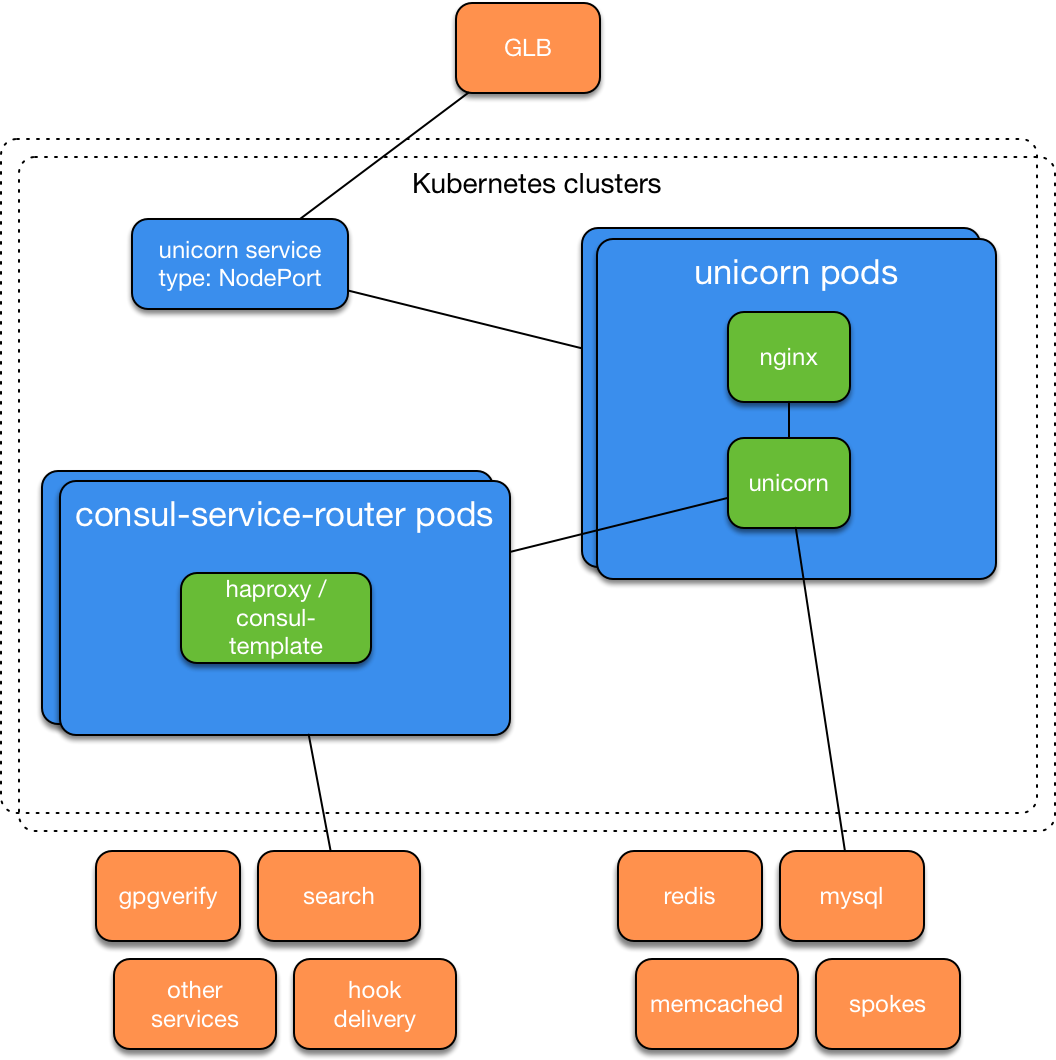

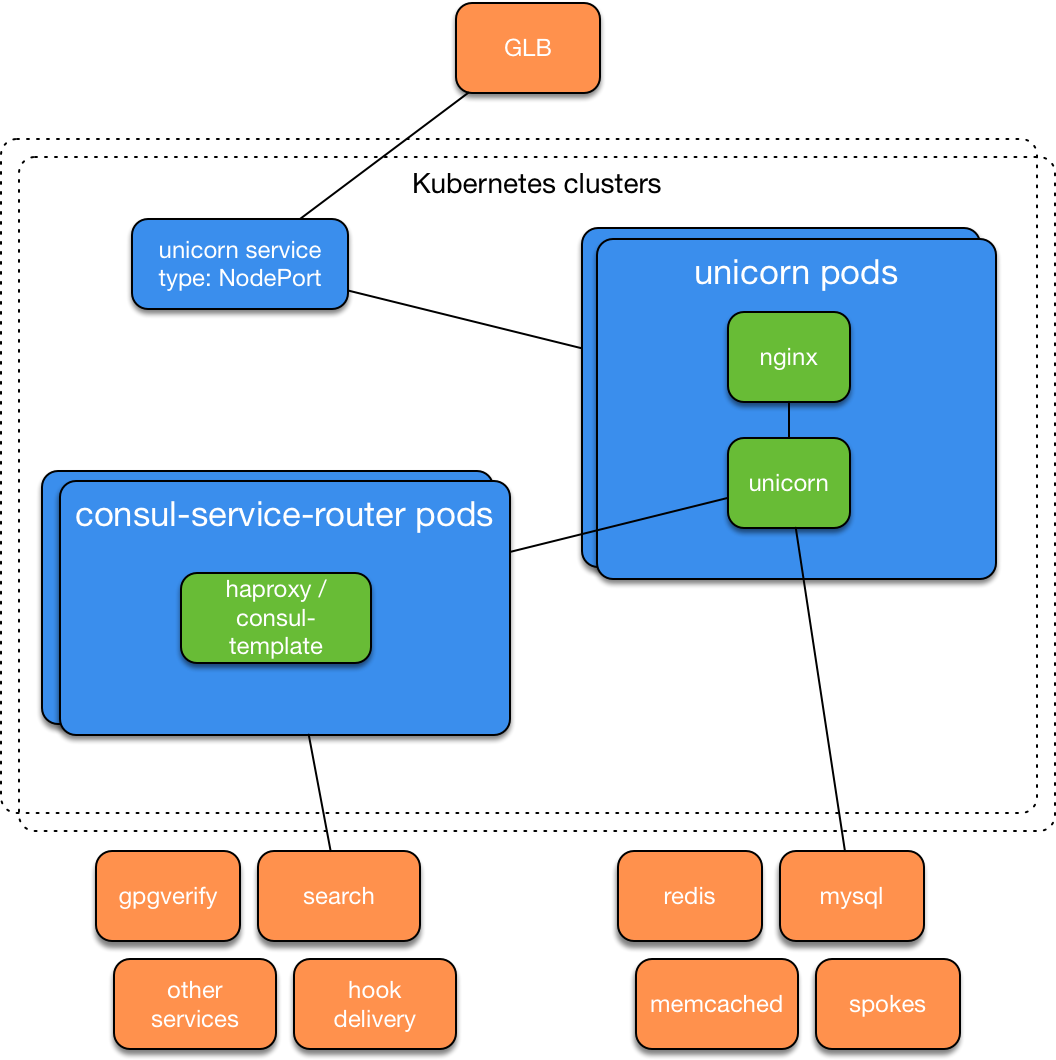

The bottom line is the Kubernetes cluster on the iron servers, which passed internal tests and in less than a week began to be used for migrating from AWS. After creating additional such clusters, GitHub engineers launched a copy of the combat github.com on Kubernetes and (using GLB) offered their employees a button to switch between the original installation of the application and the version in Kubernetes. The services architecture looked like this:

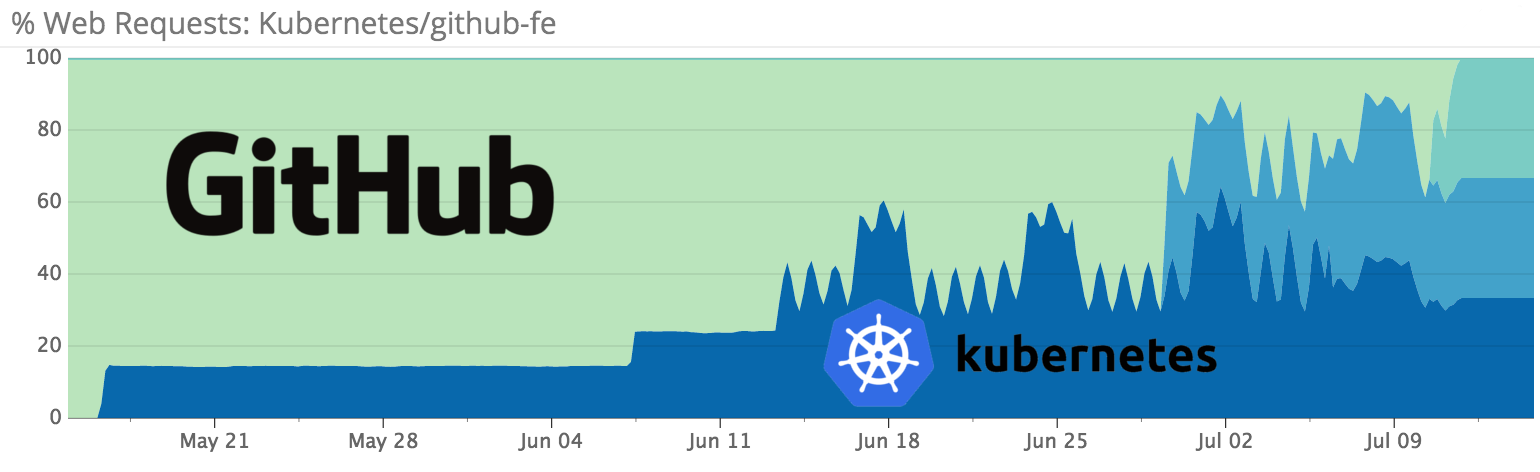

After fixing the problems found by employees, a gradual switching of user traffic to new clusters began: first, 100 requests per second, and then 10% of all requests to github.com and api.github.com.

Go from 10% to 100% of the traffic did not hurry. Part-load tests showed unexpected results: the failure of one Kubernetes apiserver node had a negative impact on the availability of available resources in general — the reason “apparently” was associated with interaction between different clients connecting to apiserver (calico-agent, kubelet, kube -proxy, kube-controller-manager), and the behavior of the internal load balancer when the apiserver node falls. Therefore, GitHub decided to run the main application on several clusters in different places and redirect requests from problematic clusters to workers.

By mid-July of this year, 100% of GitHub production traffic was redirected to Kubernetes-based infrastructure.

One of the remaining problems, according to the company's engineer, is that sometimes during heavy loads, on some Kubernetes nodes, a kernel panic occurs, after which they reboot. Although outwardly for users it is not noticeable at all, the SRE-team has a high priority on finding out the reasons for this behavior and eliminating them, and the tests that test fault tolerance have specifically added the call to kernel panic (via

First information

The fact that GitHub is engaged in the implementation of Kubernetes in its production, was first publicly known a month ago from the Twitter account of the SRE-engineer of the Aaron Brown company. Then he briefly reported:

')

That is: “if you go through the pages of GitHub today, then you may be interested in the fact that from this day all web content is delivered using Kubernetes”. The following answers clarified that the traffic to the Docker containers managed by Kubernetes was switched for the web frontend and the Gist service, and the API applications were in the process of migration. Containerization in GitHub has affected only stateless applications, because the situation with stateful products is more complicated and “[for maintenance] MySQL, Redis and Git have already been extensively automated [in GitHub]”. The choice for Kubernetes was called optimal for GitHub employees with a note that "Mesos / Nomad is neither worse nor better - they are just different."

There was little information, but GitHub engineers promised to talk about the details soon. And yesterday Jesse Newland, SRE senior in the company, published the long-awaited article “ Kubernetes at GitHub ”, and just 8 hours before this publication on Habré, the already mentioned Aaron Brown spoke at the belatedly celebration of Kubernetes 2 anniversary in Apprenda with the corresponding report:

Quote from the report of Aaron: "" I dream to spend more time setting up hosts ", - no engineer, never"

Why bother Kubernetes in GitHub at all?

Until recent events, the main GitHub application, written in Ruby on Rails, has changed little over the past 8 years since its creation:

- On Ubuntu servers configured using Puppet, God’s process manager ran Unicorn web server.

- For deployment, Capistrano was used, which was connected via SSH to each front-end server, updated the code and restarted the processes.

- When the peak load exceeded the available capacity, SRE engineers added new frontend servers using gPanel, IPMI, iPXE, Puppet Facter and Ubuntu PXE image in their workflow (read more about this here ) .

As GitHub grew (employees, the number of features and services, user requests), there were difficulties, and in particular:

- some teams needed to “extract” a small part of their functionality from large services for a separate launch / deployment;

- the increase in the number of services led to the need to support a variety of similar configurations for dozens of applications (more time was spent on server support and provisioning);

- Deploy new services (depending on their complexity) took days, weeks or even months.

Over time, it became clear that this approach does not provide our engineers with the flexibility that was necessary to create a world-class service. Our engineers needed a self-service platform that they could use for experimenting with new services, their deployment and scaling. In addition, it was necessary that the same platform satisfy the requirements of the main application on Ruby on Rails, so that engineers and / or robots could respond to changes in load by allocating additional computing resources in seconds, rather than hours, days, or longer.

Engineers and developers started a joint project to solve these problems, which led to the study and comparison of existing container orchestration platforms. Evaluating Kubernetes, they identified several advantages:

- active open source community supporting the project;

- positive experience of the first launch (the first deployment of the application in a small cluster took only a few hours);

- extensive information about the experience of the authors Kubernetes, which led them to the existing architecture.

Kubernetes Deplo

To organize the deployment of the main Ruby GitHub application using the Kubernetes infrastructure, a so-called “Assessment Lab” was created. It consisted of the following projects:

- A Kubernetes cluster running in the AWS VPC cloud and managed with Terraform and kops.

- A set of integration tests for Bash that perform checks on temporary Kubernetes clusters that were actively used at the beginning of the project.

Dockerfilefor the application.- Improvements to the internal platform for continuous integration (CI) to support the assembly of containers and their publication in the registry.

- YAML submissions 50+ resources used in Kubernetes.

- Improvements in the internal deployment application for “forwarding” Kubernetes resources from the repository to the Kubernetes namespace and creating Kubernetes secrets (from the internal repository).

- Service based on HAProxy and consul-template for redirecting traffic from pods from Unicorn to existing services.

- Service that sends emergency events from Kubernetes to the internal error reporting system.

- The kube-me service, which is compatible with chatops-rpc and provides chat users with limited access to kubectl commands.

The bottom line is a chat-based interface for deploying a GitHub application at any pull request:

The implementation of the laboratory proved to be excellent, and by the beginning of June, the entire GitHub deployed to the new scheme.

Kubernetes for infrastructure

The next stage in the implementation of Kubernetes was the construction of a very demanding infrastructure performance and reliability for the company's main service in production - github.com.

The basic infrastructure of GitHub is the so-called metal cloud (a cloud running on physical servers in its own data centers). Of course, Kubernetes was required to run, given the existing specifics. To this end, the company's engineers again implemented a number of auxiliary projects:

- Calico was chosen as the network provider, which “out of the box provided the necessary functionality for the rapid deployment of the cluster in

ipipmode”. - Repeated (“at least a dozen times”) reading Kubernetes the hard way helped assemble several manually serviced servers into a temporary cluster Kubernetes, which successfully passed the integration tests used for existing AWS-based clusters.

- Create a small utility that generates the CA and configuration for each cluster in a format recognized by the used Puppet and secrets storage systems.

- Puppet'izatsiya configuration of two roles (Kubernetes node and Kubernetes apiserver).

- Creation of a service (in the Go language) that collects the logs of the containers, adds metadata to each line in the key-value format and sends them to the syslog for the host.

- Add support for Kubernetes NodePort Services in a load balancing service ( GLB ).

The bottom line is the Kubernetes cluster on the iron servers, which passed internal tests and in less than a week began to be used for migrating from AWS. After creating additional such clusters, GitHub engineers launched a copy of the combat github.com on Kubernetes and (using GLB) offered their employees a button to switch between the original installation of the application and the version in Kubernetes. The services architecture looked like this:

After fixing the problems found by employees, a gradual switching of user traffic to new clusters began: first, 100 requests per second, and then 10% of all requests to github.com and api.github.com.

Go from 10% to 100% of the traffic did not hurry. Part-load tests showed unexpected results: the failure of one Kubernetes apiserver node had a negative impact on the availability of available resources in general — the reason “apparently” was associated with interaction between different clients connecting to apiserver (calico-agent, kubelet, kube -proxy, kube-controller-manager), and the behavior of the internal load balancer when the apiserver node falls. Therefore, GitHub decided to run the main application on several clusters in different places and redirect requests from problematic clusters to workers.

By mid-July of this year, 100% of GitHub production traffic was redirected to Kubernetes-based infrastructure.

One of the remaining problems, according to the company's engineer, is that sometimes during heavy loads, on some Kubernetes nodes, a kernel panic occurs, after which they reboot. Although outwardly for users it is not noticeable at all, the SRE-team has a high priority on finding out the reasons for this behavior and eliminating them, and the tests that test fault tolerance have specifically added the call to kernel panic (via

echo c > /proc/sysrq-trigger ) . Despite this, the authors are generally satisfied with their experience and are going to migrate more to such an architecture, as well as to begin experiments with the launch of stateful services in Kubernetes.Other articles from the cycle

- “ Kubernetes success stories in production. Part 1: 4,200 pods and TessMaster on eBay . ”

- “ Kubernetes success stories in production. Part 2: Concur and SAP . ”

- “ Kubernetes success stories in production. Part 4: SoundCloud (by Prometheus) . ”

- “ Kubernetes success stories in production. Part 5: Monzo Digital Bank .

- “ Kubernetes success stories in production. Part 6: BlaBlaCar .

- “ Kubernetes success stories in production. Part 7: BlackRock .

- “ Kubernetes success stories in production. Part 8: Huawei .

- “ Kubernetes success stories in production. Part 9: CERN and 210 K8s clusters. ”

- “ Kubernetes success stories in production. Part 10: Reddit .

Source: https://habr.com/ru/post/335814/

All Articles