Create a self-contained Docker cluster

A self-sufficient system is one that is able to recover and adapt. Recovery means that the cluster will almost always be in the state in which it was designed. For example, if a copy of the service fails, the system will need to restore it. Adaptation is associated with the modification of the desired state, so that the system can cope with the changed conditions. A simple example would be an increase in traffic. In this case, services will need to scale. When recovery and adaptation is automated, we get a self-healing and self-adaptive system. Such a system is self-sufficient and can operate without human intervention.

What does a self-contained system look like? What are its main parts? Who are the characters? In this article we will discuss only services and ignore the fact that iron is also very important. With such restrictions, we will compose a high-level picture that describes the (mostly) autonomous system in terms of services. We omit the details and take a look at the bird's-eye view of the system.

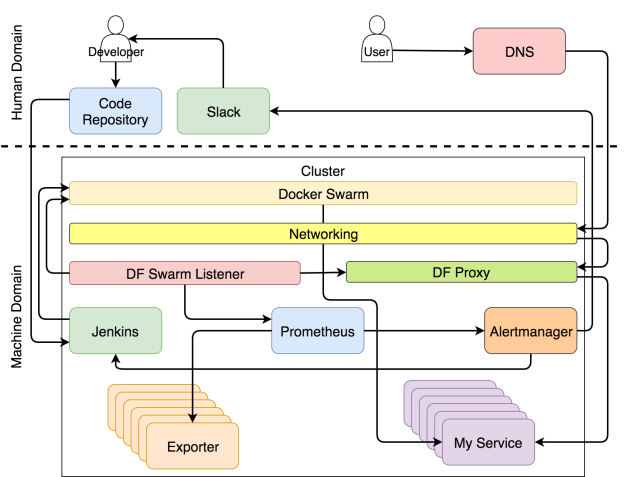

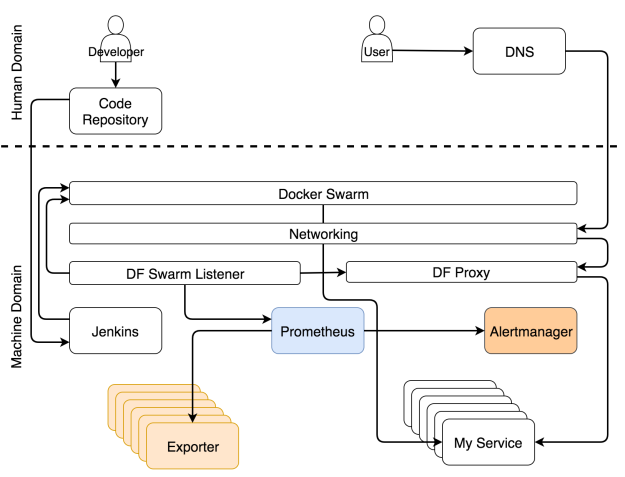

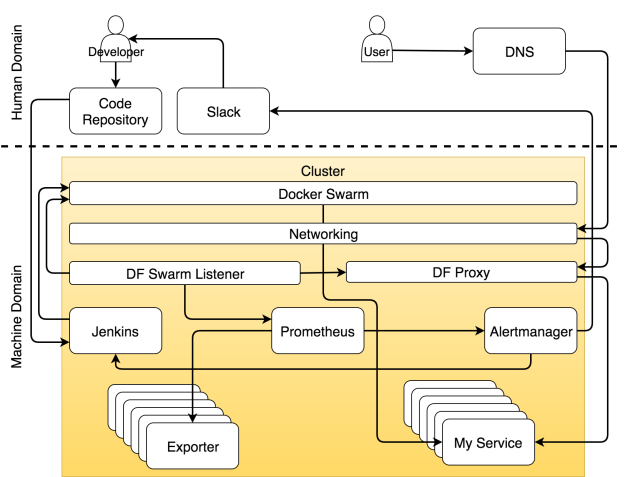

If you are well versed in the subject and want to understand everything at once, the system is shown in the figure below.

System with self-restoring and self-adapting services

Perhaps such a diagram is difficult to understand on the go. If I got rid of you in such a pattern, you might think that empathy is not the brightest trait of my character. In that case, you are not alone. My wife thinks the same way even without any diagrams there. But this time I will do everything possible to change your opinion and start from scratch.

We can divide the system into two main areas - human and machine. Consider that you are in the Matrix . If you have not seen this movie, immediately postpone this article, take out the popcorn and go ahead.

In the Matrix, the world has enslaved the machines. People there do little, except for the few who realize what is happening. Most live in a dream, which reflects past events of human history. The same thing is happening with modern clusters. Most treats them as if 1999 were in the yard. Almost all actions are performed manually, the processes are cumbersome, and the system survives only due to brute force and wasted energy. Some realized that it was already 2017 in the yard (at least at the time of this writing) and that a well-designed system should do most of the work autonomously. Practically everything has to be driven by machines, not people.

But this does not mean that there is no room left for people. There is work for us, but it is more connected with creative and non-recurring tasks. Thus, if we focus only on cluster operations, the human area of responsibility will decrease and give way to machines. Tasks are distributed by roles. As you will see below, a tool’s specialization or human can be very narrow, and then it will perform only one type of task, or it may be responsible for many aspects of operations.

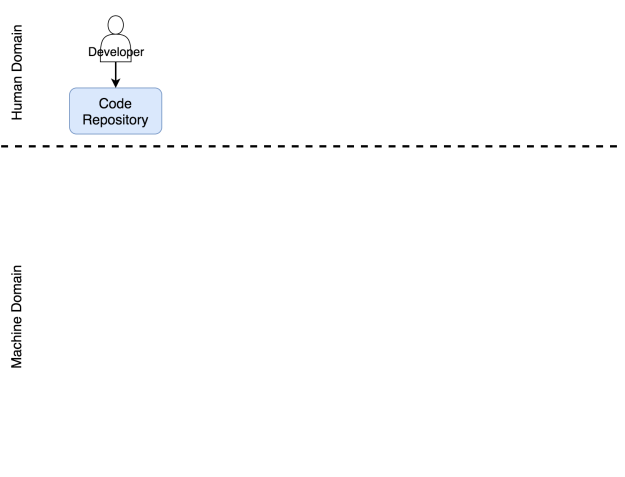

Developer role in the system

The person’s area of responsibility includes the processes and tools that need to be managed manually. From this area we are trying to remove all repetitive actions. But this does not mean that it should disappear altogether. Just the opposite. When we get rid of repetitive tasks, we release time that can be spent on truly meaningful tasks. The less we are engaged in tasks that can be delegated to the machine, the more time we can spend on those tasks that require a creative approach. This philosophy is on a par with the strengths and weaknesses of each actor in this drama. The machine is well controlled with numbers. They are able to very quickly perform the specified operations. In this matter, they are much better and more reliable than we are. We, in turn, are able to think critically. We can think creatively. We can program these machines. We can tell them what to do and how.

I designated the developer as the main character in this drama. I deliberately refused the word “coder”. A developer is any person who works on a software development project. He can be a programmer, a tester, a guru of operations, or a scrum master — that is all not important. I put all these people in a group called developer. As a result of their work, they must place some code in the repository. As long as it is not there, it does not seem to exist. It does not matter whether it is located on your computer, in a laptop, on a table or on a small piece of paper attached to a carrier pigeon. From the point of view of the system, this code does not exist until it reaches the repository. I hope this is a Git repository, but, in theory, it could be any place where you can store anything and track versions.

This repository is also a person’s responsibility. Although it is software, it belongs to us. We work with him. We update the code, download it from the repository, merge its parts together and sometimes we are horrified by the number of conflicts. But one cannot conclude that there are no automated operations at all, nor that some areas of machine responsibility do not require human intervention. And yet, if in a certain area most of the tasks are performed manually, we will consider it to be a field of human responsibility. The code repository is definitely part of a system that requires human intervention.

The developer sends the code to the repository.

Let's see what happens when the code is sent to the repository.

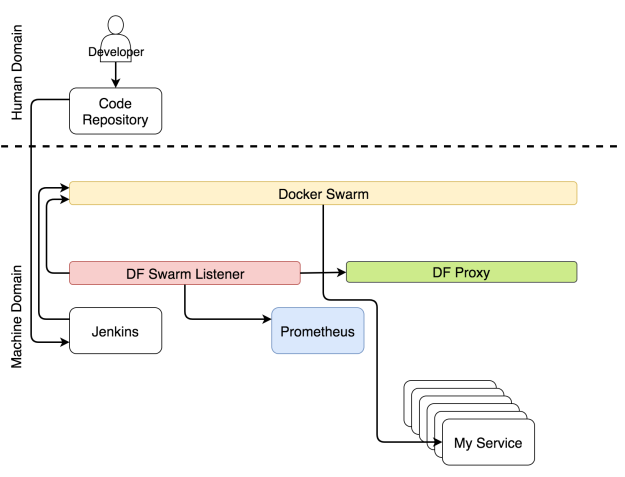

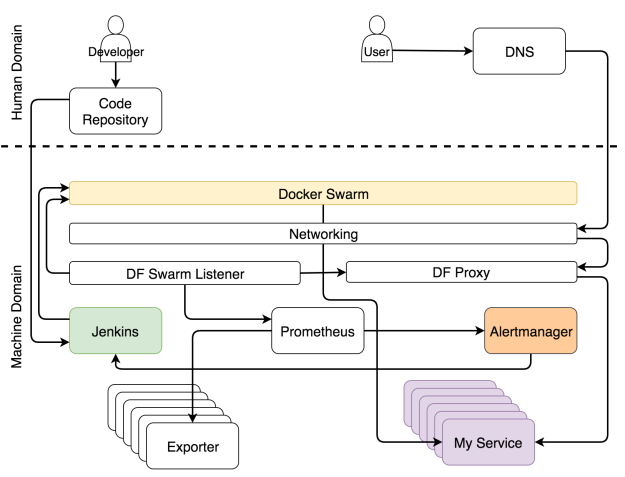

The role of continuous deployment in the system

The process of continuous deployment is fully automated. No exceptions. If your system is not automated, then you do not have a continuous deployment. You may need to manually deploy to production. If you manually click on a single button on which “deploy” is written in bold, then your process is continuous delivery. That I can understand. Such a button may be required from a business point of view. And yet the level of automation in this case is the same as in continuous deployment. You are only making decisions here. If you need to do something else manually, then you either perform continuous integration, or, more likely, do something in whose name there is no word “continuous”.

It doesn’t matter whether continuous deployment or delivery is involved, the process should be fully automated. All manual actions can be justified only by the fact that you have an outdated system that your organization prefers not to touch (usually this application is in Cobol). She just stands on the server doing something. I really like rules like “nobody knows what she does, so it’s better not to touch her”. It is a way to express the greatest respect, while maintaining a safe distance. And yet I will assume that this is not your case. You want to do something with it, the desire to literally tear you apart. If this is not the case and you are not lucky to work with the ala “hands off from here” system, then you should not read this article, I am surprised that you did not understand this before.

As soon as the repository receives a commit or pull request, the Web hook is triggered, which in turn sends the request to the continuous deployment tool to start the continuous deployment process. In our case, this tool is Jenkins. The query starts a stream of all sorts of continuous deployment tasks. He checks the code and conducts unit tests. He creates an image and pushes the register. It runs functional, integration, load and other tests - those that require working service. At the very end of the process (not counting the tests) a request is sent to the scheduler to deploy or update the service in the cluster. Among other planners, we choose Docker Swarm.

Deploying a service through Jenkins

Along with continuous deployment, there is another set of processes that follow the system configuration updates.

The role of service configuration in the system

Whatever cluster element is changed, you need to reconfigure some parts of the system. You may need to update the proxy configuration, the metric collector may need new targets, the log analyzer needs to update the rules.

No matter which parts of the system need to be changed, the main thing is that all these changes should be applied automatically. Few will argue with that. But there is a big question: where to find those parts of the information that should be embedded in the system? The most optimal place is the service itself. Since almost all planners use Docker, the most logical is to store information about the service in the service itself in the form of labels. If we place this information in any other place, we will lose a single true source and it will become very difficult to perform auto-detection.

If information about the service is inside it, it does not mean that the same information should not be placed in other places within the cluster. It should. However, the service is the place where the primary information should be, and from that moment it should be transferred to other services. With Docker it is very simple. He already has an API to which anyone can connect and get information about any service.

There is a good tool that finds information about the service and spreads it throughout the system - this is the Docker Flow Swarm Listener (DFSL). You can use any other solution or create your own. The ultimate goal of this and any other such tool is to listen to Docker Swarm events. If the service has a special set of shortcuts, the application will receive information as soon as you install or update the service. After that, it will transmit this information to all interested parties. In this case, it is the Docker Flow Proxy (DFP, inside which there is a HAProxy) and the Docker Flow Monitor (DFM, inside there is a Prometheus). As a result, both will always have the latest actual configuration. Proxy has a path to all public services, while Prometheus has information about exporters, alerts, the Alertmanager address and other things.

Reconfiguration of the system through the Docker Flow Swarm Listener

While deploying and reconfiguring, users should have access to our services without downtime.

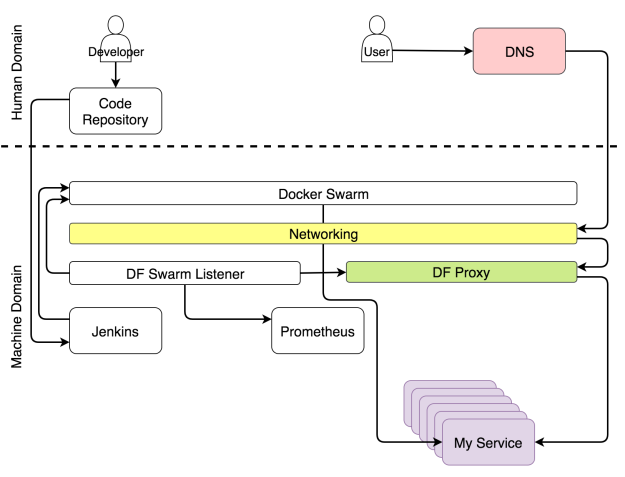

Proxy role in the system

Each cluster must have a proxy that will accept requests from a single port and redirect them to the designated services. The only exception to this rule is public service. For such a service, the question will be not only the need for a proxy, but also a cluster in general. When a request comes to a proxy, it is evaluated and, depending on its path, domain and some headers, is redirected to one of the services.

Thanks to Docker, some aspects of the proxy are now obsolete. No need to balance load. Docker Overlay does it for us. No longer need to support IP-nodes on which services are hosted. Service discovery does this for us. All that is required of the proxy is to evaluate the headers and forward the requests to the right place.

Because Docker Swarm always uses sliding updates when a particular aspect of the service changes, the continuous deployment process should not cause downtime. For this statement to be true, several requirements must be met. At least two replicas of the service should be running, and even better. Otherwise, if there is only one replica, a simple one is inevitable. For a minute, a second, or a millisecond - it does not matter.

Simple does not always cause a disaster. It all depends on the type of service. When Prometheus is updated, you can’t get away from idle time, because the program does not know how to scale. But this service can not be called public, unless you have several operators. A few seconds of downtime will not hurt anyone.

It is quite another thing - a public service like a large online store with thousands or even millions of users. If this service comes to rest, he will quickly lose his reputation. We, consumers, are so spoiled that even a single failure will force us to change our point of view and go look for a replacement. If this failure will be repeated again and again, the loss of business is almost guaranteed. Continuous deployment has a lot of advantages, but since it is used quite often, there are more and more potential problems, and simple is one of them. In fact, you should not allow idleness in one second if it is repeated several times a day.

There is good news: if you combine rolling updates and multiple replicas, you can avoid downtime, provided that the proxy will always be the latest version.

If you combine recurring updates with a proxy that dynamically reconfigures itself, then we get a situation where the user can send a request to the service at any time and will not be affected by continuous deployment, failure, or any other changes in the cluster status. When a user sends a request to a domain, this request penetrates the cluster through any working node, and is intercepted by the Ingress Docker network. The network in turn determines that the request uses the port on which the proxy listens and forwards it there. A proxy, on the other hand, evaluates the path, domain, and other aspects of the request and forwards it to the designated service.

We use the Docker Flow Proxy (DFP), which adds the desired level of dynamism on top of HAProxy.

Request path to the assigned service

The next role we discuss is related to collecting metrics.

The role of metrics in the system

Data is a key part of any cluster, especially one that targets self-adaptation. It is unlikely that anyone will challenge that we need both past and current metrics. Happen that, without them, we will run like that rooster in the yard, to whom the cook has cut off his head. The main question is not whether they are needed, but what to do with them. Usually, the operators endlessly stare at the monitor. This approach is far from effective. Take a better look at Netflix. They at least approach the issue of fun. The system must use metrics. The system generates them, collects and makes decisions about what actions to take when these metrics reach certain thresholds. Only then can the system be called self-adaptive. Only when it acts without human intervention, are they self-sufficient.

A self-adapting system needs to collect data, store it and apply different actions to it. We will not discuss what is better - sending data or collecting them. But since we use Prometheus to store and evaluate data, as well as to generate alerts, we will collect data. This data is available from exporters. They can be general (for example, Node Exporter, cAdvisor, etc.) or specific to the service. In the latter case, services should provide metrics in a simple format that Prometheus expects.

Regardless of the flows we described above, exporters issue different types of metrics. Prometheus periodically collects them and stores them in a database. In addition to collecting metrics, Prometheus also constantly evaluates the thresholds set by alerts, and if the system reaches one of them, the data is transmitted to the Alertmanager . In most cases, these limits are reached when conditions change (for example, the system load has increased).

Data collection and alerts

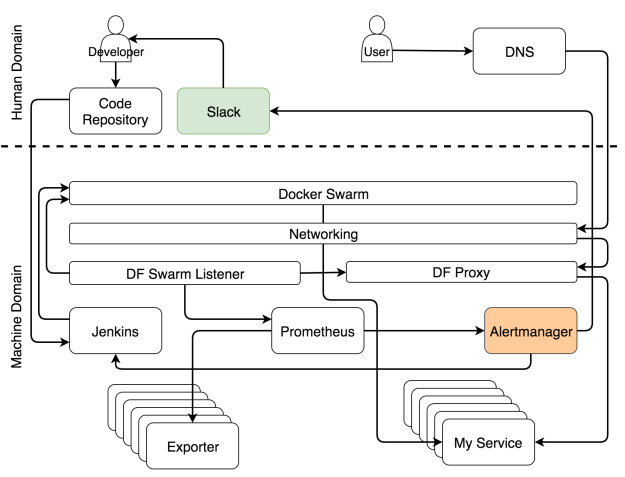

The role of alerts in the system

Alerts are divided into two main groups depending on who receives them - the system or the person. When an alert is rated as systemic, the request is usually sent to a service that is able to assess the situation and complete the tasks that prepare the system. In our case, this is Jenkins, which performs one of the predefined tasks.

The most common tasks that Jenkins performs are usually to scale up (or scale to) the service. However, before he tries to scale, he needs to find out the current number of replicas and compare them with the higher and lower limits that we set using labels. If, on the basis of scaling, the number of replicas will go beyond these limits, it will send a notification to Slack so that the person will decide what actions need to be taken to solve the problem. On the other hand, when it maintains the number of replicas within specified limits, Jenkins sends a request to one of the Swarm managers, which in turn increases (or decreases) the number of replicas in the service. This process is called self-adaptation, because the system adapts to change without human intervention.

Notification system for self-adaptation

Although our goal is a completely autonomous system, in some cases one cannot do without a person. In fact, these are cases that are impossible to foresee. When something happens that we expected, let the system eliminate the error. A person should be called only when surprises occur. In such cases, the Alertmanager sends a notification to the person. In our version, this is notification via Slack , but in theory it can be any other service for sending messages.

When you start designing a self-healing system, most alerts will fall into the “unexpected” category. You cannot predict all situations. The only thing you can do in this case is to make sure that the unexpected happens only once. When you receive a notification, your first task is to adapt the system manually. The second is to improve the rules in Alertmanager and Jenkins so that when the situation repeats, the system can handle it automatically.

A notification for a person when something unexpected happens.

It is hard to set up a self-adapting system, and this work is endless. It needs to be constantly improved. What about self-healing? Is it so difficult to achieve it?

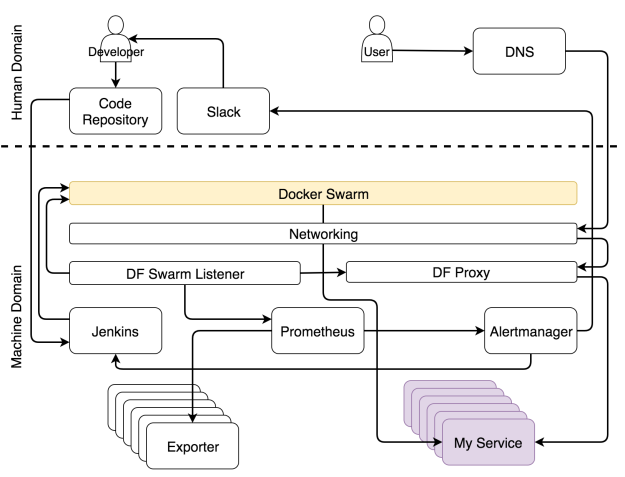

The role of the scheduler in the system

Unlike self-adaptation, self-healing is relatively easy to achieve. As long as enough resources are available, the scheduler will always ensure that a certain number of replicas are running. In our case, this is the Docker Swarm scheduler.

Replicas can fail, they can be killed and they can be inside an unhealthy node. This is all not so important, because Swarm ensures that they are restarted when necessary and (almost) always work normally. If all our services are scalable and at least a few replicas are running on each of them, there will never be any downtime. The self-healing processes inside the Docker will make the self-adaptation processes easily accessible. It is the combination of these two elements that makes our system completely autonomous and self-sufficient.

Problems begin to pile up when the service cannot be scaled. If we cannot have multiple replicas of the service, Swarm cannot guarantee the absence of downtime. If the replica fails, it will be restarted. However, if this is the only available replica, the period between the accident and the restart becomes simple. People have everything the same way. We get sick, lie in bed and after some time return to work. If we are the only employee in this company and there is no one to replace us while we are away, then this is a problem. The same applies to services. For a service that wants to avoid downtime, you must at least have two replicas.

Docker Swarm ensures that there is no downtime.

Unfortunately, our services are not always designed with scalability. But even when it is taken into account, there is always a chance that some of the third-party services that you use do not have it. Scalability is an important design decision, and we must take this requirement into account when we choose a new tool. It is necessary to clearly distinguish between services for which idle time is unacceptable and services that do not put the system at risk if they are unavailable for a few seconds. Once you learn to distinguish between them, you will always know which of the services are scalable. Scalability is a requirement for unrestricted services.

Cluster role in the system

In the end, everything we do is within one or more clusters. There are no individual servers anymore. We do not decide what to send. Planners do this. From our (human) point of view, the smallest object is a cluster in which resources such as memory and CPU are collected.

Everything is a cluster

')

Source: https://habr.com/ru/post/335464/

All Articles