Monitoring as a service: a modular system for microservice architecture

Today on our project, in addition to the monolithic code, dozens of microservices are functioning. Each of them requires that it be monitored. To do this in such volumes by the forces of DevOps engineers is problematic. We have developed a monitoring system that works as a service for developers. They can write their own metrics to the monitoring system, use them, build dashboards on their basis, attach alerts to them, which will be triggered when threshold values are reached. With DevOps engineers, only infrastructure and documentation.

This post is a transcript of my speech from our section on RIT ++. Many asked us to make text versions of reports from there. If you were at a conference or watched a video, you will not find anything new. And all the rest - welcome under cat. I'll tell you how we came to such a system, how it works and how we plan to update it.

')

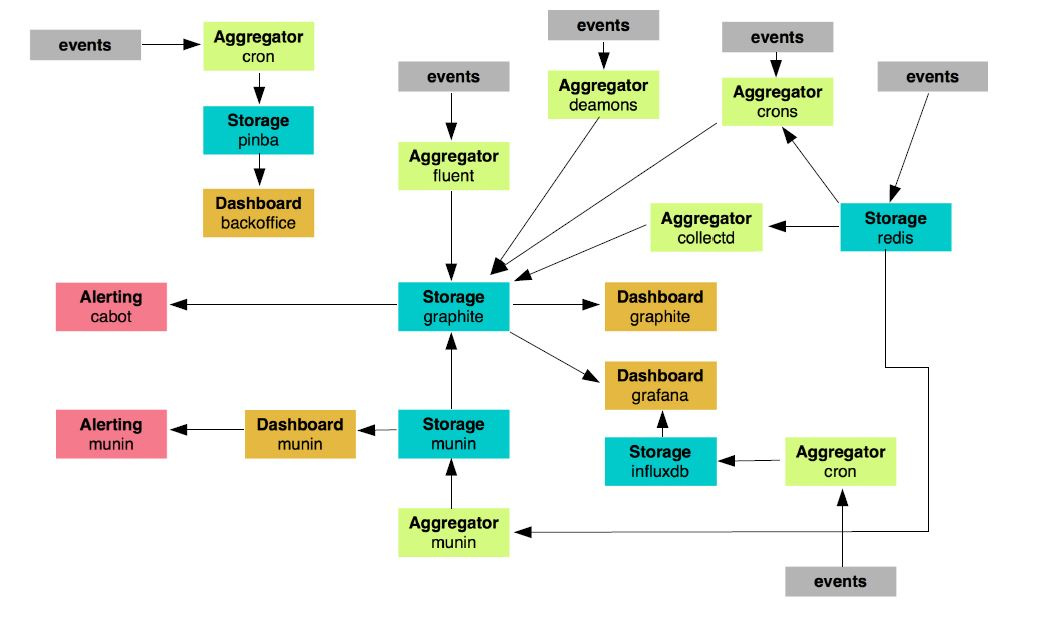

How did we arrive at the existing monitoring system? To answer this question, you need to go to 2015. Here's how it looked then:

We had about 24 nodes that were responsible for monitoring. There is a whole bunch of different crowns, scripts, demons that somehow monitor something, send messages, perform functions. We thought that the further, the less such a system would be viable. It makes no sense to develop it: too cumbersome.

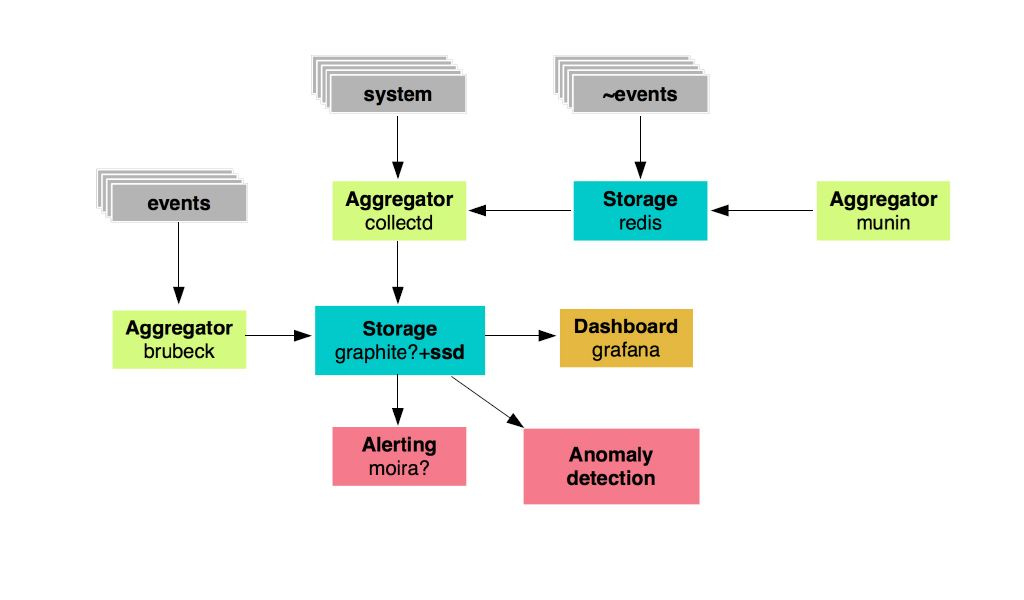

We decided to choose those elements of monitoring that we will leave and will develop, and those that we will refuse. They turned 19. Only graphites, aggregators and Grafana remained as dashboards. But what will the new system look like? Like this:

We have a storage of metrics: these are graphites, which will be based on fast SSD disks, these are certain aggregators for metrics. Next - Grafana for the withdrawal of dashboards and Moira as an alert. We also wanted to develop a system to search for anomalies.

That was what the plans looked like in 2015. But we had to prepare not only the infrastructure and the service itself, but also the documentation for it. We have developed a corporate standard for ourselves, called monitoring 2.0. What were the requirements for the system?

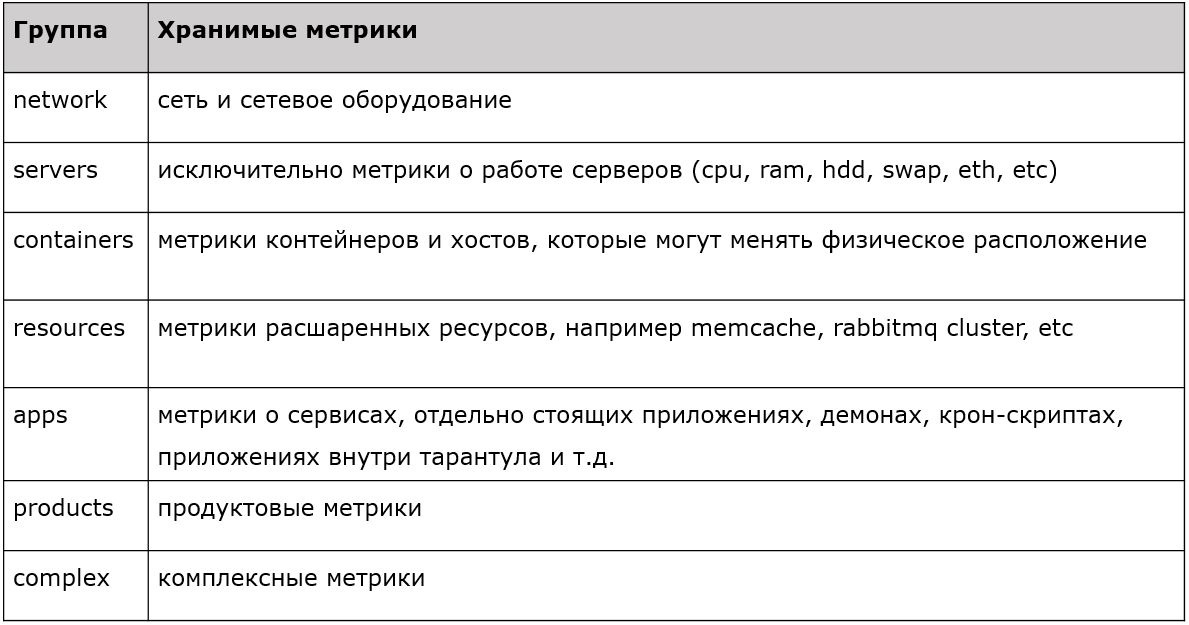

We needed UDP because we have a large flow of traffic and events that generate metrics. If they all write to graphite at once, the storage will fall. We also selected first-level prefixes for all metrics.

Each prefix has a property. There are metrics for servers, networks, containers, resources, applications, and so on. Implemented a clear, strong, typed filtering, where we accept the first level metrics, and the rest just drop. This is how we planned this system in 2015. What about the present?

First of all, we monitor applications: our PHP code, applications, and microservices - in short, everything our developers write. All applications via UDP send metrics to the Brubeck aggregator (statsd, rewritten to C). He was the fastest on the basis of synthetic tests. And it sends already aggregated metrics to Graphite via TCP.

It has a type of metrics like timers. This is a very handy thing. For example, for each user connection to a service, you send a response response time to Brubeck. A million answers came, and the aggregator issued only 10 metrics. You have the number of people who came, the maximum, minimum and average response time, median and 4 percentiles. Then the data is transferred to Graphite and we see them all live.

We also have an aggregation for metrics for hardware, software, system metrics and our old Munin monitoring system (it worked with us until 2015). We collect all this through the C'ish daemon of CollectD (a whole bundle of various plugins is embedded in it, it can interrogate all the resources of the host system on which it is installed, simply indicate in the configuration where to write data) and write data through it into Graphite. It also supports python plugins and shell scripts, so you can write your custom solutions: CollectD will collect this data from a local or remote host (suppose there is a Curl) and send it to Graphite.

Further, all the metrics that we have collected are sent to Carbon-c-relay. This is a Graphite Carbon Relay solution, modified by C. This is a router that collects all the metrics that we send from our aggregators and routes them through the nodes. Also at the routing stage, it checks the validity of the metrics. First, they must conform to the scheme with the prefixes, which I showed earlier and, second, they are valid for graphite. Otherwise, they drop off.

Then Carbon-c-relay sends the metrics to the Graphite cluster. We use Carbon-cache rewritten to Go as the main storage for metrics. Go-carbon because of its multithreading is far superior in performance Carbon-cache. It takes data into itself and writes it to disk using the whisper package (standard, written in python). In order to read data from our repositories, we use the Graphite API. It works much faster than the standard Graphite WEB. What happens to the data next?

They go to Grafana. As a primary data source, we use our graphite clusters, plus we have Grafana as a web interface for displaying metrics, building deshboards. For each of its services, developers get their own deshbord. Next, they build graphs on them, which display the metrics that they write from their applications. In addition to Grafana, we also have SLAM. This is a python-demon that counts SLA based on graphite data. As I said, we have several dozen microservices, each of which has its own requirements. With the help of SLAM, we go to the documentation and compare it with what is in Graphite and compare how requirements correspond to the availability of our services.

Go ahead: alert. It is organized with the help of a strong system - Moira. She is independent because she has her own Graphite under the hood. Developed by the guys from SKB "Kontur", written in python and Go, fully open source. Moira gets all the same flow that goes into graphite. If for some reason your storage dies, your alert will work.

We deployed Moira to Kubernetes, it uses a cluster of Redis servers as its main database. The result was a fault tolerant system. It compares the flow of metrics with the list of triggers: if there are no references in it, it drops the metric. So she is able to digest gigabytes of metrics per minute.

We also attached corporate LDAP to it, with the help of which each user of the corporate system can create notifications for itself on existing (or newly created) triggers. Since Moira contains Graphite, it supports all its functions. Therefore, you first take the line and copy it to Grafana. See how the data is displayed on the graphs. And then take the same line and copy it in Moira. Hang it with limits and get alert at the exit. To do all this, you do not need any specific knowledge. Moira can alert via SMS, email, to Jira, Slack ... She also supports the execution of custom scripts. When a trigger happens to her, and she is subscribed to a custom script or binary, she launches it, and gives it to stdin to this JSON binary. Accordingly, your program should parse it. What do you do with this JSON - decide for yourself. If you want - send to Telegram, if you want - open the task in Jira, do whatever you want.

We also use our own development for the alert - Imagotag. We adapted the panel, which is usually used for electronic price tags in stores, for our tasks. We brought triggers on it from Moira. It indicates what condition they are in when they occurred. Some of the guys from the development refused to notify in Slack and in the mail in favor of this panel here.

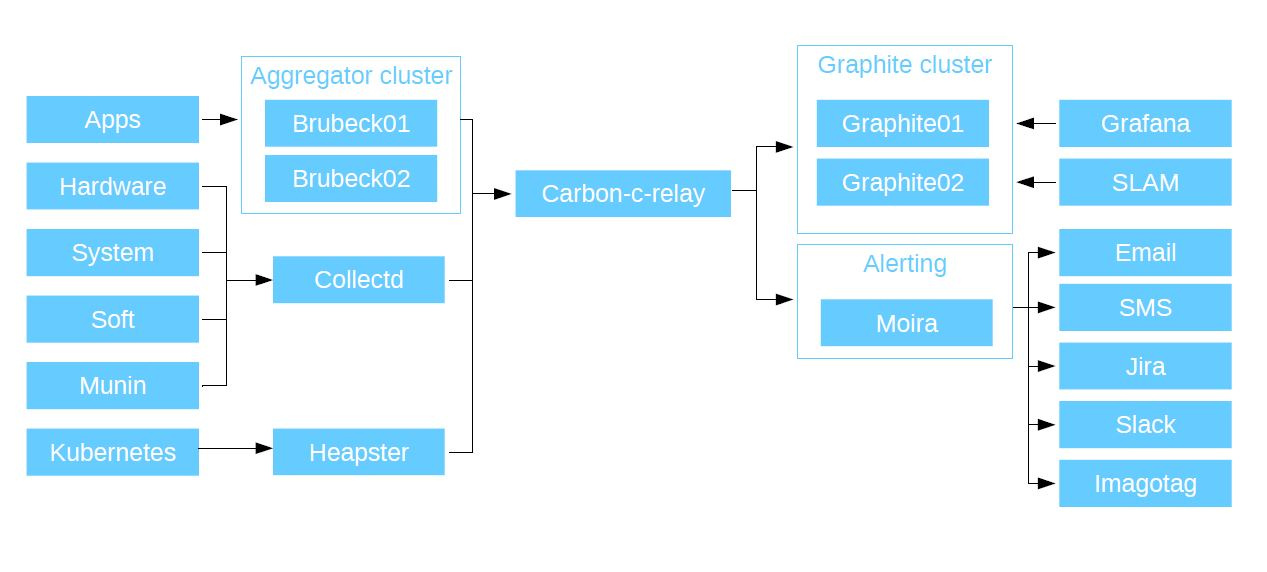

Well, since we are a progressive company, Kubernetes is also monitored in this system. We included it in the system using Heapster, which we installed in the cluster, it collects data and sends it to Graphite. As a result, the scheme looks like this:

Here is a list of links to the components that we used for this task. All of them are open source.

github.com/grobian/carbon-c-relay

github.com/github/brubeck

collectd.org

github.com/moira-alert

grafana.com

github.com/kubernetes/heapster

And here are some numbers on how the system works for us.

Number of metrics: ~ 300,000 / sec

Graphite Send Metrics Interval: 30 sec

Use of server resources: ~ 6% CPU (we are talking about full-fledged servers); ~ 1Gb RAM; ~ 3 Mbps LAN

Number of metrics: ~ 1 600 000 / min

Metric update interval: 30 sec

Metrics storage scheme: 30sec 35d, 5min 90d, 10min 365d (gives an understanding of what is happening with the service at a long time)

Use of server resources: ~ 10% CPU; ~ 20Gb RAM; ~ 30 Mbps LAN

We at Avito really appreciate the flexibility in our monitoring service. Why, he actually turned out so? First, its components are interchangeable: both the components themselves and their versions. Secondly - maintainability. Since the entire project is built on open source, you yourself can edit the code, make changes, and implement functions that are not available from the box. Quite common stacks are used, mainly Go and Python, so this is done quite simply.

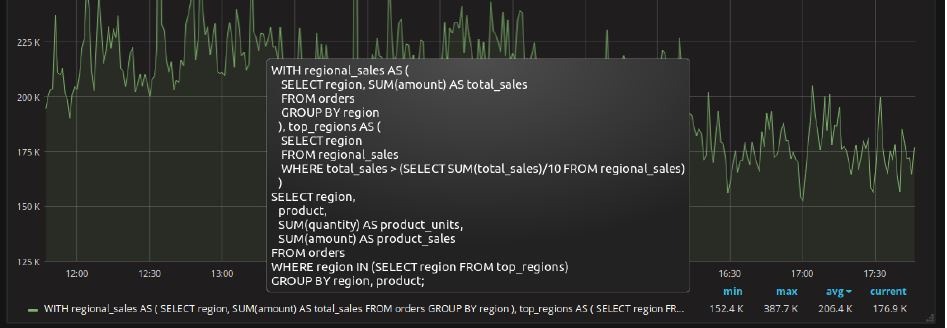

Here is an example of a real problem. Metrics in Graphite is a file. He has a name. File name = metric name. And there is a path to it. Linux file names are limited to 255 characters. And we have (as “internal customers”) guys from the database department. They tell us: “We want to monitor our SQL queries. And they are not 255 characters, but 8 MB each. We want to display them in Grafana, to see the parameters for this query, and even better, we want to see the top of such queries. It will be great if it is displayed in real time. And it would be absolutely cool to shove them into alert ”.

An example of an SQL query is taken as an example from the postgrespro.ru site.

We pick up the Redis server and our Collectd plugins that go to Postgres and take all the data from there, send metrics to Graphite. But we replace metric name with hashes. The same hash is simultaneously sent to Redis as a key, and the entire SQL query as a value. It remains for us to make Grafana able to go to Redis and take this information. We open the Graphite API, because This is the main interface for the interaction of all monitoring components with graphite, and enter a new function there, called aliasByHash () - from Grafana we get the name of the metric, and use it in the request to Redis as a key, in response we get the key value, which is our “SQL query ". Thus, we brought to the Grafana the display of a SQL query, which, in theory, could not be displayed there, along with statistics on it (calls, rows, total_time, ...).

Availability. Our monitoring service is available 24 to 7 from any application and any code. If you have access to the repositories, you can write data to the service. Language is not important, decisions are not important. You only need to know how to open a socket, throw a metric there and close the socket.

Reliability. All components are fault tolerant and handle our loads well.

Low threshold of entry. In order to use this system, you do not need to learn programming languages and requests in Grafana. Simply open your application, enter a socket into it that will send metrics to Graphite, close it, open Grafana, create deshboards there and look at the behavior of your metrics, receiving notifications through Moira.

Independence. All this can be done by yourself, without the help of DevOps engineers. And this is an overflight, because you can monitor your project right now, no one needs to be asked - neither to start work, nor for changes.

Everything listed below is not just abstract thoughts, but something to which at least the first steps have been taken.

Thanks for attention! Ask your questions on the topic, I will try to answer here or in the following posts. Perhaps someone has experience building a similar monitoring system or switching to Clickhouse in a similar situation - share it in the comments.

This post is a transcript of my speech from our section on RIT ++. Many asked us to make text versions of reports from there. If you were at a conference or watched a video, you will not find anything new. And all the rest - welcome under cat. I'll tell you how we came to such a system, how it works and how we plan to update it.

')

The past: charts and plans

How did we arrive at the existing monitoring system? To answer this question, you need to go to 2015. Here's how it looked then:

We had about 24 nodes that were responsible for monitoring. There is a whole bunch of different crowns, scripts, demons that somehow monitor something, send messages, perform functions. We thought that the further, the less such a system would be viable. It makes no sense to develop it: too cumbersome.

We decided to choose those elements of monitoring that we will leave and will develop, and those that we will refuse. They turned 19. Only graphites, aggregators and Grafana remained as dashboards. But what will the new system look like? Like this:

We have a storage of metrics: these are graphites, which will be based on fast SSD disks, these are certain aggregators for metrics. Next - Grafana for the withdrawal of dashboards and Moira as an alert. We also wanted to develop a system to search for anomalies.

Standard: Monitoring 2.0

That was what the plans looked like in 2015. But we had to prepare not only the infrastructure and the service itself, but also the documentation for it. We have developed a corporate standard for ourselves, called monitoring 2.0. What were the requirements for the system?

- continuous availability;

- metric storage interval = 10 seconds;

- structured storage of metrics and dashboards;

- SLA> 99.99%

- collecting event metrics via UDP (!).

We needed UDP because we have a large flow of traffic and events that generate metrics. If they all write to graphite at once, the storage will fall. We also selected first-level prefixes for all metrics.

Each prefix has a property. There are metrics for servers, networks, containers, resources, applications, and so on. Implemented a clear, strong, typed filtering, where we accept the first level metrics, and the rest just drop. This is how we planned this system in 2015. What about the present?

Present: diagram of the interaction of monitoring components

First of all, we monitor applications: our PHP code, applications, and microservices - in short, everything our developers write. All applications via UDP send metrics to the Brubeck aggregator (statsd, rewritten to C). He was the fastest on the basis of synthetic tests. And it sends already aggregated metrics to Graphite via TCP.

It has a type of metrics like timers. This is a very handy thing. For example, for each user connection to a service, you send a response response time to Brubeck. A million answers came, and the aggregator issued only 10 metrics. You have the number of people who came, the maximum, minimum and average response time, median and 4 percentiles. Then the data is transferred to Graphite and we see them all live.

We also have an aggregation for metrics for hardware, software, system metrics and our old Munin monitoring system (it worked with us until 2015). We collect all this through the C'ish daemon of CollectD (a whole bundle of various plugins is embedded in it, it can interrogate all the resources of the host system on which it is installed, simply indicate in the configuration where to write data) and write data through it into Graphite. It also supports python plugins and shell scripts, so you can write your custom solutions: CollectD will collect this data from a local or remote host (suppose there is a Curl) and send it to Graphite.

Further, all the metrics that we have collected are sent to Carbon-c-relay. This is a Graphite Carbon Relay solution, modified by C. This is a router that collects all the metrics that we send from our aggregators and routes them through the nodes. Also at the routing stage, it checks the validity of the metrics. First, they must conform to the scheme with the prefixes, which I showed earlier and, second, they are valid for graphite. Otherwise, they drop off.

Then Carbon-c-relay sends the metrics to the Graphite cluster. We use Carbon-cache rewritten to Go as the main storage for metrics. Go-carbon because of its multithreading is far superior in performance Carbon-cache. It takes data into itself and writes it to disk using the whisper package (standard, written in python). In order to read data from our repositories, we use the Graphite API. It works much faster than the standard Graphite WEB. What happens to the data next?

They go to Grafana. As a primary data source, we use our graphite clusters, plus we have Grafana as a web interface for displaying metrics, building deshboards. For each of its services, developers get their own deshbord. Next, they build graphs on them, which display the metrics that they write from their applications. In addition to Grafana, we also have SLAM. This is a python-demon that counts SLA based on graphite data. As I said, we have several dozen microservices, each of which has its own requirements. With the help of SLAM, we go to the documentation and compare it with what is in Graphite and compare how requirements correspond to the availability of our services.

Go ahead: alert. It is organized with the help of a strong system - Moira. She is independent because she has her own Graphite under the hood. Developed by the guys from SKB "Kontur", written in python and Go, fully open source. Moira gets all the same flow that goes into graphite. If for some reason your storage dies, your alert will work.

We deployed Moira to Kubernetes, it uses a cluster of Redis servers as its main database. The result was a fault tolerant system. It compares the flow of metrics with the list of triggers: if there are no references in it, it drops the metric. So she is able to digest gigabytes of metrics per minute.

We also attached corporate LDAP to it, with the help of which each user of the corporate system can create notifications for itself on existing (or newly created) triggers. Since Moira contains Graphite, it supports all its functions. Therefore, you first take the line and copy it to Grafana. See how the data is displayed on the graphs. And then take the same line and copy it in Moira. Hang it with limits and get alert at the exit. To do all this, you do not need any specific knowledge. Moira can alert via SMS, email, to Jira, Slack ... She also supports the execution of custom scripts. When a trigger happens to her, and she is subscribed to a custom script or binary, she launches it, and gives it to stdin to this JSON binary. Accordingly, your program should parse it. What do you do with this JSON - decide for yourself. If you want - send to Telegram, if you want - open the task in Jira, do whatever you want.

We also use our own development for the alert - Imagotag. We adapted the panel, which is usually used for electronic price tags in stores, for our tasks. We brought triggers on it from Moira. It indicates what condition they are in when they occurred. Some of the guys from the development refused to notify in Slack and in the mail in favor of this panel here.

Well, since we are a progressive company, Kubernetes is also monitored in this system. We included it in the system using Heapster, which we installed in the cluster, it collects data and sends it to Graphite. As a result, the scheme looks like this:

Monitoring components

Here is a list of links to the components that we used for this task. All of them are open source.

Graphite:

- go-carbon: github.com/lomik/go-carbon

- whisper: github.com/graphite-project/whisper

- graphite-api: github.com/brutasse/graphite-api

Carbon-c-relay:

github.com/grobian/carbon-c-relay

Brubeck:

github.com/github/brubeck

Collectd:

collectd.org

Moira:

github.com/moira-alert

Grafana:

grafana.com

Heapster:

github.com/kubernetes/heapster

Statistics

And here are some numbers on how the system works for us.

Aggregator (brubeck)

Number of metrics: ~ 300,000 / sec

Graphite Send Metrics Interval: 30 sec

Use of server resources: ~ 6% CPU (we are talking about full-fledged servers); ~ 1Gb RAM; ~ 3 Mbps LAN

Graphite (go-carbon)

Number of metrics: ~ 1 600 000 / min

Metric update interval: 30 sec

Metrics storage scheme: 30sec 35d, 5min 90d, 10min 365d (gives an understanding of what is happening with the service at a long time)

Use of server resources: ~ 10% CPU; ~ 20Gb RAM; ~ 30 Mbps LAN

Flexibility

We at Avito really appreciate the flexibility in our monitoring service. Why, he actually turned out so? First, its components are interchangeable: both the components themselves and their versions. Secondly - maintainability. Since the entire project is built on open source, you yourself can edit the code, make changes, and implement functions that are not available from the box. Quite common stacks are used, mainly Go and Python, so this is done quite simply.

Here is an example of a real problem. Metrics in Graphite is a file. He has a name. File name = metric name. And there is a path to it. Linux file names are limited to 255 characters. And we have (as “internal customers”) guys from the database department. They tell us: “We want to monitor our SQL queries. And they are not 255 characters, but 8 MB each. We want to display them in Grafana, to see the parameters for this query, and even better, we want to see the top of such queries. It will be great if it is displayed in real time. And it would be absolutely cool to shove them into alert ”.

An example of an SQL query is taken as an example from the postgrespro.ru site.

We pick up the Redis server and our Collectd plugins that go to Postgres and take all the data from there, send metrics to Graphite. But we replace metric name with hashes. The same hash is simultaneously sent to Redis as a key, and the entire SQL query as a value. It remains for us to make Grafana able to go to Redis and take this information. We open the Graphite API, because This is the main interface for the interaction of all monitoring components with graphite, and enter a new function there, called aliasByHash () - from Grafana we get the name of the metric, and use it in the request to Redis as a key, in response we get the key value, which is our “SQL query ". Thus, we brought to the Grafana the display of a SQL query, which, in theory, could not be displayed there, along with statistics on it (calls, rows, total_time, ...).

Results

Availability. Our monitoring service is available 24 to 7 from any application and any code. If you have access to the repositories, you can write data to the service. Language is not important, decisions are not important. You only need to know how to open a socket, throw a metric there and close the socket.

Reliability. All components are fault tolerant and handle our loads well.

Low threshold of entry. In order to use this system, you do not need to learn programming languages and requests in Grafana. Simply open your application, enter a socket into it that will send metrics to Graphite, close it, open Grafana, create deshboards there and look at the behavior of your metrics, receiving notifications through Moira.

Independence. All this can be done by yourself, without the help of DevOps engineers. And this is an overflight, because you can monitor your project right now, no one needs to be asked - neither to start work, nor for changes.

What are we aiming for?

Everything listed below is not just abstract thoughts, but something to which at least the first steps have been taken.

- Anomaly detector. We want to sip a service that will go to our Graphite-storages and check every metric using various algorithms. Already there are algorithms that we want to view, there is data, we know how to work with them.

- Metadata. We have many services, over time they change, as well as the people who work with them. Constantly keeping documentation manually is not an option. Therefore, now metadata is embedded in our microservices. It says who developed it, the languages with which it interacts, SLA requirements, where and to whom to send notifications. When a service is deployed, all entity data is created independently. As a result, you get two links - one to the triggers, the other to the dashboards in Grafana.

- Monitoring in every home. We believe that such a system should be used by all developers. In this case, you always understand where your traffic is, what happens to it, where it falls, where it has weak points. If, say, something comes in and overwhelms your service, then you will not find out about it during the call from the manager, but from the alert, and you can immediately open fresh logs and see what happened there.

- High performance. Our project is constantly growing, and today it processes about 2,000,000 metric values per minute. A year ago, this figure was 500,000. And the growth continues, and this means that over time, Graphite (whisper) will begin to greatly load the disk subsystem. As I said, this monitoring system is quite versatile due to the interchangeability of components. Someone specifically under Graphite maintains and constantly expands its infrastructure, but we decided to go another way: to use ClickHouse as a repository of our metrics. This transition is almost complete, and very soon I will tell you in more detail how it was done: what the difficulties were and how they were overcome, how the migration process went, I will describe the components selected for binding and their configuration.

Thanks for attention! Ask your questions on the topic, I will try to answer here or in the following posts. Perhaps someone has experience building a similar monitoring system or switching to Clickhouse in a similar situation - share it in the comments.

Source: https://habr.com/ru/post/335410/

All Articles