Colibri-ui - our solution for automating mobile application testing

As teams grow, the number of features inevitably grows too, and with it the test model and the number of test cases that have to be checked during regression testing. Teams do not grow without reason: in our case, the business wants to release more and more often without losing quality.

Today, using Alfa Mobile as an example, we will describe how we at Alfa Labs found a balance between speed, budget and quality. Spoiler alert: our solution is available on GitHub as the colibri-ui library and the colibri-ui-template template for a quick start.

Pavel (pvivanov) and Lilia Lidiyatullina took an active part in writing this article.

')

Back in 2013, we didn’t even have thoughts about testing automation, because the regression testing process took one day for one of the testers for both OSes (iOS / Android).

However, with the active growth of the application, the addition of new services and services to it, the cost of manual testing also began to grow at a high rate.

Add to this the increase in the number of teams (from one to six) that constantly produce new features - and regression testing will become a thrombus of your processes, which runs the risk of breaking off and jeopardizing production.

At some point, we came to the conclusion that at the time of preparing the application for release, all six teams were “idle”, until all six testers were releasing the release candidate. In time equivalent, the duration of regression testing increased to 8 working days (six people!).

This situation does not add motivation to the testers themselves. At a certain point, we even had a joke “Regress like a holiday!”

The problem needed to be solved somehow, and we had two ways to “attach bablishko”:

For ethical and economic reasons, we chose the second option, after all, the cost of automation, whatever one may say, is a much more profitable investment.

We decided to start the movement in the chosen direction with a pilot project for automating the testing of mobile applications. Based on its results, we have formed requirements for the future instrument:

Based on the received requirements, an automation tool was to be selected.

In the framework of the pilot project, we considered:

The requirement for cross-platform solutions narrowed down the choice to the last pair from the list. The final choice was made in favor of Appium due to a more active community involved in development and support.

Automating any process at the bottom level is scripts and code. However, not everyone can understand the development tools or even write something on their own. That is why we decided to maximally simplify this moment using the BDD-methodology on projects.

Our framework is divided into several levels of abstraction, where the upper level is written in the popular test writing language Gherkin, and the lower level is written by developers in the Java programming language. JBehave was chosen for writing scripts.

So, what does our solution look like from the user?

This example describes the transition process from the main screen to the mobile payment screen. Maybe someone will argue that from the point of view of the business process, it does not matter to him which way he gets to the desired screen, and he will be right. Indeed, nothing prevents us from going to the desired section, replacing the last five lines of the previous scenario with one, for example, like this:

However, such steps will be less atomic and it will be more difficult to reuse them on two platforms at once, iOS and Android. After all, when we want to lower the threshold for entering the development, we need to reuse the current steps as much as possible, otherwise the tester will always need new ones and, as a result, their implementation. And here, as we remember, the tester does not always have the necessary development skills.

If everything is extremely clear with the script, he reads "from the sheet" and describes our actions, then how can we specify the locators, while trying not to use them explicitly?

One way to accomplish the task of reducing the entry threshold is to simplify writing complex locators and hide everything in the project more deeply. Thus, two factories were born that allow us to create a locator by description and use it for searching. Unfortunately, it is not always possible to do without writing locators, in rare cases it is necessary to write it. For such situations, we left the possibility to find an element by XPath.

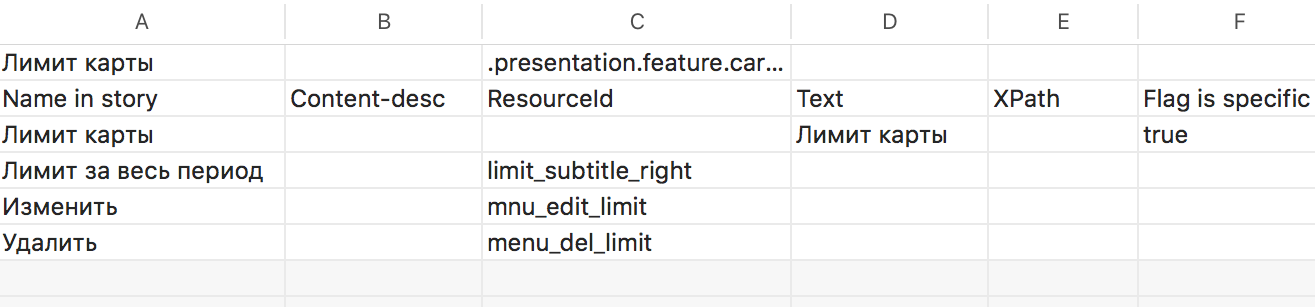

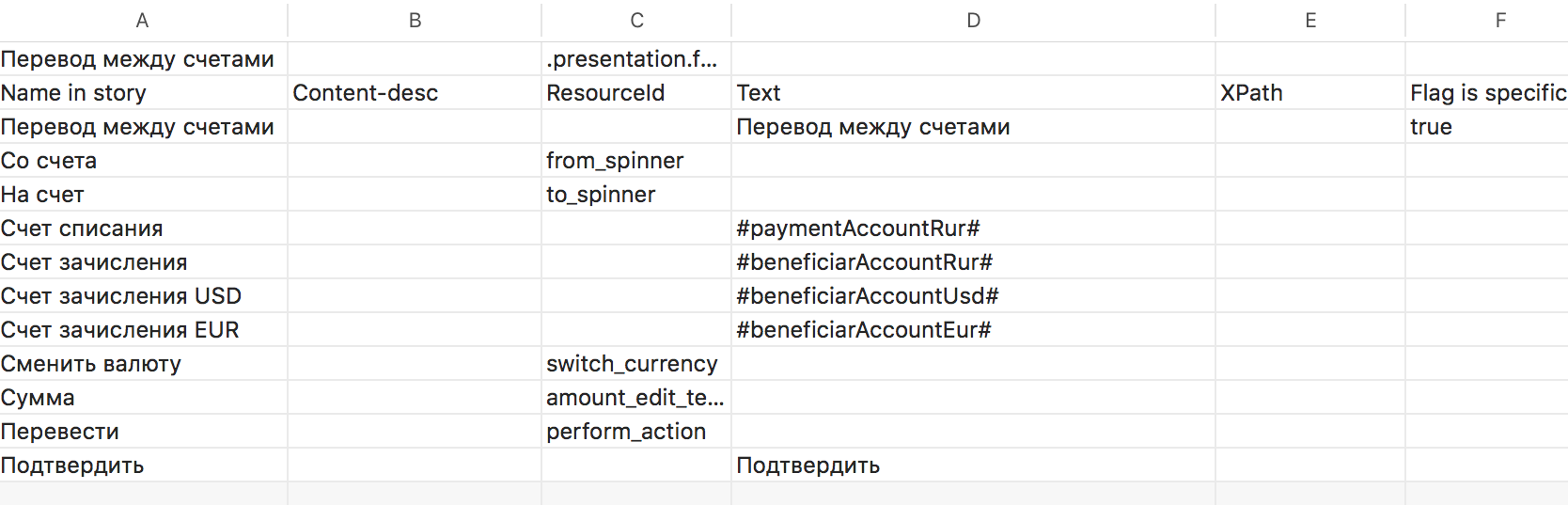

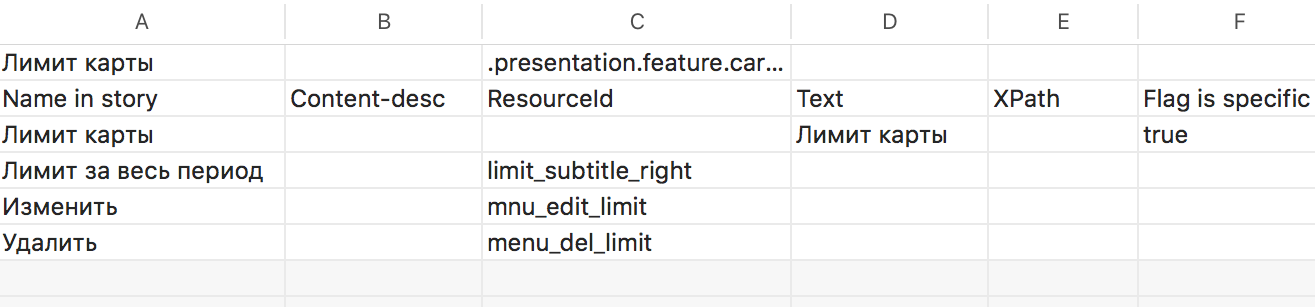

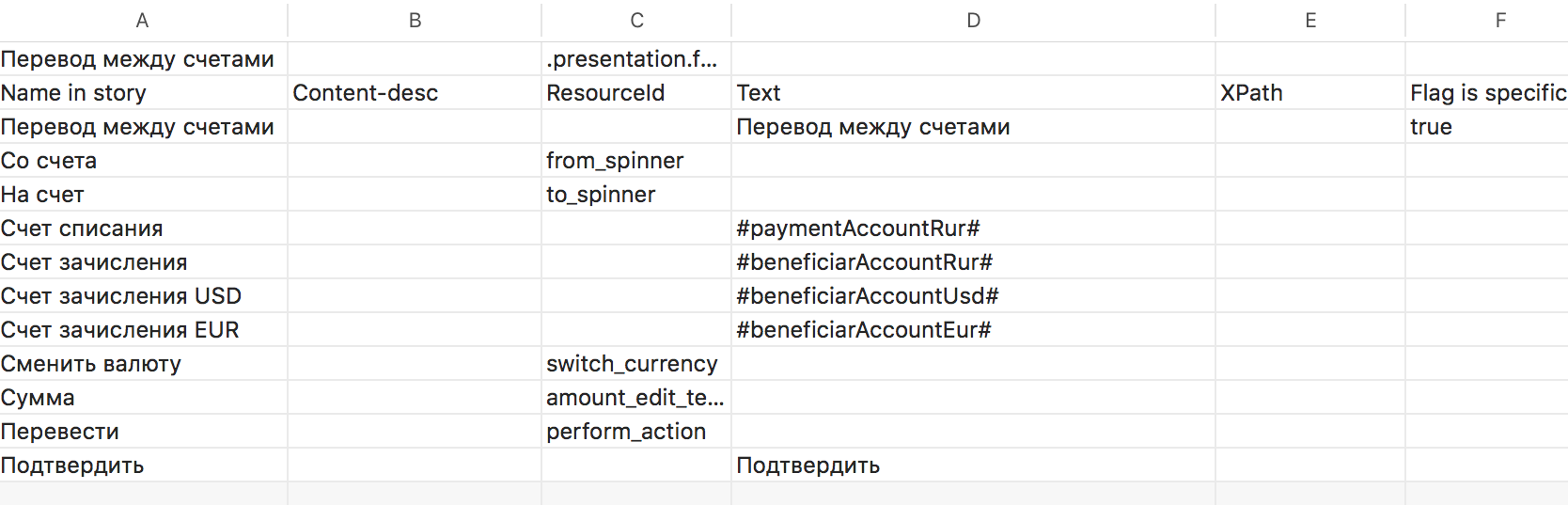

The description of the elements on the screen can consist of four components. All four components are never used, but two may well be used, for example, in the case when you first need to scroll to an element, and then click on it.

We will use the name of the element (Name in story) in the script, we will use it to pull out Content description / ResourceId / AccessabilityIdeitificator / Text / XPath.

All the descriptions we collect in .csv files, where you can conveniently edit them in a table form. On the one hand, this is the usual PageObject, and on the other hand, our testers do not have to edit the locators in the code.

It seems that much easier! We described the screen, wrote the script, launched autotests, but let's talk a bit about non-trivial tasks and look at our colibri-ui framework from the inside.

When working with Appium, it is always necessary to indicate which mobile device we want to work with. In our solution, you need to transfer to the project the udid device (unique identifier) and its name. The name will also be mentioned in the logs if something goes wrong. Note that with the help of udid, our project will work with the desired mobile device, because we have a whole farm and all of them are connected to one Mac.

Currently, descriptions of mobile devices are stored as a set of folders, each of which contains settings files such as .property and json-object. The file type .property contains the udid and device name, the json object describes the node settings for operation in cluster mode (see the colibri-ui-template template).

A small offtop, or how to get udid connected devices!

For Android, we run “adb devices” in the console, for iOS, “instruments -s devices | grep -v (Simulator | $ (id -un))” and get a list of connected devices. In the case of Android, the list will contain both real devices and emulators, while for iOS we only filter real devices. If someone needs to get only emulators, another filtering “instruments -s devices | grep simulator.

We plan to transfer this set of folders to the database or to another storage, or to receive the list dynamically, executing the above commands in the process of building the environment. We currently have no need for such an improvement.

Additionally, we note that for the above commands to work, ADB Driver and Xcode must be installed on your Mac, respectively. When working with emulators, also do not forget to deflate their images.

To date, we have taken into account all that is necessary to run the project in the simplest mode. We have scripts, screen descriptions and device descriptions. However, in applications that we test, this is not enough. In our applications, you need to log in to a test stand by some user and use its credentials, such as: phone number, login, password, account numbers, etc.

Files with user credentials are also in the project as a separate folder. In the future, as well as with devices, transfer them to the database or centralized storage.

In scripts and descriptions of pages, we use markers of the form # userName #, by which we get the property value from the user file and replace these markers during the run.

Thus, we can run the same set of scenarios on different users, including at the same time.

This is how it looks in the page description:

This is how it looks in the user.property file:

Be sure to specify the keys and values.

We started the development by describing fairly small steps, such as typing text or clicking on something. Over time, we realized that we could not write scripts in small steps, or write complex steps, for example, to return to the main screen, without duplicating the code. Thus began the search for a solution to reuse small steps into larger ones.

The first attempt was to add guiCe to the project, for organizing DI, but its implementation brought with it the processing of almost the entire project core. And since the dependencies, right in the appium-java-client, already have Spring, for us the solution became obvious and our next step was to introduce Spring.

When introducing Sping into our project, the amount of change was minimal. At the very depth of the project, only the JBeHave steps factory and a couple of lines in the Allure report connection have changed. Almost all classes were declared components and removed most of the dependencies.

The effect of the implementation was not only the steps of the designers, in which we can reuse smaller actions, but also the ability to make general decisions to the library (the link to the githab was at the very beginning). For us, this is relevant, because we use this solution on several mobile projects.

Well, the most unusual effect is that we can write tests for tests. No matter how ridiculous and clumsy it may sound, but much also depends on the quality of the instrument, so it must also be maintained and developed. At the moment, work on the coating is still underway. With the introduction of changes to the core of the project, we will expand the test coverage inside the library.

As already mentioned, we run our project on a specific set of devices in parallel.

An example of running a project from the console:

From the example, it is clear that tests are run for Android (- tests "* AndroidStories *"). Also as parameters are passed:

The device on which the test run will run, Nexus6p_android6. Do not forget to describe the device in the project, we wrote about it above. This is how it is done with us.

The device.properties file contains:

The test_node.json file contains data for running the node.

Test user 6056789, whose data we will use. There is a whole set of test users on the project that we use to run our tests. The user must be described in user.properties.

Type of testing smokeNewReg, in our framework the logic of choosing test scripts for Meta tags is implemented. In each script in the Meta block there is a set of tags.

The testCycle.properties file contains the keys and values for the labels.

Thus, due to the presence of the Meta Matcher in JBehave, we can form a set of test scenarios for a specific testing cycle.

The build number that we download from the centralized repository, and the branch from which the build will be downloaded. In our case, the environmentAndroid.properties file contains a link with wildcard characters, which is formed based on the parameters, which in turn are passed to the input from the console.

Now, knowing how to start a project from the console, you can easily integrate the project into Jenkins. There is such an integration on our projects, and it is enough for the tester to simply form a job in Jenkins to run autotests.

Now also formed a farm with mobile devices. This is a Mac Pro with about ten mobile devices connected to it.

In our project, a report is generated using the allure report. Therefore, after the tests have completed, it is enough to run “allure generate directory-with-results”

In the report we can see the statuses for each scenario. If you start to open scripts in a report, then you can find the steps that you took the test, almost before calling each method. If something collapsed, in a step with an error inside there will be a screenshot of the screen.

Previously, the screenshots were in each step, but we thought it was meaningless, and the screen is only on collapsed scenarios. In addition, as the number of automated scripts grows, the report begins to take up more and more space.

Let's summarize the tasks we set for ourselves.

As a bonus:

The results met our expectations and confirmed the correctness of the decision to go into automation.

We continue to develop our solution, which is available on github: the colibri-ui library and the colibri-ui-template template for a quick start. Further only more!

If you want to become one of the Alfa Lab testers (or not just a tester) - we have open vacancies .

How we solved the problem of finding a balance between speed, budget and quality at Alfa Labs will be discussed today with the example of Alfa Mobile. Looking ahead, ATTENTION, SPOILER !!! Our solution is available on github: the colibri-ui library and the colibri-ui-template template for a quick start.

Pavel pvivanov and Lilia Lidiyatullina took an active part in writing the article .

')

What happened?

Back in 2013, we did not even think about test automation, because regression testing took one tester one day for both OSes (iOS / Android).

However, as the application grew and new services were added to it, the cost of manual testing also began to grow rapidly.

Add to this the growth in the number of teams (from one to six), each constantly shipping new features, and regression testing becomes a blood clot in your processes: one that risks breaking loose and putting production in danger.

At some point we realized that whenever the application was being prepared for release, all six teams sat “idle” until all six testers had finished testing the release candidate. In time terms, regression testing had grown to 8 working days (with six people!).

This situation did nothing for the testers' motivation. At one point we even had a joke: “Regression is like a holiday!”

What to do?

The problem had to be solved somehow, and we had two ways to “throw money at it”:

- bring new people into the ranks of testers;

- automate testing to eliminate manual testing.

For ethical and economic reasons we chose the second option: whatever one may say, automation is a far more profitable investment.

We started moving in the chosen direction with a pilot project to automate the testing of mobile applications. Based on its results, we formulated requirements for the future tool:

- The test automation tool should have the lowest possible entry threshold. This means minimizing coding, getting rid of writing complex locators, etc., because the main users of the tool are testers from product teams who may have no automation experience;

- Test scenarios should be clear to users who are not developers;

- The solution should be cross-platform and work with both platforms, Android and iOS, at the same time;

- A farm with a connected set of mobile devices must be built;

- The solution should scale to the bank's other mobile applications.

Based on these requirements, we had to choose an automation tool.

As part of the pilot project, we considered:

- Robotium

- Espresso

- Ui recorder

- Keep it Functional

- Calabash

- Appium

The cross-platform requirement narrowed the choice down to the last two tools on the list. In the end we chose Appium because of its more active development and support community.

Lowering the entry threshold

At the lowest level, automating any process comes down to scripts and code. However, not everyone understands development tools or can write code on their own. That is why we decided to simplify this as much as possible by using the BDD methodology on our projects.

Our framework is divided into several levels of abstraction: the upper level is written in Gherkin, a popular language for writing tests, and the lower level is written by developers in Java. We chose JBehave for writing scenarios.

So what does our solution look like from the user's side?

This example describes the transition from the main screen to the mobile payment screen. Someone may argue that, from the business-process point of view, it does not matter which way you get to the desired screen, and they would be right. Indeed, nothing prevents us from replacing the last five lines of the previous scenario with a single step that goes straight to the desired section.

However, such steps are less atomic and harder to reuse on both platforms, iOS and Android, at once. When we want to lower the entry threshold, we have to reuse existing steps as much as possible; otherwise testers will constantly need new steps and, consequently, someone to implement them. And, as we remember, testers do not always have the necessary development skills.
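To make the split between the Gherkin layer and the Java layer more concrete, here is a minimal, dependency-free sketch of how a scenario line could be dispatched to an atomic Java step. It is not JBehave (which binds steps with annotations such as `@When`); the `StepRegistry` and `StepDemo` classes and the step wording are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, simplified stand-in for a BDD step registry (not the JBehave API).
final class StepRegistry {
    private final Map<Pattern, Consumer<String>> steps = new LinkedHashMap<>();

    // Registers an atomic step; the single capture group is the element or screen name.
    void register(String regex, Consumer<String> action) {
        steps.put(Pattern.compile(regex), action);
    }

    // Runs the first step whose pattern matches the whole story line.
    void run(String storyLine) {
        for (Map.Entry<Pattern, Consumer<String>> entry : steps.entrySet()) {
            Matcher m = entry.getKey().matcher(storyLine.trim());
            if (m.matches()) {
                entry.getValue().accept(m.group(1));
                return;
            }
        }
        throw new IllegalArgumentException("No step matches: " + storyLine);
    }
}

final class StepDemo {
    // Records what the atomic steps "did" instead of driving a real device.
    static List<String> runScenario(List<String> lines) {
        List<String> log = new ArrayList<>();
        StepRegistry registry = new StepRegistry();
        registry.register("When user taps \"(.+)\"", name -> log.add("tap:" + name));
        registry.register("Then screen \"(.+)\" is shown", name -> log.add("check:" + name));
        lines.forEach(registry::run);
        return log;
    }
}
```

Because each step does exactly one thing (tap an element, check a screen), the same two steps can be recombined into many scenarios on either platform, which is the reuse argument made above.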

Describing the screens

If the scenario itself is perfectly clear (it reads “off the page” and describes our actions), how do we specify locators while trying not to use them explicitly?

One way to accomplish the task of reducing the entry threshold is to simplify writing complex locators and hide everything in the project more deeply. Thus, two factories were born that allow us to create a locator by description and use it for searching. Unfortunately, it is not always possible to do without writing locators, in rare cases it is necessary to write it. For such situations, we left the possibility to find an element by XPath.

The description of an element on a screen can consist of four components. All four are never used together, but two may well be, for example when you first need to scroll to an element and then tap it.

A page is described as a set of elements, and each element description has the following components:

- Content description: an identifier by which elements can be found on Android;

- ResourceId / AccessibilityIdentifier: a unique identifier. Application developers sometimes do not set identifiers, but this is the most desirable attribute we can find in the markup of an Android / iOS application, respectively;

- Text: the visible text, for example on a button that we can tap;

- XPath: a regular XPath over the XML markup. It is used when none of the previous three methods can describe the element unambiguously.
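One way the four components could be collapsed into a single locator is a fixed priority order. In this sketch, `ElementDescription`, `LocatorChooser`, `Platform` and the order itself (the unique id first, since it is the most desirable, then content description on Android only, then text, with XPath as the last resort) are assumptions; the returned string merely stands in for a real Appium locator.

```java
// Hypothetical element description mirroring the four locator components listed above.
final class ElementDescription {
    final String name;               // "Name in story"
    final String contentDescription; // Android only
    final String id;                 // ResourceId (Android) or accessibility identifier (iOS)
    final String text;               // visible text
    final String xpath;              // last resort

    ElementDescription(String name, String contentDescription, String id, String text, String xpath) {
        this.name = name;
        this.contentDescription = contentDescription;
        this.id = id;
        this.text = text;
        this.xpath = xpath;
    }
}

enum Platform { ANDROID, IOS }

final class LocatorChooser {
    // Assumed priority: id, then content description (Android only), then text, then XPath.
    static String choose(ElementDescription e, Platform platform) {
        if (present(e.id)) return "id:" + e.id;
        if (platform == Platform.ANDROID && present(e.contentDescription)) {
            return "contentDescription:" + e.contentDescription;
        }
        if (present(e.text)) return "text:" + e.text;
        if (present(e.xpath)) return "xpath:" + e.xpath;
        throw new IllegalStateException("No locator described for element: " + e.name);
    }

    private static boolean present(String value) {
        return value != null && !value.isEmpty();
    }
}
```

The same description can therefore yield different locators per platform, which is what lets one scenario line work on both Android and iOS.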

In the scenario we refer to the element by its name (Name in story), and by that name we look up its Content description / ResourceId / AccessibilityIdentifier / Text / XPath.

We keep all descriptions in .csv files, where they are convenient to edit as a table. On the one hand, this is the usual PageObject; on the other, our testers do not have to edit locators in code.
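Such .csv files could be read along these lines. The column layout (`name;contentDescription;id;text;xpath`), the semicolon separator and the `PageDescription` class are assumptions for illustration; the actual colibri-ui format may differ. A semicolon is used here because XPath expressions often contain commas, and this naive split does not handle quoted cells.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical page-description loader: the first row is the header, then one row per element.
final class PageDescription {
    // Returns element name -> (column name -> non-empty value).
    static Map<String, Map<String, String>> parse(String csv) {
        String[] lines = csv.strip().split("\\R");
        String[] header = lines[0].split(";", -1);
        Map<String, Map<String, String>> elements = new LinkedHashMap<>();
        for (int i = 1; i < lines.length; i++) {
            if (lines[i].isBlank()) continue;
            String[] cells = lines[i].split(";", -1); // -1 keeps trailing empty cells
            Map<String, String> locators = new HashMap<>();
            for (int c = 1; c < header.length && c < cells.length; c++) {
                if (!cells[c].isBlank()) locators.put(header[c].trim(), cells[c].trim());
            }
            elements.put(cells[0].trim(), locators);
        }
        return elements;
    }
}
```

Testers edit only the table; the lookup by element name stays in code.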

It all seems much simpler now! We described the screen, wrote the scenario and launched the autotests. But let's talk a bit about non-trivial tasks and look at our colibri-ui framework from the inside.

Setting up the environment

When working with Appium, you always have to specify which mobile device you want to work with. In our solution, you pass the project the device's udid (unique identifier) and its name. The name also appears in the logs if something goes wrong. The udid is what lets the project work with the right device, since we have a whole farm of devices, all connected to one Mac.

Currently, mobile device descriptions are stored as a set of folders, each containing settings files: a .properties file and a JSON object. The .properties file contains the device's udid and name; the JSON object describes the node settings for running in cluster mode (see the colibri-ui-template template).
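A device folder's .properties file could be loaded with the standard `java.util.Properties` class. This sketch assumes the `UDID` and `deviceName` keys shown in the device.properties example later in the article; `DeviceConfig` is a hypothetical name, and the returned map only mirrors the Appium capability names `udid` and `deviceName` rather than building a real Appium capabilities object.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.util.Map;
import java.util.Properties;

// Sketch: read one device folder's device.properties and turn it into Appium-style capabilities.
final class DeviceConfig {
    static Map<String, String> capabilities(Reader devicePropertiesFile) {
        Properties props = new Properties();
        try {
            props.load(devicePropertiesFile);
        } catch (IOException e) {
            throw new UncheckedIOException("Cannot read device.properties", e);
        }
        String udid = props.getProperty("UDID");
        String deviceName = props.getProperty("deviceName");
        if (udid == null || deviceName == null) {
            throw new IllegalArgumentException("device.properties must define UDID and deviceName");
        }
        // "udid" picks the physical device on the shared Mac; "deviceName" is the human-readable name.
        return Map.of("udid", udid, "deviceName", deviceName);
    }
}
```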

A small digression: how to get the udids of connected devices

For Android, we run `adb devices` in the console; for iOS, `instruments -s devices | grep -v -E "Simulator|$(id -un)"`. Each command returns a list of connected devices. For Android, the list contains both real devices and emulators, while for iOS the filter keeps only real devices. If you need only simulators, use a different filter: `instruments -s devices | grep Simulator`.
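The Android half of this can also be done from Java, which would be useful if the device list were ever built programmatically. A minimal sketch, assuming `adb` is on the `PATH` and that its output uses the usual `<serial><TAB><state>` lines; the `AdbDevices` class is hypothetical, and only devices in the `device` state (not `offline` or `unauthorized`) are returned.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

final class AdbDevices {
    // Runs `adb devices` and returns the serials (udids) of devices that are ready for use.
    static List<String> connectedUdids() throws IOException, InterruptedException {
        Process process = new ProcessBuilder("adb", "devices").redirectErrorStream(true).start();
        String output = new String(process.getInputStream().readAllBytes(), StandardCharsets.UTF_8);
        process.waitFor();
        return parse(output);
    }

    // Keeps lines of the form "<serial>\tdevice"; the header line, blank lines,
    // daemon start-up messages and offline/unauthorized devices are skipped.
    static List<String> parse(String output) {
        List<String> udids = new ArrayList<>();
        for (String line : output.split("\\R")) {
            String[] parts = line.trim().split("\\s+");
            if (parts.length == 2 && parts[1].equals("device")) {
                udids.add(parts[0]);
            }
        }
        return udids;
    }
}
```

As with the console command, emulators (serials like `emulator-5554`) are included alongside real devices.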

We plan to move this set of folders to a database or another storage, or to obtain the list dynamically by running the commands above while the environment is being built. For now we have no need for such an improvement.

Note also that these commands require adb (Android Debug Bridge) and Xcode, respectively, to be installed on your Mac. When working with emulators, do not forget to download their images.

Describing the user

At this point we have everything needed to run the project in its simplest mode: scenarios, screen descriptions and device descriptions. However, for the applications we test this is not enough. In our applications you need to log in to a test environment as some user and use that user's credentials: phone number, login, password, account numbers, etc.

Files with user credentials also live in the project, in a separate folder. In the future, as with devices, we plan to move them to a database or centralized storage.

In scenarios and page descriptions we use markers of the form #userName#: during the run, each marker is replaced with the corresponding property value from the user file.

This way we can run the same set of scenarios as different users, including simultaneously.

This is how it looks in the page description:

This is how it looks in the user.properties file:

    paymentAccountRur=··0278
    beneficiarAccountRur=··0163
    beneficiarAccountUsd=··0889
    beneficiarAccountEur=··0038

Be sure to specify both the keys and the values.
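Marker substitution of this kind can be sketched with a regular expression. `UserMarkers` is a hypothetical name, markers are assumed to look like `#key#` with a word-character key, and a missing key is treated as an error; colibri-ui's actual resolver may behave differently.

```java
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical marker resolver: replaces #key# markers with values from a user's properties file.
final class UserMarkers {
    private static final Pattern MARKER = Pattern.compile("#(\\w+)#");

    static String resolve(String text, Properties user) {
        return MARKER.matcher(text).replaceAll(match -> {
            String key = match.group(1);
            String value = user.getProperty(key);
            if (value == null) {
                // Fail fast: a missing credential is a configuration error, not a test failure.
                throw new IllegalArgumentException("No value for marker #" + key + "# in the user file");
            }
            // quoteReplacement stops '$' or '\' in values from being read as group references.
            return Matcher.quoteReplacement(value);
        });
    }
}
```

Swapping the `Properties` object is all it takes to run the same scenario text as another user.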

Building uber-steps, and some side effects

We started development by describing fairly small steps, such as typing text or tapping something. Over time we realized that, without duplicating code, we could neither write scenarios out of small steps alone nor write complex steps, for example returning to the main screen. So we started looking for a way to reuse small steps inside larger ones.

Our first attempt was to add Guice to the project to organize dependency injection, but adopting it meant reworking almost the entire project core. And since Spring is already among the dependencies of appium-java-client, the choice became obvious, and our next step was to introduce Spring.

Introducing Spring into our project required minimal changes. Deep inside the project, only the JBehave steps factory and a couple of lines in the Allure report integration changed. Almost all classes were declared components, and most of the dependencies were removed.

The result was not only composite steps, in which we can reuse smaller actions, but also the ability to move common solutions into a library (the GitHub link was at the very beginning). This matters to us because we use this solution on several mobile projects.

The most unusual effect is that we can now write tests for the tests. However odd and clumsy that may sound, a lot depends on the quality of the tool itself, so it also has to be maintained and developed. Work on test coverage is still under way: as we change the core of the project, we will expand the test coverage inside the library.
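The composite-step idea can be illustrated without Spring: small steps are passed into a larger one through its constructor, which is the same kind of wiring Spring performs for components. Everything here (`Screen`, `BasicSteps`, `NavigationSteps`, the “Back” and “Main” names) is hypothetical, and `FakeScreen` replaces a real device.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical view of the device; in the real framework this would wrap Appium.
interface Screen {
    String currentScreen();
    void tap(String elementName);
}

// Small, atomic steps (beans in a Spring-based setup).
final class BasicSteps {
    private final Screen screen;
    BasicSteps(Screen screen) { this.screen = screen; }
    void tap(String elementName) { screen.tap(elementName); }
    boolean isOn(String screenName) { return screen.currentScreen().equals(screenName); }
}

// An "uber-step" built from small steps received through constructor injection.
final class NavigationSteps {
    private final BasicSteps basic;
    NavigationSteps(BasicSteps basic) { this.basic = basic; }

    // Taps "Back" until the main screen is shown, with a safety limit on the number of taps.
    void returnToMainScreen(int maxBackTaps) {
        for (int i = 0; i < maxBackTaps && !basic.isOn("Main"); i++) {
            basic.tap("Back");
        }
        if (!basic.isOn("Main")) throw new IllegalStateException("Main screen not reached");
    }
}

// Fake navigation stack standing in for a device, used for demonstration and tests.
final class FakeScreen implements Screen {
    final List<String> stack = new ArrayList<>(List.of("Main", "Payments", "Mobile payment"));
    public String currentScreen() { return stack.get(stack.size() - 1); }
    public void tap(String elementName) {
        if (elementName.equals("Back") && stack.size() > 1) stack.remove(stack.size() - 1);
    }
}
```

Injecting a fake screen is also what makes “tests for tests” practical: the navigation logic can be checked without a phone attached.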

Running the project

As already mentioned, we run our project on a specific set of devices in parallel.

An example of running a project from the console:

    ./gradlew --info clean test --tests "*AndroidStories*" -Dorg.gradle.project.platform=Nexus6p_android6 -Dorg.gradle.project.user=6056789 -Dorg.gradle.project.testType=smokeNewReg -Dorg.gradle.project.buildVersion=9.0.0.7,development

From this example you can see that the tests are run for Android (`--tests "*AndroidStories*"`). The following parameters are also passed:

The device the tests will run on, Nexus6p_android6. Do not forget to describe the device in the project, as we wrote above. Here is how we do it.

The device.properties file contains:

    UDID=ENU14008659
    deviceName=Nexus6p

The test_node.json file contains data for running the node.

The test user, 6056789, whose data will be used. The project has a whole set of test users that we use to run our tests. The user must be described in user.properties.

The testing type, smokeNewReg. Our framework implements selection of test scenarios by Meta tags: every scenario has a set of tags in its Meta block.

    Meta:
    @regressCycle @smokeCycle

The testCycle.properties file contains the keys and values for the tags.

    smoke=+smokeCycle,+oldRegistration,-skip
    smokeNewReg=+smokeCycle,+newRegistrationCardNumber,-skip
    smokeNewAccountReg=+smokeCycle,+newRegistrationAccountNumber,-skip
    regress=+regressCycle,+oldRegistration,-skip
    regressNewReg=+regressCycle,+newRegistrationCardNumber,-skip

Thanks to JBehave's Meta Matcher, we can thus build the set of test scenarios for a specific testing cycle.
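To illustrate how a cycle definition such as `smokeNewReg=+smokeCycle,+newRegistrationCardNumber,-skip` can select scenarios, here is a simplified, hypothetical `CycleFilter`. Its rule is an assumption: a scenario is selected if it carries at least one “+” tag (or the filter has none) and none of the “-” tags. JBehave's own meta filtering, which the project actually relies on, has its own syntax and matching rules, and colibri-ui may add logic on top.

```java
import java.util.Set;

// Simplified, hypothetical cycle filter over a testCycle.properties value.
final class CycleFilter {
    static boolean selects(String filter, Set<String> scenarioTags) {
        boolean hasInclude = false;
        boolean included = false;
        for (String raw : filter.split(",")) {
            String token = raw.trim();
            if (token.isEmpty()) continue;
            boolean exclude = token.startsWith("-");
            String tag = (exclude || token.startsWith("+")) ? token.substring(1) : token;
            if (exclude) {
                if (scenarioTags.contains(tag)) return false; // any "-" tag rules the scenario out
            } else {
                hasInclude = true;
                included |= scenarioTags.contains(tag);       // assumed: one "+" tag is enough
            }
        }
        return !hasInclude || included;
    }
}
```

Under this rule, the scenario tagged `@regressCycle @smokeCycle` above would run in both the smoke and the regression cycles, while adding `@skip` removes it from all of them.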

The build number to download from the centralized repository, and the branch to download the build from. In our case, the environmentAndroid.properties file contains a URL with placeholders that are filled in from the parameters passed on the command line.

    remoteFilePathReleaseAndDevelopment=http://mobile/android/mobile-%2$s/%1$s/mobile-%2$s-%1$s.apk

Now that you know how to start the project from the console, you can easily integrate it into Jenkins. Our projects have such an integration, and a tester only needs to create a job in Jenkins to run the autotests.
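The `%1$s` / `%2$s` placeholders are Java format specifiers, so the URL can be filled in with `String.format`. This sketch assumes that the `buildVersion` value from the command-line example (`9.0.0.7,development`) is “build number, branch”, that `%1$s` receives the build number and `%2$s` the branch; `BuildUrl` is a hypothetical class.

```java
// Hypothetical helper: fills the remoteFilePathReleaseAndDevelopment template from buildVersion.
final class BuildUrl {
    static final String TEMPLATE = "http://mobile/android/mobile-%2$s/%1$s/mobile-%2$s-%1$s.apk";

    // Assumes buildVersion is "<build number>,<branch>".
    static String apkUrl(String template, String buildVersion) {
        String[] parts = buildVersion.split(",", 2);
        if (parts.length != 2) {
            throw new IllegalArgumentException("Expected <build>,<branch> but got: " + buildVersion);
        }
        // %1$s -> build number, %2$s -> branch; each may appear several times in the template.
        return String.format(template, parts[0].trim(), parts[1].trim());
    }
}
```

For `9.0.0.7,development` this gives `http://mobile/android/mobile-development/9.0.0.7/mobile-development-9.0.0.7.apk`.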

We have also built a farm of mobile devices: a Mac Pro with about ten mobile devices connected to it.

Generating the report

Our project generates reports with Allure. So once the tests have finished, it is enough to run `allure generate directory-with-results`.

In the report we can see the status of each scenario. If you open a scenario in the report, you can see the steps the test went through, down to almost every method call. If something failed, the step with the error contains a screenshot.

We used to take a screenshot at every step, but decided that was pointless, so now screenshots are taken only for failed scenarios. Besides, as the number of automated scenarios grows, the report takes up more and more space.
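The “screenshot only on failure” policy could be implemented as a wrapper around each step. In this hypothetical `ReportingStepRunner`, the screenshot source is a plain `Supplier<byte[]>` (in practice it would wrap the driver's screenshot call) and attachments are collected in a list instead of being sent to Allure, to keep the sketch dependency-free.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Hypothetical step runner: a screenshot is captured only when a step fails.
final class ReportingStepRunner {
    private final Supplier<byte[]> screenshot;
    final List<String> attachments = new ArrayList<>();

    ReportingStepRunner(Supplier<byte[]> screenshot) {
        this.screenshot = screenshot;
    }

    void step(String name, Runnable action) {
        try {
            action.run();
        } catch (RuntimeException | AssertionError e) {
            byte[] png = screenshot.get();                      // taken only on the failing step
            attachments.add(name + " (" + png.length + " bytes)");
            throw e;                                            // the step still fails in the report
        }
    }
}
```

Successful steps add nothing to the report, which is what keeps its size under control as the number of scenarios grows.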

What we got

Let's sum up against the goals we set for ourselves.

- We built a test automation tool with a fairly low entry threshold. In practice, a tester needs about two weeks on average to start writing and running autotests confidently. What testers struggle with most is setting up the Appium environment.

- Test scenarios are understood by all team members, which is especially important when your project follows the BDD methodology.

- Our framework can work with two platforms, iOS and Android, simultaneously.

- We currently have a farm of ten mobile devices and a Mac Pro. The project is integrated with Jenkins, and any tester can run autotests in parallel on all ten devices.

- Our solution is scalable: several mobile projects already actively use our framework to run their autotests.

As a bonus:

- On one mobile project, automation completely freed the functional testers from testing the front end for backward compatibility with the backend. After automation was introduced, this testing became 8 times faster (1 hour instead of 8).

- Testers write new autotests within the sprint, alongside the development of new functionality in the mobile applications;

- Part of the regression testing is already automated, and as a result we have cut regression time from 8 days to 1 day. This lets us release more often, and testers no longer drop out of their teams for the duration of regression testing. And they have simply become a little happier :)

The results met our expectations and confirmed that the decision to invest in automation was the right one.

We continue to develop our solution, which is available on GitHub: the colibri-ui library and the colibri-ui-template template for a quick start. More to come!

If you want to join the Alfa Lab testers (or join us in another role), we have open vacancies.

Source: https://habr.com/ru/post/335278/
