How Discord Scaled Elixir to 5 Million Concurrent Users

From the very beginning, Discord has been an active user of Elixir. The Erlang VM was the perfect candidate for the highly concurrent, real-time system we were planning to build. We developed the original Discord prototype in Elixir, and Elixir now forms the core of our infrastructure. Elixir's mission is simple: give access to all the power of the Erlang VM through a much more modern and user-friendly language and toolkit.

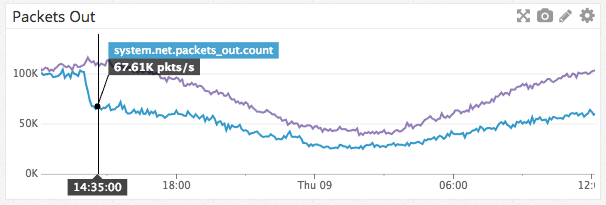

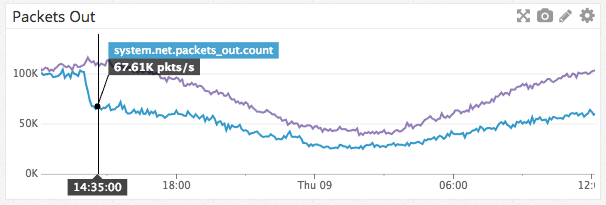

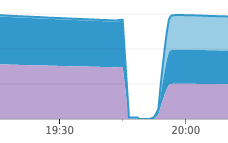

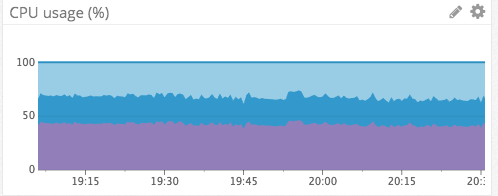

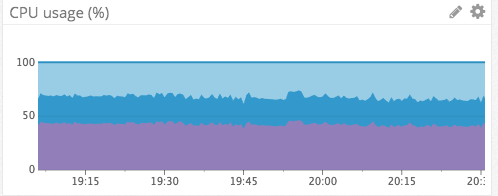

Two years later, we have five million concurrent users, and millions of events per second flow through the system. We do not regret our choice of architecture at all, but reaching this point took a great deal of research and experimentation. Elixir is a young ecosystem, and the Erlang ecosystem lacks information about running it in production (although Erlang in Anger is a notable exception). Over the course of this journey, as we adapted Elixir to Discord's needs, we learned a number of lessons and built several libraries.

Message fanout

Although Discord has many features, at its core everything comes down to pub/sub. Users connect to a WebSocket and spin up a session process (a GenServer), which then communicates with remote Erlang nodes running guild processes (also GenServers). When anything is published in a guild (the internal name for a "Discord server"), it is fanned out to every connected session.


For example, when a user comes online, their session connects to the guild, and the guild publishes the user's presence to all other connected sessions. There is a lot of other logic involved, but here is a simplified example:

```elixir
# Simplified guild process callback: fan a published message out
# to every connected session with one send/2 per session.
def handle_call({:publish, message}, _from, %{sessions: sessions} = state) do
  Enum.each(sessions, &send(&1.pid, message))
  {:reply, :ok, state}
end
```

This approach was fine when we originally built Discord for groups of 25 or fewer users. However, we were fortunate to run into the "good problems" of growth as people started using Discord in large communities. Eventually, many Discord servers, such as /r/Overwatch, had up to 30,000 users online at the same time. During peak hours, we saw these processes fail to keep up with their message queues. At one point, we had to intervene manually and turn off message-generating features to help cope with the load. We had to solve the problem before it got out of hand.
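The handler above is only a callback. Here is a hedged, self-contained sketch that wraps it in a minimal GenServer so it can be run as is. The module name GuildSketch, the start_link/1 and publish/2 helpers, and the %{pid: pid} session shape are assumptions made for illustration, not Discord's code.

```elixir
defmodule GuildSketch do
  # Hypothetical, minimal guild process around the simplified handler.
  # Sessions are stored as maps with a :pid key to match &1.pid below;
  # all other guild state is omitted.
  use GenServer

  def start_link(session_pids) do
    sessions = Enum.map(session_pids, &%{pid: &1})
    GenServer.start_link(__MODULE__, %{sessions: sessions})
  end

  def publish(guild, message), do: GenServer.call(guild, {:publish, message})

  @impl true
  def init(state), do: {:ok, state}

  # Naive fanout: one send/2 per connected session, all executed inside
  # this single guild process.
  @impl true
  def handle_call({:publish, message}, _from, %{sessions: sessions} = state) do
    Enum.each(sessions, &send(&1.pid, message))
    {:reply, :ok, state}
  end
end

# Usage: two "sessions" (both the current process) receive one message each.
{:ok, guild} = GuildSketch.start_link([self(), self()])
:ok = GuildSketch.publish(guild, :hello)
```

Because every send/2 runs inside the one guild process, publish time grows linearly with the number of connected sessions.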

We started by benchmarking the busiest code paths within the guild processes and soon found an obvious culprit. Sending messages between Erlang processes was not as cheap as we had expected, and the cost in reductions (Erlang's unit of work for process scheduling) was also very high. We found that a single send/2 call could take anywhere from 30 μs to 70 μs because Erlang deschedules the calling process. This meant that during peak hours, publishing a single event from a large guild could take from 900 ms to 2.1 s! Erlang processes are single-threaded, and the only way to parallelize the work is to shard it. That would have been a significant undertaking, and we knew there had to be a better option.

We needed a way to distribute the work of sending messages. Since spawning processes in Erlang is cheap, our first idea was simply to spawn a new process to handle each publish. However, each publish could then be delivered at a different time, and Discord clients depend on the linearizability of events. This solution also would not scale well, because the guild service was taking on more and more work.
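As a rough check on the peak-hour figures, assume a guild with 30,000 connected sessions (the /r/Overwatch figure above) and one send/2 per session. The total publish time is then the per-call cost multiplied by the session count:

$$
30{,}000 \times 30\,\mu\text{s} = 900\,\text{ms}, \qquad 30{,}000 \times 70\,\mu\text{s} = 2.1\,\text{s}
$$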

Inspired by a blog post about improving the performance of message passing between nodes, we created Manifold. Manifold distributes the work of sending messages across the remote nodes that host the target PIDs (Erlang process identifiers). This guarantees that the sending process calls send/2 at most once per remote node involved. Manifold first groups the PIDs by their remote node and then sends them to the Manifold.Partitioner on each of those nodes. The partitioner consistently hashes the PIDs using :erlang.phash2/2, groups them by the number of cores, and sends them to child workers. Finally, the workers send the messages to the actual processes. This keeps the partitioner from getting overloaded and preserves the linearizability that send/2 provides. The result was effectively a drop-in replacement for send/2:

```elixir
Manifold.send([self(), self()], :hello)
```

A remarkable side effect of Manifold was that it not only distributed the CPU cost of fanning out messages but also reduced network traffic between nodes:

Network traffic reduction on a single guild node

Manifold is available on GitHub, so give it a try.
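The two grouping steps described above can be sketched with plain Elixir functions. This is a hypothetical illustration, not Manifold's code: FanoutSketch, group_by_node/1 and partition/2 are made-up names, and the real library also runs the partitioner and worker processes that actually deliver the messages.

```elixir
defmodule FanoutSketch do
  # Hypothetical illustration of the two grouping steps; not Manifold's code.

  # Step 1 (sender): group target PIDs by the node they run on, so the
  # sender needs one send/2 per remote node rather than one per PID.
  def group_by_node(pids), do: Enum.group_by(pids, &node/1)

  # Step 2 (partitioner on each node): split that node's PIDs into one
  # bucket per core. :erlang.phash2/2 is deterministic, so a given PID
  # always lands in the same bucket, and therefore with the same worker.
  def partition(pids, buckets \\ System.schedulers_online()) do
    Enum.group_by(pids, &:erlang.phash2(&1, buckets))
  end
end
```

Using a deterministic hash rather than, say, round-robin assignment keeps every message for a given PID on one worker. That is how per-recipient ordering can survive the extra hop.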

Fast access to shared data

Discord is a distributed system built on consistent hashing. This method requires a ring data structure that can be used to look up the node responsible for a particular entity. We wanted those lookups to be fast, so we chose Chris Moos's wonderful library and connected it through an Erlang C port (a process that acts as the interface to C code). It worked well, but as Discord scaled, we started to notice problems during bursts of reconnecting users. The Erlang process responsible for managing the ring became so busy that it could not keep up with requests to the ring, and the whole system was overwhelmed. At first, the solution seemed obvious: run multiple processes holding the ring data to make better use of all the machine's cores when serving requests. But this lookup sits on a critical path. Was there a better option?
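A consistent-hash ring places each node at several points on a hash circle. An object belongs to the first node point at or after the object's own hash. The sketch below is a hypothetical pure-Elixir illustration, not the C library Discord used: RingSketch and its 8 virtual nodes per node are assumptions. It also scans a sorted list linearly, where a real implementation would use a faster search.

```elixir
defmodule RingSketch do
  # Hypothetical: number of points each node gets on the ring.
  @vnodes 8

  # Build the ring as a sorted list of {point, node_name} tuples.
  def new(node_names) do
    points = for name <- node_names, i <- 1..@vnodes, do: {:erlang.phash2({name, i}), name}
    Enum.sort(points)
  end

  # Return the owner of `key`: the first point >= hash(key), wrapping
  # around to the first point on the ring when no larger point exists.
  def find([{_, first_owner} | _] = ring, key) do
    hash = :erlang.phash2(key)

    case Enum.find(ring, fn {point, _owner} -> point >= hash end) do
      {_point, owner} -> owner
      nil -> first_owner
    end
  end
end
```

The useful property is that removing a node only remaps the keys that node owned. Every other key keeps the same successor point.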

Let's look at the components.

- A user can be in any number of guilds, but the average is 5.

- An Erlang VM responsible for sessions can host up to 500,000 live sessions.

- When a session connects, it has to look up the remote node for each guild it is interested in.

- Communicating with another Erlang process using request/reply takes about 12 μs.
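Multiplying these figures gives a back-of-the-envelope cost for one session server reconnecting all of its sessions. The estimate assumes each guild lookup is a separate request/reply to the ring-owning process:

$$
500{,}000 \times 5 \times 12\,\mu\text{s} = 2.5 \times 10^{6} \text{ lookups} \times 12\,\mu\text{s} = 30\,\text{s}
$$

The same $2.5 \times 10^{6}$ lookups also explain the later totals: $2.5 \times 10^{6} \times 7\,\mu\text{s} = 17.5\,\text{s}$ for ETS reads and $2.5 \times 10^{6} \times 0.3\,\mu\text{s} = 0.75\,\text{s}$ for the shared-literal approach.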

If a session server crashed and restarted, it spent about 30 seconds on ring lookups alone. That does not even account for Erlang descheduling the single ring process in favor of other processes' work. Could we eliminate these costs entirely?

In Elixir, the first step toward faster data access is usually ETS. ETS is a fast, mutable dictionary implemented in C; the trade-off is that data is copied into it on writes and out of it on reads. We could not simply move our ring into ETS, because we were managing it through a C port, so we rewrote the ring in pure Elixir. Once that was done, a single process owned the ring and continuously copied it into ETS, and other processes read the ring directly from ETS. This noticeably improved performance, but ETS reads took about 7 μs, and we were still spending 17.5 seconds on ring lookups. The ring data structure is quite large, and copying it in and out of ETS accounted for most of that time. We were disappointed; in other programming languages, we could simply have shared the value for safe reads. There had to be a way to do this in Erlang!
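Here is a minimal sketch of the ETS arrangement, assuming a single named table (:ets_ring_sketch, a hypothetical name) that holds the whole ring under one key. The table belongs to whichever process creates it, so in practice that would be the long-lived ring owner.

```elixir
defmodule EtsRingSketch do
  @table :ets_ring_sketch

  # Owner side (hypothetical): store or replace the whole ring under one
  # key in a public, read-optimized ETS table.
  def publish(ring) do
    if :ets.whereis(@table) == :undefined do
      :ets.new(@table, [:set, :named_table, :public, read_concurrency: true])
    end

    true = :ets.insert(@table, {:ring, ring})
    :ok
  end

  # Reader side: no message to the owner, but :ets.lookup/2 copies the
  # stored term into the caller's heap, so reading a large ring is costly.
  def get do
    [{:ring, ring}] = :ets.lookup(@table, :ring)
    ring
  end
end
```

Any process can call get/0 without messaging the owner, which removes the single-process bottleneck. The per-read copy remains, and that copy is the cost described above.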

After some research, we found mochiglobal, a module that exploits a feature of the VM: if Erlang sees a function that always returns the same constant data, it puts that data into a read-only shared heap that processes can access without copying. mochiglobal takes advantage of this by generating an Erlang module with a single function at runtime and compiling it. Since the data is never copied, the lookup cost dropped to 0.3 μs, bringing the total time down to 750 ms! There is no free lunch, though: compiling a module with a data structure this large at runtime can take up to a second. Fortunately, we rarely change the ring, so this was a price we were willing to pay.

We decided to port mochiglobal to Elixir and add some functionality to avoid creating atoms. Our version is called FastGlobal.
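The mochiglobal trick can be sketched in Elixir with Module.create/3: compile a module whose only function returns the stored term as a literal. This is a hypothetical illustration, not FastGlobal's or mochiglobal's actual code. GlobalSketch, put/2 and get/1 are made-up names, and the sketch does not reproduce FastGlobal's handling for avoiding atom creation.

```elixir
defmodule GlobalSketch do
  # Hypothetical: store `value` by compiling a module whose value/0
  # function returns it as a literal. Module literals live in a shared
  # area, so callers get the term without copying it into their heaps.
  def put(key, value) when is_atom(key) do
    module = module_name(key)

    # Remove any earlier version: purge old code, mark current code as
    # old, then purge it too, so the new module can be loaded cleanly.
    :code.purge(module)
    :code.delete(module)
    :code.purge(module)

    contents =
      quote do
        def value, do: unquote(Macro.escape(value))
      end

    {:module, ^module, _binary, _} =
      Module.create(module, contents, Macro.Env.location(__ENV__))

    :ok
  end

  def get(key) when is_atom(key), do: module_name(key).value()

  # Module.concat/2 creates one atom per key; this sketch does not try
  # to avoid that.
  defp module_name(key), do: Module.concat(__MODULE__, key)
end
```

Every put/2 recompiles a module, so the approach only pays off for data that, like the ring, changes rarely.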

Limited concurrency

After solving the node lookup performance problem, we noticed that the processes responsible for guild_pid lookups on the guild nodes were getting backed up. The previously slow node lookups had been shielding them. The new problem was that about 5,000,000 session processes were hammering ten of these processes (one per guild node). Making this processing faster did not solve the problem. The fundamental issue was that a session process's call to this guild registry would time out and leave its request in the registry's queue. After a while the session retried the request, and the requests kept piling up until they reached an unrecoverable state. Sessions would block on these requests until they timed out, all while receiving messages from other services. Their message queues ballooned, which eventually caused the entire Erlang VM to run out of memory (OOM) and led to cascading outages.

We needed to make session processes smarter; ideally, they would not even attempt these calls to the guild registry if failure was inevitable. We did not want to use a circuit breaker, because a burst of timeouts could then push the system into a temporary state in which no attempts are made at all. We knew how to solve this in other languages, but how would we do it in Elixir?

In most other languages, we could use an atomic counter to track outstanding requests and bail out early when the count got too high, effectively implementing a semaphore. The Erlang VM is built around coordination between processes, but we did not want to overload a single process responsible for that coordination. After some research, we stumbled upon :ets.update_counter/4, which performs atomic increment operations on a number stored under a key in ETS. Since we needed high concurrency, we could run ETS in write_concurrency mode and still read the value, because :ets.update_counter/4 returns the updated result. This gave us the foundation for our Semaphore library. It is extremely easy to use and performs very well at high throughput:

```elixir
semaphore_name = :my_semaphore
semaphore_max = 10

case Semaphore.call(semaphore_name, semaphore_max, fn -> :ok end) do
  :ok -> IO.puts("success")
  {:error, :max} -> IO.puts("too many callers")
end
```

This library has helped protect our Elixir infrastructure. Just last week, we had a situation similar to the cascading outages described above, but this time there were no outages. Our presence services crashed for an unrelated reason, but the session services did not even budge, and the presence services recovered within minutes of being restarted:

Presence services in operation

CPU usage of the session services over the same period

You can find our Semaphore library on GitHub.
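The counting-semaphore idea behind the library can be sketched directly on :ets.update_counter/4. This is a hypothetical sketch, not the Semaphore library's implementation. SemaphoreSketch, its table name, and the increment-then-undo strategy are assumptions. The call returns the function's result when a slot is free and {:error, :max} otherwise, matching the shape of the Semaphore.call example above.

```elixir
defmodule SemaphoreSketch do
  @table :semaphore_sketch

  # Create the shared counter table once; write_concurrency lets many
  # processes update counters in parallel.
  def init do
    :ets.new(@table, [:set, :named_table, :public, write_concurrency: true])
    :ok
  end

  # Run `fun` only if fewer than `max` callers currently hold `name`.
  def call(name, max, fun) do
    # Atomically increment the counter (inserting {name, 0} first if the
    # key is missing) and get the new value back in the same operation.
    count = :ets.update_counter(@table, name, {2, 1}, {name, 0})

    if count > max do
      # Over the limit: undo our increment and fail fast instead of queueing.
      release(name)
      {:error, :max}
    else
      try do
        fun.()
      after
        release(name)
      end
    end
  end

  defp release(name), do: :ets.update_counter(@table, name, {2, -1})
end
```

Incrementing first and undoing on failure keeps the check and the update in one atomic step, so no coordinating process is needed. One limitation of this sketch: try/after releases the slot when the function raises, but a caller killed between the increment and the release would leak a slot.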

Conclusion

Choosing Erlang and Elixir, and working with them, has proven to be a great experience. If we had to go back and start over, we would definitely take the same path. We hope that sharing our experience and tools proves useful to other Elixir and Erlang developers, and we hope to keep sharing our work, the problems we solve, and the lessons we learn along the way.

Source: https://habr.com/ru/post/335220/
