Dedicated servers based on Intel Xeon Skylake-SP processors

On July 12, Intel introduced a new line of server processors, code-named Skylake-SP. The letters SP stand for Scalable Processors. The name is no accident: Intel implemented many interesting innovations and, as one review noted, "tried to please almost everyone."

SP processors are part of the Purley server platform, which has been called the “platform of the decade”.

Servers based on the new processors are already available to order in our data centers.


What innovations does Intel Skylake-SP bring? What are the technical specifications of these processors? What advantages do they have over previous models? We cover all of this in detail in this article.

New processors - new names

Previous Xeon lines were named using the Exvx scheme: E3v3, E3v5, and so on. The SP line uses a different naming scheme: all processors are divided into four series named Bronze, Silver, Gold, and Platinum. The series differ in core count and in the set of supported technologies.

Bronze processors are the simplest: they have up to 8 cores and do not support Hyper-Threading. Platinum processors, as the name implies, are designed for heavy loads and have the largest number of cores (up to 28).

Some model names carry new suffixes. The letter F indicates an integrated Omni-Path controller, M indicates support for a larger memory capacity (up to 1.5 TB per socket), and T indicates support for the NEBS (Network Equipment Building System) standard. Processors with the T suffix can withstand higher thermal loads, and their service life is much longer than that of other models.

Specifications

For our configurations, we chose Intel Xeon Silver 4114 and Intel Xeon Gold 6140. Their main technical characteristics are presented in the table below.

| Characteristic | Intel Xeon Silver 4114 | Intel Xeon Gold 6140 |
|---|---|---|
| Process technology | 14 nm | 14 nm |
| Number of cores | 10 | 18 |
| Number of threads | 20 | 36 |
| Base frequency | 2.20 GHz | 2.30 GHz |
| Maximum turbo frequency | 3.00 GHz | 3.70 GHz |
| L3 cache | 13.75 MB | 24.75 MB |
| Number of UPI links | 2 | 3 |
| TDP (thermal design power) | 85 W | 140 W |

Major innovations

Here is a list of the most significant innovations implemented in Skylake-SP processors:

- the AVX-512 instruction set, which significantly improves integer and floating-point performance;

- a six-channel memory controller (the previous line had a four-channel one);

- faster communication between processors (sockets) thanks to UPI (Ultra Path Interconnect);

- more PCIe lanes (up to 48);

- a new topology: a mesh architecture has replaced the ring bus.

Microarchitecture

The basic core structure of SP processors is the same as in earlier Skylake models, with some differences and improvements. The L2 cache has grown to 1 MB per core. The L3 cache is 1.375 MB per core. The L2 cache is filled directly from RAM, and lines that are no longer used are evicted into L3. Data shared by several cores is stored in L3.

One more point: the size of the L3 cache is not tied directly to the number of cores. There are eight-core, twelve-core, and even eighteen-core models (see the table above) with a 24.75 MB cache.

As many reviews note (see, for example, here), the emphasis is on the L2 cache. Skylake-SP processors have no built-in eDRAM at all.
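The cache figures above can be cross-checked with a little arithmetic. The sketch below uses only numbers from the text and the specification table; the function names are ours and purely illustrative.

```python
# Figures taken from the specification table and the text above.
L3_PER_CORE_MB = 1.375


def l3_from_cores(cores):
    """L3 size (MB) if every core contributes one 1.375 MB slice."""
    return cores * L3_PER_CORE_MB


def l3_per_core(total_l3_mb, cores):
    """L3 share (MB) per core for a given total cache size."""
    return total_l3_mb / cores


# Both models from the table match the per-core figure.
print("Silver 4114 (10 cores):", l3_from_cores(10), "MB")
print("Gold 6140 (18 cores):", l3_from_cores(18), "MB")

# Models that keep the full 24.75 MB with fewer cores get a larger share per core.
for cores in (8, 12, 18):
    print(cores, "cores:", l3_per_core(24.75, cores), "MB of L3 per core")
```

In other words, a lower core count with the same 24.75 MB cache simply means more L3 per core.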

New topology

An important innovation in Intel Skylake-SP processors is the move away from the ring bus, which had been used for communication between cores for almost 10 years.

The ring bus first appeared in 2009 in the eight-core Nehalem-EX processors. It ran very fast (at up to 3 GHz), and L3 cache latency was minimal. If a core found the data in its own cache slice, only one additional cycle was required. Fetching a cache line from another slice took up to 12 cycles (6 cycles on average).

The ring bus was later changed and improved many times. In the Ivy Bridge processors, introduced in 2012, three rows of cores were connected by two ring buses. The rings moved data in opposite directions (clockwise and counterclockwise), so data could travel along the shortest route, which reduced latency. Once data entered the ring, its route had to be coordinated so that it would not collide with data already in transit.

In the Intel Xeon E5v3 processors (2014), things became much more complicated: four rows of cores, two independent ring buses, and a buffered switch (for more on this, see the article linked above).

The ring bus became widespread when processors had at most 8 cores. Once core counts passed 20, it became clear that the technology was approaching its limits. Intel could, of course, have taken the simplest route and added a third ring. Instead, it chose a new bus topology: the mesh. This approach had already been tested in Xeon Phi processors (for more details, see this article).

Schematically, the mesh topology can be represented as follows:

Intel illustration

The new topology significantly speeds up communication between cores and makes memory access more efficient.
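To get a feel for why a mesh scales better than a ring, here is a deliberately simplified toy model. It counts only the hops between stops on a bidirectional ring and on a rectangular grid. The grid shapes (2×4 and 4×7) are our assumptions for illustration and do not reflect the real die layout or real latencies.

```python
from itertools import combinations, product


def ring_hops(a, b, stops):
    """Shortest distance between two stops on a bidirectional ring."""
    d = abs(a - b)
    return min(d, stops - d)


def mesh_hops(a, b):
    """Manhattan distance between two (row, col) nodes of a 2D mesh."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def average_ring_hops(stops):
    """Mean hop count over all distinct pairs of ring stops."""
    pairs = list(combinations(range(stops), 2))
    return sum(ring_hops(a, b, stops) for a, b in pairs) / len(pairs)


def average_mesh_hops(rows, cols):
    """Mean hop count over all distinct pairs of mesh nodes."""
    nodes = list(product(range(rows), range(cols)))
    pairs = list(combinations(nodes, 2))
    return sum(mesh_hops(a, b) for a, b in pairs) / len(pairs)


# Hypothetical layouts: 8 nodes as a ring or a 2x4 grid, 28 nodes as a ring or a 4x7 grid.
for stops, (rows, cols) in ((8, (2, 4)), (28, (4, 7))):
    print(f"{stops} nodes: ring {average_ring_hops(stops):.2f} hops, "
          f"{rows}x{cols} mesh {average_mesh_hops(rows, cols):.2f} hops")
```

In this model the two topologies are close at 8 nodes, while at 28 nodes the average distance on a single ring grows much faster than on the grid.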

New AVX-512 instruction set

Compute performance in Skylake-SP processors has been improved by the new AVX-512 instruction set. It extends the AVX instructions to 512-bit vectors holding 32-bit and 64-bit elements.

Programs can now pack 8 double-precision or 16 single-precision floating-point numbers, or 8 64-bit or 16 32-bit integers, into a single 512-bit vector. A single instruction therefore processes twice as many elements as with Intel AVX/AVX2 and four times as many as with Intel SSE.

AVX-512 is fully compatible with the AVX instruction set. In particular, both instruction sets can be used in one program without a performance penalty (a problem that did occur when SSE and AVX were mixed). The AVX registers (YMM0 to YMM15) map onto the lower halves of the AVX-512 registers (ZMM0 to ZMM15), just as the SSE registers map onto the AVX registers. On processors with AVX-512 support, AVX and AVX2 instructions therefore operate on the lower 128 or 256 bits of the first 16 ZMM registers.
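The element counts quoted above follow directly from dividing the vector width by the element width. A minimal sketch (the dictionary and function names are ours):

```python
# Register widths in bits for each instruction set family.
VECTOR_BITS = {"SSE": 128, "AVX/AVX2": 256, "AVX-512": 512}
# Element widths in bits for the data types mentioned in the text.
ELEMENT_BITS = {"float64": 64, "float32": 32, "int64": 64, "int32": 32}


def lanes(vector_bits, element_bits):
    """Number of elements of the given width that fit into one vector."""
    return vector_bits // element_bits


for isa, width in VECTOR_BITS.items():
    counts = ", ".join(f"{name}: {lanes(width, bits)}"
                       for name, bits in ELEMENT_BITS.items())
    print(f"{isa:8s} ({width} bits) -> {counts}")
```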
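The register overlap can be pictured with a small model in which one 512-bit value is viewed at three widths. This is only an illustration of the aliasing of the low bits (the class and its fields are hypothetical); it does not model how writes through the narrower views behave.

```python
XMM_MASK = (1 << 128) - 1  # low 128 bits (SSE view)
YMM_MASK = (1 << 256) - 1  # low 256 bits (AVX/AVX2 view)
ZMM_MASK = (1 << 512) - 1  # full 512 bits (AVX-512 view)


class VectorRegister:
    """Toy model: a single 512-bit value read at three different widths."""

    def __init__(self, value=0):
        self.zmm = value & ZMM_MASK

    @property
    def ymm(self):
        # The YMM register is the lower 256 bits of the ZMM register.
        return self.zmm & YMM_MASK

    @property
    def xmm(self):
        # The XMM register is the lower 128 bits of the same register.
        return self.zmm & XMM_MASK


# Place a distinct marker in each region of the register.
reg = VectorRegister((0xC << 384) | (0xB << 128) | 0xA)
print("xmm sees marker A only:", reg.xmm == 0xA)
print("ymm sees markers A and B:", reg.ymm == ((0xB << 128) | 0xA))
print("zmm also holds marker C:", (reg.zmm >> 384) == 0xC)
```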

Performance tests

Sysbench tests

A description of the new processors would be incomplete without performance test results. We ran such tests on two servers: one based on the Intel Xeon Platinum 8170 and one based on the Intel Xeon E5-2680v4. Both servers ran Ubuntu 16.04.

Let's start with the tests from the popular sysbench package.

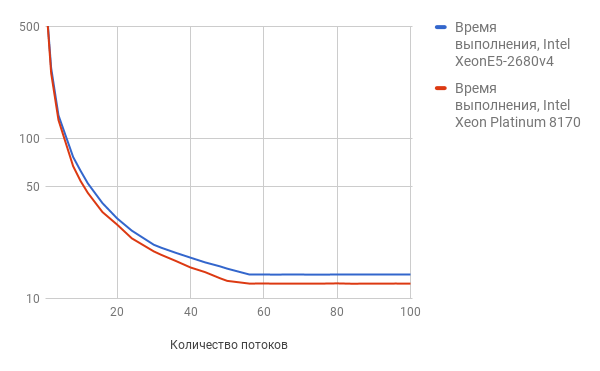

The first benchmark we ran was the prime number search test.

On both servers we installed sysbench and executed the command:

    sysbench --test=cpu --cpu-max-prime=200000 --num-threads=1 run

During the test, we increased the number of threads (the --num-threads parameter) from 1 to 100.

The results are shown in the graph (lower is better):
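A thread sweep like this is easy to script. The sketch below only builds and prints the command lines, using the flags quoted above. The specific thread counts are our assumption, since only the range of 1 to 100 is stated. Newer sysbench releases use a different command syntax, so check the installed version first.

```python
import shlex


def sysbench_cpu_command(threads, max_prime=200000):
    """Build the sysbench CPU test command for a given thread count."""
    return [
        "sysbench",
        "--test=cpu",
        f"--cpu-max-prime={max_prime}",
        f"--num-threads={threads}",
        "run",
    ]


# Assumed sweep points; the article only gives the range 1 to 100.
THREAD_COUNTS = [1, 2, 4, 8, 16, 32, 64, 100]

for threads in THREAD_COUNTS:
    # Dry run: print each command instead of executing it.
    print(shlex.join(sysbench_cpu_command(threads)))
```

To actually run the sweep, each command list can be passed to `subprocess.run(..., capture_output=True, text=True)` and the total time parsed from the output.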

As the number of threads increases, Intel Xeon Platinum 8170 performs better.

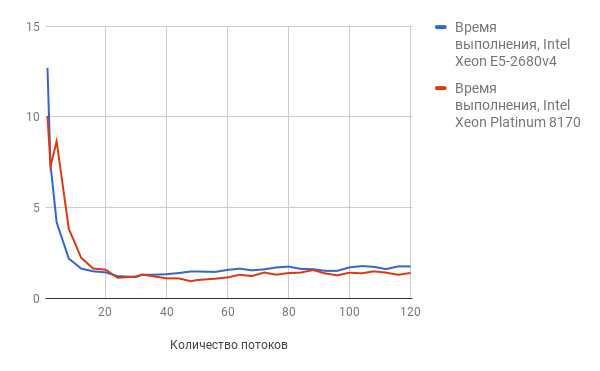

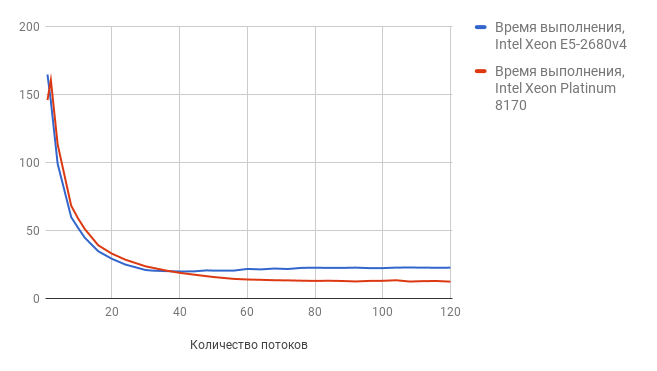

Now consider the results of the test measuring the speed of reads and writes to a memory buffer (lower is better):

Here we see a similar picture: as the number of threads increases, the Intel Xeon Platinum 8170 takes the lead.

The threads test checks how the system copes with a large number of concurrent threads. In our experiments, we increased the number of threads from 1 to 120.

The results of this test are presented below (lower is better):

As the graph shows, the Intel Xeon Platinum 8170 produces better results as the number of threads increases.

Linpack test

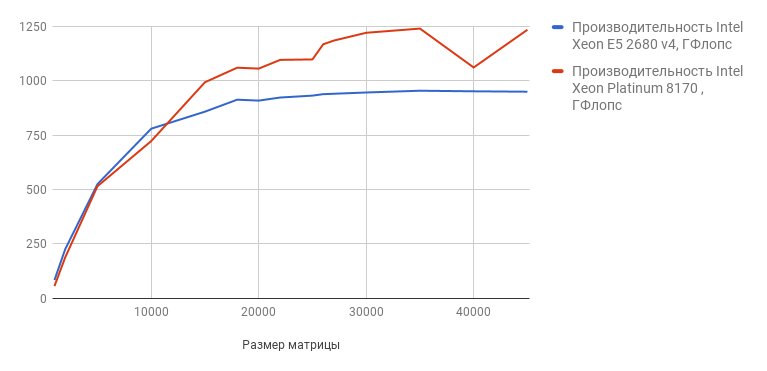

The next test we ran was Linpack. It measures floating-point performance and is the de facto standard for benchmarking computing systems. Its results are used to compile the list of the most powerful systems in the world.

The test solves a dense system of linear algebraic equations by LU decomposition. Performance is measured in flops, short for floating-point operations per second. For more on the Linpack algorithm, see here.

The Linpack benchmark can be downloaded from the Intel website. During the test, the program solves 15 systems of equations with matrices of different sizes (from 1000 to 45000). The results of our test are shown in the graph (higher is better):

As you can see, the new processor shows much better results. In the test with the largest matrix size (45,000), the Intel Xeon E5-2680v4 reached 948.9728 Gflops and the Intel Xeon Platinum 8170 reached 1233.2960 Gflops, about 30% more.
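To make the idea concrete, here is a minimal pure-Python sketch of solving a dense system by LU elimination with partial pivoting and converting the run time into flops. It is not the Intel benchmark, which relies on heavily optimized math libraries. The operation count 2/3·n³ + 2·n² is the conventional Linpack estimate and is used here as an assumption.

```python
import time


def lu_solve(a, b):
    """Solve a*x = b using LU elimination with partial pivoting."""
    n = len(a)
    a = [row[:] for row in a]  # work on copies so the inputs stay intact
    b = b[:]
    for k in range(n):
        # Partial pivoting: bring the largest entry of column k to the diagonal.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]  # multiplier, i.e. an entry of L
            for j in range(k + 1, n):
                a[i][j] -= factor * a[k][j]  # update toward U
            b[i] -= factor * b[k]  # forward substitution folded into the loop
    # Back substitution on the upper-triangular factor U.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - tail) / a[i][i]
    return x


def linpack_flop_count(n):
    """Conventional operation count for an n x n solve (assumed formula)."""
    return 2.0 * n ** 3 / 3.0 + 2.0 * n ** 2


def gflops(n, seconds):
    """Convert a solve time into Gflops."""
    return linpack_flop_count(n) / seconds / 1e9


n = 80  # tiny compared with the 1000 to 45000 range used in the article
a = [[1.0 / (i + j + 1) + (n if i == j else 0.0) for j in range(n)]
     for i in range(n)]
b = [sum(row) for row in a]  # chosen so that the exact solution is all ones

start = time.perf_counter()
x = lu_solve(a, b)
elapsed = max(time.perf_counter() - start, 1e-9)

print(f"max error: {max(abs(v - 1.0) for v in x):.2e}")
print(f"{gflops(n, elapsed):.6f} Gflops in pure Python")
```

The absolute number is meaningless next to an optimized benchmark, but it shows how a solve time turns into a flops figure.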

Boost build speed

To evaluate processor performance, it is highly desirable to run not only synthetic benchmarks but also tests that are as close as possible to real-world use. We therefore measured how quickly our servers build the C++ Boost libraries from source.

We used the latest stable version of Boost at the time, 1.64.0, and downloaded the source archive from the official site.

On the server with the Intel Xeon E5-2680v4 processor, the build took 12 minutes 25 seconds. The server based on the Intel Xeon Platinum coped with the task even faster: 9 minutes 16 seconds.
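For reference, the headline numbers from the build test and from the Linpack test can be turned into relative gains as follows. All inputs come from the results above; the variable names are ours.

```python
def to_seconds(minutes, seconds):
    """Convert a minutes-and-seconds duration into seconds."""
    return minutes * 60 + seconds


# Boost build times reported above.
e5_2680v4_build_s = to_seconds(12, 25)
platinum_build_s = to_seconds(9, 16)

build_speedup = e5_2680v4_build_s / platinum_build_s
time_saved = 1 - platinum_build_s / e5_2680v4_build_s

# Linpack results for the largest matrix size, in Gflops.
linpack_ratio = 1233.2960 / 948.9728

print(f"Boost build: {build_speedup:.2f}x faster, {time_saved:.1%} less time")
print(f"Linpack: {linpack_ratio:.2f}x the Gflops")
```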

Conclusion

In this article, we have reviewed the major innovations that have appeared in Intel Skylake-SP processors. For those who want to learn more, here is a selection of useful links on the topic:

- http://www.anandtech.com/show/11544/intel-skylake-ep-vs-amd-epyc-7000-cpu-battle-of-the-decade

- https://servernews.ru/955164

- https://itpeernetwork.intel.com/intel-mesh-architecture-data-center

- https://software.intel.com/en-us/node/683422

Servers based on new processors are already available for ordering at data centers in St. Petersburg and Moscow.

We offer the following configurations:

| CPU | Memory | Disks |
|---|---|---|
| Intel Xeon Silver 4114 | 96 GB DDR4 | 2 × 480 GB SSD + 2 × 4 TB SATA |
| Intel Xeon Silver 4114 | 192 GB DDR4 | 2 × 480 GB SSD |
| Intel Xeon Silver 4114 | 384 GB DDR4 | 2 × 480 GB SSD |
| Intel Xeon Gold 6140 2.1 | 384 GB DDR4 | 2 × 800 GB SSD |

To rent a server based on the new processors, you currently need to pre-order; they will soon be available on a permanent basis.

Source: https://habr.com/ru/post/335132/
