37 reasons why your neural network is not working

The network had been training for the last 12 hours. Everything looked good: the gradients were stable and the loss was decreasing. But then the predictions came in: all zeros, all background, nothing detected. “What did I do wrong?” I asked my computer, which said nothing in reply.

Why does the neural network produce garbage (for example, the average of all the results or does it have really weak accuracy)? How to start checking?

The network may not be trained for several reasons. As a result of many debug sessions, I noticed that I often do the same checks. Here I gathered my experience together with the best ideas of my colleagues. I hope this list will be useful to you.

Much can go wrong. But some problems are more common than others. I usually start with this small list as an emergency kit:

')

If all else fails, proceed to reading this long list and check each item.

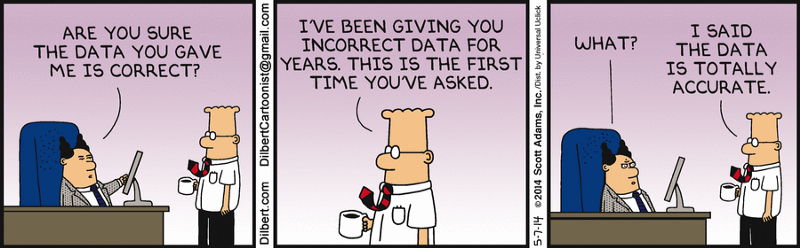

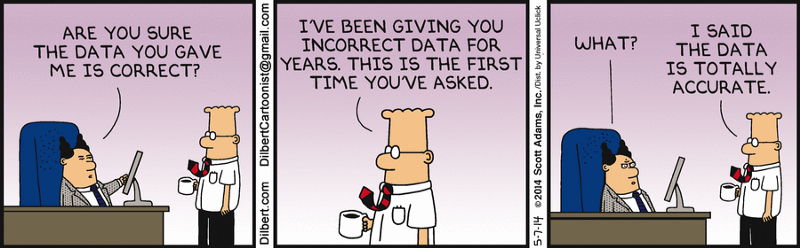

Source: http://dilbert.com/strip/2014-05-07

Verify that the input data makes sense. For example, I have often mixed in a heap the height and width of images. Sometimes by mistake I gave all zeros to a neural network. Or used the same batch over and over again. So type / view a couple of batches of input data and planned output — make sure everything is in order.

Try sending random numbers instead of real data and see if the same error remains. If so, then this is a sure sign that your network at some stage turns data into garbage. Try debugging layer by layer (operation by operation) and see where the crash occurs.

With data, everything can be in order, and an error in the code that transmits the input data of the neural network. Print and check the input data of the first layer before starting its operations.

Check that several samples of input data are labeled correctly. Also check that the swapping of input samples is also reflected in the output labels.

Maybe the non-random parts of the relationship between the input and the output are too small compared to the random part (someone might say that these are quotes on the stock exchange). That is, the input is not sufficiently connected to the output. There is no universal method here, because the measure of randomness depends on the type of data.

Once this happened to me when I pulled off a set of food images from the site. There were so many bad marks that the network could not learn. Manually check a series of sample input values and see that all labels are in place.

This item is worth a separate discussion, because this work shows accuracy above 50% on the basis of MNIST with 50% of damaged tags.

If your data is not mixed and arranged in a certain order (sorted by tags), this may adversely affect the training. Shuffle the dataset: make sure you mix both the input data and the tags.

Is there a thousand class A images per class B image in the dataset? Then you may need to balance the loss function or try other imbalance approaches .

If you train the network from scratch (that is, do not configure it), then a lot of data may be needed. For example, to classify images, they say , you need a thousand images for each class, or even more.

This happens in a sorted dataset (that is, the first 10,000 samples contain the same class). Easily corrected by mixing the data set.

This work indicates that too large batches may reduce the ability of the model to generalize.

Thanks hengcherkeng for this:

Did you calibrate the input data to zero mean and unit variance?

Augmentation has a regularizing effect. If it is too strong, then this, together with other forms of regularization (L2-regularization, dropout, etc.) can lead to under-training of the neural network.

If you are using a model that has already been prepared, then make sure that the same normalization and preprocessing is used as in the model you are teaching. For example, should a pixel be in the range [0, 1], [-1, 1] or [0, 255]?

CS231n pointed to a typical trap :

Also check for different preprocessing of each sample and batch.

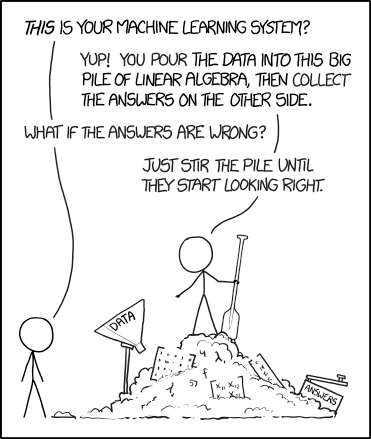

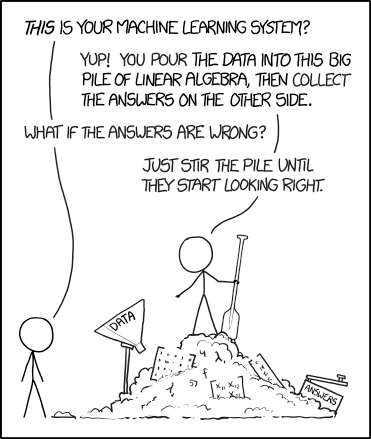

Source: https://xkcd.com/1838/

This will help determine where the problem is. For example, if the target output is the object class and coordinates, try restricting the prediction to only the object class.

Again from matchless CS231n : Initialize with small parameters, without regularization. For example, if we have 10 classes, then “by probability” means that the correct class is determined in 10% of cases, and the Softmax loss function is the inverse logarithm to the probability of the correct class, that is, it turns out −ln(0.1)=$2.30

After that, try increasing the regularization strength, which should increase the loss function.

If you have implemented your own, check it for bugs and add unit tests. I have often had a slightly wrong loss function subtly harming network performance.

If you use the loss function of the framework, then make sure that you give it what you need. For example, in PyTorch, I would mix NLLLoss and CrossEntropyLoss, because the first one requires softmax input data, and the second one does not.

If your loss function consists of several functions, check their relation to each other. For this you may need to test in different versions of the relationships.

Sometimes the loss function is not the best predictor of how well your neural network is learning. If possible, use other indicators, such as accuracy.

Have you independently implemented any of the network layers? Double check that they work as expected.

Look, maybe you unintentionally turned off the gradient updates of some layers / variables.

Perhaps the expressive power of the network is not enough to assimilate the objective function. Try adding layers or more hidden units to fully connected layers.

If your input looks like (k,H,W)=(64,64,64) then it is easy to miss the error due to incorrect measurements. Use unusual numbers to measure input data (for example, different simple numbers for each dimension) and see how they are distributed throughout the network.

If you independently implemented Gradient Descent, then with the help of Gradient Checking you can be sure of the correct feedback. Additional information: 1 , 2 , 3 .

Source: http://carlvondrick.com/ihog/

Re-train the network on a small dataset and make sure it works . For example, teach it with just 1-2 examples and see if the network is able to distinguish objects. Go to more samples for each class.

If unsure, use Xavier or He initialization. In addition, your initialization can lead to a bad local minimum, so try a different initialization, it can help.

Maybe you are using a bad set of hyperparameters. If possible, try grid search .

Due to too much regularization, the network may be specifically under-trained. Reduce regularization, such as dropout, batch norm, L2-regularization weight / bias, etc. In an excellent course “ Practical depth training for programmers, ” Jeremy Howard recommends getting rid of under- training first. That is, you need enough to retrain the network on the source data, and only then deal with retraining.

Maybe the network needs more time to learn before it starts making meaningful predictions. If the loss function is steadily decreasing, let it learn a little longer.

In some frameworks, the Batch Norm, Dropout, and others layers behave differently during training and testing. Switching to the right mode can help your network start making correct predictions.

Your choice of optimizer should not prevent the neural network from learning, unless you have specifically chosen poor hyperparameters. But the right optimizer for the task can help you get the best training in the shortest possible time. A scientific article describing the algorithm that you are using should also be mentioned by the optimizer. If not, I prefer to use Adam or plain SGD.

Read the excellent article by Sebastian Ruder to learn more about gradient descent optimizers.

Low learning speed will lead to a very slow convergence of the model.

A high learning rate will first quickly reduce the loss function, and then it will be difficult for you to find a good solution.

Experiment with the speed of learning, accelerating or slowing it down 10 times.

NaN (Non-a-Number) states are much more common when learning RNN (as I heard). Some ways to eliminate them:

Why is your neural network producing garbage (for example, predicting the mean of all outputs, or showing really poor accuracy)? Where do you start checking?

A network may fail to train for a number of reasons. Over many debugging sessions, I noticed that I often run the same checks. I have compiled my experience here, together with the best ideas from my colleagues. I hope this list will be useful to you.

Contents

0. How to use this guide?

I. Problems with data set

II. Data Normalization / Augmentation Problems

III. Implementation issues

IV. Learning problems

0. How to use this guide?

A lot can go wrong, but some problems are more common than others. I usually start with this short list as an emergency kit:


- Start with a simple model that is known to work for this type of data (for example, VGG for images). Use a standard loss function if possible.

- Turn off all the bells and whistles, such as regularization and data augmentation.

- If you are fine-tuning a model, double-check that the preprocessing matches the preprocessing used to train the original model.

- Verify that the input data is correct.

- Start with a really small dataset (2-20 samples). Then expand it, gradually adding new data.

- Gradually add back everything that was left out: augmentation / regularization, custom loss functions, more complex models.

If none of this helps, work through the long list below and check each item.

I. Problems with data set

Source: http://dilbert.com/strip/2014-05-07

1. Check the input data

Verify that the input data makes sense. For example, I have more than once mixed up the height and width of images. Sometimes I fed the network all zeros by mistake, or used the same batch over and over again. So print / display a couple of batches of input data and target output, and make sure everything is in order.
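Two of the mistakes above (all-zero input and a repeated batch) can be caught automatically. The following is a minimal sketch, assuming each batch has already been flattened into a plain list of numbers; `batch_problems` is a hypothetical helper name, not part of any framework.

```python
def batch_problems(batches):
    """Return a list of human-readable problems found in a sequence of batches.

    Assumption: each batch is a flat list of numbers (flatten tensors first).
    """
    problems = []
    previous = None
    for index, batch in enumerate(batches):
        # A batch made only of zeros usually means the loader is broken.
        if all(value == 0 for value in batch):
            problems.append(f"batch {index} is all zeros")
        # Identical consecutive batches suggest the iterator is not advancing.
        if previous is not None and batch == previous:
            problems.append(f"batch {index} repeats batch {index - 1}")
        previous = batch
    return problems


print(batch_problems([[0.1, 0.5], [0.1, 0.5], [0.0, 0.0]]))
```

A check like this does not replace looking at the data by eye, since it cannot tell whether height and width were swapped.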

2. Try random input values.

Try feeding random numbers instead of real data and see whether the error behaves the same way. If it does, this is a sure sign that your network is turning data into garbage at some stage. Try debugging layer by layer (operation by operation) and see where things go wrong.

3. Check the data loader

The data itself may be fine while the code that passes the input to the network is broken. Print the input of the first layer before any operations are applied and check it.

4. Make sure the input is connected to the output.

Check that a few input samples have the correct labels. Also make sure that shuffling the input samples shuffles the output labels in the same way.

5. Is the relationship between input and output too random?

Maybe the non-random part of the relationship between the input and the output is too small compared to the random part (one could argue that stock prices are like this). That is, the input is not sufficiently related to the output. There is no universal way to detect this, because it depends on the nature of the data.

6. Too much noise in the data set?

This happened to me once when I scraped a set of food images from a website. There were so many bad labels that the network could not learn. Manually check a number of input samples and see whether the labels look right.

Where the cutoff lies is open to debate, because one paper reports accuracy above 50% on MNIST with 50% of the labels corrupted.

7. Shuffle the dataset

If your dataset has not been shuffled and has a particular order (for example, sorted by label), this may hurt training. Shuffle the dataset, and make sure you shuffle the input data and the labels together.
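Shuffling inputs and labels separately silently breaks the pairing between them. One way to avoid that, sketched here with plain Python lists, is to shuffle a list of indices once and apply it to both. `shuffle_together` is a hypothetical helper, and the file names are made up for illustration.

```python
import random


def shuffle_together(inputs, labels, seed=None):
    """Shuffle inputs and labels with the same permutation so pairs stay aligned."""
    if len(inputs) != len(labels):
        raise ValueError("inputs and labels must have the same length")
    order = list(range(len(inputs)))
    random.Random(seed).shuffle(order)  # one permutation, used for both lists
    return [inputs[i] for i in order], [labels[i] for i in order]


inputs = ["cat.png", "dog.png", "bird.png", "fish.png"]
labels = ["cat", "dog", "bird", "fish"]
shuffled_inputs, shuffled_labels = shuffle_together(inputs, labels, seed=0)
```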

8. Reduce class imbalances

Are there a thousand class A images for every class B image in the dataset? Then you may need to balance the loss function or try other approaches to class imbalance.
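One common way to balance the loss is to weight each class inversely to its frequency. The sketch below assumes that weighting scheme (total / (number of classes × class count)); it is one option among several, not necessarily what the approaches referred to above use.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by total / (num_classes * count), so rare classes weigh more."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: total / (len(counts) * n) for cls, n in counts.items()}


# The imbalance from the text: a thousand class A samples per class B sample.
labels = ["A"] * 1000 + ["B"]
weights = inverse_frequency_weights(labels)
```

With a perfectly balanced dataset every weight comes out as 1.0, so the weighted loss reduces to the ordinary one.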

9. Are there enough samples for training?

If you train the network from scratch (that is, you are not fine-tuning it), you may need a lot of data. For image classification, for example, people say you need about a thousand images per class, or even more.

10. Make sure there are no batches with a single label.

This can happen in a sorted dataset (for example, the first 10,000 samples all belong to the same class). It is easily fixed by shuffling the dataset.
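A quick scan over the labels shows whether any batch would contain only one class. This is a minimal sketch, assuming batches are formed by slicing the label list in order; `single_label_batches` is a hypothetical helper.

```python
def single_label_batches(labels, batch_size):
    """Return the indices of batches whose samples all share one label."""
    bad = []
    for start in range(0, len(labels), batch_size):
        batch = labels[start:start + batch_size]
        # Ignore a trailing batch of size 1: it has a single label by construction.
        if len(batch) > 1 and len(set(batch)) == 1:
            bad.append(start // batch_size)
    return bad


sorted_labels = [0] * 4 + [1] * 4
print(single_label_batches(sorted_labels, batch_size=4))
```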

11. Reduce batch size

One paper points out that very large batches can reduce the model's ability to generalize.

Addition 1. Use a standard data set (for example, MNIST, CIFAR-10)

Thanks to hengcherkeng for this one:

When testing a new network architecture or writing new code, use the standard datasets first instead of your own. There are already many reference results for them, and they are known to be “solvable”. You will not run into label noise, differences between the training and test distributions, an overly difficult dataset, and so on.

II. Data Normalization / Augmentation Problems

12. Standardize the features.

Did you standardize the input data to zero mean and unit variance?

13. Too much data augmentation?

Augmentation has a regularizing effect. If it is too strong, then together with other forms of regularization (L2 regularization, dropout, etc.) it can cause the network to underfit.

14. Verify the preprocessing of a pretrained model.

If you are using a pretrained model, make sure you apply the same normalization and preprocessing that was used when the model was trained. For example, should a pixel be in the range [0, 1], [-1, 1] or [0, 255]?

15. Check the preprocessing of the training / validation / test sets

CS231n points out a common pitfall:

“... any preprocessing statistics (e.g. the data mean) must only be computed on the training data, and then applied to the validation/test data. E.g. computing the mean and subtracting it from every image across the entire dataset and then splitting the data into train/val/test splits would be a mistake.”

Also check whether the preprocessing differs between samples or batches.
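The pitfall in the quote can be avoided by computing the statistics once on the training split and reusing them everywhere else. Here is a minimal sketch for a single scalar feature, using only the standard library; `fit_standardizer` and `standardize` are hypothetical names, and the numbers are made up.

```python
import statistics


def fit_standardizer(train_values):
    """Compute mean and standard deviation from the training split only."""
    mean = statistics.mean(train_values)
    std = statistics.pstdev(train_values)
    if std == 0:
        std = 1.0  # constant feature: avoid dividing by zero
    return mean, std


def standardize(values, mean, std):
    """Apply previously fitted statistics to any split."""
    return [(v - mean) / std for v in values]


train = [2.0, 4.0, 6.0, 8.0]
validation = [5.0, 11.0]

mean, std = fit_standardizer(train)          # statistics come from train only
train_scaled = standardize(train, mean, std)
validation_scaled = standardize(validation, mean, std)  # reuse, do not refit
```

The validation split will generally not end up with exactly zero mean and unit variance, and that is expected.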

III. Implementation issues

Source: https://xkcd.com/1838/

16. Try to solve a simpler version of the problem.

This will help determine where the problem is. For example, if the target output is the object class and coordinates, try restricting the prediction to only the object class.

17. Look for the correct loss "at chance"

Again from the excellent CS231n: initialize with small parameters and no regularization. For example, with 10 classes, “at chance” means the correct class is predicted 10% of the time, and since the Softmax loss is the negative log probability of the correct class, the expected loss is −ln(0.1) ≈ 2.30.

After that, try increasing the regularization strength, which should increase the loss.
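The chance-level value is easy to compute for any number of classes and compare against the first loss your training loop prints. A small sketch (`chance_level_loss` is a hypothetical helper):

```python
import math


def chance_level_loss(num_classes):
    """Softmax cross-entropy when every class gets probability 1 / num_classes."""
    return -math.log(1.0 / num_classes)


print(round(chance_level_loss(10), 3))  # 2.303
```

If the initial loss of an untrained, unregularized network is far from this value, the initialization or the loss computation deserves a closer look.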

18. Check your loss function

If you have implemented your own loss function, check it for bugs and add unit tests. More than once, a slightly wrong loss function of mine has subtly hurt network performance.

19. Check the input to the loss function

If you use a loss function provided by the framework, make sure you pass it what it expects. For example, in PyTorch I would mix up NLLLoss and CrossEntropyLoss: the former expects log-probabilities (the output of a log-softmax), while the latter takes raw, unnormalized scores and applies the log-softmax itself.
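The difference between the two kinds of input can be shown without any framework. The functions below are plain-Python stand-ins written for illustration, not PyTorch's implementations; they only mirror the convention that a negative log-likelihood loss expects log-probabilities while a cross-entropy loss expects raw scores.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of raw scores."""
    peak = max(logits)
    log_total = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return [x - log_total for x in logits]


def nll_loss(log_probs, target):
    """Negative log-likelihood; expects log-probabilities, not raw scores."""
    return -log_probs[target]


def cross_entropy_loss(logits, target):
    """Cross-entropy; expects raw scores and applies log-softmax itself."""
    return nll_loss(log_softmax(logits), target)


logits = [2.0, 0.5, -1.0]
target = 0
correct = cross_entropy_loss(logits, target)
same = nll_loss(log_softmax(logits), target)  # equivalent to the line above
wrong = nll_loss(logits, target)  # bug: raw scores where log-probabilities are expected
```

Here `wrong` is negative, which a real negative log-likelihood can never be. A negative classification loss is therefore a useful hint that the wrong kind of input is being passed.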

20. Adjust the loss weights

If your loss function is composed of several smaller loss functions, check that their magnitudes relative to each other are right. This may require testing different combinations of loss weights.

21. Monitor other metrics.

Sometimes the loss is not the best indicator of how well your neural network is learning. If possible, use other metrics, such as accuracy.

22. Check each custom layer.

Did you implement any of the network layers yourself? Double-check that they work as expected.

23. Verify that there are no “stuck” layers or variables.

Check whether you have unintentionally turned off gradient updates for some layers / variables.
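One framework-independent way to spot stuck parameters is to snapshot them before and after a training step and compare. The sketch below assumes each parameter has been flattened into a list of floats keyed by name; the names and values are invented for illustration.

```python
def unchanged_parameters(before, after, tolerance=0.0):
    """Return names of parameters whose values did not move between two snapshots."""
    stuck = []
    for name, old_values in before.items():
        new_values = after[name]
        if all(abs(n - o) <= tolerance for o, n in zip(old_values, new_values)):
            stuck.append(name)
    return stuck


# Hypothetical snapshots taken before and after one optimizer step.
before = {"conv1.weight": [0.10, -0.20], "fc.weight": [0.30, 0.40]}
after = {"conv1.weight": [0.10, -0.20], "fc.weight": [0.29, 0.41]}
print(unchanged_parameters(before, after))
```

A parameter that is reported here may be frozen on purpose, so treat the result as a list of things to check rather than a list of bugs.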

24. Increase network size

Perhaps the network does not have enough expressive power to capture the target function. Try adding layers, or more hidden units in the fully connected layers.

25. Look for hidden dimension errors.

If your input looks like (k,H,W)=(64,64,64), it is easy to miss errors caused by wrong dimensions. Use unusual numbers for the input dimensions (for example, a different prime number for each dimension) and check how they propagate through the network.
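The reason distinct primes help can be seen with a toy shape calculation. The sketch below models only shapes, not real tensors: an accidental swap of H and W leaves a (64, 64, 64) shape unchanged, so no shape assertion can catch it, while it visibly changes (3, 5, 7).

```python
def transpose_last_two(shape):
    """Shape after swapping the last two axes, e.g. (k, H, W) -> (k, W, H)."""
    k, h, w = shape
    return (k, w, h)


def shape_bug_is_visible(input_shape):
    """True if an accidental H/W swap changes the shape and can be caught by an assert."""
    return transpose_last_two(input_shape) != input_shape


print(shape_bug_is_visible((64, 64, 64)))  # False
print(shape_bug_is_visible((3, 5, 7)))     # True
```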

26. Explore gradient checking

If you implemented gradient descent by hand, gradient checking lets you verify that your backpropagation works as it should.
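Gradient checking compares a hand-written gradient with a numerical estimate. The following is a minimal sketch using central differences on a toy two-parameter function; the function, the step size `eps` and the helper names are all assumptions chosen for illustration.

```python
def numerical_gradient(f, params, eps=1e-5):
    """Central-difference estimate of the gradient of a scalar function f."""
    grad = []
    for i in range(len(params)):
        plus = list(params)
        minus = list(params)
        plus[i] += eps
        minus[i] -= eps
        grad.append((f(plus) - f(minus)) / (2 * eps))
    return grad


def max_relative_error(analytic, numeric):
    """Largest per-component relative difference between two gradients."""
    return max(abs(a - n) / max(abs(a), abs(n), 1e-12) for a, n in zip(analytic, numeric))


def loss(w):
    return w[0] ** 2 + 3.0 * w[0] * w[1]


def loss_gradient(w):
    # Hand-derived gradient of the loss above; this is the code under test.
    return [2.0 * w[0] + 3.0 * w[1], 3.0 * w[0]]


point = [1.5, -2.0]
error = max_relative_error(loss_gradient(point), numerical_gradient(loss, point))
print(f"max relative error: {error:.2e}")
```

A tiny relative error suggests the analytic gradient is right at that point; a large one points to a bug in the derivative. Checking at several random points gives more confidence than checking at one.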

IV. Learning problems

Source: http://carlvondrick.com/ihog/

27. Solve the problem for a really small data set.

Overfit the network on a small subset of the data and make sure it works. For example, train it on just 1-2 examples and see whether the network learns to tell them apart. Then move on to more samples per class.
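The same test can be tried on a model small enough to read in one go. This sketch fits a one-feature logistic regression to two examples with plain gradient descent; the model, the step count and the learning rate are assumptions chosen for illustration, not a recommendation.

```python
import math


def train_logistic(samples, labels, steps=2000, lr=0.5):
    """Fit a 1-feature logistic regression by gradient descent; return the final mean loss."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in zip(samples, labels):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            grad_w += (p - y) * x
            grad_b += p - y
        w -= lr * grad_w / len(samples)
        b -= lr * grad_b / len(samples)
    loss = 0.0
    for x, y in zip(samples, labels):
        p = 1.0 / (1.0 + math.exp(-(w * x + b)))
        loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return loss / len(samples)


# Two separable examples: a working training loop should drive the loss close to zero.
final_loss = train_logistic([-1.0, 1.0], [0, 1])
print(f"final loss on two examples: {final_loss:.4f}")
```

If the loss refuses to approach zero on a couple of examples, the problem is in the pipeline (data, labels, loss or update step) rather than in the amount of data. For instance, giving the same input two different labels keeps the loss of this sketch stuck at ln 2.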

28. Check the weight initialization.

If unsure, use Xavier or He initialization. In addition, your initialization may be leading you to a bad local minimum, so try a different initialization and see if it helps.

29. Change the hyperparameters

Maybe you are using a particularly bad set of hyperparameters. If feasible, try a grid search.
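A grid search simply tries every combination of candidate values and keeps the best one. The sketch below uses `itertools.product` for that; `toy_validation_loss` is a hypothetical stand-in for "train the model with these settings and return the validation loss", and the grid values are arbitrary.

```python
import itertools


def grid_search(evaluate, grid):
    """Try every combination in grid; return (best_score, best_params). Lower is better."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(**params)
        if best is None or score < best[0]:
            best = (score, params)
    return best


def toy_validation_loss(learning_rate, weight_decay):
    # Hypothetical stand-in for a full training run.
    return (learning_rate - 0.01) ** 2 + (weight_decay - 0.001) ** 2


grid = {
    "learning_rate": [0.1, 0.01, 0.001],
    "weight_decay": [0.0, 0.001, 0.01],
}
best_score, best_params = grid_search(toy_validation_loss, grid)
print(best_params)
```

The number of runs grows multiplicatively with every added hyperparameter, which is why the text says "if feasible".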

30. Reduce regularization

Too much regularization can cause the network to underfit badly. Reduce regularization such as dropout, batch norm, L2 regularization of weights / biases, etc. In the excellent course “Practical Deep Learning for Coders”, Jeremy Howard recommends getting rid of underfitting first. That is, first overfit the training data sufficiently, and only then deal with the overfitting.

31. Give it time

Maybe the network needs more time to learn before it starts making meaningful predictions. If the loss function is steadily decreasing, let it learn a little longer.

32. Switch between training mode and test mode.

In some frameworks, layers such as Batch Norm and Dropout behave differently during training and testing. Switching to the appropriate mode may help your network make correct predictions.

33. Visualize learning

- Track the activations, weights and updates of each layer. Make sure their magnitudes are in proportion. For example, the ratio of the magnitude of the updates to the magnitude of the parameters (weights and biases) should be about 1e-3.

- Consider a visualization library such as Tensorboard or Crayon. In a pinch, you can simply print the values of the weights / biases / activations.

- Watch out for layer activations with a mean much greater than zero. Try Batch Norm or ELU.

- Deeplearning4j points out what to look for in the histograms of weights and biases:

“For weights, these histograms should have an approximately Gaussian (normal) distribution, after some time. For biases, these histograms will generally start at 0, and will usually end up being approximately Gaussian (one exception to this is for LSTM). Keep an eye out for parameters that are diverging to +/- infinity. Keep an eye out for biases that become very large. This can sometimes occur in the output layer for classification if the distribution of classes is very imbalanced.”

- Check the layer updates; they should have an approximately normal distribution.
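The update-to-parameter ratio from the first item can be computed from two snapshots of a layer's parameters. This is a minimal sketch using L2 norms on flat lists of floats; the choice of norm and the sample numbers are assumptions for illustration.

```python
import math


def l2_norm(values):
    """Euclidean norm of a flat list of numbers."""
    return math.sqrt(sum(v * v for v in values))


def update_ratio(params_before, params_after):
    """Ratio of the update magnitude to the parameter magnitude for one layer."""
    update = [a - b for a, b in zip(params_after, params_before)]
    return l2_norm(update) / l2_norm(params_before)


# Hypothetical weights of one layer before and after a single optimizer step.
before_step = [0.5, -1.0, 2.0]
after_step = [0.5005, -1.001, 2.002]
ratio = update_ratio(before_step, after_step)
print(f"update / parameter ratio: {ratio:.1e}")
```

A ratio several orders of magnitude above or below the value mentioned in the list suggests the learning rate is too high or too low for that layer.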

34. Try a different optimizer.

Your choice of optimizer should not prevent the network from learning, unless you have picked particularly bad hyperparameters. But the right optimizer for the task can help you get the most training in the shortest time. The paper describing the algorithm you are using should specify the optimizer. If it does not, I prefer to use Adam or plain SGD.

Read the excellent article by Sebastian Ruder to learn more about gradient descent optimizers.

35. Exploding / vanishing gradients

- Check the layer updates, as very large values may indicate exploding gradients. Gradient clipping can help.

- Check the layer activations. Deeplearning4j gives an excellent guideline: “A good standard deviation for the activations is on the order of 0.5 to 2.0. Significantly outside of this range may indicate vanishing or exploding activations.”
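Gradient clipping, mentioned in the list above, comes in several variants. The sketch below shows one of them, clipping by global L2 norm, on a flat list of gradient values; the threshold `max_norm` is an arbitrary illustrative choice.

```python
import math


def clip_by_global_norm(gradients, max_norm):
    """Scale gradients down so their overall L2 norm is at most max_norm.

    Returns the (possibly scaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for g in gradients))
    if total_norm <= max_norm:
        return list(gradients), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in gradients], total_norm


clipped, norm_before = clip_by_global_norm([30.0, -40.0], max_norm=5.0)
print(norm_before, clipped)
```

Logging the norm before clipping is useful in itself: a value that keeps hitting the threshold is a sign that the gradients are exploding.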

36. Increase / decrease the learning rate.

A low learning rate makes the model converge very slowly.

A high learning rate quickly reduces the loss at first, but then has a hard time finding a good solution.

Experiment with your current learning rate by multiplying it by 0.1 or by 10.
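The effect of moving the learning rate by a factor of 10 in each direction can be seen even on a one-parameter toy problem. The sketch below minimizes f(w) = w² with gradient descent; the base rate of 0.1 and the step count are arbitrary assumptions.

```python
def loss_after_training(learning_rate, steps=50, start=1.0):
    """Minimize f(w) = w**2 with gradient descent and return the final loss."""
    w = start
    for _ in range(steps):
        w -= learning_rate * 2.0 * w  # the gradient of w**2 is 2*w
    return w * w


base_rate = 0.1
for rate in (base_rate / 10, base_rate, base_rate * 10):
    print(f"lr={rate:g}: final loss {loss_after_training(rate):.3g}")
```

On this toy problem the smallest rate is still far from the minimum after 50 steps, the middle one converges, and the largest one bounces between w = 1 and w = -1 without making progress.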

37. Eliminating NaNs

NaNs (Not a Number) are a much bigger problem when training RNNs (from what I hear). Some ways to get rid of them:

- Reduce the learning rate, especially if NaNs appear in the first 100 iterations.

- NaNs can arise from division by zero, or from taking the natural logarithm of zero or of a negative number.

- Russell Stewart offers good advice on what to do when NaNs appear.

- Try evaluating the network layer by layer to see where the NaNs appear.
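The layer-by-layer search from the last item can be sketched as a loop that stops at the first layer whose output contains a NaN. The "layers" here are plain Python functions on lists of floats, invented for illustration; in a real framework the same idea is applied to the outputs of the actual layers.

```python
import math


def first_nan_layer(layers, inputs):
    """Run named layers in order; return the name of the first whose output contains NaN."""
    values = inputs
    for name, layer in layers:
        values = layer(values)
        if any(math.isnan(v) for v in values):
            return name
    return None


def log_layer(values):
    # math.log raises on non-positive input; return NaN instead so the search can continue.
    return [math.log(v) if v > 0 else float("nan") for v in values]


layers = [
    ("scale", lambda xs: [2.0 * x for x in xs]),
    ("shift", lambda xs: [x - 1.0 for x in xs]),
    ("log", log_layer),
    ("square", lambda xs: [x * x for x in xs]),
]
culprit = first_nan_layer(layers, [0.25, 1.0])
print(culprit)
```

In this toy pipeline the shift produces a negative number and the logarithm that follows turns it into a NaN, which matches the second item in the list above.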

Sources

cs231n.github.io/neural-networks-3

russellsstewart.com/notes/0.html

stackoverflow.com/questions/41488279/neural-network-always-predicts-the-same-class

deeplearning4j.org/visualization

www.reddit.com/r/MachineLearning/comments/46b8dz/what_does_debugging_a_deep_net_look_like

www.researchgate.net/post/why_the_prediction_or_the_output_of_neural_network_does_not_change_during_the_test_phase

book.caltech.edu/bookforum/showthread.php?t=4113

gab41.lab41.org/some-tips-for-debugging-deep-learning-3f69e56ea134

www.quora.com/How-do-I-debug-an-artificial-neural-network-algorithm


Source: https://habr.com/ru/post/334944/
