Archive this: how file system archiving works with Commvault

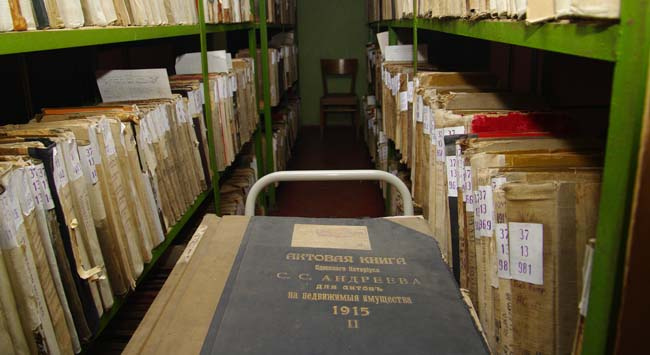

Long-term archiving used to be the more common kind: files that had to be kept for several years by law were written to tape, and the tapes were taken to a special storage facility if needed. On special occasions, such as an audit, the tapes were brought back to the office and the required files were restored from them. With the availability of disk storage, it became possible to archive not only critically important accounting or legal documents, but also ordinary files that you cannot quite delete (they might suddenly come in handy) yet do not want to keep on fast storage.

Such archiving usually works as follows: archiving rules are defined (based on the date a file was last accessed, modified, or created), and all files that match these rules are automatically moved from production storage to an archive on slower disks.

Today I want to talk about exactly this kind of archiving, using Commvault as an example.


A disclaimer right away: archiving is not backup

As you might guess, the main benefit of archiving is saving space on storage. Quarterly reports that are needed only during an audit, photos from a past New Year's corporate party: in general, everything that is not needed day to day moves to the archive instead of sitting on primary storage as ballast. Since fewer files remain, backups of production get smaller, which means you need less space for backups.

As a rule, archiving licenses are cheaper than backup licenses.

Example: say a backup license costs $100 per 1 TB and an archiving license costs $70. A client has a server with 5 TB of data, backs all of it up, and pays $500 per month. After moving 4 TB to the archive, only 1 TB is left to back up, i.e. $100 per month. For the archive the client pays 4 TB x $70 = $280. As a result, instead of the initial $500, the client pays $380, saving $120 per month. Multiplied by 12, that is $1,440 less per year.
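The same arithmetic as a small Python sketch, in case you want to plug in your own volumes. The rates are the illustrative ones from the example above, not real Commvault prices, and the function name is made up for this illustration.

```python
def monthly_cost(backup_tb, archive_tb, backup_rate=100, archive_rate=70):
    """Monthly license cost in dollars for a given split of data.

    The default rates are the illustrative figures from the example,
    not actual vendor pricing.
    """
    return backup_tb * backup_rate + archive_tb * archive_rate

before = monthly_cost(backup_tb=5, archive_tb=0)  # everything is backed up
after = monthly_cost(backup_tb=1, archive_tb=4)   # 4 TB moved to the archive
monthly_saving = before - after
yearly_saving = monthly_saving * 12

print(before, after, monthly_saving, yearly_saving)  # 500 380 120 1440
```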

You can go further and add the value of the space freed on production storage after the move to the archive, as well as the savings from deduplication, which also works for archiving. This pleases many people so much that a bright idea arises: why not replace the more expensive backup with archiving? And this is where the problems begin.

Archiving is not backup (for what else is not backup, read here). It differs from backup in that it does not support versioning at all: the file stays in the archive in exactly the form in which it arrived. Second, if something happens to the archive storage, then without a backup or a copy on a second site the archive will be lost.

In fact, they solve two different problems: archiving optimizes space on production storage, backup protects against data loss.

What Commvault has for archiving

In Commvault, the same agent handles both backup and archiving: OnePass. Within a single job, part of the data goes to backup, and the part that matches the archiving rules is archived. So if you already back up data with Commvault and decide to try archiving, no additional agents need to be installed.

OnePass works as follows:

1. If you already have full and incremental file backups, it is recommended to run a synthetic full backup. In this case, the backup is assembled from the last full backup and all subsequent incremental and/or differential copies. The source server's resources are not involved.

2. After the backup completes, OnePass identifies the files that match the archiving rules and moves them to the archive (allocated space on the same storage or separate archive storage, whichever you choose).

The criteria by which OnePass decides to send the file to the archive are as follows:

- when to start moving files to the archive (depending on how much free disk space remains);

- when the file was last opened;

- when the file was last edited;

- file creation time;

- file size.

This is where all of it is configured.

3. Files selected for archiving are either deleted from production completely or replaced with stubs.

In the second case, little changes for the end user. If the accountant, Marya Ivanovna, needs to show an auditor a report from five years ago, she simply clicks the stub, and the file moves back to production and opens as usual. Small files are recalled from the archive quickly: a Word file under 1 MB takes a few seconds. A video will take longer.

The recalled file stays on production until it matches the archiving rules again. Until then, it is included in backup jobs.

The files marked with crosses are the stubs.

As with backup, the administrator can limit the number of recovery threads (throttling) so that the system does not buckle under a large number of requests. You can limit the number of files recalled simultaneously, set intervals between recalls, and so on.
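To make the idea of throttling concrete, here is a minimal Python sketch that caps the number of simultaneous recalls with a semaphore. It is only an illustration of the general technique, not how Commvault implements it: `recall`, `MAX_PARALLEL_RECALLS`, and the file names are all hypothetical, and the sleep stands in for the actual copy from the archive.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_PARALLEL_RECALLS = 2  # hypothetical limit chosen by the administrator

_slots = threading.BoundedSemaphore(MAX_PARALLEL_RECALLS)
_lock = threading.Lock()
_active = 0
peak_active = 0  # highest number of recalls seen running at once

def recall(path):
    """Pretend to recall one file from the archive, respecting the limit."""
    global _active, peak_active
    with _slots:  # blocks while MAX_PARALLEL_RECALLS recalls are in flight
        with _lock:
            _active += 1
            peak_active = max(peak_active, _active)
        time.sleep(0.01)  # stand-in for copying data back to production
        with _lock:
            _active -= 1
    return path

requests = [f"file{i}.docx" for i in range(10)]
with ThreadPoolExecutor(max_workers=8) as pool:
    recalled = list(pool.map(recall, requests))

print(len(recalled), "files recalled, at most", peak_active, "at a time")
```

Even though eight worker threads accept requests, no more than two recalls touch the archive at any moment; the rest wait their turn.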

Files sent to the archive can be encrypted and stored in that form.

The adventures of unclaimed files do not end once they reach archive storage. You can also configure rules by which the archived data itself is deleted over time (a retention policy). For example, reports sit in the archive for the legally required three years and are then removed automatically.
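A retention rule of this kind boils down to a date comparison. The sketch below assumes a simple mapping from file name to the date it was archived; the names, dates, and the `expired` helper are invented for illustration and have nothing to do with Commvault's internal catalog.

```python
from datetime import date, timedelta

# "Three years" approximated as 3 * 365 days; leap days are ignored here.
RETENTION = timedelta(days=3 * 365)

def expired(archived_on, today, retention=RETENTION):
    """True if the item has been in the archive for the full retention period."""
    return today - archived_on >= retention

# Hypothetical archive catalog: file name -> date it was archived.
archive = {
    "report_2019.xlsx": date(2019, 4, 1),
    "report_2023.xlsx": date(2023, 4, 1),
}

today = date(2024, 1, 15)
to_prune = sorted(name for name, d in archive.items() if expired(d, today))
print(to_prune)  # ['report_2019.xlsx']
```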

On a test disk with copies of HR documents plus videos and photos from corporate events, I applied the following archiving rules: files older than 0 days, unchanged for more than 7 days, and larger than 1 MB. The result: before archiving, production held 391 GB of data; afterwards, only 1 GB.
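The rules from this test can be read as a simple predicate over file metadata. The following Python sketch assumes that all conditions must hold at the same time, which is how the test above is described; `FileInfo`, `ArchiveRule`, and the sample files are made up for illustration and are not Commvault objects.

```python
from dataclasses import dataclass

DAY = 24 * 60 * 60
MB = 1024 * 1024

@dataclass(frozen=True)
class FileInfo:
    path: str
    size: int        # bytes
    created: float   # Unix timestamp
    modified: float  # Unix timestamp

@dataclass(frozen=True)
class ArchiveRule:
    # Defaults mirror the test: older than 0 days, unchanged > 7 days, > 1 MB.
    min_age_days: int = 0
    min_unmodified_days: int = 7
    min_size: int = 1 * MB

def is_archivable(f, rule, now):
    """A file qualifies only if it satisfies every condition of the rule."""
    age_days = (now - f.created) / DAY
    unmodified_days = (now - f.modified) / DAY
    return (age_days > rule.min_age_days
            and unmodified_days > rule.min_unmodified_days
            and f.size > rule.min_size)

now = 1_000 * DAY  # fixed "current time" so the example is reproducible
files = [
    FileInfo("party.mp4", 700 * MB, now - 400 * DAY, now - 400 * DAY),
    FileInfo("report.docx", 200 * 1024, now - 90 * DAY, now - 90 * DAY),  # too small
    FileInfo("draft.pptx", 5 * MB, now - 30 * DAY, now - 2 * DAY),        # edited recently
]

to_archive = [f.path for f in files if is_archivable(f, ArchiveRule(), now)]
print(to_archive)  # ['party.mp4']
```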

How to understand what to archive

To work out which values to set for each parameter, OnePass has the System Discovery and Archive Analyzer Tool (available to Commvault users). It scans files by time of last modification, access, and creation, as well as by size. All these raw statistics can then be sent to Commvault, and you get back graphs and charts that show clearly which archiving rules make sense. Admittedly, not the most convenient scheme, but it makes clear which direction to dig in.

The graph shows statistics on how long ago files were last modified. Screenshot from the Commvault documentation.

And here are statistics on the date files were last accessed. Screenshot from the Commvault documentation.

Reports are also produced on file size and format. But the most important one is the File Level Analytics Report. It suggests archiving rules and shows how much space you would save by applying them.

The report promises that if all files larger than 10 MB that have not changed for more than 90 days are sent to the archive, 3.85 TB will be freed. Do not pay attention to the savings in money: for some reason the report prices 1 GB of disk at an astronomical 10 dollars.
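If you just want a rough feel for the data before running the vendor tool, a similar first-pass statistic can be gathered with a few lines of Python. This is a hand-rolled sketch, not the Archive Analyzer: it only groups total file size by time since last modification, and the bucket boundaries and function names are arbitrary choices for illustration.

```python
import os
import time
from collections import Counter

DAY = 24 * 60 * 60

# Upper bound in days -> label; the boundaries are arbitrary for this sketch.
BUCKETS = [(30, "<30d"), (90, "30-90d"), (365, "90-365d")]

def bucket(age_days):
    """Map a file age in days to a human-readable bucket label."""
    for limit, label in BUCKETS:
        if age_days < limit:
            return label
    return ">=365d"

def size_by_age(root, now=None):
    """Total bytes under root, grouped by time since last modification."""
    now = time.time() if now is None else now
    totals = Counter()
    for dirpath, _dirs, names in os.walk(root):
        for name in names:
            st = os.stat(os.path.join(dirpath, name))
            totals[bucket((now - st.st_mtime) / DAY)] += st.st_size
    return totals
```

Calling `size_by_age` on a share returns a counter such as bytes per age bucket; if most of the volume sits in the oldest buckets, a rule based on modification time is likely to free a lot of space.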

Source: https://habr.com/ru/post/334824/
