As I made the fastest resize of images. Part 3, fixed-point numbers

I continue to talk in detail about optimization techniques that allowed me to write the fastest image resize on modern x86 processors. This time it will be about converting floating-point calculations to calculations with integers. First, I will tell you a little bit of theory of how this works. Then go back to the real code, including the SIMD version.

In the previous parts:

→ Part 0

→ Part 1, general optimizations

→ Part 2, SIMD

Integers and Floating Points

I think everyone knows that there are two basic ways to represent numbers in computer memory. Here are their main features:

Whole numbers

- Store exact value

- Have a relatively small range of values.

- The difference between two adjacent values is always 1

- Convenient for storage

- 1 - 0.1 = 0

Floating point numbers

- Store approximate value with a certain accuracy

- The range of values is very large

- The difference between neighboring values may be greater than the number of photons in the universe.

- Convenient for intermediate calculations.

- 1 - 0.1 = 0.900000000000000022204460 ...

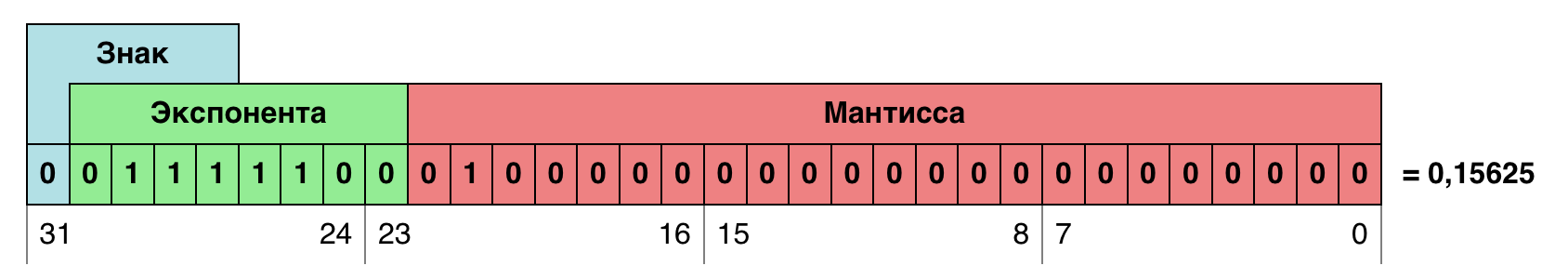

Numbers with a floating point are stored in memory in the form of two values - mantissa and exponent. The mantissa is the bits that store the value itself (almost as whole numbers), and the exponent says how many digits the mantissa value is shifted. To find out the true value of a number, you need to multiply the mantissa by the bit depth of the exponent: m · 2ᵉ. The digit capacity in this case is 2, since binary numeral system.

It seems that this system is quite complex compared to the integer representation of a number. However, on modern processors, most operations (such as multiplication and addition) on floating-point numbers are performed in the same number of cycles as operations on integers. The complexity of the operations only leads to an increase in the number of transistors in the processors, but not the number of cycles. But besides the speed of the operations themselves, there are several other factors that need to be considered in the context of performance.

As mentioned above, integers are more often used for storage, and floating point for calculations. This means that you often need to convert from one view to another. This is of course fast, but depending on the task it can affect performance. At the end of the first part, I described a case in which such conversion was the most expensive calculation operation.

The minimum size of floating-point types for the x86 architecture is 32 bits. But for storing integers, you can often get by with 16 bits, and in some cases eight. This is not critical for scalar computing, when the processor at one time is busy executing one instruction. However, in the case of vector calculations, it allows two or four times more operations per clock cycle.

- At the end of the second part , it was clear that when the AVX2 code is running, the processor slows down the clock frequency. According to my observations, this happens only when working with floating point numbers. If integer AVX2 commands are used, the processor continues to operate at maximum frequency. Of course, this is a rather specific point, the behavior can easily change from generation to generation, and depending on the purpose of the processor. However, the output does not change: floating-point numbers may run slower than integers, even with the same performance per clock.

Fixed point

It can be seen that the integers have the potential for higher performance and you can try to translate arithmetic on them. But, of course, replacing float with int everywhere will not work. When resizing, many calculations are carried out in the range from 0 to 1. That is, in integer representation, it will be just zero.

Here numbers with a fixed point come to the rescue. Strictly speaking, integers are also fixed-point numbers, the point of which is fixed after the youngest binary digit. But it is possible to speculatively move it, say 8 bits, and assume that the unit is actually 1/256. Then 256 is one, 512 is a two, and 384 is 1.5. What does this give? In this form, you can record not only the integer part of the number, but also the real part. A fairly common example of fixed-point numbers is the currency data type, which some programming languages have. It holds an integer amount of kopecks or cents, and to get rubles or dollars, you need to divide the value by one hundred.

So again: fixed-point numbers are numbers multiplied by some constant to increase the accuracy of calculations. Unlike floating-point numbers, this constant is not stored in the number itself, but can be hard-wired into the implementation of the algorithm, or be calculated in the process.

In general, working with fixed-point numbers is not difficult, but there are a few things that need to be remembered.

The range of values decreases with increasing accuracy of the fractional part. If you move a fixed point one bit to the left, the accuracy will double, but the range of values will be halved. You should always try to move the point as far as possible to the left, but avoid overflows. Therefore, before starting to transfer calculations to a fixed point, you need to determine the range of maximum values that will be involved in the calculations. For example, if the range of values is from -128 to 384, then the number of bits needed to represent the values (including the sign) will be 10. If the 16-bit data type is used, then only 6 bits will remain for accuracy.

The addition and subtraction operations for fixed-point numbers work as usual. Multiplication by an integer too.

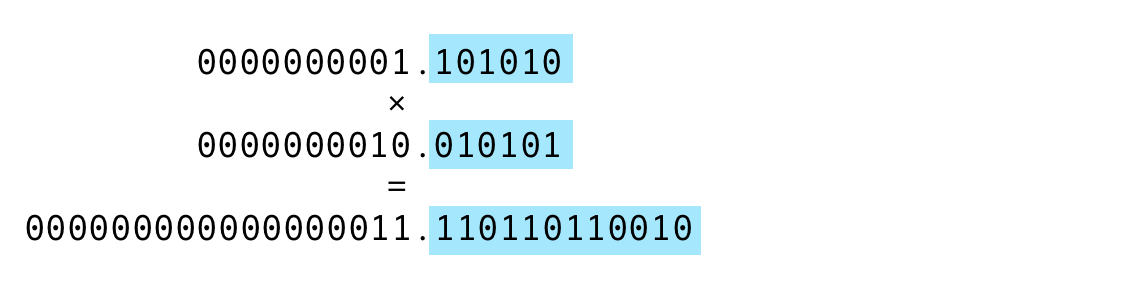

- When multiplying two numbers with a fixed point, the number of digits responsible for accuracy doubles. In the same way as the number of digits responsible for storing the whole part doubles. That is, if after multiplication you need to get the original accuracy, the result will have to be shifted by the number of bits of precision. Or you can not move, if it will be more convenient to read, but you have to keep this fact in mind.

Counting accuracy

Before translating the code into integers, it would be nice to calculate how many bits can be allocated for accuracy. Moreover, this calculation makes sense for each operation. And it’s better to start from the end:

float ss, ss0, ss1; for (xx = 0; xx < imOut->xsize; xx++) { ss0 = ss1 = ss2 = 0.5; for (y = ymin; y < ymax; y++) { ss0 = ss0 + ((UINT8) imIn->image[y][xx*4+0]) * k[y-ymin]; ss1 = ss1 + ((UINT8) imIn->image[y][xx*4+1]) * k[y-ymin]; ss2 = ss2 + ((UINT8) imIn->image[y][xx*4+2]) * k[y-ymin]; } imOut->image[yy][xx*4+0] = clip8(ss0); imOut->image[yy][xx*4+1] = clip8(ss1); imOut->image[yy][xx*4+2] = clip8(ss2); } The ss0 - ss2 contain the sum of the products of the coefficients per pixel. Pixels have values in the range [0, 255], and about the sum of the coefficients it is known that it is equal to one. That is, the final value of ss0 - ss2 will also be in the range [0, 255]. But this is only the final! In general, some coefficients may be negative, and, as a result, the sum of positive coefficients may be greater than one (look at the filter charts from the zero article). Therefore, intermediate values may slightly fall outside the range [0,255]. One bit for negative numbers and one more for possible overflows above would be enough in this case. Total to store the value will need 10 bits [-512,511]. Since it is logical to make the batteries at least 32-bit, then the storage of the accuracy of the batteries (let's call it PRECISION_BITS ) is 22 bits.

It remains to deal with the multiplication of pixels by the coefficients. I have already said that when multiplying a number with a fixed point by an integer, no additional transformations are necessary. In this case, the integer is the pixel values. This means that the coefficients of accuracy can be the same as that of batteries - 22 bits.

Fixed-point scalar computing

This is surprising, but in the code above you need to change only one line to translate it to work with a fixed point. At the very beginning, the batteries are assigned a value of 0.5. In the new number system this will correspond to the value 1 << (PRECISION_BITS - 1) . That is, the unit is shifted one digit less than the accuracy. 0.5 new units.

int ss, ss0, ss1; for (xx = 0; xx < imOut->xsize; xx++) { ss0 = ss1 = ss2 = 1 << (PRECISION_BITS -1); for (y = ymin; y < ymax; y++) { ss0 = ss0 + ((UINT8) imIn->image[y][xx*4+0]) * k[y-ymin]; ss1 = ss1 + ((UINT8) imIn->image[y][xx*4+1]) * k[y-ymin]; ss2 = ss2 + ((UINT8) imIn->image[y][xx*4+2]) * k[y-ymin]; } imOut->image[yy][xx*4+0] = clip8(ss0); imOut->image[yy][xx*4+1] = clip8(ss1); imOut->image[yy][xx*4+2] = clip8(ss2); } All other calculations remain unchanged, which indirectly indicates that we are on the right track. After all, a change in the concept did not introduce difficulties in implementation, but you can still count on performance gains.

But the function clip8 , limiting the final pixel value in the range [0, 255], will change a lot. It was:

static inline UINT8 clip8(float in) { int out = (int) in; if (out >= 255) return 255; if (out <= 0) return 0; return (UINT8) out; } It became:

static inline UINT8 clip8(int in) { if (in >= (1 << PRECISION_BITS << 8)) return 255; if (in <= 0) return 0; return (UINT8) (in >> PRECISION_BITS); } First, the accepted value changes - now it's a 32-bit int. Secondly, it is not immediately cast to the integer type (earlier it was in the first line). Now, instead, you can compare in with the value 1 << PRECISION_BITS << 8 . This value is 256 in our number system with a fixed point, because it is shifted by the number of bits in the fractional part and another 8 bits. And as you know, 1 << 8 - this is just 256. And already at the end, if all the comparisons gave a negative result, the value is really reduced to an ordinary whole, without any points. The result is the usual shift by the number of bits of precision.

Now to a fixed point you need to bring the coefficients. Let me remind you that initially the coefficients are floating point numbers from -1 to 1. And the sum of all coefficients for calculating one pixel is equal to one. I am sure that there is no point in using integer arithmetic to actually calculate the coefficients. First, the calculation of coefficients takes much less time than their use. Secondly, trigonometric functions are used inside some filters. Therefore, it seems to me correct to calculate the floating point coefficients, and only then convert them to a fixed one, multiplying by (1 << PRECISION_BITS) .

for (x = 0; x < xsize * kmax; x++) { kk[x] = (int) (prekk[x] * (1 << PRECISION_BITS)); } What does this give? Here are the latest results for scalar calculations that were obtained on floating point numbers:

Scale 2560×1600 RGB image to 320x200 bil 0.03009 s 136.10 Mpx/s to 320x200 bic 0.05187 s 78.97 Mpx/s to 320x200 lzs 0.08113 s 50.49 Mpx/s to 2048x1280 bil 0.14017 s 29.22 Mpx/s to 2048x1280 bic 0.17750 s 23.08 Mpx/s to 2048x1280 lzs 0.22597 s 18.13 Mpx/s to 5478x3424 bil 0.58726 s 6.97 Mpx/s to 5478x3424 bic 0.74648 s 5.49 Mpx/s to 5478x3424 lzs 0.90867 s 4.51 Mpx/s Result for commit 57e8925 .

Here are the results for a fixed point:

Scale 2560×1600 RGB image to 320x200 bil 0.02079 s 196.99 Mpx/s 44.7 % to 320x200 bic 0.03459 s 118.41 Mpx/s 50.0 % to 320x200 lzs 0.05649 s 72.50 Mpx/s 43.6 % to 2048x1280 bil 0.10483 s 39.07 Mpx/s 33.7 % to 2048x1280 bic 0.13362 s 30.66 Mpx/s 32.8 % to 2048x1280 lzs 0.17210 s 23.80 Mpx/s 31.3 % to 5478x3424 bil 0.46706 s 8.77 Mpx/s 25.7 % to 5478x3424 bic 0.59492 s 6.88 Mpx/s 25.5 % to 5478x3424 lzs 0.72819 s 5.62 Mpx/s 24.8 % Result for commit 15d0573 .

As you can see, everything was not in vain and the increase is very serious. Most of all, it is noticeable on a strong decrease, where there were more operations to convert pixel values.

Fixed Point SIMD

Translation of a SIMD code from the third part to calculations with a fixed point can be divided into 4 stages:

- SSE4 vertical pass translation

- horizontal passage translation SSE4

- AVX2 vertical pass translation

- horizontal pass translation AVX2

These stages will be pretty monotonous, so it makes sense to carefully consider only one. Here is an example of a vertical passage SSE4 on floating point numbers.

ImagingResampleVerticalConvolution8u(UINT32 *lineOut, Imaging imIn, int ymin, int ymax, float *k) { int y, xx = 0; for (; xx < imIn->xsize; xx++) { __m128 sss = _mm_set1_ps(0.5); for (y = ymin; y < ymax; y++) { __m128i pix = _mm_cvtepu8_epi32(*(__m128i *) &imIn->image32[y][xx]); __m128 mmk = _mm_set1_ps(k[y - ymin]); __m128 mul = _mm_mul_ps(_mm_cvtepi32_ps(pix), mmk); sss = _mm_add_ps(sss, mul); } __m128i ssi = _mm_cvtps_epi32(sss); ssi = _mm_packs_epi32(ssi, ssi); lineOut[xx] = _mm_cvtsi128_si32(_mm_packus_epi16(ssi, ssi)); } } The data type __m128 stores 4 floating point numbers. It is no longer needed, it should be replaced with __m128i . The analogue of the _mm_set1_ps function is _mm_set1_epi32 . The _mm_cvtepi32_ps and _mm_cvtps_epi32 conversion _mm_cvtepi32_ps _mm_cvtps_epi32 no longer needed; instead, the result at the end will need to be shifted to PRECISION_BITS to the right. Difficulties can arise only with the _mm_mul_ps function, because there is no direct analogue for it, but if you search, _mm_mullo_epi32 is _mm_mullo_epi32 . The fact is that multiplying two 32-bit numbers gives a 64-bit number. Lo means that the lower 32 bits of the result will be returned, which is exactly what is needed. Completely all code will look like this:

ImagingResampleVerticalConvolution8u(UINT32 *lineOut, Imaging imIn, int ymin, int ymax, int *intk) { int y, xx = 0; for (; xx < imIn->xsize; xx++) { __m128i sss = _mm_set1_epi32(1 << (PRECISION_BITS -1)); for (y = ymin; y < ymax; y++) { __m128i pix = _mm_cvtepu8_epi32(*(__m128i *) &imIn->image32[y][xx]); __m128i mmk = _mm_set1_epi32(intk[y - ymin]); __m128i mul = _mm_mullo_epi32(pix, mmk); sss = _mm_add_epi32(sss, mul); } sss = _mm_srai_epi32(sss, PRECISION_BITS); sss = _mm_packs_epi32(sss, sss); lineOut[xx] = _mm_cvtsi128_si32(_mm_packus_epi16(sss, sss)); } } Now you can compare the results obtained for the SSE4 version on floating point numbers:

Scale 2560×1600 RGB image to 320x200 bil 0.01151 s 355.87 Mpx/s to 320x200 bic 0.02005 s 204.27 Mpx/s to 320x200 lzs 0.03421 s 119.73 Mpx/s to 2048x1280 bil 0.04450 s 92.05 Mpx/s to 2048x1280 bic 0.05951 s 68.83 Mpx/s to 2048x1280 lzs 0.07804 s 52.49 Mpx/s to 5478x3424 bil 0.18615 s 22.00 Mpx/s to 5478x3424 bic 0.24039 s 17.04 Mpx/s to 5478x3424 lzs 0.30674 s 13.35 Mpx/s Result for commit 8d0412b .

With the results obtained for SS4 on fixed-point numbers:

Scale 2560×1600 RGB image to 320x200 bil 0.01253 s 326.82 Mpx/s -8.1 % to 320x200 bic 0.02239 s 182.94 Mpx/s -10.5 % to 320x200 lzs 0.03663 s 111.83 Mpx/s -6.6 % to 2048x1280 bil 0.04712 s 86.92 Mpx/s -5.6 % to 2048x1280 bic 0.06731 s 60.86 Mpx/s -11.6 % to 2048x1280 lzs 0.08176 s 50.10 Mpx/s -4.5 % to 5478x3424 bil 0.19010 s 21.55 Mpx/s -2.1 % to 5478x3424 bic 0.25013 s 16.38 Mpx/s -3.9 % to 5478x3424 lzs 0.31413 s 13.04 Mpx/s -2.4 % Result for commit 7d8df66 .

And here I was in for a surprise. For a long time I tried to understand what went wrong. At some point I went to watch the timings of each instruction that was used in the cycle and the solution was exactly here.

Now in the Intel Intrinsics Guide, this is not visible, because it is constantly updated and from time to time it deletes data for old processors from it. But when I dealt with this issue, the _mm_mullo_epi32 operation had the following timings table:

Architecture Latency Throughput Broadwell 10 2 Haswell 10 2 Ivy Bridge 5 1 Now compare with the timings of a similar _mm_mul_ps for floating point numbers:

Architecture Latency Throughput Broadwell 3 0.5 Haswell 5 0.5 Ivy Bridge 5 1 Here you can see that, starting from the architecture of Haswell, Intel scored on the vector multiplication of whole 32-bit numbers. And it is exactly whole and exactly 32-bit ones, because all other variants of multiplications continue to grow faster from architecture to architecture.

Interestingly, for the AVX2 version of the code, this is not observed and the negative effect of increased delay does not prevail over the positive effect of switching to fixed-point calculations. And the performance gain for fixed-point numbers is ≈10%. There are two reasons for this:

- As I said at the very beginning, the processor does not slow down the frequency when it executes integer AVX2 instructions, unlike AVX2 floating point instructions.

- The AVX2 version processes 2 times more data at a time, and therefore the multiplication instruction is performed 2 times less often for the same amount of data. This means that the negative effect of large delays is 2 times less noticeable.

The Quest for the Holy Grail

I prepared integer calculations for Pillow version 3.3. And I tried to release the Pillow and Pillow-SIMD versions more or less synchronously and with the same improvements. And it was very disappointing that the transition to integers gave a tangible increase in Pillow, but it did not give much or did not give any in Pillow-SIMD. Then, in the release, I was able to slightly compensate for the lag by deploying cycles. This made it possible to improve the instruction pipeline and slightly eliminated the effect of slow multiplication. But about this I would like to tell in the last article of this series.

If you look at how the performance of the regular Pillow version has changed, it will be seen that Pillow 3.3 was a good gain due to integer calculations. And in Pillow 3.4, everything remained almost at the same level.

Whereas for Pillow-SIMD the situation is reversed: version 3.3 was almost slower than the previous one. But in 3.4 there was a significant leap, which allows me to say now that Pillow-SIMD is currently the fastest implementation of resizing on a CPU.

To achieve such improvements in Pillow-SIMD 3.4, it was necessary to get rid of the vector 32-bit multiplication of integers. But how? Translate all calculations to 16 bits? It is easy to calculate that in this case, the coefficients ( PRECISION_BITS ) would be 16−8−2 = 6 bits, that is only 64 values. In practice, much less, because the sum of all coefficients must be equal to one (that is, 64). And the number of coefficients depends on the size of the filter window and the scale of reduction (details in part 0 ). When the image is reduced 10 times with the Lanczos filter, the coefficients themselves will be sixty. The calculations in 16 bits were obviously not accurate enough, you had to think of something else.

The thought gave me no peace: for some reason, Intel decided to cut down the multiplication in such a strange way. And other developers do not lament about this on the Internet, but continue to successfully solve their problems. Maybe 32-bit multiplication is really not necessary for working with graphics, I just don’t see how to do without it.

I watched _mm_mullo_epi16 . It was possible to try cleverly select the bit width of the coefficients so that the result of the convolution was 32-bit, but the result of multiplying the pixel value by the coefficient remained within 16 bits. Then the accuracy of the coefficient itself would have remained 7 bits (one bit went to the sign). It was significantly better than 6 bits for the sum of all coefficients. I was going to implement it when I accidentally stumbled upon another solution.

What if there was a special instruction for the bundles?

Imagine you are solving a problem, considering the tools that you have from different angles, and then accidentally stumble upon one that was specifically devised for this task.

What is the difficulty of multiplication? Storage of the result of multiplication requires two times more bits than operands. Therefore, we have to choose: the upper or lower part of the result must be obtained. Precision suffers from this, and only a fraction of the significant bits are used by the operands. What if you could get the whole result of multiplication? Then it would take twice as many bits, that is, for example, two registers with the result. But what if, after multiplying, add these two registers? All the same, you will need to add the result of multiplication, this is the meaning of convolution. Then an instruction would be obtained that takes X pairs of operands to multiply, multiplies them, gets X products, then adds adjacent ones and gives X / 2 sums of products at the output. And, oddly enough, this instruction was found already in SSE2! It is called _mm_madd_epi16 . And the delay in it is two times lower than that of _mm_mullo_epi32 , and it performs 3 times more operations.

Once again: there are two registers at the input, each with eight 16-bit signed integers. These numbers are multiplied in pairs, somewhere in the mind eight eight-bit multiplication results are stored. Neighboring multiplication results are added together and four signed 32-bit numbers are obtained. Eight multiplications and four additions with one quick instruction instead of four multiplications slow. Virtually no loss of accuracy.

The only problem is that the adjacent results of multiplications add up, and for pixels these will be adjacent channels. If you apply the command to the forehead, the red channel of the first pixel will fold with the green of the first pixel, and the blue of the first pixel will fold with the alpha channel. The same for the second pixel. And for bundles you need to add the red channel of the first pixel with the red channel of the second and so on. That is, the values need to be slightly mixed before applying this instruction.

Transition to 16-bit coefficients

Unfortunately, simply replacing the int type with INT16 not enough: the coefficients can go beyond this type. At the beginning, I said that the exponent (the accuracy of a number or the position of a virtual fixed point, as you wish) can be set both in the algorithm itself and calculated in the process. And here is exactly the case when, depending on different input data, you will need to select different exponents. And for this calculation will need the value of the maximum of the coefficients.

#define MAX_COEFS_PRECISION (16 - 1) #define PRECISION_BITS (32 - 8 - 2) coefs_precision = 0; while ( maxkk < (1 << (MAX_COEFS_PRECISION-1)) && (coefs_precision < PRECISION_BITS) ) { maxkk *= 2; coefs_precision += 1; }; That is, it is necessary to ensure that, on the one hand, the value of the maximum coefficient does not exceed 16 bits (because it will be presented in a 16-bit form), and on the other, the value of the whole convolution does not exceed 32 bits (this condition is satisfied coefs_precision < PRECISION_BITS ).

It seems to me that I already tired enough with the code, so I will not analyze what exactly needs to be changed and how to mix the pixels so that you can apply the _mm_madd_epi16 instruction. Those who are interested can, as always, see the changes in the githaba commits and ask questions in the comments. I will give the results of the SSE4 version on 16-bit coefficients relative to the SSE4 version on floating point numbers:

Scale 2560×1600 RGB image to 320x200 bil 0,00844 s 485.20 Mpx/s 36,4 % to 320x200 bic 0,01289 s 317.79 Mpx/s 55,5 % to 320x200 lzs 0,01903 s 215.24 Mpx/s 79,8 % to 2048x1280 bil 0,04481 s 91.41 Mpx/s -0,7 % to 2048x1280 bic 0,05419 s 75.59 Mpx/s 9,8 % to 2048x1280 lzs 0,06930 s 59.11 Mpx/s 12,6 % to 5478x3424 bil 0,19939 s 20.54 Mpx/s -6,6 % to 5478x3424 bic 0,24559 s 16.68 Mpx/s -2,1 % to 5478x3424 lzs 0,29152 s 14.05 Mpx/s 5,2 % Result for commit 9b9a91f .

And the results of the AVX2 version on 16-bit coefficients relative to the AVX2 version on floating-point numbers:

Scale 2560×1600 RGB image to 320x200 bil 0.00682 s 600.15 Mpx/s 34.6 % to 320x200 bic 0.00990 s 413.86 Mpx/s 50.5 % to 320x200 lzs 0.01424 s 287.54 Mpx/s 60.6 % to 2048x1280 bil 0.03889 s 105.31 Mpx/s 7.6 % to 2048x1280 bic 0.04519 s 90.64 Mpx/s 11.3 % to 2048x1280 lzs 0.05226 s 78.38 Mpx/s 18.2 % to 5478x3424 bil 0.15195 s 26.96 Mpx/s 6.7 % to 5478x3424 bic 0.16977 s 24.13 Mpx/s 17.8 % to 5478x3424 lzs 0.20229 s 20.25 Mpx/s 15.6 % Result for commit 3ad4718 .

Total

Thus, the transition to integer calculations gave a gain for both the scalar code and SIMD. There is a slight performance regression in the SSE4 version when enlarging images using some filters. But the fact is that the code presented here is quite different from what was included in the Pillow-SIMD 3.3 or 3.4 version - this is a kind of vinaigrette, an intermediate link, where some optimizations are applied, but others are not. In real versions, there was no performance degradation.

If you look back and remember the very first version, it turns out that the current code is 10-12 times faster on the same hardware. What took 2 seconds can now be performed 5 times per second! But if you look at the official benchmarks , you can see that the actual performance of the Pillow-SIMD 3.4 with the AVX2 is still 2 times higher than it turned out by the end of this article. So, there is a reason and material for the next part.

')

Source: https://habr.com/ru/post/334790/

All Articles