Virtuozzo: What are the real benefits of distributed storage?

There are many technologies that allow you to save important information in the event of media failures, as well as speed up access to important data. But our hyper-convergent storage, Virtuozzo Storage, is ahead of open-source software-defined solutions, as well as ready-made SAN or NAS, by a number of parameters. And today we are talking about the architecture of the system and its advantages.

First of all, we should say what Virtuozzo Storage is (in the development environment - VZ Storage). The solution is a distributed storage using the same infrastructure on which your virtual machines and containers work (the so-called hyper-converged infrastructure). Initially, the product evolved with Virtuozzo virtualization. However, if you do not need a full-fledged virtualization system, the project is now available as a separate distributed storage, which can work with any clients.

Generally speaking, VZ Storage uses disks in the same servers that serve the virtualization system. Thus, you have no need to purchase separate equipment, for example, an expensive SAN / NAS controller, in order to create a network storage medium. One of the distinguishing features of VZ Storage is the choice of data storage method (redundancy scheme) for different data categories. Temporary logs, for example, can not be backed up at all, and for important data various protection technologies are provided - replication (full duplication) or self-recovering codes (Erasure Coding).

Iron

Since VZ Storage is a hyper-convergent storage system, it can be deployed using any of the servers of the standard x86 architecture. However, in order for the system to work efficiently, each server must have at least three hard drives of at least 100 GB of capacity each, a dual-core processor (one core is given to the storage), and 2 GB of RAM. In more powerful configurations, we recommend installing one processor core and 4 GB of memory for every 8 hard drives. That is, using on a node, for example, 15 disks to create storage, to support the operation of a storage cluster, you need only 2 cores and 8GB of RAM.

')

Since we are talking about distributed storage, servers must be networked. Theoretically, you can use the same data network on which the virtualization cluster works, but it is much more efficient to have a second network adapter with a bandwidth of at least 1 Gb / s, because the speed of reading and writing data will directly depend on the network characteristics. In addition, a separate network will be useful from a security point of view.

Architecture

Distributed VZ Storage architecture implies that we install various system components on physical or virtual servers: a graphical user interface control panel, a data storage server (Chunk Server - CS), a metadata server (MetaData Server - MDS), a storage mount for reading / writing data (Client). One node can run multiple components in any combination. That is, a single server, for example, can simultaneously store both data, and metadata, and run virtual machines, and provide a cluster control panel.

All data in the cluster is divided into blocks of fixed size (“chunks” - chunks). For each “chunk” several replicas (its copies) are created, and they are placed on different machines (to ensure fault tolerance in case of failure of the whole machine). When you install a cluster, you set the parameter for the normal and minimum number of replicas. If a machine fails or the disk stops working, all lost replicas by the cluster will be reproduced in the remaining ones - up to the parameter of a normal amount (usually 3). At this time, the system still allows you to write and part of the data without delay. But, if due to a failure, the number of copies fell below the minimum value (usually 2), that is, two components failed simultaneously, the cluster only allows you to read the data, and to write, customers will have to wait until at least the minimum number of copies is restored. The system recovers those chunks that are being worked with with the highest priority.

The number of CS and MDS on each server is determined by the number of physical disks. VZ Storage binds one component to one drive, thereby creating a clear separation of resources and replicas between different physical equipment.

What are the advantages?

We got a little acquainted with the structure and requirements of VZ Storage, and now the question arises, why is all this necessary? What are the advantages of the system? The most important advantage of VZ Storage is reliability. Using the same equipment (perhaps by adding network controllers and disks to it), you get a highly efficient, scalable system with a streamlined mechanism for working with data and metadata. VZ Storage allows you to provide permanent and reliable data storage, including VM disks and container application data for Docker, Kubernetes or Rancher.

The second plus is the low cost of ownership (TCO). Apart from the fact that for the operation of the solution, you do not need to purchase a separate expensive “hardware” and you can select backup options for various data, VZ Storage has the option of using erasure coding (redundancy codes such as Reed-Solomon). This reduces the total capacity requirements while maintaining the ability to recover data in the event of a failure. The method is suitable for storing large amounts of data, when the highest access speed is not the most important thing.

What are the advantages of erasure coding (EC)? Erasure codding can significantly reduce the consumption of disk space. This is achieved through special data processing.

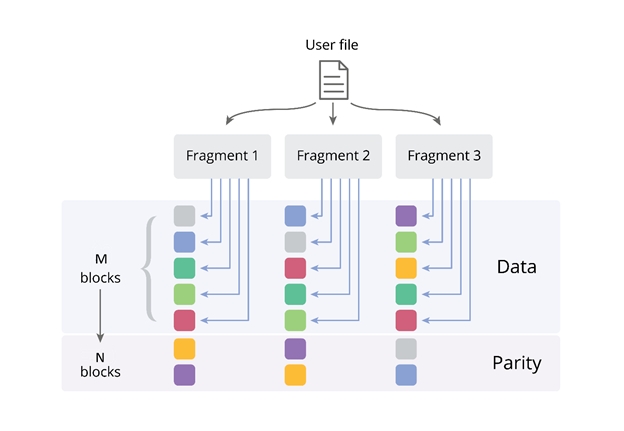

With a redundancy formula of M + N [/ X], EC allows you to use much less disk space. If M is the number of data blocks, N is the number of special checksum blocks (“Parity”), and X is the write validity parameter (it is characterized by how many nodes of the storage system may be unavailable when the client can still write data to his files). For the system to work, the minimum number of nodes in VZ Storage must be 5 (in this case, M = 3, N = 2, or “3 + 2”). The picture shows an example where M = 5, N = 2 or “5 + 2”.

Using the example of installing a system with a 5 + 2 configuration and EC enabled, we can guarantee an additional load on capacity of only 40%, creating only 2 GB of backup data for every 5 GB of application data).

In this case, for secure storage of 100 TB of data, you need only 140 TB of capacity. This approach helps to optimize the budget or provide storage of large amounts of data in cases when more disks cannot physically be installed into a cluster, rack is greater than servers, and into the server one is more stable. At the same time, we maintain high availability of data - even if two elements of the storage system fail, the remaining nodes of the system will allow to restore all data up to a bit, without stopping the application. The table shows the backup capacity values, and, as you can see, the results of using erasure coding are really impressive when many machines are used in a cluster. For example, in a 17 + 3 configuration with erasure coding, the backup capacity is only 18%.

Performance is another thing. Of course, erasure coding increases the CPU load, but only slightly. Due to SSE instructions on modern processors, one core can process up to ~ 2GB / s of data.

In fact, plus a distributed storage system, you can specify different types of redundancy for different loads. And in the case of direct replicas, a cluster with a large number of nodes, by contrast, provides much greater performance. However, we’ll talk about VZ Storage performance in more detail in the next post, since measurements of the efficiency of a hyper-convergent data storage system depend on a huge number of factors, including hardware characteristics, type of network architecture, load characteristics, and so on.

Source: https://habr.com/ru/post/334724/

All Articles