In a section: the news aggregator on Android with backend. Assembly system

Introduction (with links to all articles)

About 10-15 years ago, when the programs consisted of source codes and a small number of binary files with the work of assembling the final programs, all kinds of "? Make" did an excellent job. However, now modern programs and approaches to development have changed a lot - these are:

many different files (I don’t consider source) - styles, templates, resources, configurations, scripts, binary data, etc.;

Moreover, the average developer has to go through several times a day through the stages of assembling the final or intermediate artifacts using the listed tools and techniques. Launching command files with hands (with possible verification of results) is too burdensome in this case: you need a tool that tracks changes in your project data and runs the necessary tools depending on the detected changes.

')

My way of using build systems was incomprehensible_number * make -> ant -> maven -> gradle (the fact that the android Studio under the hood uses gradle pleased me a lot).

Gradle attracted me:

You can familiarize yourself with the features of Gradle on the developers website in the documentation section (you can find out everything!) . For those who want to compare gradle and maven there is an interesting video from JUG .

In my case, the build scripts look like this:

,

,

Where:

In this configuration, all changes to the build script are made in centralized files (not given to each project) and can / should be adjusted centrally by the person responsible for building the project.

Of the interesting things / tips that I would like to share, when setting up a gradle in my project there are the following:

Running all this rich functionality from the console with one command, which identifies the base fragments of the project, updates the necessary dependencies, performs the necessary checks and generates the required artifacts, let us speak of assembly systems as tools that, if properly configured, can radically change the speed of delivery of your project to the finished consumers.

Thanks for attention!

About 10-15 years ago, when the programs consisted of source codes and a small number of binary files with the work of assembling the final programs, all kinds of "? Make" did an excellent job. However, now modern programs and approaches to development have changed a lot - these are:

many different files (I don’t consider source) - styles, templates, resources, configurations, scripts, binary data, etc.;

- preprocessors;

- systems for checking the source style or the entire project (lint, checkstyle, etc.);

- development techniques based on tests, with their launch during assembly;

- various types of stands;

- cloud-based deployment systems, etc. etc.

Moreover, the average developer has to go through several times a day through the stages of assembling the final or intermediate artifacts using the listed tools and techniques. Launching command files with hands (with possible verification of results) is too burdensome in this case: you need a tool that tracks changes in your project data and runs the necessary tools depending on the detected changes.

')

My way of using build systems was incomprehensible_number * make -> ant -> maven -> gradle (the fact that the android Studio under the hood uses gradle pleased me a lot).

Gradle attracted me:

- its simple model (from which the truth can grow a monster, commensurate with the product being created);

- flexibility (both in terms of setting up the scripts themselves, and in organizing their distribution within large projects);

- constant development (as with all other things in the development - here we must constantly learn something new);

- ease of adaptation (knowledge of groovy and gradle DSL is required);

- the presence of plugin systems developed by the community includes various preprocessors, code generators, delivery and publication systems, etc., etc. (see login.gradle.org )

You can familiarize yourself with the features of Gradle on the developers website in the documentation section (you can find out everything!) . For those who want to compare gradle and maven there is an interesting video from JUG .

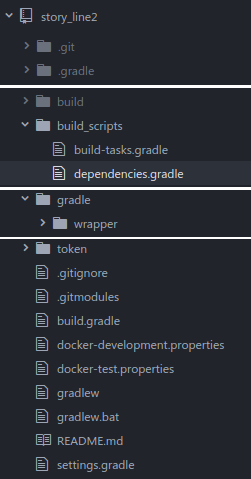

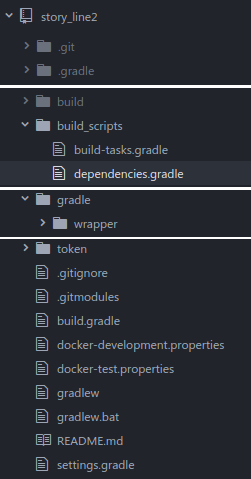

In my case, the build scripts look like this:

Where:

- build_scripts / build-tasks.gradle - all tasks for the build with their dependencies;

- build_scripts / dependencies.gradle - description of dependencies and methods of publishing;

- build.gradle - the main script, which defines dependent modules, libraries and includes other build scripts;

- settings.gradle - the list of dependent modules and the settings of the script itself (you can override the gradle launch arguments).

In this configuration, all changes to the build script are made in centralized files (not given to each project) and can / should be adjusted centrally by the person responsible for building the project.

Tips

Of the interesting things / tips that I would like to share, when setting up a gradle in my project there are the following:

Removal of artifact versions (scrolling in blocks of modules was a boring task)

We declare a block with dependencies:// project dependencies ext { COMMONS_POOL_VER='2.4.2' DROPWIZARD_CORE_VER='1.1.0' DROPWIZARD_METRICS_VER='3.2.2' DROPWIZARD_METRICS_INFLUXDB_VER='0.9.3' JSOUP_VER='1.10.2' STORM_VER='1.0.3' ... GROOVY_VER='2.4.7' // test TEST_JUNIT_VER='4.12' TEST_MOCKITO_VER='2.7.9' TEST_ASSERTJ_VER='3.6.2' }

And we use it in the project:project(':crawler_scripts') { javaProject(it) javaLogLibrary(it) javaTestLibrary(it) dependencies { testCompile "org.codehaus.groovy:groovy:${GROOVY_VER}" testCompile "edu.uci.ics:crawler4j:${CRAWLER4J_VER}" testCompile "org.jsoup:jsoup:${JSOUP_VER}" testCompile "joda-time:joda-time:${JODATIME_VER}" testCompile "org.apache.commons:commons-lang3:${COMMONS_LANG_VER}" testCompile "commons-io:commons-io:${COMMONS_IO_VER}" } }- Transferring settings to an external file

Create or already have a configuration file:--- # presented - for test/development only - use artifact from ""/provision/artifacts" directory storyline_components: crawler_scripts: version: "0.5" crawler: version: "0.6" server_storm: version: "presented" server_web: version: "0.1"

And we use it in the project:import com.fasterxml.jackson.databind.ObjectMapper import com.fasterxml.jackson.dataformat.yaml.YAMLFactory buildscript { repositories { jcenter() } dependencies { // reading YAML classpath "com.fasterxml.jackson.core:jackson-databind:2.8.6" classpath "com.fasterxml.jackson.dataformat:jackson-dataformat-yaml:2.8.6" } } .... def loadArtifactVersions(type) { Map result = new HashMap() def name = "${projectDir}/deployment/${type}/hieradata/version.yaml" println "Reading artifact versions from ${name}" if (new File(name).exists()) { ObjectMapper mapper = new ObjectMapper(new YAMLFactory()); result = mapper.readValue(new FileInputStream(name), HashMap.class); } return result['storyline_components']; } - Use of templates

This is my favorite part - it allows you to create the necessary configuration files from templates.

We create templates:version: '2' services: ... server_storm: domainname: story-line.ru hostname: server_storm build: ./server_storm depends_on: - zookeeper - elasticsearch - mongodb links: - zookeeper - elasticsearch - mongodb ports: - "${server_storm_ui_host_port}:8082" - "${server_storm_logviewer_host_port}:8083" - "${server_storm_nimbus_host_port}:6627" - "${server_storm_monit_host_port}:3000" - "${server_storm_drpc_host_port}:3772" volumes: - ${logs_dir}:/data/logs - ${data_dir}:/data/db ....

And we use it in the project:// task copyTemplates (type: Copy, dependsOn: ['createStandDir']){ description " " from "${projectDir}/deployment/docker_templates" into project.ext.stand.deploy_dir expand(project.ext.stand) filteringCharset = 'UTF-8' }

As a result, you get a configuration file whose data is filled with variable values that received values during the script operation. However, it should be noted that if you need more complex logic with variables (for example, hiding if there are no values), you will need another template engine in the assembly system. I bypassed this situation using the YAML format in the course of the project. - Using groovy lists and closures for specific processing of specific modules

This is actually the usual use of groovy in the build script, but it helped me solve a couple of non-trivial tasks.

We declare or get multiple value variables:ext { // , docker' docker_machines = ['elasticsearch', 'zookeeper', 'mongodb', 'crawler', 'server_storm', 'server_web'] // , docker' docker_machines_w_artifacts = ['crawler', 'server_storm', 'server_web'] }

And we use them in the project:// docker docker_machines.each { machine -> task "copyProvisionScripts_${machine}" (type: Copy, dependsOn: ['createStandDir']){ ... } } - Integration with maven repositories is a painfully cumbersome description for inclusion in the article (and the work itself is quite useless given the presence of examples in the documentation)

- Possibility of the task of dependency blocks for modules, copying and pasting of which is absent for each module

We declare blocks:def javaTestLibrary(project) { project.dependencies { testCompile "org.apache.commons:commons-lang3:${COMMONS_LANG_VER}" testCompile "commons-io:commons-io:${COMMONS_IO_VER}" testCompile "junit:junit:${TEST_JUNIT_VER}" testCompile "org.mockito:mockito-core:${TEST_MOCKITO_VER}" testCompile "org.assertj:assertj-core:${TEST_ASSERTJ_VER}" } }

And we use them in the project:project(':token') { javaProject(it) javaLogLibrary(it) javaTestLibrary(it) }

Running all this rich functionality from the console with one command, which identifies the base fragments of the project, updates the necessary dependencies, performs the necessary checks and generates the required artifacts, let us speak of assembly systems as tools that, if properly configured, can radically change the speed of delivery of your project to the finished consumers.

Thanks for attention!

Source: https://habr.com/ru/post/334592/

All Articles