High-Availability Postgres Clustering

A couple of months ago we moved from Amazon to our dedicated servers (Hetzner), one of the reasons for this was the high cost of RDS. The task is to set up and launch a master-slave cluster on dedicated servers. After googling and reading the official documentation, it was decided to assemble my own decision of the highly available asynchronous Postgres cluster.

Goals

- Use as few tools and dependencies as possible.

- Striving for transparency, no magic!

- Do not use all-included combine type pg-pool, stolon etc.

- Use docker and his buns.

So, let's begin. Actually, we will need Postgres itself and such a wonderful tool as repmgr , which manages replication management and cluster monitoring.

')

The project is called pg-dock, consists of 3 parts, each part lies on the githaba, you can take them and modify as you like.

- pg-dock-config is a ready - made set of configuration files, now 2 nodes are registered there, master slave.

- pg-dock is engaged in packing configs and delivering them to nodes, in the right form and in the right place.

- pg-dock-base is the base docker image that will be run on the nodes.

Let's take a detailed look at each part:

pg-dock-config

The cluster configuration has the following structure

| Two nodes (n1, n2) are already registered in the repository, if you have more than a node, then simply create another folder with the name of the new node. For each node its configuration files. It seems to me that everything is quite simple, for example, the env folder is the environment variables that will be picked up by the docker-compose, the postgres folder, respectively, the postgres configs, etc. For example, the file pg-dock-conf / n1 / env / main This tells us that during the initial initialization of the postgres, the user postgres and the testdb database will be created. Also, variables for the failover-ip script are written here that change the ip to a new master node if the old one is not available. pg-dock-conf / n1 / env / backup The environment variables for the interval backup of the database on s3 are picked up by the docker-compose when starting the service. |

If we have shared configuration files, then in order not to duplicate them on the nodes, we will put them in the shared folder.

Let's go through its structure:

- failover

In my case, there is a script for Hetzner failover-ip, which changes the ip to a new master. In your case, it can be a keepalived script or something similar. - initdb

All initializing sql queries must be put in this folder. - ssh

Here are the keys to connect to another node, in our example, the keys on all the nodes are the same, so they are in the shared folder. ּּ Ssh need repmgr to do such manipulations as switchover itp - sshd

The ssh server configuration file, ssh will work on port 2222 for us not to intersect with the default port on the host (22)

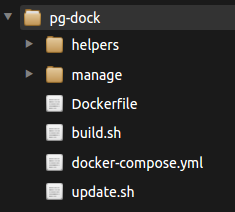

pg-dock

Here the configuration for each node is actually packaged.

The bottom line is to pack the node's configuration into the docker image, push it into the hub or your registry, and then do the update on the node.

| To work there are basic operations, create a build config (build.sh), update the config on the node (update.sh) and run the cluster itself (docker-compose.yml)

|

At startup:

PG_DOCK_NODE=n1 PG_DOCK_CONF_IMAGE=n1v1 ./build.sh docker images REPOSITORY TAG IMAGE ID CREATED SIZE n1v1 latest 712e6b2ace1a 6 minutes ago 1.17MB The pg-dock-conf / n1 configuration is copied to the pg-dock / pg-dock-conf-n1 folder, then the docker build starts with all the dependencies, the output is an image with the name n1v1 which stores our configuration for node n1.

At startup:

PG_DOCK_CONF_IMAGE=n1v1 ./update.sh This will launch a container that will update all the configuration files on the host. Thus, we can have several configuration images, rollback to different versions of it.

pg-docker-base

The base docker image in which all packages for cluster operation are installed: repmgr, rsync, openssh-server, supervisor ( Dockerfile ). The image itself is based on the latest version of postgres 9.6.3, but you can use any other build. Components run by supervisor from under postgres user. We will run this image on our servers (rsync, openssh-server is required for repmgr to work).

Let's run the cluster!

For convenience, in this article all the manipulations will be done with the help of the docker-machine.

We clone the pg-dock and pg-dock-conf projects into the working folder (for example lab)

mkdir ~/lab && cd ~/lab git clone https://github.com/xcrezd/pg-dock git clone https://github.com/xcrezd/pg-dock-conf We create nodes, group and the user of postgres (uid, gid has to be 5432 on a host and in the container)

docker-machine create n1 docker-machine ssh n1 sudo addgroup postgres --gid 5432 docker-machine ssh n1 sudo adduser -u 5432 -h /home/postgres --shell /bin/sh -D -G postgres postgres # debian/ubuntu #sudo adduser --uid 5432 --home /home/postgres --shell /bin/bash --ingroup postgres --disabled-password postgres docker-machine create n2 docker-machine ssh n2 sudo addgroup postgres -g 5432 docker-machine ssh n2 sudo adduser -u 5432 -h /home/postgres --shell /bin/sh -D -G postgres postgres Add ip node in / etc / hosts

docker-machine ip n1 #192.168.99.100 docker-machine ip n2 #192.168.99.101 # n1 docker-machine ssh n1 "sudo sh -c 'echo 192.168.99.100 n1 >> /etc/hosts'" docker-machine ssh n1 "sudo sh -c 'echo 192.168.99.101 n2 >> /etc/hosts'" # n2 docker-machine ssh n2 "sudo sh -c 'echo 192.168.99.100 n1 >> /etc/hosts'" docker-machine ssh n2 "sudo sh -c 'echo 192.168.99.101 n2 >> /etc/hosts'" If the IP of your machines differ from the IP in the article, then you need to add them to

- pg-dock-config / n1 / postgres / pg_hba.conf

- pg-dock-config / n2 / postgres / pg_hba.conf

Create configuration images and immediately update them on nodes

cd pg-dock docker-machine use n1 PG_DOCK_NODE=n1 PG_DOCK_CONF_IMAGE=n1v1 ./build.sh PG_DOCK_CONF_IMAGE=n1v1 ./update.sh docker-machine use n2 PG_DOCK_NODE=n2 PG_DOCK_CONF_IMAGE=n2v1 ./build.sh PG_DOCK_CONF_IMAGE=n2v1 ./update.sh Pay attention to the docker-machine use command ( how to do it ), each time it is used, we change the context of the client docker, that is, in the first case, all the docker manipulations will be on node n1 and then on n2.

We start containers

docker-machine use n1 PG_DOCK_NODE=n1 docker-compose up -d docker-machine use n2 PG_DOCK_NODE=n2 docker-compose up -d docker-compose will also launch the pg-dock-backup container, which will make a periodic backup on s3.

Now let's see where the files we need are stored:

| Files | Host | Container |

| DB | / opt / pg-dock / data | / var / lib / postgresql / data |

| Logs | / opt / pg-dock / logs | / var / log / supervisor |

| Configuration and Scripts | / opt / pg-dock / scripts | ** learn docker-compose.yml |

Go ahead, set up a cluster

docker-machine use n1 # docker exec -it -u postgres pg-dock repmgr master register docker-machine use n2 # n1 docker exec -it -u postgres -e PG_DOCK_FROM=n1 pg-dock manage/repmgr_clone_standby.sh # docker exec -it -u postgres pg-dock repmgr standby register That's it, the cluster is ready

docker exec -it -u postgres pg-dock repmgr cluster show Role | Name | Upstream | Connection String ----------+------|----------|-------------------------------------------- * master | n1 | | host=n1 port=5432 user=repmgr dbname=repmgr standby | n2 | n1 | host=n2 port=5432 user=repmgr dbname=repmgr Let's check it out. In the pg-dock-config / shared / tests folder we have such preparations for testing our cluster:

# cat tests/prepare.sh CREATE TABLE IF NOT EXISTS testtable (id serial, data text); GRANT ALL PRIVILEGES ON TABLE testtable TO postgres; # 100000 cat tests/insert.sh insert into testtable select nextval('testtable_id_seq'::regclass), md5(generate_series(1,1000000)::text); # cat tests/select.sh select count(*) from testtable; We create a test table, fill it with data and check if they are on the slave:

docker-machine use n1 # docker exec -it -u postgres pg-dock config/tests/prepare.sh # docker exec -it -u postgres pg-dock config/tests/insert.sh INSERT 0 1000000 docker-machine use n2 # n2 () docker exec -it -u postgres pg-dock config/tests/select.sh count --------- 1000000 (1 row) Profit!

Now let's take a look at the master drop script:

# docker-machine use n1 docker stop pg-dock # repmgr docker-machine use n2 docker exec -it pg-dock tailf /var/log/supervisor/repmgr-stderr.log #NOTICE: STANDBY PROMOTE successful Full log

[2017-07-12 12:51:49] [ERROR] unable to connect to server: Connection refused

Is the server running on host "n1" (192.168.99.100) and accepting

TCP / IP connections on port 5432?

[2017-07-12 12:51:49] [ERROR] connection to database failed

Is the server running on host "n1" (192.168.99.100) and accepting

TCP / IP connections on port 5432?

[2017-07-12 12:51:49] [WARNING] connection to master has been lost, trying to recover ... 60 seconds before failover decision

[2017-07-12 12:51:59] [WARNING] connection to master has been lost, trying to recover ... 50 seconds before failover decision

[2017-07-12 12:52:09] [WARNING] connection to master has been lost, trying to recover ... 40 seconds before failover decision

[2017-07-12 12:52:19] [WARNING] connection to master has been lost, trying to recover ... 30 seconds before failover decision

[2017-07-12 12:52:29] [WARNING] connection to master has been lost, trying to recover ... 20 seconds before failover decision

[2017-07-12 12:52:39] [WARNING] connection to master has been lost, trying to recover ... 10 seconds before failover decision

[2017-07-12 12:52:49] [ERROR] unable to reconnect to master (timeout 60 seconds) ...

[2017-07-12 12:52:54] [NOTICE] this site, promoting ...

% Total% Received% Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 171 100 143 0 28 3 0 0:00:47 0:00:39 0:00:08 31

Did not connect to server: Connection refused

Is the server running on host "n1" (192.168.99.100) and accepting

TCP / IP connections on port 5432?

NOTICE: promoting standby

NOTICE: promoting server using '/usr/lib/postgresql/9.6/bin/pg_ctl -D / var / lib / postgresql / data promote'

NOTICE: STANDBY PROMOTE successful

Is the server running on host "n1" (192.168.99.100) and accepting

TCP / IP connections on port 5432?

[2017-07-12 12:51:49] [ERROR] connection to database failed

Is the server running on host "n1" (192.168.99.100) and accepting

TCP / IP connections on port 5432?

[2017-07-12 12:51:49] [WARNING] connection to master has been lost, trying to recover ... 60 seconds before failover decision

[2017-07-12 12:51:59] [WARNING] connection to master has been lost, trying to recover ... 50 seconds before failover decision

[2017-07-12 12:52:09] [WARNING] connection to master has been lost, trying to recover ... 40 seconds before failover decision

[2017-07-12 12:52:19] [WARNING] connection to master has been lost, trying to recover ... 30 seconds before failover decision

[2017-07-12 12:52:29] [WARNING] connection to master has been lost, trying to recover ... 20 seconds before failover decision

[2017-07-12 12:52:39] [WARNING] connection to master has been lost, trying to recover ... 10 seconds before failover decision

[2017-07-12 12:52:49] [ERROR] unable to reconnect to master (timeout 60 seconds) ...

[2017-07-12 12:52:54] [NOTICE] this site, promoting ...

% Total% Received% Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 171 100 143 0 28 3 0 0:00:47 0:00:39 0:00:08 31

Did not connect to server: Connection refused

Is the server running on host "n1" (192.168.99.100) and accepting

TCP / IP connections on port 5432?

NOTICE: promoting standby

NOTICE: promoting server using '/usr/lib/postgresql/9.6/bin/pg_ctl -D / var / lib / postgresql / data promote'

NOTICE: STANDBY PROMOTE successful

Consider the status of the cluster:

docker exec -it -u postgres pg-dock repmgr cluster show Role | Name | Upstream | Connection String ---------+------|----------|-------------------------------------------- FAILED | n1 | | host=n1 port=5432 user=repmgr dbname=repmgr * master | n2 | | host=n2 port=5432 user=repmgr dbname=repmgr Now the new master has n2, failover ip also points to it.

Now let's return the old master as a new slave.

docker-machine use n1 # PG_DOCK_NODE=n1 docker-compose up -d # # n2 docker exec -it -u postgres -e PG_DOCK_FROM=n2 pg-dock manage/repmgr_clone_standby.sh # docker exec -it -u postgres pg-dock repmgr standby register -F Consider the status of the cluster:

docker exec -it -u postgres pg-dock repmgr cluster show Role | Name | Upstream | Connection String ---------+------|-----------|-------------------------------------------- * master | n2 | | host=n2 port=5432 user=repmgr dbname=repmgr standby| n1 | n2 | host=n1 port=5432 user=repmgr dbname=repmgr Done! And that's what we did to do; We dropped the master, the automatic assignment of the slave by the new master worked, the failover IP was changed. The system continues to function. Then we reanimated node n1, made it a new slave. Now for the sake of interest, we will make a swithover - that is, we will manually make n1 a master and n2 a slave, as it was before. That's exactly what repmgr needs for ssh, the slave connects via ssh to the master and makes the necessary manipulations with scripts.

switchover:

docker-machine use n1 docker exec -it -u postgres pg-dock repmgr standby switchover #NOTICE: switchover was successful Full log

NOTICE: switching current node 1 to master server and current master to standby ...

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

NOTICE: 1 files copied to / tmp / repmgr-n2-archive

NOTICE: current master has been stopped

Did not connect to server: Connection refused

Is the server running on host "n2" (192.168.99.101) and accepting

TCP / IP connections on port 5432?

NOTICE: promoting standby

NOTICE: promoting server using '/usr/lib/postgresql/9.6/bin/pg_ctl -D / var / lib / postgresql / data promote'

server promoting

NOTICE: STANDBY PROMOTE successful

NOTICE: Executing pg_rewind on old master server

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

NOTICE: 1 files copied to / var / lib / postgresql / data

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

NOTICE: restarting server using '/usr/lib/postgresql/9.6/bin/pg_ctl -w -D / var / lib / postgresql / data -m fast restart'

pg_ctl: PID file "/var/lib/postgresql/data/postmaster.pid" does not exist

Is server running?

starting server anyway

NOTICE: replication slot "repmgr_slot_1" deleted on node 2

NOTICE: switchover was successful

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

NOTICE: 1 files copied to / tmp / repmgr-n2-archive

NOTICE: current master has been stopped

Did not connect to server: Connection refused

Is the server running on host "n2" (192.168.99.101) and accepting

TCP / IP connections on port 5432?

NOTICE: promoting standby

NOTICE: promoting server using '/usr/lib/postgresql/9.6/bin/pg_ctl -D / var / lib / postgresql / data promote'

server promoting

NOTICE: STANDBY PROMOTE successful

NOTICE: Executing pg_rewind on old master server

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

NOTICE: 1 files copied to / var / lib / postgresql / data

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

Warning: Permanently added '[n2]: 2222, [192.168.99.101]: 2222' (ECDSA) to the list of known hosts.

NOTICE: restarting server using '/usr/lib/postgresql/9.6/bin/pg_ctl -w -D / var / lib / postgresql / data -m fast restart'

pg_ctl: PID file "/var/lib/postgresql/data/postmaster.pid" does not exist

Is server running?

starting server anyway

NOTICE: replication slot "repmgr_slot_1" deleted on node 2

NOTICE: switchover was successful

Consider the status of the cluster:

docker exec -it -u postgres pg-dock repmgr cluster show Role | Name | Upstream | Connection String ----------+------|----------|-------------------------------------------- standby | n2 | | host=n2 port=5432 user=repmgr dbname=repmgr * master | n1 | | host=n1 port=5432 user=repmgr dbname=repmgr That's it, the next time when we need to update the node's configuration, be it postgres, repmgr, or supervisor config, we just pack it and update it:

PG_DOCK_NODE=n1 PG_DOCK_CONF_IMAGE=n1v1 ./build.sh PG_DOCK_CONF_IMAGE=n1v1 ./update.sh After updating the new configuration:

# postgres docker exec -it -u postgres pg-dock psql -c "SELECT pg_reload_conf();" # supervisor docker exec -it -u postgres pg-dock supervisorctl reread # docker exec -it -u postgres pg-dock supervisorctl restart foo:sshd * A nice bonus, the supervisor has a log rotation feature, so we don’t have to worry about it either.

* Containers work directly through the host network, thereby avoiding network virtualization delays.

* I recommend adding already existing production nodes in the docker-machine, this will greatly simplify your life.

Now let's touch on the topic of balancing requests. I didn’t want to complicate (that is, use pg-pool, haproxy, stolon), so we will do balancing on the application side, thereby relieving ourselves of the responsibility for organizing the high availability of the balancer itself. Our backends are written in ruby, so the choice fell on gem makara . Heme can separate requests for sample and data modification (insert / update / delete / alter), sample requests can be balanced between several nodes (slaves). In case of failure of one of the nodes, heme can temporarily exclude him from the pool.

Sample database.yml configuration file:

production: adapter: 'postgresql_makara' makara: # the following are default values blacklist_duration: 5 master_ttl: 5 master_strategy: failover sticky: true connections: - role: master database: mydb host: 123.123.123.123 port: 6543 weight: 3 username: <%= ENV['DATABASE_USERNAME'] %> password: <%= ENV['DATABASE_PASSWORD'] %> - role: slave database: mydb host: 123.123.123.124 port: 6543 weight: 7 username: <%= ENV['DATABASE_USERNAME'] %> password: <%= ENV['DATABASE_PASSWORD'] %> Libraries in other languages / frameworks:

→ laravel

→ Yii2

→ Node.js

Conclusion

So, what we got in the end:

- Self-sufficient master-standby cluster ready for battle.

- Transparency of all components, easy replaceability.

- Automatic failover in case of master failure (repmgr)

- Load balancing on the client, thereby removing the responsibility for the availability of the balancer itself

- The lack of a single point of failure, repmgr will run a script that will transfer the IP address to the new node, which was upgraded to the master in case of failure. In the template there is a script for hetzner, but nothing prevents to add keepalived, aws elasticIp, drdb, pacemaker, corosync.

- Version control, the ability to do rollback in case of problems / ab testing.

- Ability to customize the system for themselves, add nodes, repmgr witness, for example, configuration flexibility and its changes.

- Periodic backup on S3

In the next article I will tell you how to place pg-dock and PgBouncer on one node without losing high availability, thank you all for your attention!

Recommendations for familiarization:

Source: https://habr.com/ru/post/334484/

All Articles