How does neural machine translation work?

A description of rule-based, phrase-based and neural machine translation

In this publication in our series of step-by-step articles, we explain how neural machine translation works and compare it with the other methods: rule-based translation technology and phrase-based translation technology (PBMT, the most popular variant of statistical machine translation, SMT).

The results obtained with neural machine translation are surprising, especially when we try to decode what the neural network is doing: the network seems to actually “understand” the sentence it is translating. In this article we will examine the semantic approach that neural networks use for translation.


We will start by looking at how each of the three technologies works at the different stages of the translation process, and at the methods used in each case. Then we will go through some examples and compare what each technology does to produce the most correct translation.

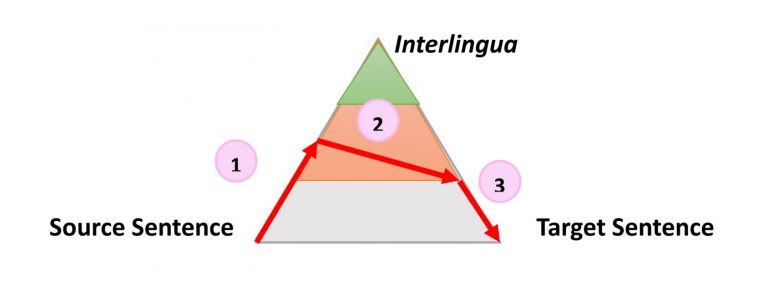

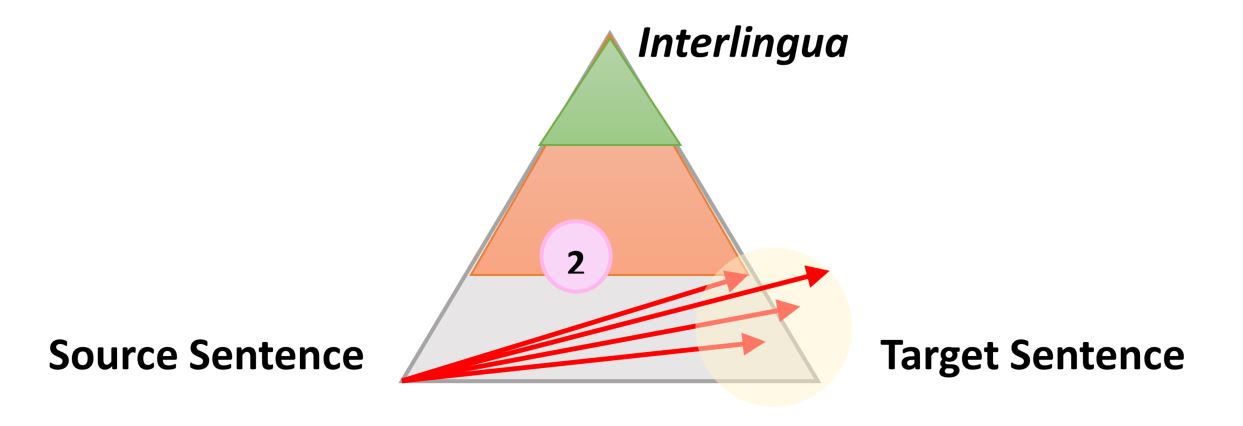

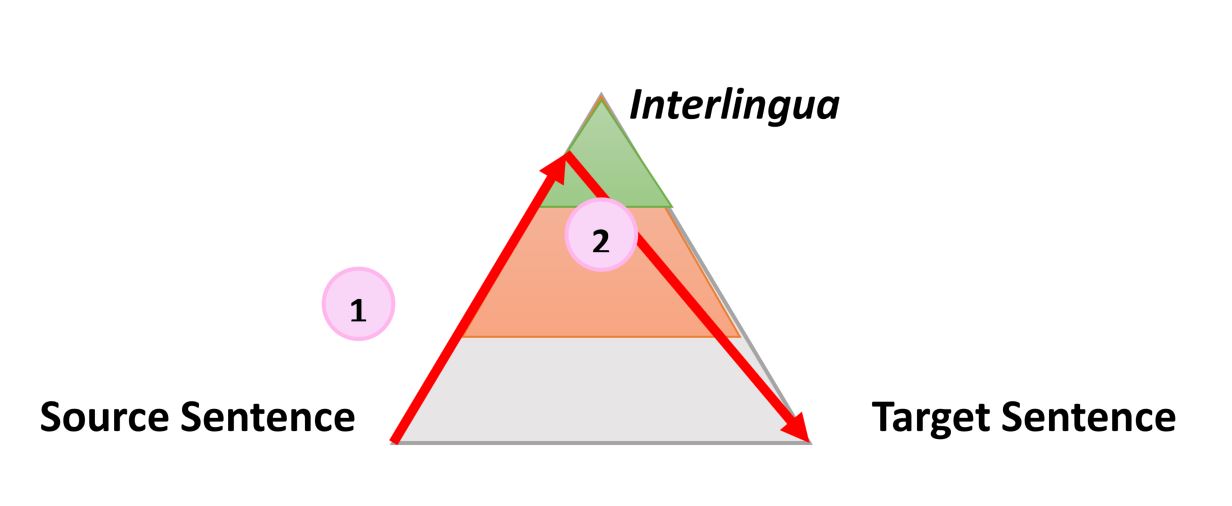

A very simple but still useful way to describe any type of automatic translation is the following triangle, formulated by the French researcher Bernard Vauquois in 1968:

This triangle shows the process of converting a source sentence into a target sentence in three different ways.

The left side of the triangle represents the source language and the right side the target language. The levels within the triangle represent the depth of analysis of the source sentence, for example syntactic or semantic. We now know that syntactic and semantic analysis cannot really be carried out separately, but the theory is that we can go deeper in each of these directions. The first red arrow represents the analysis of the source sentence. From the given sentence, which is simply a sequence of words, we build a representation of its internal structure, to whatever depth the analysis allows.

For example, at one level we can identify the part of speech of each word (noun, verb, etc.), and at another the relations between words: for example, which word or phrase is the subject.

When the analysis is complete, a second process, “transfer”, carries the sentence over into a representation of equal or lesser depth in the target language. A third process, called “generation”, then produces the actual target sentence from this representation, that is, it creates a sequence of words in the target language. The idea behind the triangle is that the higher (deeper) you analyze the source sentence, the simpler the transfer phase becomes. Ultimately, if the analysis could convert the source language into some kind of universal “interlingua”, we would not need a transfer step at all: an analyzer and a generator for each language would be enough to translate from any language into any other (translator's note: literal translation).

This general idea explains the intermediate steps a machine goes through when it translates a sentence step by step. More importantly, the model describes the nature of the operations performed during translation. Let's illustrate how this idea works for the three different technologies, using the sentence “The smart mouse plays violin” as an example. (Translator's note: the sentence chosen by the authors contains a small catch: besides its most common meaning, the English adjective “smart” has 17 other meanings in the dictionary, for example “agile” or “clever”.)

Rule-based machine translation

Rule-based machine translation is the oldest approach and covers a wide range of technologies. However, all of them usually share the following principles:

- The process strictly follows the Vauquois triangle; the analysis is very often overemphasized, while the generation process is kept to a minimum;

- All three translation stages use a database of rules and lexical elements to which these rules apply;

- Rules and lexical elements are explicitly defined, but can be modified by a linguist.

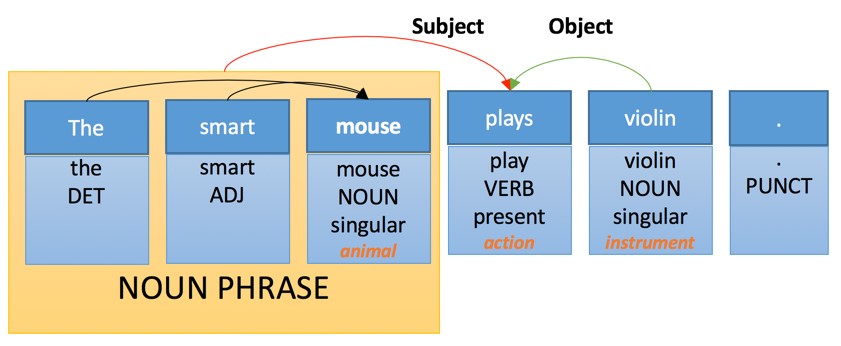

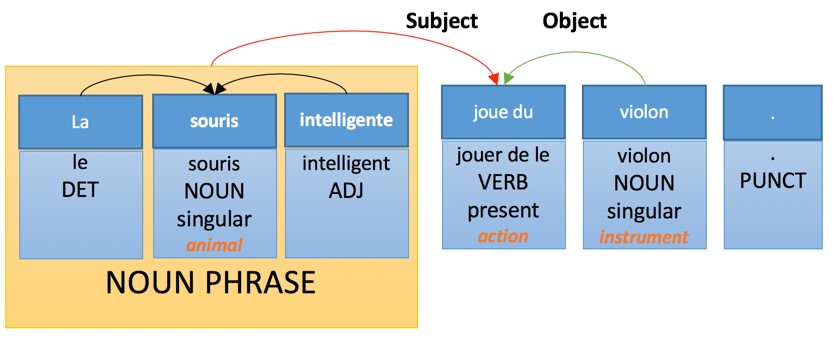

For example, the internal representation of our sentence may look like this:

Here we see a few simple levels of analysis:

- Part-of-speech tagging: each word is assigned its own “part of speech”, which is a grammatical category.

- Morphological analysis: the word “plays” is recognized as an inflected form of the verb “play” (third person singular, present tense).

- Semantic analysis: some words are assigned a semantic category. For example, “violin” is an instrument.

- Constituent analysis: some words are grouped together. “Smart mouse” is a noun phrase.

- Dependency analysis: words and phrases are connected by “links”, which identify the subject and the object of the main verb “plays”.
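The levels of analysis above can be sketched in a few lines of Python. This is a minimal toy under stated assumptions, not how any real rule-based system is built: the lexicon `LEXICON`, the tag names and the functions `analyze_word` and `analyze` are hypothetical, and each “rule” is hard-coded just well enough to handle the example sentence.

```python
# Toy rule-based analysis of "The smart mouse plays violin".
# LEXICON, the tag names and all rules below are hypothetical illustrations.
LEXICON = {
    # word: (part of speech, lemma, semantic category or None)
    "the": ("DET", "the", None),
    "smart": ("ADJ", "smart", None),
    "mouse": ("NOUN", "mouse", "animal"),
    "violin": ("NOUN", "violin", "instrument"),
    "play": ("VERB", "play", None),
}


def analyze_word(word):
    """Part-of-speech tagging, morphology and semantic category for one word."""
    w = word.lower()
    if w in LEXICON:
        pos, lemma, sem = LEXICON[w]
        return {"form": word, "pos": pos, "lemma": lemma, "sem": sem, "morph": None}
    # Morphological rule: verb + "s" is the third person singular, present tense.
    if w.endswith("s") and w[:-1] in LEXICON and LEXICON[w[:-1]][0] == "VERB":
        pos, lemma, sem = LEXICON[w[:-1]]
        return {"form": word, "pos": pos, "lemma": lemma, "sem": sem, "morph": "3sg.pres"}
    raise KeyError(f"unknown word: {word}")


def analyze(sentence):
    tokens = [analyze_word(w) for w in sentence.split()]
    # Constituent rule: a run of DET/ADJ words closed by a NOUN forms a noun phrase.
    phrases, current = [], []
    for tok in tokens:
        if tok["pos"] == "VERB":
            phrases.append(("V", [tok]))
        else:
            current.append(tok)
            if tok["pos"] == "NOUN":
                phrases.append(("NP", current))
                current = []
    # Dependency rule: the NP before the verb is the subject, the NP after it the object.
    v = next(i for i, (label, _) in enumerate(phrases) if label == "V")
    deps = {
        "verb": phrases[v][1][0]["lemma"],
        "subject": [t["lemma"] for t in phrases[v - 1][1]],
        "object": [t["lemma"] for t in phrases[v + 1][1]],
    }
    return tokens, phrases, deps


tokens, phrases, deps = analyze("The smart mouse plays violin")
print(deps)
# {'verb': 'play', 'subject': ['the', 'smart', 'mouse'], 'object': ['violin']}
```

Even in this toy, every level depends on hand-written lexicon entries and rules, which is exactly what a linguist would maintain in a real rule-based system.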

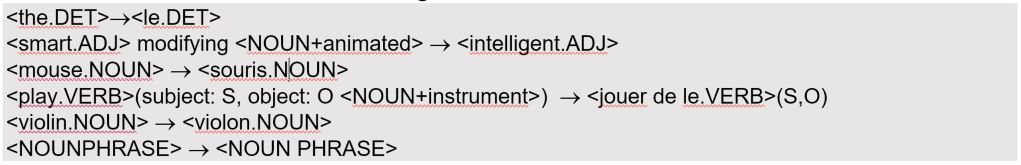

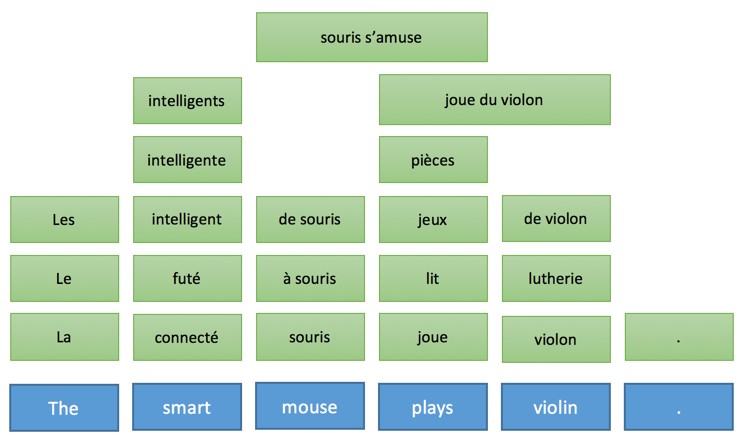

Transferring such a structure involves the following lexical transformation rules:

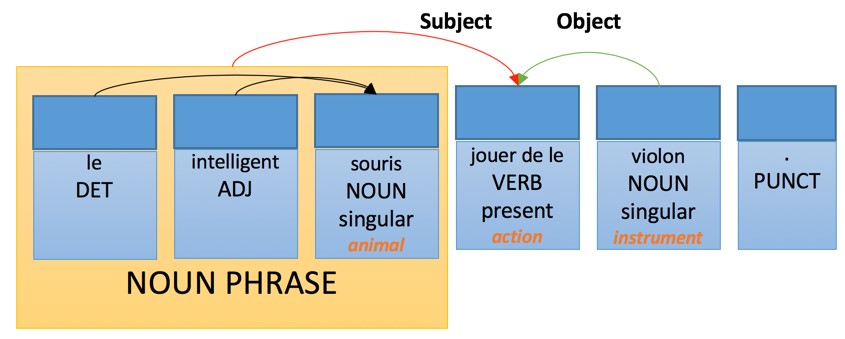
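As a stand-in for the rules in the figure, here is a hedged sketch of what lexical transfer rules of this kind might look like. The table `LEXICAL_RULES` and the functions `transfer_verb` and `transfer` are hypothetical; the one conditional rule (“play” with a musical instrument as its object becomes French “jouer de”) shows why the semantic category found during analysis matters for transfer.

```python
# Hypothetical lexical transfer rules: English lemma -> French lemma.
LEXICAL_RULES = {
    "the": "le",
    "smart": "intelligent",
    "mouse": "souris",
    "violin": "violon",
}


def transfer_verb(lemma, object_category):
    """Context-dependent rule: "play" + an instrument -> "jouer de", otherwise "jouer"."""
    if lemma == "play":
        return "jouer de" if object_category == "instrument" else "jouer"
    raise KeyError(f"no transfer rule for verb: {lemma}")


def transfer(deps, object_category):
    """Map an English dependency structure onto French lemmas, keeping the structure."""
    return {
        "verb": transfer_verb(deps["verb"], object_category),
        "subject": [LEXICAL_RULES[lemma] for lemma in deps["subject"]],
        "object": [LEXICAL_RULES[lemma] for lemma in deps["object"]],
    }


english = {"verb": "play", "subject": ["the", "smart", "mouse"], "object": ["violin"]}
french = transfer(english, object_category="instrument")
print(french)
# {'verb': 'jouer de', 'subject': ['le', 'intelligent', 'souris'], 'object': ['violon']}
```

Note that the output is still a structure of uninflected lemmas; word order and agreement are left to the generation step.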

Applying these rules produces the following representation in the target language:

The French generation rules, in turn, look like this:

- An adjective in a noun phrase follows the noun, with a few listed exceptions.

- The determiner agrees in number and gender with the noun it modifies.

- The adjective agrees in number and gender with the noun it modifies.

- The verb agrees with the subject.
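The four generation rules above can be illustrated with a minimal sketch. The gender table, the exception list `PRENOMINAL_ADJECTIVES` and all function names are hypothetical; the sketch only handles singular forms and regular “-er” verbs, and it deliberately skips cases such as the contraction “de + le → du”.

```python
# Hypothetical French generation rules applied to transferred lemmas.
NOUN_GENDER = {"souris": "f", "violon": "m"}
PRENOMINAL_ADJECTIVES = {"petit", "grand"}  # listed exceptions: placed before the noun


def determiner(lemma, gender):
    """The determiner agrees with the noun (singular only in this sketch)."""
    if lemma == "le":
        return "la" if gender == "f" else "le"
    return lemma


def adjective(lemma, gender):
    """Regular feminine: add "e" unless the adjective already ends in "e"."""
    if gender == "f" and not lemma.endswith("e"):
        return lemma + "e"
    return lemma


def noun_phrase(lemmas):
    """Order and inflect [determiner, adjectives..., noun] following the rules above."""
    det, *adjs, noun = lemmas
    gender = NOUN_GENDER[noun]
    before = [adjective(a, gender) for a in adjs if a in PRENOMINAL_ADJECTIVES]
    after = [adjective(a, gender) for a in adjs if a not in PRENOMINAL_ADJECTIVES]
    return [determiner(det, gender), *before, noun, *after]


def verb_3sg_present(lemma):
    """Subject-verb agreement for a regular -er verb: third person singular present."""
    if not lemma.endswith("er"):
        raise ValueError("only regular -er verbs are handled in this sketch")
    return lemma[:-2] + "e"


subject = noun_phrase(["le", "intelligent", "souris"])
print(" ".join(subject + [verb_3sg_present("jouer")]))
# la souris intelligente joue
```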

Ideally, this process will produce the following translation:

Phrase-based machine translation

Phrase-based machine translation is the simplest and most popular variant of statistical machine translation. Today it is still the main workhorse and is used by major online translation services.

Technically speaking, phrase-based machine translation does not follow the process formulated by Vauquois. Not only is there no analysis or generation in this type of machine translation but, more importantly, the transfer step is not deterministic. This means that the technology can generate several different translations of the same source sentence, and the essence of the approach is to choose the best one.

This translation model relies on three basic components:

- A phrase table, which lists translation options for word sequences in the source language, together with their probabilities.

- A reordering table, which indicates how words can be reordered when going from the source to the target language.

- A language model, which gives the probability of each possible sequence of words in the target language.
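To make these components concrete, here is a minimal sketch of how a phrase-based system might score one candidate translation with a phrase table and a language model. All probabilities, the `UNSEEN` floor and the function names are invented for illustration; the reordering table is left out (segments are translated in source order), and the two log-probabilities are simply added, whereas real systems typically combine such features with tuned weights.

```python
import math

# Hypothetical phrase table: source phrase -> {target phrase: P(target | source)}.
PHRASE_TABLE = {
    "the smart mouse": {"la souris intelligente": 0.6, "la souris maligne": 0.3},
    "plays violin": {"joue du violon": 0.7, "joue violon": 0.2},
}

# Hypothetical bigram language model P(word | previous word); "<s>" starts a sentence.
BIGRAMS = {
    ("<s>", "la"): 0.2, ("la", "souris"): 0.1, ("souris", "intelligente"): 0.05,
    ("souris", "maligne"): 0.02, ("intelligente", "joue"): 0.1, ("maligne", "joue"): 0.1,
    ("joue", "du"): 0.3, ("du", "violon"): 0.2, ("joue", "violon"): 0.01,
}
UNSEEN = 1e-6  # crude floor for unseen bigrams (no real smoothing)


def lm_logprob(words):
    """Language model: log-probability of a target word sequence."""
    prev, total = "<s>", 0.0
    for w in words:
        total += math.log(BIGRAMS.get((prev, w), UNSEEN))
        prev = w
    return total


def score(segments):
    """Phrase table + language model score for (source, target) segments in source order."""
    translation = sum(math.log(PHRASE_TABLE[src][tgt]) for src, tgt in segments)
    target_words = " ".join(tgt for _, tgt in segments).split()
    return translation + lm_logprob(target_words)


good = [("the smart mouse", "la souris intelligente"), ("plays violin", "joue du violon")]
bad = [("the smart mouse", "la souris maligne"), ("plays violin", "joue violon")]
print(score(good) > score(bad))  # True
```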

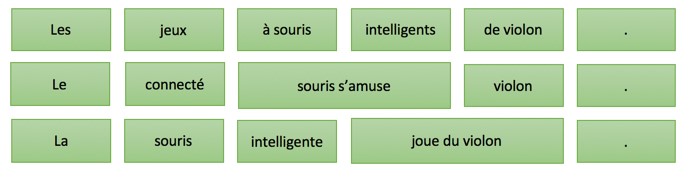

From the source sentence, the following table is then built (in a simplified form; in reality there would be many more options for each word):

From this table, thousands of possible translations of the sentence are generated, for example:

However, thanks to smart probability calculations and more advanced search algorithms, only the most likely translations are considered, and the best one is kept as the final result.
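The article does not say which search algorithm is used; beam search is one common choice, and the following hedged sketch uses it to show how pruning keeps only the most likely partial translations instead of scoring every combination. The segment options, bigram probabilities and function names are hypothetical, and reordering is again ignored.

```python
import heapq
import math

# Hypothetical options for three source segments: "the smart mouse" | "plays" | "violin".
SEGMENT_OPTIONS = [
    {"la souris intelligente": 0.5, "la souris maligne": 0.3, "le souris intelligent": 0.2},
    {"joue": 0.8, "jouent": 0.2},
    {"du violon": 0.5, "le violon": 0.3, "violon": 0.2},
]

# Hypothetical bigram language model P(word | previous word); "<s>" starts a sentence.
BIGRAMS = {
    ("<s>", "la"): 0.2, ("<s>", "le"): 0.2, ("la", "souris"): 0.1, ("le", "souris"): 0.01,
    ("souris", "intelligente"): 0.05, ("souris", "maligne"): 0.02,
    ("souris", "intelligent"): 0.001, ("intelligente", "joue"): 0.1,
    ("maligne", "joue"): 0.1, ("joue", "du"): 0.3, ("du", "violon"): 0.2,
    ("joue", "le"): 0.1, ("le", "violon"): 0.1, ("joue", "violon"): 0.01,
}
UNSEEN = 1e-6  # crude floor for unseen bigrams (no real smoothing)


def lm_logprob(prev, words):
    """Log-probability of `words` following the word `prev`."""
    total = 0.0
    for w in words:
        total += math.log(BIGRAMS.get((prev, w), UNSEEN))
        prev = w
    return total


def beam_search(segment_options, beam_size=2):
    beam = [(0.0, [])]  # each hypothesis: (log score, target words so far)
    for options in segment_options:
        expanded = []
        for logp, words in beam:
            prev = words[-1] if words else "<s>"
            for phrase, p in options.items():
                new_words = phrase.split()
                new_logp = logp + math.log(p) + lm_logprob(prev, new_words)
                expanded.append((new_logp, words + new_words))
        # Pruning: keep only the `beam_size` most probable partial translations.
        beam = heapq.nlargest(beam_size, expanded, key=lambda h: h[0])
    best_logp, best_words = beam[0]
    return " ".join(best_words), best_logp


print(beam_search(SEGMENT_OPTIONS)[0])
# la souris intelligente joue du violon
```

With a beam of 2, only 2 of the 3 first-segment options survive the first step, so the search never has to score all 18 complete combinations.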

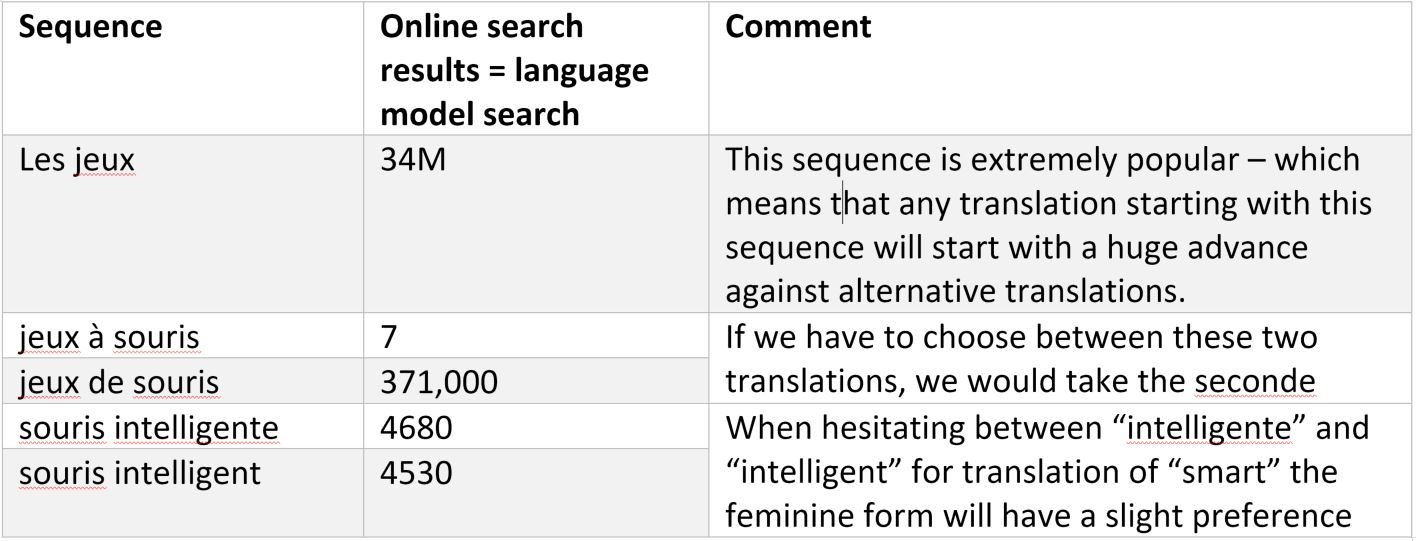

In this approach, the target language model is extremely important, and we can get an idea of the quality of a result simply by searching for it on the Internet:

Intuitively, search algorithms prefer word sequences that are the most likely translations of the source words, taking the reordering table into account. This allows them to generate likely sequences of words in the target language.

In this approach, there is no explicit or implicit linguistic or semantic analysis. Many variants of the approach have been proposed, some better than others, but as far as we know, the main online translation services use this technology.

Neural machine translation

Neural machine translation takes a fundamentally different approach from the previous ones. In terms of the Vauquois triangle, it can be described as follows:

Neural machine translation has the following features:

- “Analysis” is called encoding, and its result is a mysterious sequence of vectors.

- “Transfer” is called decoding and directly generates the target form without any separate generation phase. This is not a strict constraint and variations exist, but this is how the underlying technology works.

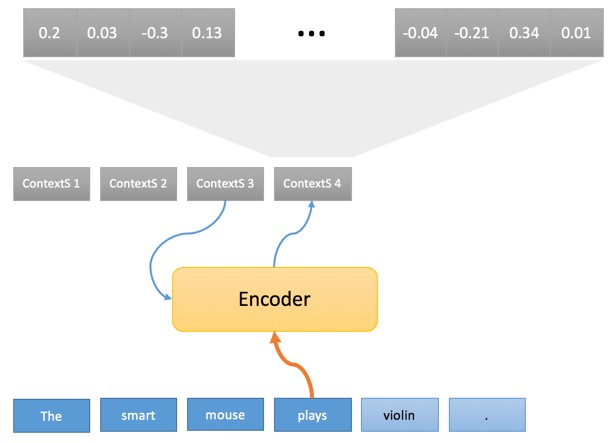

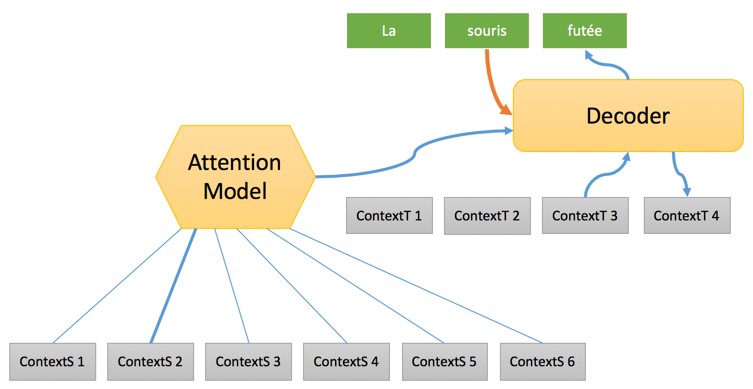

The process itself is divided into two phases. In the first, each word of the source sentence passes through an “encoder” that generates what we call the “source context”, based on the current word and the previous context:
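The idea of “current word + previous context → new source context” can be sketched as a simple recurrent network. This sketch assumes a plain tanh recurrence with random, untrained weights, no bias term and tiny dimensions (4 instead of around 1000); the NMT systems of this period typically used LSTM or GRU cells, often bidirectional, so treat `encode` and its parameters as hypothetical illustrations only.

```python
import math
import random


def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def encode(word_vectors, hidden_size=4, seed=0):
    """Plain recurrent encoder: context_i = tanh(W x_i + U context_{i-1}), no bias."""
    rng = random.Random(seed)
    input_size = len(word_vectors[0])
    W = random_matrix(hidden_size, input_size, rng)   # word -> context (untrained)
    U = random_matrix(hidden_size, hidden_size, rng)  # previous context -> context (untrained)
    context = [0.0] * hidden_size                     # empty context before the first word
    contexts = []
    for x in word_vectors:
        pre = [a + b for a, b in zip(matvec(W, x), matvec(U, context))]
        context = [math.tanh(v) for v in pre]
        contexts.append(context)                      # one source context per source word
    return contexts


# Five hypothetical 3-dimensional word vectors for "The smart mouse plays violin".
sentence = [[0.1, 0.0, 0.2], [0.5, 0.3, 0.1], [0.9, 0.1, 0.4], [0.2, 0.8, 0.0], [0.3, 0.7, 0.6]]
contexts = encode(sentence)
print(len(contexts), len(contexts[0]))  # 5 4
```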

The sequence of source contexts (ContextS 1, ..., ContextS 5) is the internal representation of the source sentence in the Vauquois triangle and, as mentioned above, is a sequence of floating point numbers (usually 1000 floating point numbers for each source word). For now, we will not discuss how the encoder performs this conversion, but I would like to point out that the initial conversion of words into vectors of floats is especially interesting.

This is actually a technical building block: just as a rule-based translation system first looks up each word in a dictionary, the first step of the encoder is to look up each source word in a table.
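The table lookup can be sketched as follows. This is a minimal illustration, assuming a small vocabulary, a hypothetical `<unk>` entry for unknown words and random vectors; in a real system the table values are learned during training, and `build_embedding_table` and `embed` are hypothetical names.

```python
import random


def build_embedding_table(vocabulary, dim, seed=0):
    """Hypothetical embedding table: one vector of `dim` floats per vocabulary word.
    The values are random here; a real system learns them during training."""
    rng = random.Random(seed)
    return {word: [rng.gauss(0.0, 0.1) for _ in range(dim)] for word in vocabulary}


def embed(sentence, table, unknown="<unk>"):
    """First encoder step: look up each source word, like a dictionary lookup."""
    return [table.get(word.lower(), table[unknown]) for word in sentence.split()]


vocab = ["<unk>", "the", "smart", "mouse", "plays", "violin"]
table = build_embedding_table(vocab, dim=8)
vectors = embed("The smart mouse plays violin", table)
print(len(vectors), len(vectors[0]))  # 5 8
```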

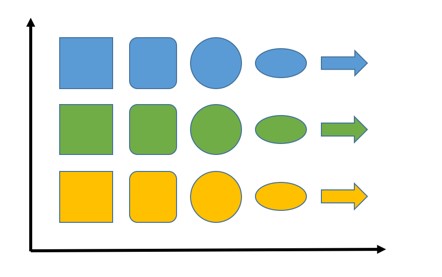

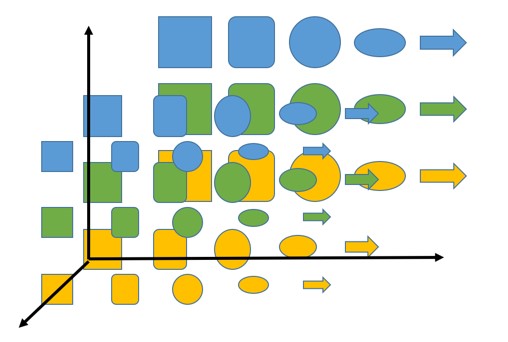

Suppose you need to represent different objects, varying in shape and color, in a two-dimensional space, so that objects that are closest to each other are also the most similar. Here is an example:

The horizontal axis represents shape: we try to place each shape next to the shapes most similar to it (we would need to specify what makes shapes similar, but in this example it seems intuitive). The vertical axis represents color, with green between yellow and blue (translator's note: green is placed there because it is the result of mixing yellow and blue). If our shapes also had different sizes, we could add size as a third dimension:

If we add more colors or shapes, we can also increase the number of dimensions, so that any point can represent a different object, and the distance between two points reflects how similar the objects are.
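The shape, color and size space can be sketched with plain coordinates and Euclidean distance. The coordinates below are hypothetical: the color axis puts yellow at 0.0, green at 0.5 and blue at 1.0, mirroring the figure's ordering, and `most_similar` simply returns the nearest other object.

```python
import math

# Hypothetical coordinates: (shape, color, size).
# Color axis: yellow = 0.0, green = 0.5 (between yellow and blue), blue = 1.0.
objects = {
    "small yellow triangle": (0.0, 0.0, 0.2),
    "small green triangle": (0.0, 0.5, 0.2),
    "small blue square": (1.0, 1.0, 0.2),
    "large blue square": (1.0, 1.0, 0.9),
}


def most_similar(name):
    """Nearest other object by Euclidean distance (math.dist, Python 3.8+)."""
    others = (o for o in objects if o != name)
    return min(others, key=lambda o: math.dist(objects[name], objects[o]))


print(most_similar("small yellow triangle"))  # small green triangle
```

Adding a dimension is just adding a coordinate; the distance computation stays the same, which is what makes the idea scale to hundreds of dimensions.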

The key idea is that the same approach works for words. Instead of shapes there are words, and the space is much larger (for example, we use 800 dimensions), but the idea is that words can be represented in such spaces with the same properties as the shapes.

Consequently, words that share properties and features will be located close to each other. For example, one can imagine that part of speech is one dimension, grammatical gender (where the language has it) is another, positive or negative connotation could be yet another, and so on.
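A common way to measure closeness between word vectors is cosine similarity. In this sketch the four dimensions and all vector values are invented for illustration; real embedding dimensions are learned and usually have no such clean interpretation.

```python
import math

# Hypothetical word vectors; dimensions invented for illustration:
# (noun-ness, verb-ness, animate, positive connotation).
WORDS = {
    "mouse": [0.9, 0.0, 0.9, 0.1],
    "rat": [0.9, 0.0, 0.9, -0.3],
    "violin": [0.9, 0.0, 0.0, 0.3],
    "plays": [0.0, 0.9, 0.0, 0.2],
    "smart": [0.0, 0.0, 0.1, 0.9],
}


def cosine(u, v):
    """Cosine similarity: 1.0 for vectors pointing in the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def nearest(word):
    return max((w for w in WORDS if w != word), key=lambda w: cosine(WORDS[word], WORDS[w]))


print(nearest("mouse"))  # rat
```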

We do not know exactly how these embeddings are formed. We will look at embeddings in more detail in another article, but the idea itself is as simple as arranging shapes in space.

Let's go back to the translation process. The second step is as follows:

In this phase, the decoder, focusing on the full sequence of “source contexts”, generates the target words one after another, using:

- The “target context”, which is formed together with the previous word and carries some information about the state of the translation process.

- A weighted mix of the “source contexts”, computed by a specific model called the attention model. We will discuss what it is in another article; in short, the attention model chooses which source words to focus on at each step of the translation.

- The previously generated word, converted into a vector with a word embedding so that the decoder can process it.
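The weighted mix of source contexts can be sketched as follows. This assumes dot-product scoring, one common and simple choice; other attention models compute the scores with a small learned network. The vectors and the function name `attend` are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(target_context, source_contexts):
    """Dot-product attention: weight each source context by its similarity to the
    current target context, then mix them into a single context vector."""
    scores = [sum(t * s for t, s in zip(target_context, src)) for src in source_contexts]
    weights = softmax(scores)
    dim = len(source_contexts[0])
    mixed = [sum(w * src[d] for w, src in zip(weights, source_contexts)) for d in range(dim)]
    return weights, mixed


# Hypothetical 3-dimensional source contexts for five source words.
source_contexts = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0],
                   [0.5, 0.5, 0.0], [0.0, 0.5, 0.5]]
target_context = [0.0, 0.0, 4.0]  # hypothetical decoder state that matches the third word
weights, mixed = attend(target_context, source_contexts)
print(weights.index(max(weights)))  # 2 (the third source word gets the most attention)
```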

Translation is complete when the decoder has generated the last word of the sentence.
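A common way to detect the last word is a special end-of-sentence token. The sketch below assumes such a token (`</s>`) and greedy selection of the most probable word at each step (real systems often use beam search instead). The neural decoder is replaced by a hypothetical lookup table, `NEXT_WORD`, that depends only on the previous word; a real decoder would also use the target context and the attention-weighted source context.

```python
# Hypothetical stand-in for one decoder step: previous word -> next-word probabilities.
NEXT_WORD = {
    "<s>": {"la": 0.7, "le": 0.3},
    "la": {"souris": 0.9, "violon": 0.1},
    "souris": {"intelligente": 0.6, "joue": 0.4},
    "intelligente": {"joue": 0.8, "</s>": 0.2},
    "joue": {"du": 0.7, "</s>": 0.3},
    "du": {"violon": 0.95, "</s>": 0.05},
    "violon": {"</s>": 1.0},
}


def decoder_step(previous_word):
    return NEXT_WORD.get(previous_word, {"</s>": 1.0})


def greedy_decode(max_len=20):
    words, previous = [], "<s>"
    for _ in range(max_len):
        probs = decoder_step(previous)
        word = max(probs, key=probs.get)  # pick the most probable next word
        if word == "</s>":                # end-of-sentence token: translation is complete
            break
        words.append(word)
        previous = word
    return " ".join(words)


print(greedy_decode())  # la souris intelligente joue du violon
```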

The whole process is undoubtedly quite mysterious, and we will need several publications to review how its individual parts work. The main thing to remember is that neural machine translation performs its operations in the same order as rule-based machine translation, but the nature of the operations and of the objects being processed is completely different. These differences begin with converting words into vectors by looking up their embeddings in tables. Understanding this point is enough to see what is happening in the following examples.

Translation examples for comparison

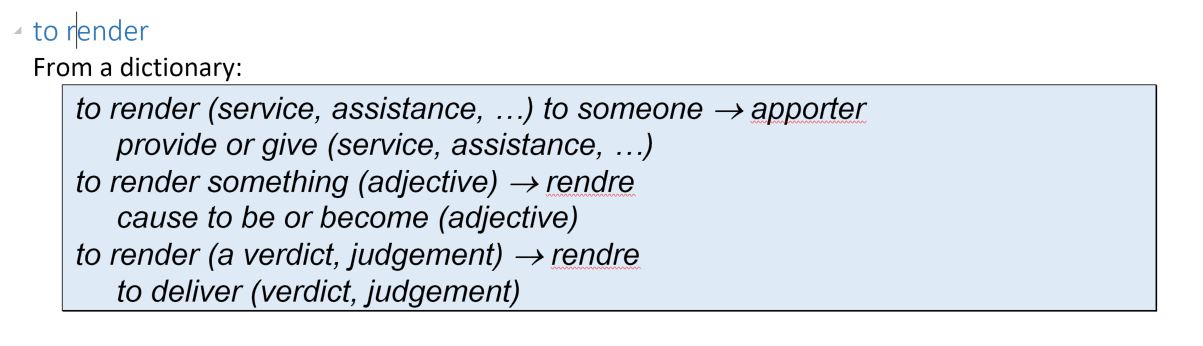

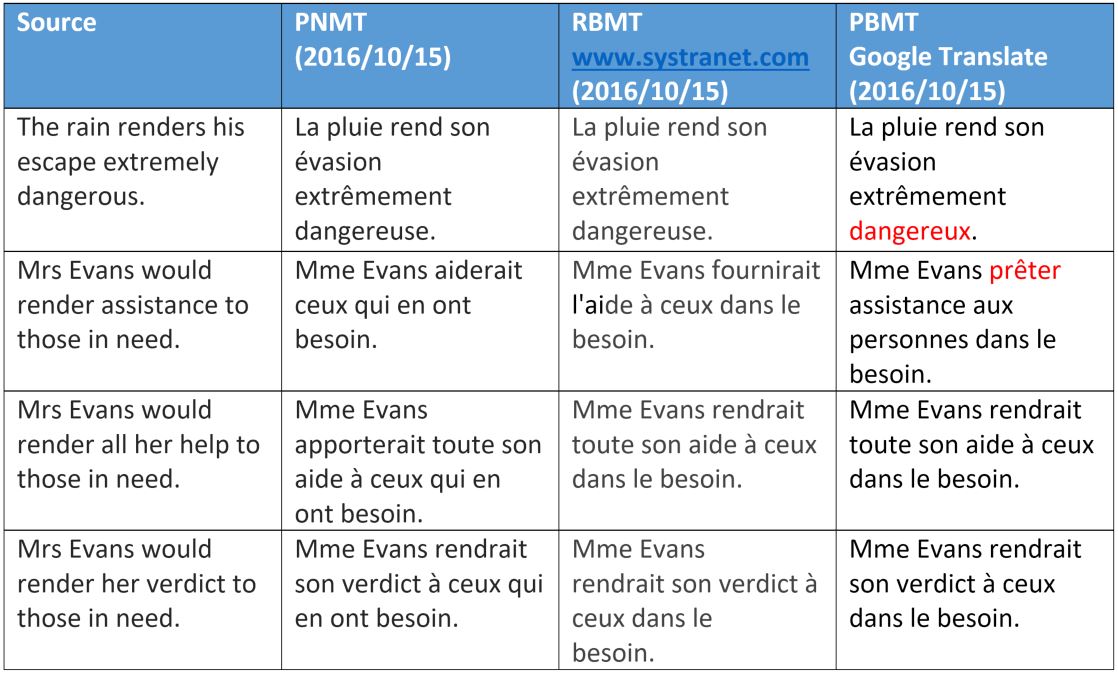

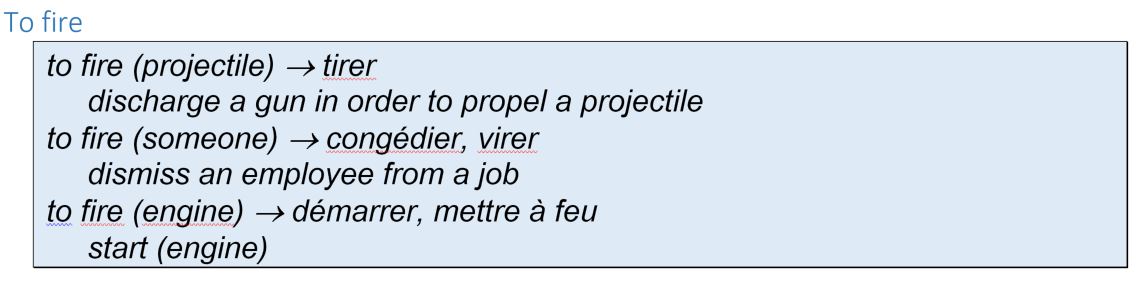

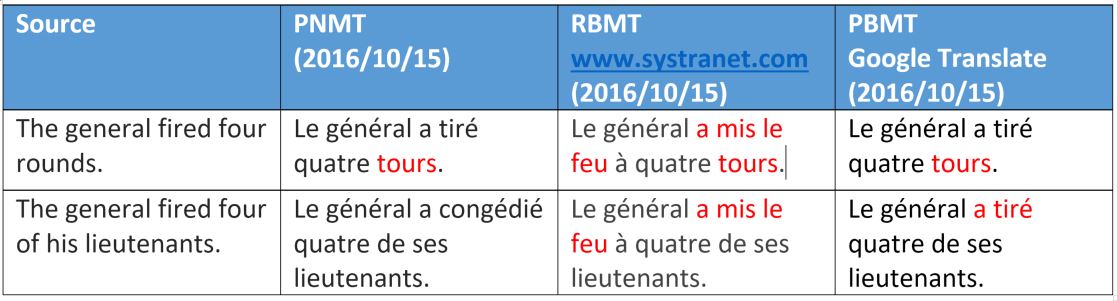

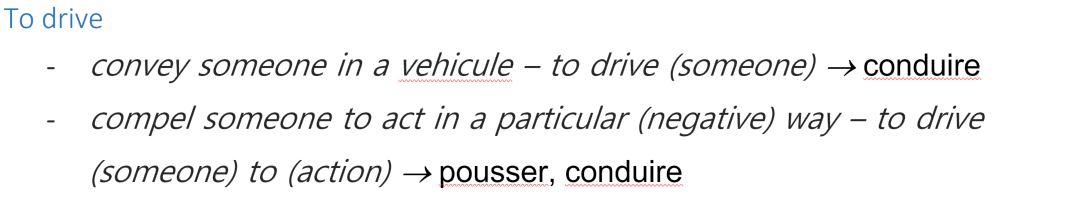

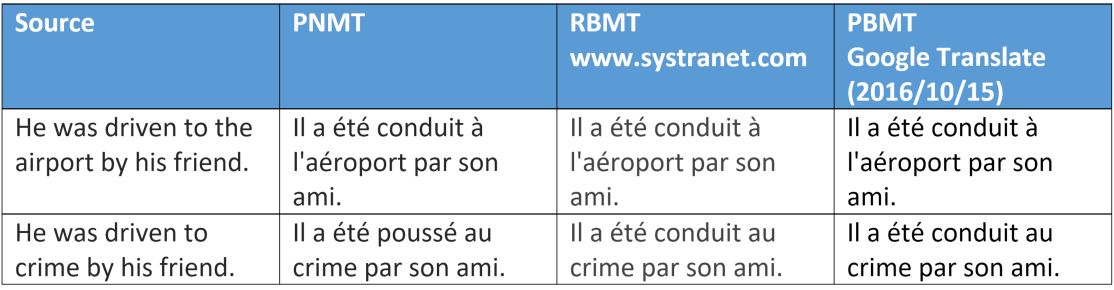

Let's look at some translation examples and discuss how and why some of the proposed translations fail for the different technologies. We chose several polysemous English verbs and studied their translation into French.

We see that phrase-based machine translation translates “render” with a single meaning, except in the very idiomatic “rendering assistance”. This is easy to explain: the choice of meaning depends either on the syntactic structure of the sentence or on the semantic category of the object.

In the case of neural machine translation, “help” and “assistance” are clearly handled correctly, which shows some superiority, as well as this method's apparent ability to capture syntactic relations between words that are far apart; we will look at this more closely in another publication.

This example again shows that neural machine translation captures semantic differences better than the other two methods (here they mainly concern animacy, that is, whether or not the word denotes a person).

However, note that the word “rounds”, which in this context means “bullets”, was translated incorrectly. We will explain this kind of interpretation in another article, on neural network training. As for rule-based translation, it recognized only the third meaning of “rounds”, which applies to artillery shells, not bullets.

Above is another interesting example of how, in neural translation, the choice among the semantic variants of a verb depends on its object when that object is unambiguous (crime or destination).

Other variants with the word "crime" showed the same result ...

The rule-based and phrase-based translators did not make mistakes either, since they used the same verb, which is acceptable in both contexts.

Source: https://habr.com/ru/post/334342/
