VESNIN Server: First Disk Subsystem Tests

While debugging a first-revision sample of the server, we ran a partial test of the speed of the I/O subsystem. Besides the test results themselves, I have tried to record observations that may be useful to engineers who design and configure application I/O.


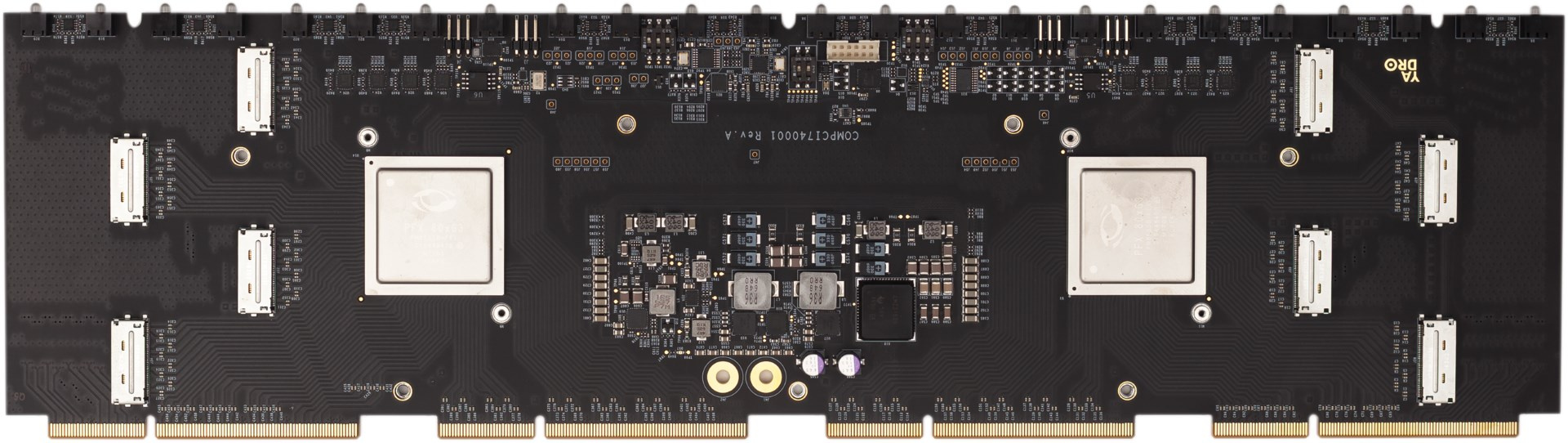

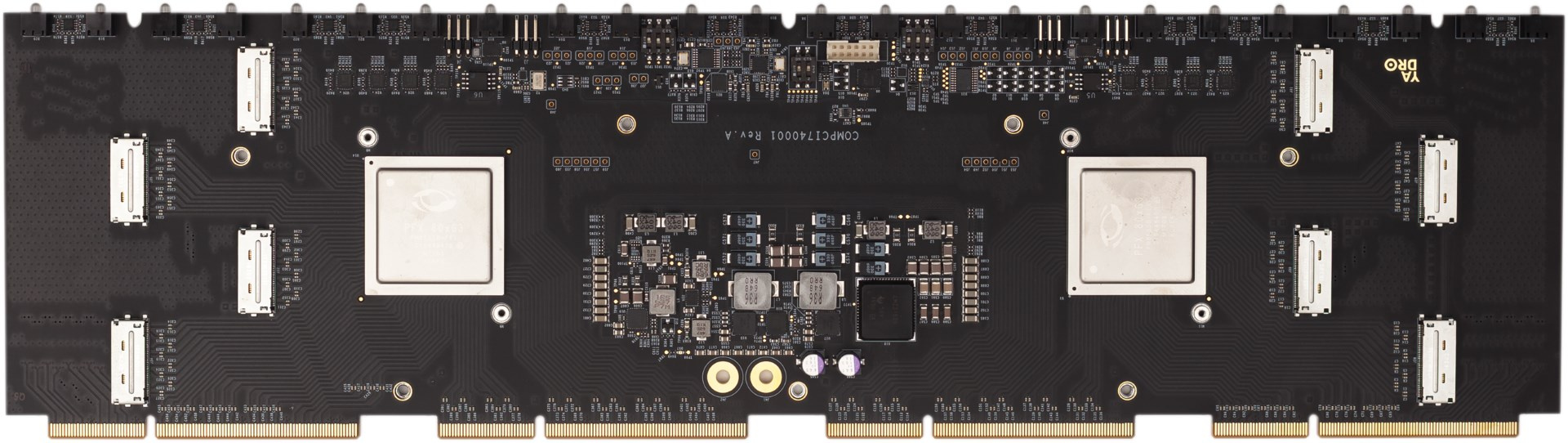


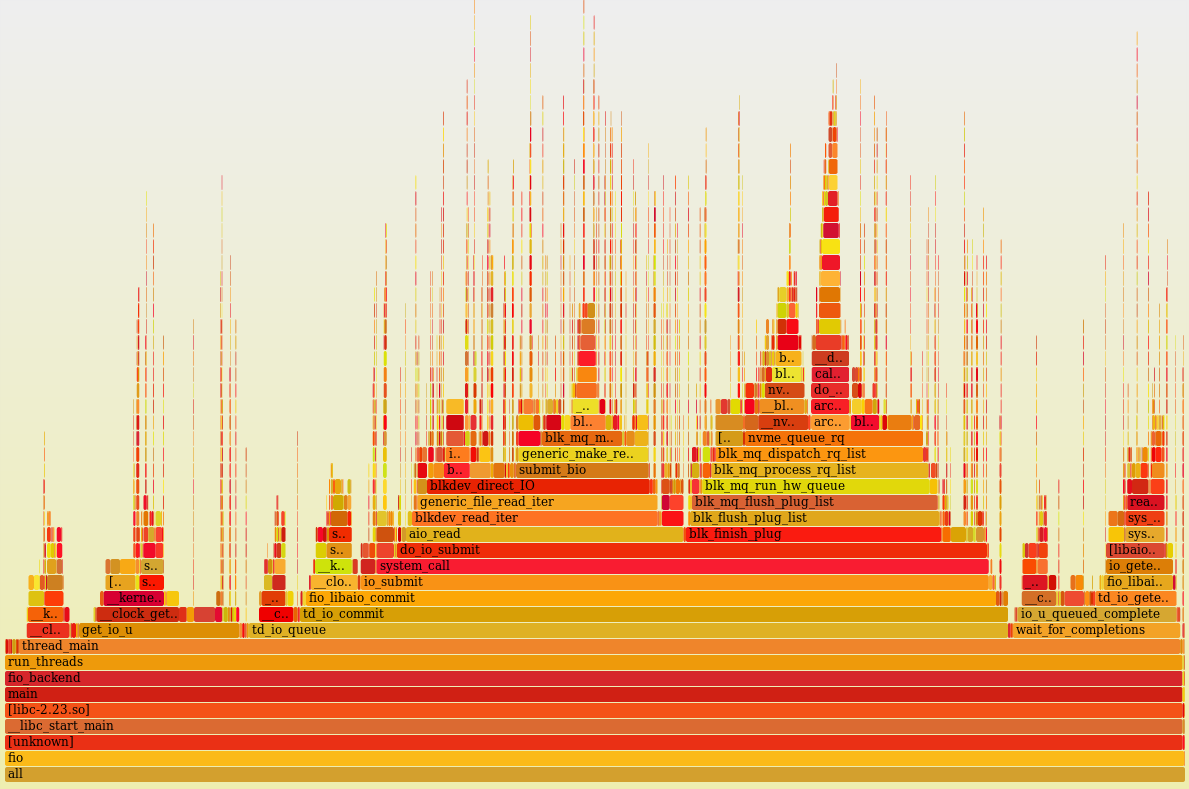

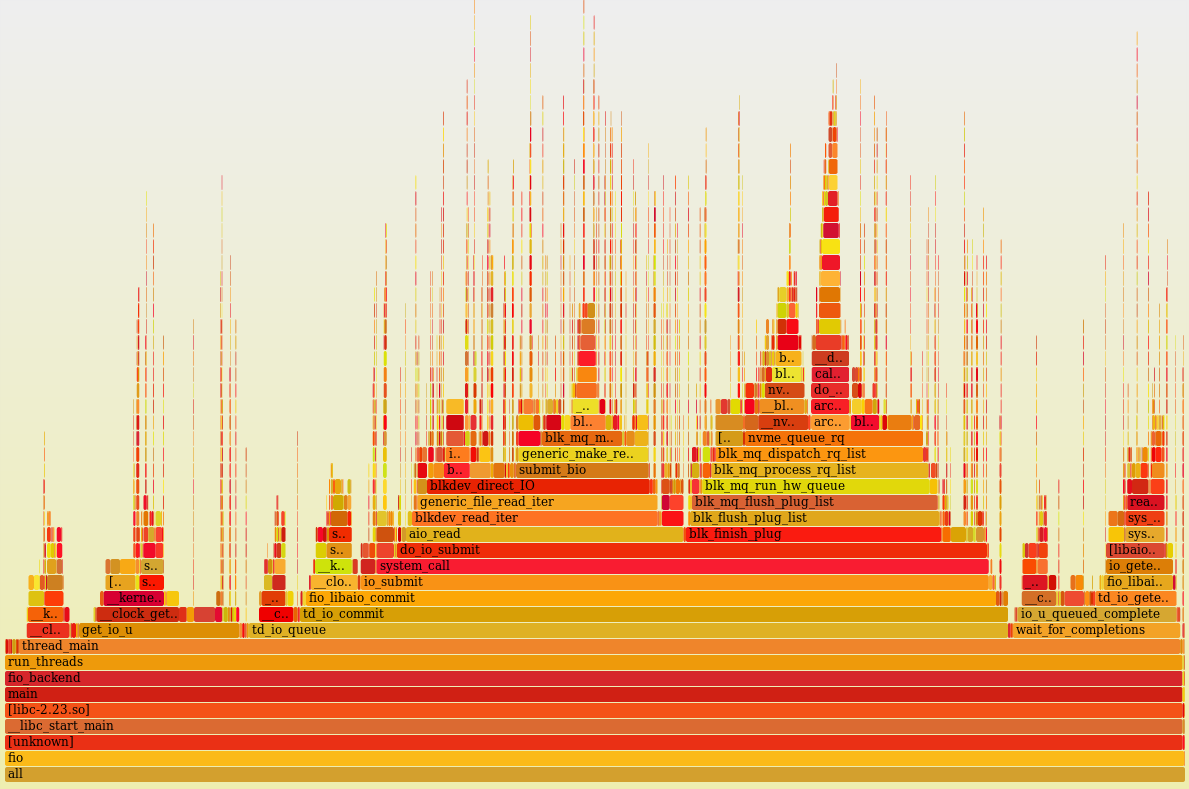


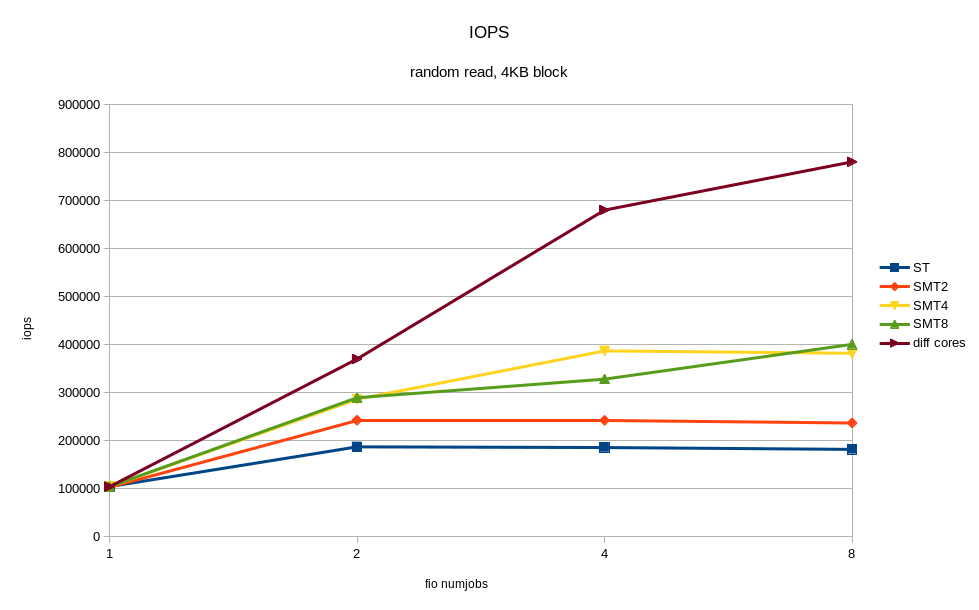

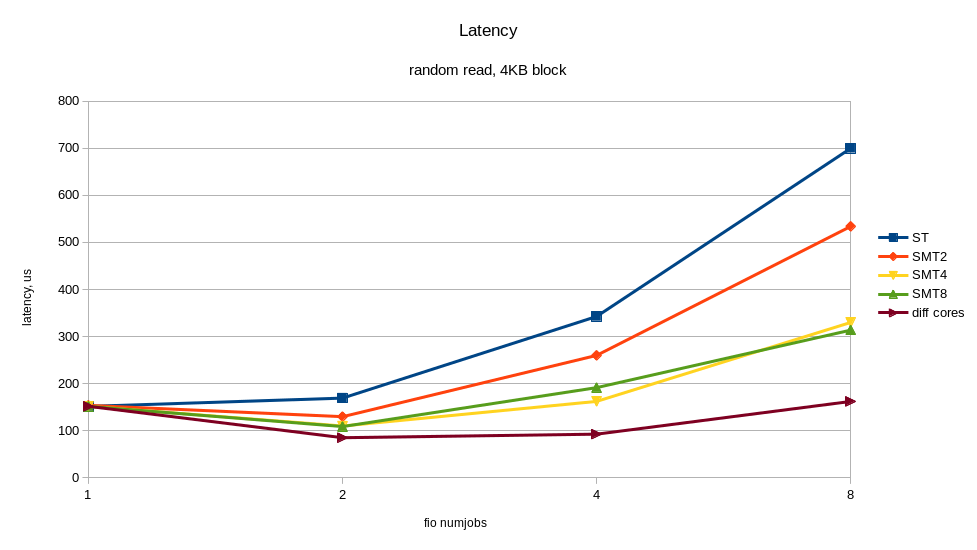

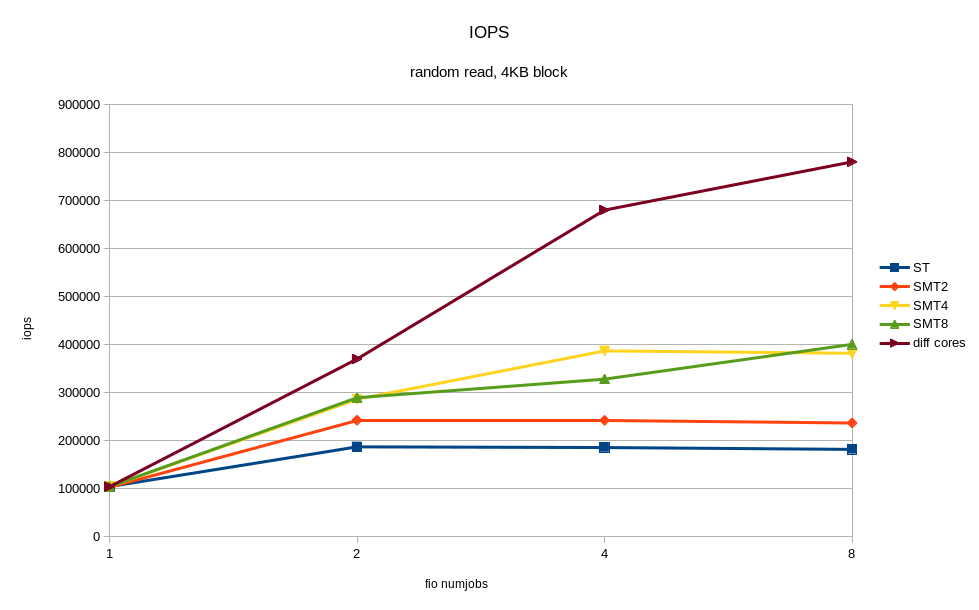


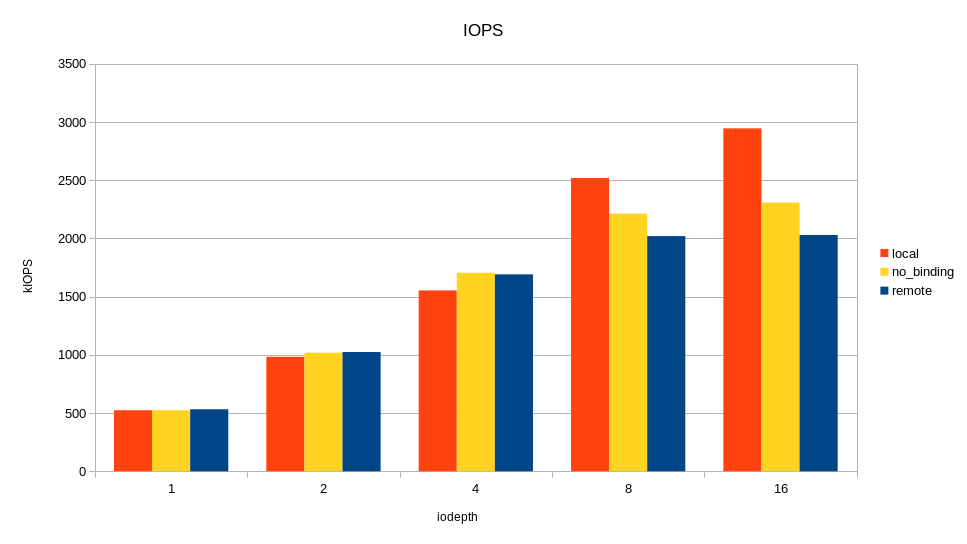

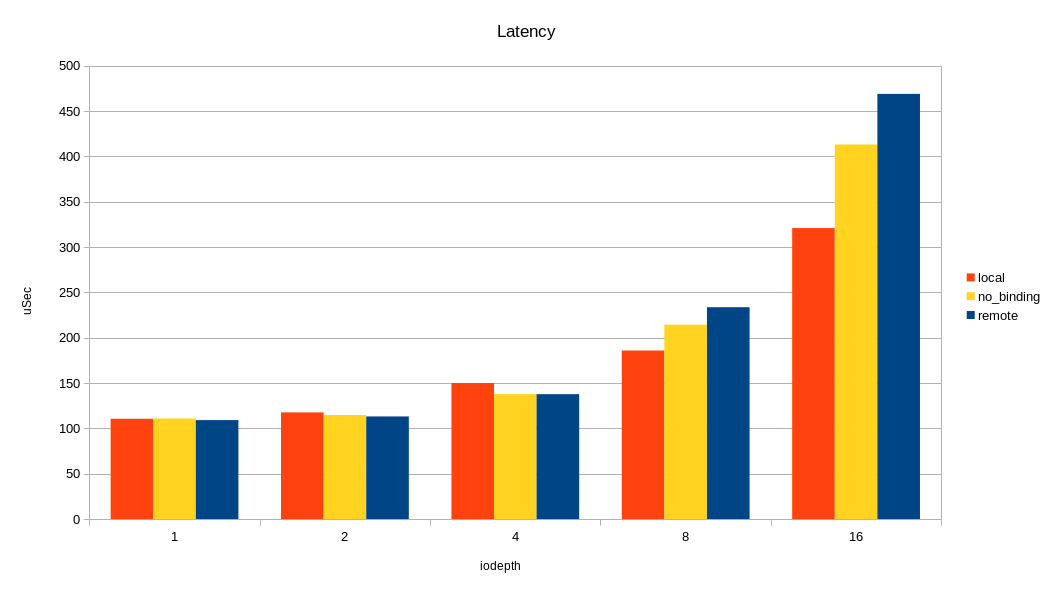

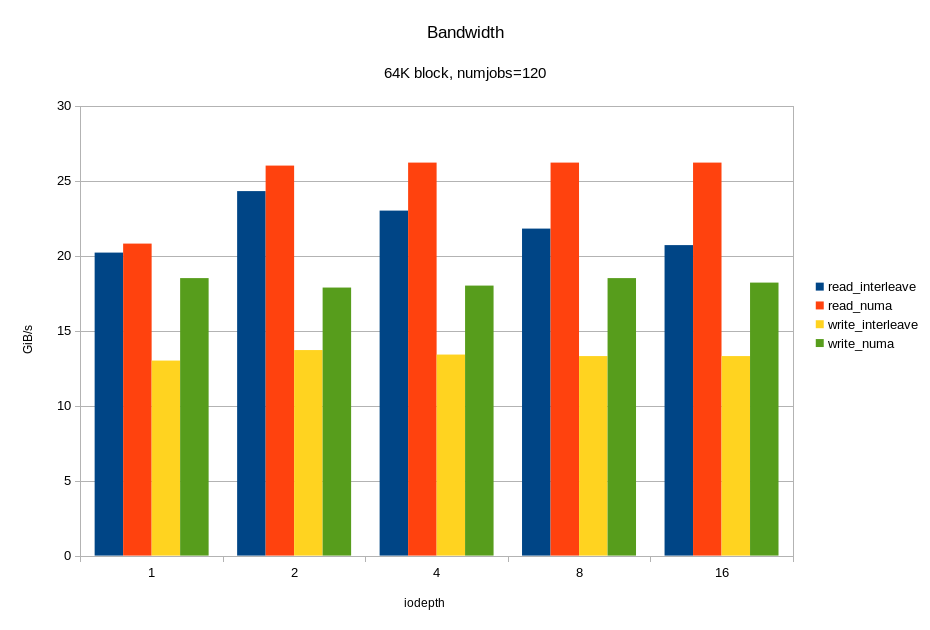

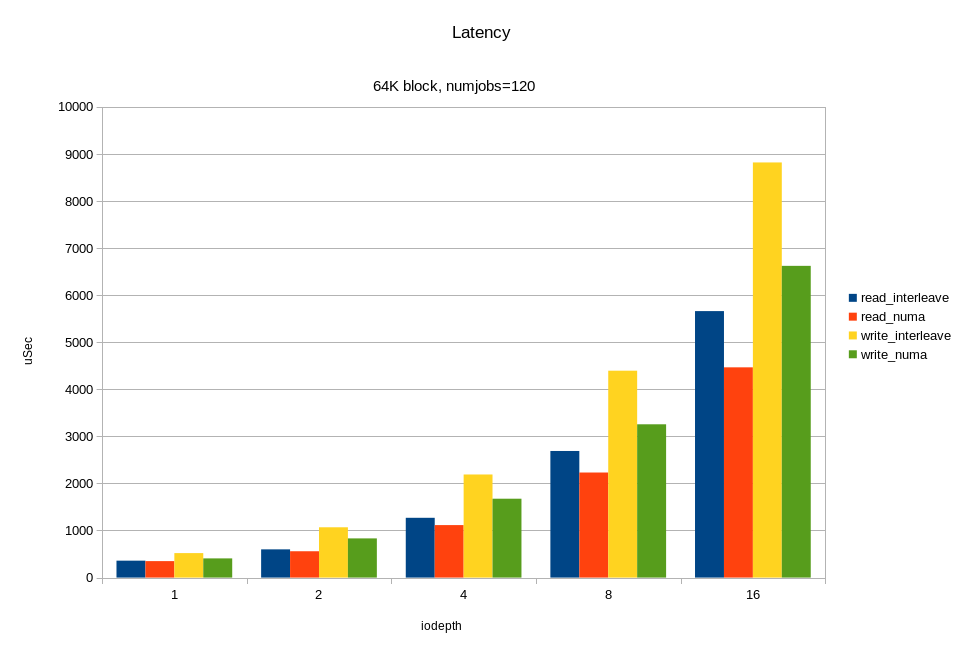

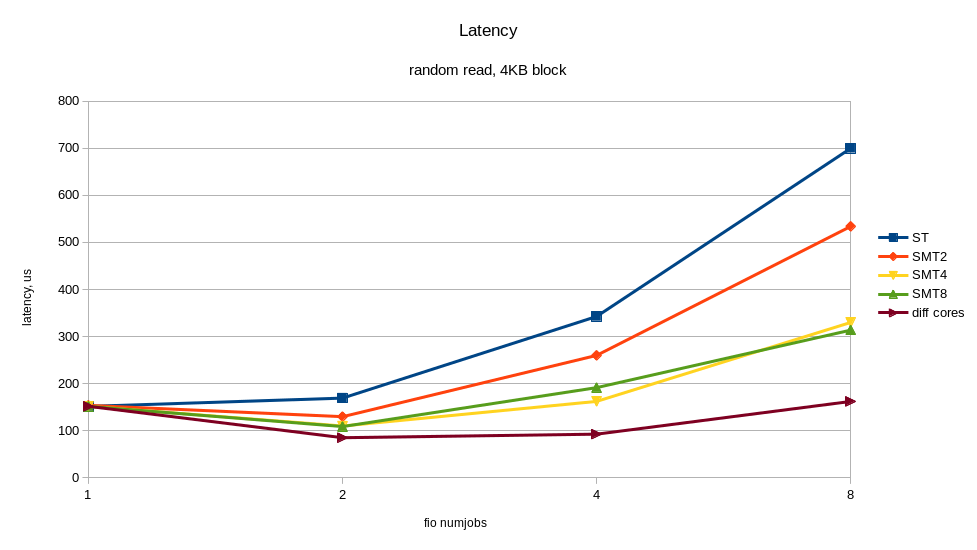

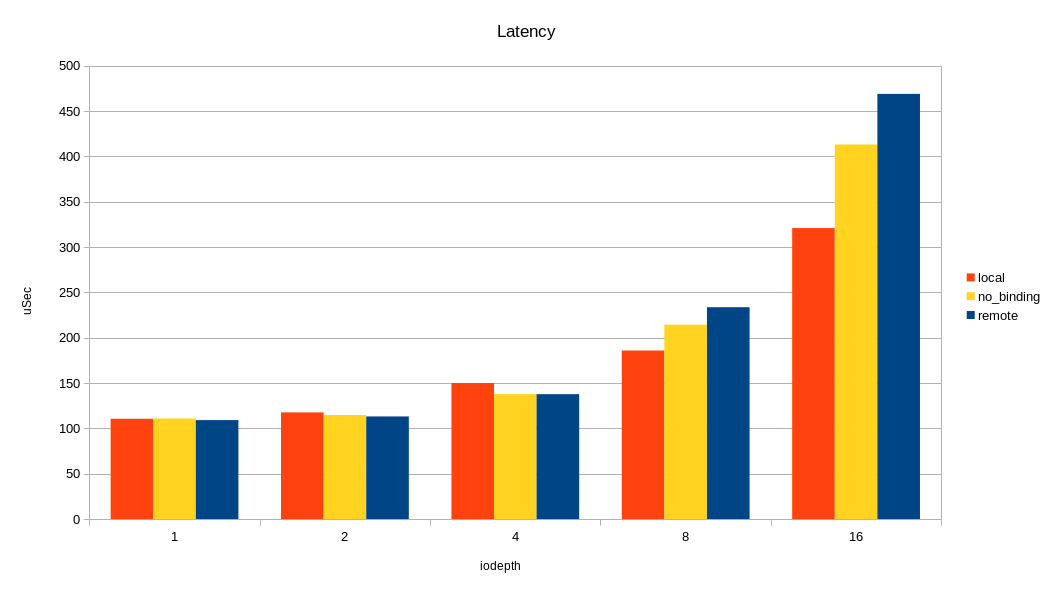

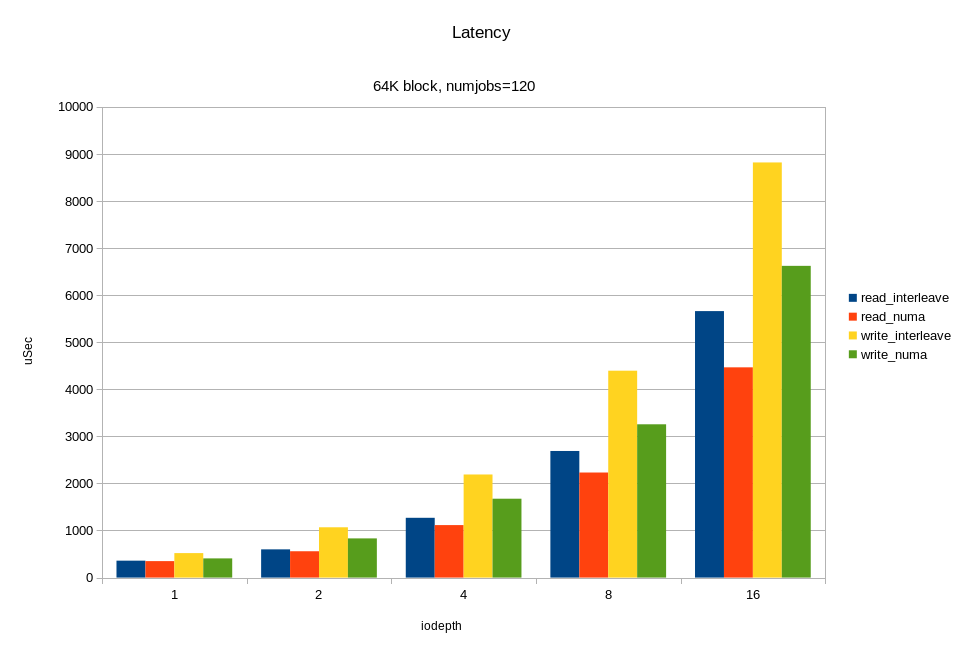


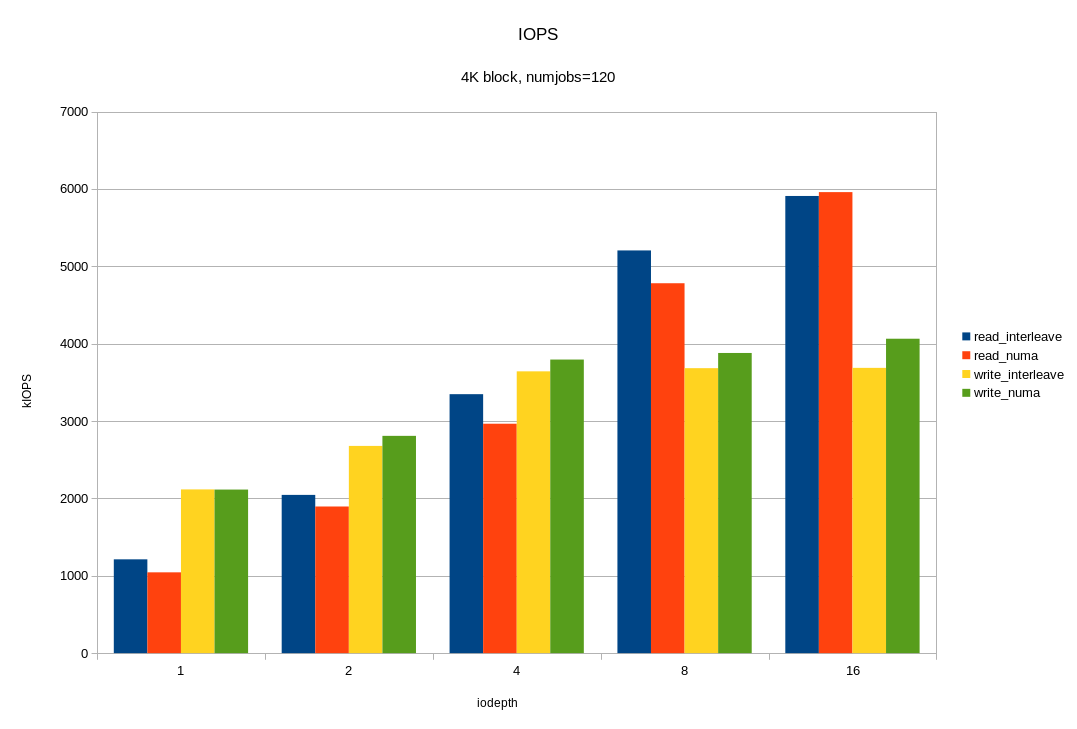

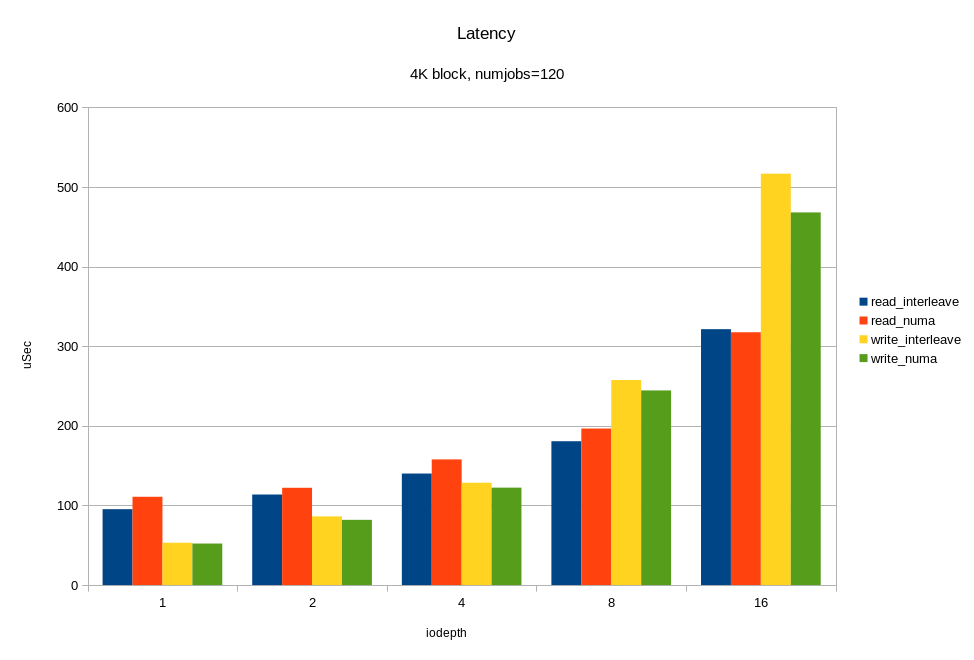

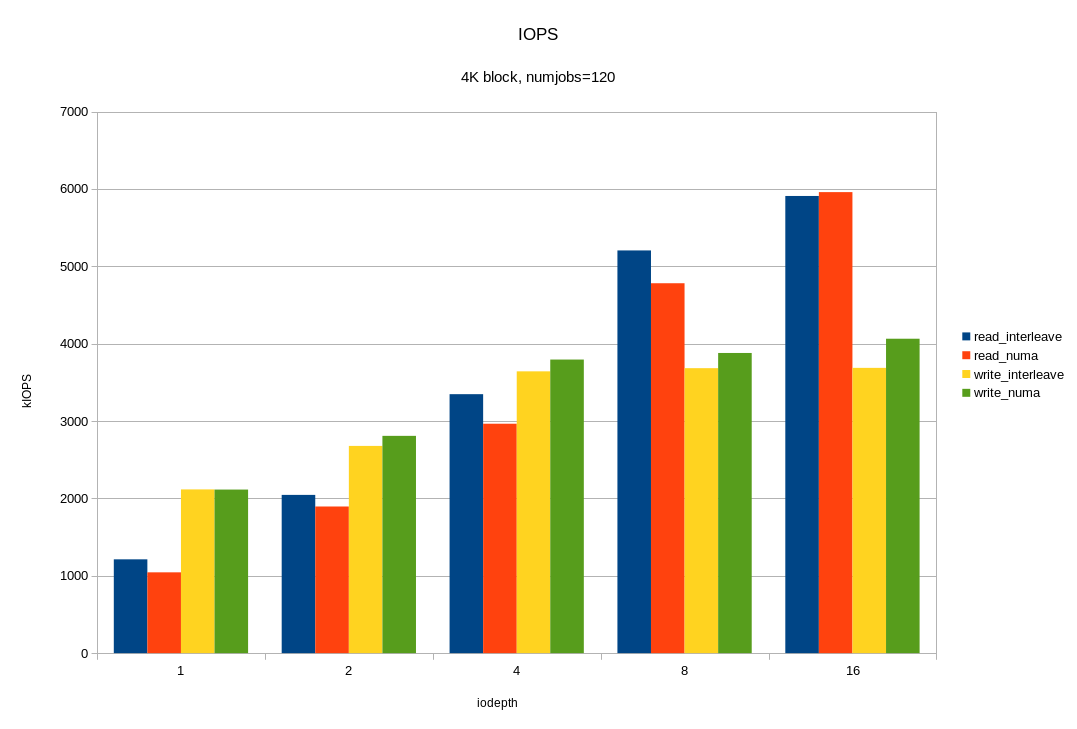

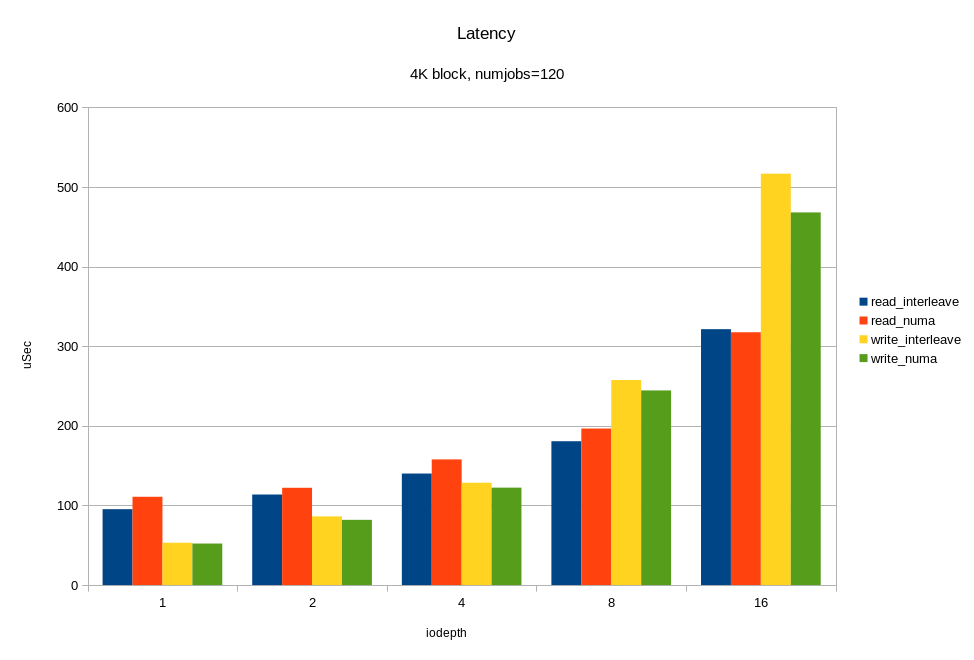

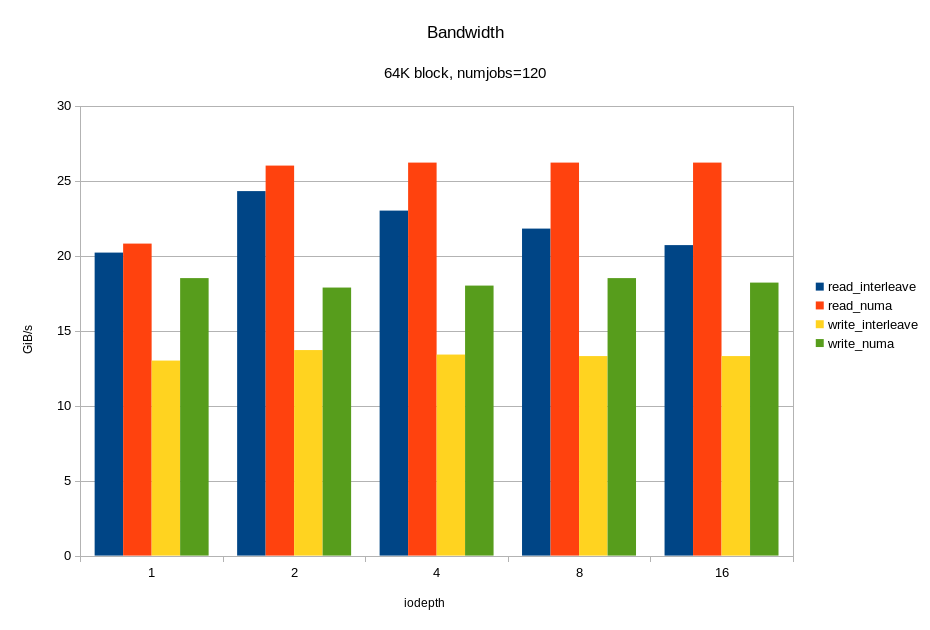


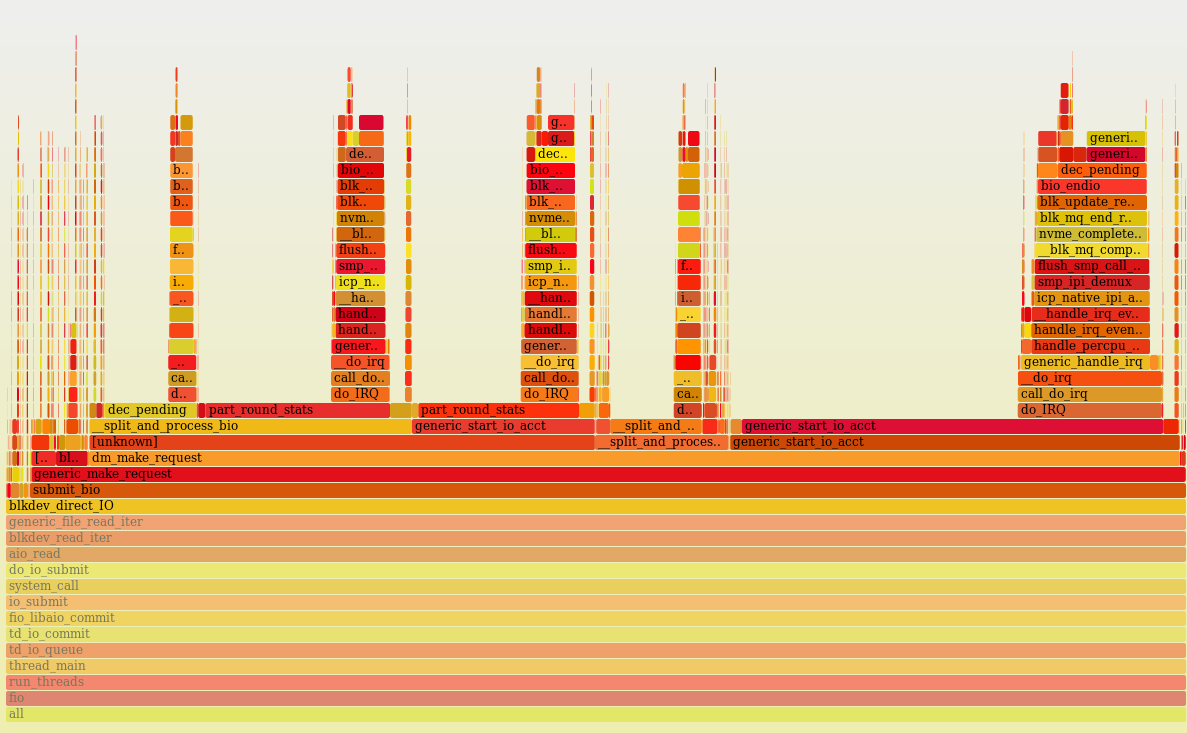

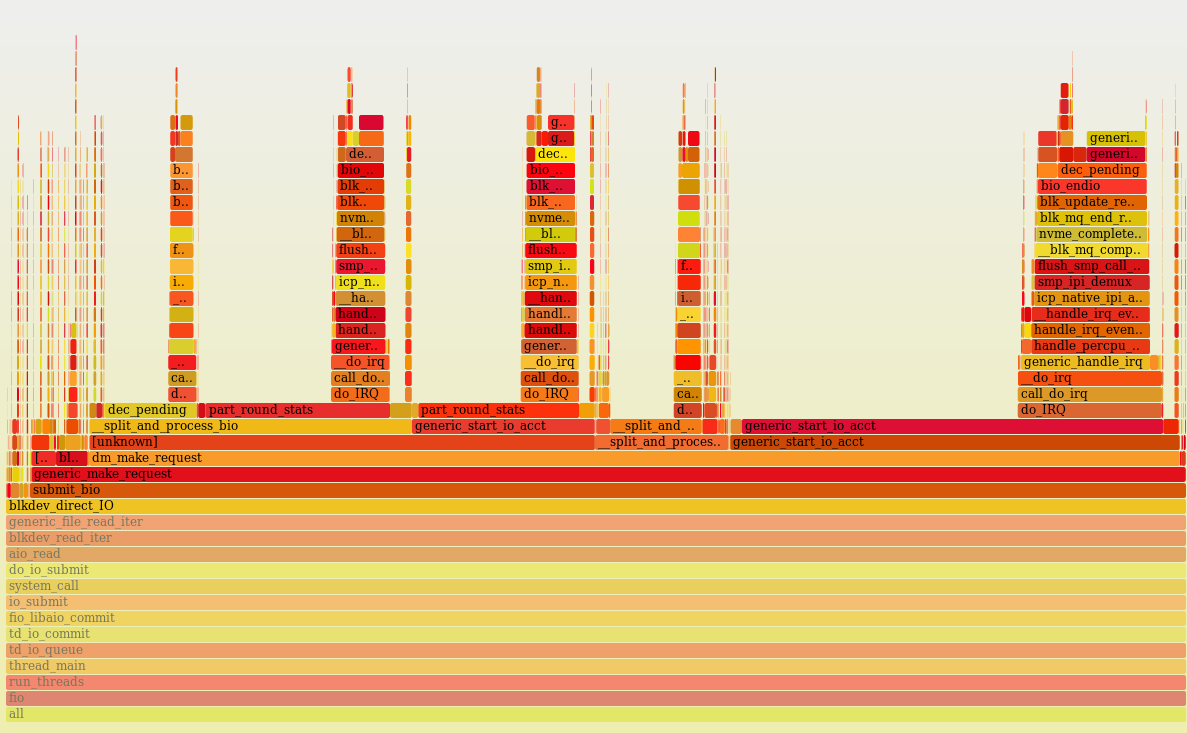


Key takeaways from the tests:

- The NVMe stack in Linux is lightweight; the processor is overloaded only under a heavy fio load of > 400K IOPS (4 KB, read, SMT8).

- An NVMe disk is fast in itself, and it is quite hard to saturate it from an application. Slow disks are no longer the problem; the limits may instead be the PCIe bus, the software I/O stack, and sometimes kernel resources. During the tests we almost never hit the limits of the disks. Intensive I/O loads all server subsystems heavily (memory and processor). For I/O-intensive applications it is therefore necessary to tune all of them: processors (SMT), memory (interleaving, for example), and the application (number of reading and writing processes, queue depth, binding to disks).

- If the planned load does not need much computation but is I/O-intensive with a small block, it is still better to take the POWER8 processors with the most cores available in the line, that is, 12.

- Additional software layers (such as Device Mapper) in the NVMe I/O stack can significantly reduce peak performance.

- With a large block (≥ 64 KB), binding the I/O load to the NUMA localities (processors) to which the disks are connected reduces disk response time and speeds up I/O, because for such a load the width of the bus from the processor to the disks matters.

- With a small block (~4 KB) things are less clear-cut. Binding the load to a locality carries a risk of uneven CPU utilization: you can simply overload the socket to which both the disks and the load are bound.

- In any case, when organizing I/O, especially asynchronous I/O, it is better to distribute the load across different cores using the Linux NUMA utilities.

- Using SMT8 greatly improves performance with a large number of reading and writing processes.

Historically, the I/O subsystem has been the slow part of a server. Traditional disks are mechanical, which made the spindle the slowest element of the whole computing system. With the advent of flash the I/O subsystem became fast, and with NVMe, very fast. What follows from this?

Test method and load

I'll start from afar. Our server can hold up to 4 processors (up to 48 POWER8 cores) and a lot of memory (up to 8 TB). This opens up many opportunities for applications, but a large amount of data in RAM also has to be stored somewhere persistent. The data must be read quickly from the disks and written back just as quickly. In the near future we expect the wonderful world of disaggregated non-volatile and shared memory. In that future there may be no need for a backing store at all: the processor will copy bytes straight from its registers into non-volatile memory with DRAM-like access times (tens of nanoseconds), and the memory hierarchy will lose one level. But that is for later; today data is usually stored on a block disk subsystem.

We define the initial conditions for testing:


The server has a relatively large number of cores, which is useful for processing large amounts of data in parallel. One of the priorities is therefore high throughput of the I/O subsystem under many parallel processes. Consequently, it makes sense to use a microbenchmark and run quite a lot of parallel threads.

In addition, the I/O subsystem is built on NVMe disks, which can process many requests in parallel, so we can expect a performance gain from asynchronous I/O. In other words, what interests us is high throughput under parallel processing, which matches the purpose of the server. Single-threaded performance and minimum response time are goals for future tuning, but they are not covered in this test.

The web is full of benchmarks of individual NVMe disks, and there is no point in adding more. In this article I look at the disk subsystem as a whole, so the disks are mostly loaded in groups. The load is 100% random reads and writes with different block sizes.

Which metrics do we look at?

With a small 4 KB block we look at IOPS (operations per second) and, secondarily, at latency (response time). On the one hand, the focus on IOPS is a legacy of hard drives, where random access with a small block caused the greatest delays. Today, all-flash systems can deliver millions of IOPS, often more than software can consume. “IOPS-intensive loads” are now valuable because they reveal how well the system is balanced in computing resources and where the bottlenecks in the software stack are.

On the other hand, for some tasks what matters is not the number of operations per second but the maximum throughput with a large block (≥ 64 KB): for example, when data is flushed from memory to disks (a database snapshot) or when a database is loaded into memory for in-memory computing, warming up the cache. For a server with 8 TB of memory, the throughput of the disk subsystem is especially important. With a large block we look at bandwidth, that is, megabytes per second.
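The two metrics are linked by the block size, so one is easy to convert into the other. Below is a minimal Python sketch of that conversion; the function name is illustrative, and the sample inputs are the single-disk and 12-disk IOPS figures reported in this article.

```python
def iops_to_bandwidth(iops, block_bytes):
    """Return (MB/s, MiB/s) for a given IOPS rate and block size in bytes."""
    bytes_per_sec = iops * block_bytes
    return bytes_per_sec / 1e6, bytes_per_sec / 2**20


if __name__ == "__main__":
    for label, iops in (("one disk, one core", 133_000), ("12 disks", 6_000_000)):
        mb, mib = iops_to_bandwidth(iops, 4096)
        print(f"{label}: {iops:,} IOPS x 4 KiB = {mb:,.0f} MB/s ({mib:,.0f} MiB/s)")
```

The distinction between MB/s and MiB/s matters when comparing tools: fio prints both, and they differ by about 5%.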

Built-in disk subsystem

The disk subsystem of the server can hold up to 24 NVMe disks. The drives are distributed evenly across the four processors by two PMC 8535 PCI Express switches. Each switch is logically divided into three virtual switches: one x16 and two x8. Each processor thus has a PCIe x16 link to its disks, or up to 16 GB/s, with 6 NVMe disks attached to it. In total, we expect up to 60 GB/s from all drives.
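As a rough cross-check of these figures, the sketch below estimates raw link bandwidth. It assumes PCIe Gen3 signalling (8 GT/s per lane with 128b/130b encoding), which the article does not state explicitly, and it ignores protocol overhead, so achievable throughput will be somewhat lower.

```python
GEN3_GT_PER_S = 8.0      # assumption: PCIe Gen3, 8 GT/s per lane
ENCODING = 128 / 130     # 128b/130b line encoding used by Gen3


def link_gb_per_s(lanes):
    """Raw one-direction bandwidth of a PCIe Gen3 link in GB/s."""
    return GEN3_GT_PER_S * ENCODING * lanes / 8  # bits -> bytes


per_socket = link_gb_per_s(16)
print(f"x16 link per socket:       {per_socket:.2f} GB/s")
print(f"four sockets:              {4 * per_socket:.1f} GB/s")
print(f"share per disk (6 on x16): {per_socket / 6:.2f} GB/s")
```

Under these assumptions the raw figures come out slightly below 16 GB/s per socket and slightly above 60 GB/s for four sockets, which is consistent with the numbers quoted above.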

For the tests I have a server sample with 4 processors (8 cores per processor; the maximum is 12). The disks are connected to two sockets out of four, that is, half of the maximum disk subsystem configuration. On the first-revision backplane with the PCI Express switches, two OCuLink connectors turned out to be faulty, so only half of the drives are available. This has already been fixed in the second revision, but here I could install only half of the disks, in the following configuration:

- 4 × Toshiba PX04PMB160

- 4 × Micron MTFDHAL2T4MCF-1AN1ZABYY

- 3 × INTEL SSDPE2MD800G4

- 1 × SAMSUNG MZQLW960HMJP-00003

The variety of disks comes from the fact that we are testing them at the same time, in order to build a list of standard components (disks, memory, etc.) from 2-3 manufacturers.

Load in the minimum configuration

To begin with, let's run a simple test in the minimum configuration: one disk (Micron MTFDHAL2T4MCF-1AN1ZABYY), one POWER8 core, and one fio process with a queue depth of 16.

    [global]
    ioengine=libaio
    direct=1
    group_reporting=1
    bs=4k
    iodepth=16
    rw=randread

    [ /dev/nvme1n1 P60713012839 MTFDHAL2T4MCF-1AN1ZABYY]
    stonewall
    numjobs=1
    filename=/dev/nvme1n1

It turned out like this:

    # numactl --physcpubind=0 ../fio/fio workload.fio
    /dev/nvme1n1 P60713012839 MTFDHAL2T4MCF-1AN1ZABYY: (g=0): rw=randread, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=16
    fio-2.21-89-gb034
    time 3233 cycles_start=1115105806326
    Starting 1 process
    Jobs: 1 (f=1): [r(1)][13.7%][r=519MiB/s,w=0KiB/s][r=133k,w=0 IOPS][eta 08m:38s]
    fio: terminating on signal 2
    Jobs: 1 (f=0): [f(1)][100.0%][r=513MiB/s,w=0KiB/s][r=131k,w=0 IOPS][eta 00m:00s]
    /dev/nvme1n1 P60713012839 MTFDHAL2T4MCF-1AN1ZABYY: (groupid=0, jobs=1): err= 0: pid=3235: Fri Jul 7 13:36:21 2017
       read: IOPS=133k, BW=519MiB/s (544MB/s)(41.9GiB/82708msec)
        slat (nsec): min=2070, max=124385, avg=2801.77, stdev=916.90
        clat (usec): min=9, max=921, avg=116.28, stdev=15.85
         lat (usec): min=13, max=924, avg=119.38, stdev=15.85
    ………...
      cpu : usr=20.92%, sys=52.63%, ctx=2979188, majf=0, minf=14

What do we see here? We got 133K IOPS with a response time of 119 µs. Note that CPU usage (usr + sys) is about 73%. That is a lot. What is the processor busy with?

We use asynchronous I/O, which simplifies the analysis of the results. fio reports slat (submission latency) and clat (completion latency) separately. The first covers the execution time of the system call that submits the read, up to its return to user space. In other words, all kernel overhead incurred before the request goes to the hardware is shown separately.

In our case slat is only 2.8 µs per request, but repeated 133,000 times per second it adds up: 2.8 µs × 133,000 = 372 ms. The processor thus spends 37.2% of its time on I/O submission alone. On top of that come the fio code itself, interrupts, and the asynchronous I/O driver.
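The same arithmetic as a small Python sketch, in case you want to apply it to your own fio results. The function name is illustrative; the inputs are the rounded slat and IOPS values from the two fio runs shown in this section.

```python
def submission_cpu_share(slat_ns, iops):
    """Fraction of one CPU spent in the I/O submission path."""
    return slat_ns * 1e-9 * iops


# (description, average slat in ns, IOPS) taken from the fio output
runs = [
    ("1 fio process", 2800, 133_000),
    ("2 fio processes", 3230, 188_000),
]
for name, slat_ns, iops in runs:
    share = submission_cpu_share(slat_ns, iops)
    print(f"{name}: {share:.1%} of one CPU spent on submission")
```

The share grows with IOPS, so at a fixed per-request cost the submission path alone sets an upper bound on what a single CPU can drive.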

Total processor load is 73%. It looks as if the core cannot handle a second fio process, but let's try:

    Starting 2 processes
    Jobs: 2 (f=2): [r(2)][100.0%][r=733MiB/s,w=0KiB/s][r=188k,w=0 IOPS][eta 00m:00s]
    /dev/nvme1n1 P60713012839 MTFDHAL2T4MCF-1AN1ZABYY: (g=0): rw=randread, bs=(R) pid=3391: Sun Jul 9 13:14:02 2017
       read: IOPS=188k, BW=733MiB/s (769MB/s)(430GiB/600001msec)
        slat (usec): min=2, max=963, avg= 3.23, stdev= 1.82
        clat (nsec): min=543, max=4446.1k, avg=165831.65, stdev=24645.35
         lat (usec): min=13, max=4465, avg=169.37, stdev=24.65
    …………
      cpu : usr=13.71%, sys=36.23%, ctx=7072266, majf=0, minf=72

With two processes the speed grew from 133k to 188k IOPS, but the core is overloaded: top shows 100% processor utilization, and clat has increased. So 188k is the limit for one core under this load. It is easy to show that the growth of clat is caused by the processor and not by the disk. Let's look at the output of biotop:

    PID    COMM  D  MAJ  MIN  DISK     I/O     Kbytes   AVGus
    3553   fio   R  259  1    nvme0n1  633385  2533540  109.25
    3554   fio   R  259  1    nvme0n1  630130  2520520  109.25

Because tracing was enabled, the speed dropped somewhat, but the response time of the disk, ~109 µs, is about the same as in the previous test. Measuring it another way (sar -d) gives the same numbers.
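The fio figures can also be sanity-checked with Little's law (requests in flight = throughput × latency). This check is not part of the original analysis; the sketch below simply plugs in the queue depth and the average lat values from the two fio runs above.

```python
def expected_iops(in_flight, latency_us):
    """Little's law: throughput = requests in flight / mean latency."""
    return in_flight / (latency_us * 1e-6)


# (description, processes x iodepth, average 'lat' in microseconds)
runs = [
    ("1 fio process", 1 * 16, 119.38),
    ("2 fio processes", 2 * 16, 169.37),
]
for name, in_flight, lat_us in runs:
    print(f"{name}: ~{expected_iops(in_flight, lat_us) / 1000:.0f}k IOPS")
```

The estimates land within about 1% of the 133k and 188k IOPS that fio reports. With the queue depth fixed, any latency added on the CPU side translates directly into a lower throughput ceiling, even though the disk itself still answers in about 109 µs.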

Out of curiosity, let's see what the processor is doing:

Load profile of a single core (perf + flame graph) with multithreading disabled and two fio processes running. As you can see, it is busy 100% of the time (idle = 0%).

Visually, processor time is spread more or less evenly over a large number of user functions (the fio code itself) and kernel functions (asynchronous I/O, the block layer, the driver; the many small peaks are interrupts). No single function consumes an abnormally large share of CPU time. This looks healthy, and browsing the stacks suggests nothing obvious to tune.

The effect of POWER8 multithreading on I / O speed

So, we have found that under active I/O load the processor is easy to overload. The utilization figure shows that the processor is busy from the point of view of the operating system, but it says nothing about how loaded the execution units of the processor are. In particular, a processor may look busy while it is waiting for external components such as memory. Working out how efficiently the processor is used is not our goal here, but to gauge the tuning potential it is interesting to look at the CPU counters, which are partially accessible through perf.

    root@vesninl:~# perf stat -C 0

     Performance counter stats for 'CPU(s) 0':

           2393.117988      cpu-clock (msec)          #    1.000 CPUs utilized
                 7,518      context-switches          #    0.003 M/sec
                     0      cpu-migrations            #    0.000 K/sec
                     0      page-faults               #    0.000 K/sec
         9,248,790,673      cycles                    #    3.865 GHz                      (66.57%)
           401,873,580      stalled-cycles-frontend   #    4.35% frontend cycles idle     (49.90%)
         4,639,391,312      stalled-cycles-backend    #   50.16% backend cycles idle      (50.07%)
         6,741,772,234      instructions              #    0.73  insn per cycle
                                                      #    0.69  stalled cycles per insn  (66.78%)
         1,242,533,904      branches                  #  519.211 M/sec                    (50.10%)
            19,620,628      branch-misses             #    1.58% of all branches          (49.93%)

           2.393230155 seconds time elapsed

The output above shows an IPC (insn per cycle) of 0.73, which is not so bad, though in theory POWER8 can reach up to 8. In addition, 50% “backend cycles idle” (the PM_CMPLU_STALL metric) may mean waiting for memory. So the processor is busy as far as the Linux scheduler is concerned, but its own resources are not heavily loaded. We can reasonably expect a performance gain from enabling multithreading (SMT) and increasing the number of threads. The graphs show what happened with SMT enabled: additional fio processes running on other hardware threads of the same core gave a significant speedup. For comparison, there is also a case where all the processes run on different cores (diff cores).
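The derived columns in the perf output are simple ratios of the raw counters. The sketch below recomputes them from the values printed above; the dictionary keys mirror the perf event names, and the helper name is illustrative.

```python
counters = {
    "cycles": 9_248_790_673,
    "instructions": 6_741_772_234,
    "stalled-cycles-frontend": 401_873_580,
    "stalled-cycles-backend": 4_639_391_312,
}


def ratios(c):
    """Derive IPC and stall fractions from raw perf counters."""
    cycles = c["cycles"]
    return {
        "ipc": c["instructions"] / cycles,
        "frontend_stall": c["stalled-cycles-frontend"] / cycles,
        "backend_stall": c["stalled-cycles-backend"] / cycles,
    }


for name, value in ratios(counters).items():
    print(f"{name}: {value:.4f}")
```

Keep in mind that perf multiplexes these events: the percentages in parentheses in its output show how much of the run each counter was actually active, so the ratios are estimates rather than exact values.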

The graphs show that enabling SMT8 almost doubles the speed and reduces the response time. Not bad: we squeeze > 400K IOPS out of a single core! Along the way we see that one core, even with SMT8 enabled, is not enough to load the NVMe disk fully. Spreading the fio processes across different cores almost doubles disk performance again; that is what a modern NVMe drive can do.

Thus, if the application architecture lets you adjust the number of reading and writing processes, it is best to match it to the number of physical or logical cores, to avoid slowdowns from overloaded processors. A single NVMe disk can easily overload a processor core. Enabling SMT4 or SMT8 increases performance by a significant factor and can be useful for I/O-intensive loads.
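To match the process count to the hardware, an application first needs to know how many hardware threads each core exposes. On Linux this can be read from sysfs. The sketch below parses the CPU-list format used by files such as /sys/devices/system/cpu/cpu0/topology/thread_siblings_list; the helper names are illustrative, not taken from any tool used in the tests.

```python
from pathlib import Path


def parse_cpu_list(text):
    """Parse a kernel CPU list such as '0-7' or '0,8,16-19' into ints."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def threads_per_core(cpu=0, sysfs="/sys/devices/system/cpu"):
    """Number of hardware threads sharing a core with the given CPU."""
    path = Path(sysfs) / f"cpu{cpu}" / "topology" / "thread_siblings_list"
    return len(parse_cpu_list(path.read_text()))


if __name__ == "__main__":
    print(parse_cpu_list("0-7"))  # an SMT8 core lists eight sibling threads
    try:
        print("threads per core:", threads_per_core())
    except OSError:
        print("topology information is not available on this system")
```

Dividing the number of online logical CPUs by this value gives the physical core count, which is a reasonable starting point for the number of I/O processes.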

Impact of NUMA Architecture

For load balancing, the 24 internal NVMe disks of the server are connected evenly to the four processor sockets. For each disk there is therefore a “native” socket (its NUMA locality) and “remote” ones. If an application accesses disks from a remote socket, the interprocessor bus may add overhead. We decided to see how access from a remote socket affects the resulting disk performance. For the test we run fio again and use numactl to bind the fio processes to a single socket: first the native one, then a remote one. The purpose is to understand whether NUMA tuning is worth the effort and what effect to expect. On the graph I compare against only one of the three remote sockets, because there was no difference between them.

Fio configuration:

- 60 processes (numjobs). The number comes from the hardware configuration: the test sample has 8-core processors with SMT8 enabled, so from the point of view of the operating system 64 processes can run on each socket. In other words, I shamelessly fitted the load to the hardware capabilities.

- block size: 4 KB

- load type: 100% random read

- load target: the 6 disks connected to socket 0.

Varying the queue depth, I observed the bandwidth and response time while running the load on the local socket, on a remote socket, and with no socket binding at all.
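The setup above can be sketched as a small generator for the fio job file and the matching numactl command lines. Only numjobs, the block size and the access pattern come from the list above. The device names, the job name, the job-file name and the use of --membind in addition to --cpunodebind are my assumptions, not the exact commands used in the test.

```python
def fio_job(devices, iodepth, numjobs=60, bs="4k"):
    """Return the text of a fio job file for a random-read test."""
    lines = [
        "[global]",
        "ioengine=libaio",
        "direct=1",
        "group_reporting=1",
        f"bs={bs}",
        f"iodepth={iodepth}",
        "rw=randread",
        "",
        "[numa-test]",  # hypothetical job name
        f"numjobs={numjobs}",
        # fio accepts several targets in one job, separated by ':'
        "filename=" + ":".join(devices),
    ]
    return "\n".join(lines) + "\n"


def numactl_cmd(node, job_file):
    """Command line that runs fio on the CPUs and memory of one node.

    node=None means no binding: the kernel places the processes itself.
    """
    cmd = ["fio", job_file]
    if node is not None:
        cmd = ["numactl", f"--cpunodebind={node}", f"--membind={node}"] + cmd
    return cmd


if __name__ == "__main__":
    disks = [f"/dev/nvme{i}n1" for i in range(6)]  # hypothetical device names
    print(fio_job(disks, iodepth=16))
    for label, node in (("local", 0), ("remote", 1), ("unbound", None)):
        print(f"{label}: {' '.join(numactl_cmd(node, 'numa.fio'))}")
```

Generating the job file per queue depth keeps the three runs (local, remote, unbound) identical in everything except the binding, which is the only variable the test is meant to isolate.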

As the graphs show, the difference between the local and the remote socket is very noticeable under heavy load. The overhead appears at a queue depth of 16 (iodepth = 16), at > 2M IOPS with a 4 KB block (simply put, > 8 GB/s). One might conclude that NUMA deserves attention only for tasks that need high I/O bandwidth. But it is not that simple: in a real application, besides I/O, there will also be traffic on the interprocessor bus from accesses to memory in remote NUMA localities. As a result, the slowdown can set in at lower I/O traffic.

Performance under maximum load

And now the most interesting part. We load all 12 available disks at the same time. Taking the previous experiments into account, we do it in two ways:

- the kernel chooses which socket to run fio on without considering the physical connection;

- fio only works on the socket to which the disks are connected.

Here is what happened:

For random reads with a 4 KB block we got ~6M IOPS with a response time < 330 µs. With a 64 KB block we got 26.2 GB/s. We are probably hitting the limit of the x16 bus between the processor and the PCIe switch. Let me remind you that this is half of the hardware configuration! Again we see that under high load, binding I/O to the “home” locality has a good effect.

LVM Overhead

Handing whole disks to an application is usually inconvenient. One disk may be too little for the application, or too much. Often you want to isolate application components from each other with separate file systems. On the other hand, you want to balance the load evenly across the I/O channels. LVM comes to the rescue: disks are combined into volume groups, and the space is distributed among applications as logical volumes. With conventional spindles, or even disk arrays, LVM overhead is small compared with disk latency. With NVMe, the disk response time and the overhead of the software stack are of the same order of magnitude, so it is interesting to look at them separately.

I created an LVM volume group from the same disks as in the previous test and loaded an LVM volume with the same number of reading threads. The result was only 1M IOPS at 100% processor load. I profiled the processor with perf, and this is what came out:

In Linux, LVM uses Device Mapper, and the system evidently spends a lot of time computing and updating disk statistics in the generic_start_io_acct() function. I did not find a way to disable statistics collection in Device Mapper (in dm_make_request()). There is probably room for optimization here. In general, at this point Device Mapper can hurt performance under heavy IOPS load.

Polling

Polling is a new mode of operation for Linux I/O drivers, intended for very fast devices. I mention it only to explain why it was not tested in this review. What is new is that the process is not taken off the CPU while it waits for a response from the disk subsystem. Normally a context switch is performed while I/O is pending, and it is costly in itself: the overhead is estimated at a few microseconds (the kernel has to take one process off the CPU, pick the most worthy candidate to run next, and so on). With polling, interrupts can either be kept (only the context switch is removed) or eliminated completely. This method is justified under a number of conditions:

- high performance is required for single-threaded tasks;

- the main priority is the minimum response time;

- Direct IO is used (no file system cache);

- the processor is not a bottleneck for the application.

The downside of polling is increased processor load.

In the current Linux kernel (4.10 for me now), polling is enabled by default for all NVMe devices, but it is used only when the application explicitly asks for it for certain “especially important” I/O requests. To do so, the application sets the RWF_HIPRI flag in the preadv2() / pwritev2() system calls.

/* flags for preadv2/pwritev2: */
#define RWF_HIPRI 0x00000001 /* high priority request, poll if possible */

Since single-threaded applications are not the main topic of this article, polling is left for another time.
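For reference, here is roughly how an application could pass this flag. This is a minimal sketch, not something that was run in these tests: it uses Python's os.preadv wrapper around preadv2(), the helper name read_hipri is hypothetical, and it falls back to a plain positional read when the flag is unavailable. A real polling setup would also open the device with O_DIRECT and use aligned buffers, which is omitted here for brevity.

```python
import os


def read_hipri(fd, length, offset):
    """Read up to `length` bytes at `offset`, asking the kernel to poll for completion if it can."""
    buf = bytearray(length)
    # RWF_HIPRI is exposed by Python on Linux; use 0 (no flag) where it is missing.
    flags = getattr(os, "RWF_HIPRI", 0)
    try:
        n = os.preadv(fd, [buf], offset, flags)
    except (AttributeError, NotImplementedError, OSError):
        # No preadv on this platform, or the flag is not supported for this file:
        # fall back to an ordinary positional read without polling.
        return os.pread(fd, length, offset)
    return bytes(buf[:n])
```

The flag is only a hint. Whether the kernel actually polls depends on the device, the driver and how the file was opened.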

Conclusion

Although our test sample has only half of the disk subsystem configuration, the results are impressive: almost 6M IOPS with a 4KB block and more than 26 GB/s with a 64KB block. For a server's built-in disk subsystem, this looks more than convincing. The system looks balanced in terms of the number of cores, the number of NVMe disks per core and the width of the PCIe bus. Even with terabytes of memory, the entire volume can be read from the disks in a matter of minutes.
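The "matter of minutes" estimate is easy to check. A small sketch, assuming the maximum 8 TB of memory, the measured 26.2 GB/s, and decimal units (TB = 10^12 bytes, GB = 10^9 bytes); the function name is illustrative:

```python
def seconds_to_read(memory_tb, bandwidth_gb_s):
    """Time in seconds to stream `memory_tb` terabytes at `bandwidth_gb_s` gigabytes per second."""
    return memory_tb * 1e12 / (bandwidth_gb_s * 1e9)


if __name__ == "__main__":
    t = seconds_to_read(8, 26.2)
    print(f"{t:.0f} s, about {t / 60:.1f} min")
```

Under these assumptions, filling 8 TB takes a little over five minutes, provided the 64KB rate can be sustained for the whole transfer.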

The NVMe stack in Linux is lightweight: the processor becomes overloaded only under a heavy fio load of more than 400K IOPS (4KB, read, SMT8).

An NVMe disk is fast in itself, and it is quite difficult for an application to saturate it. Slow disks are no longer the problem, but the PCIe bus, the software I/O stack and sometimes kernel resources can become one. During the tests, the disks themselves were almost never the limit. Intensive I/O loads all server subsystems heavily (memory and processor). So for I/O-intensive applications, all server subsystems need to be configured: processors (SMT), memory (interleaving, for example) and applications (number of writing and reading processes, queue depth, binding to disks).

If the planned load does not need much computation but is I/O-intensive with a small block, it is still better to take the POWER8 processors with the largest number of cores in the available line, that is, 12.

Adding extra software layers (such as Device Mapper) to the NVMe I/O stack can significantly reduce peak performance.

With a large block (>= 64KB), binding the I/O load to the NUMA localities (processors) to which the disks are connected reduces the response time of the disks and speeds up I/O. The reason is that under such a load, the width of the bus from the processor to the disks matters.

With a small block (~4KB), things are less clear. When the load is bound to a locality, there is a risk of uneven CPU utilization. That is, you can simply overload the socket to which the disks are connected and the load is bound.

In any case, when organizing I/O, especially asynchronous I/O, it is better to distribute the load across different cores using the NUMA utilities in Linux.

Using SMT8 greatly improves performance with a large number of writing / reading processes.

Final thoughts on the topic.

Historically, the I/O subsystem has been slow. With the advent of flash it became fast, and with the advent of NVMe, very fast. Traditional disks are mechanical, which makes the spindle the slowest element of the computing system. What follows from this?

- First, the response time of such disks is measured in milliseconds, and they are many times slower than all other server components. As a result, the chance of hitting the limit of the bus or the disk controller is relatively low. It is much more likely that the problem will be in the spindles. It happens that one disk is loaded more than the others, its response time is slightly higher, and this slows down the entire system. With NVMe, the bandwidth of the disks is huge, so the bottleneck shifts elsewhere.

- Second, to minimize disk latency, the disk controller and the operating system use optimization algorithms, including caching, deferred writes and read-ahead. This consumes computational resources and complicates configuration. When the disks are fast from the start, much of this optimization is no longer needed. Rather, its goals change. Instead of reducing the waiting inside the disk, it becomes more important to reduce the delay on the way to the disk and to move a block of data from memory to disk as quickly as possible. The NVMe stack in Linux needs no configuration and is fast out of the box.

- And third, a joke about performance consultants. In the past, when a system slowed down, looking for the cause was easy and pleasant. Blame the storage system and you will most likely be right. Database consultants love doing this and are good at it. In a system with traditional disks you can always find some storage performance problem and puzzle the vendor with it, even if the database is slow for another reason. With fast drives, bottlenecks will increasingly shift to other resources, including the application. Life will get more interesting.

Source: https://habr.com/ru/post/334260/
