Inside Docker Networks: Linking Docker Swarm Containers and Overlay Networks

In a previous article, I explained how Docker uses Linux virtual interfaces and bridge interfaces to establish connectivity between containers across bridge networks. This time, I’ll explain how Docker uses vxlan technology to create overlay networks that are used in swarm clusters, as well as where to view and inspect this configuration. I will also explain how different types of networks solve different communication tasks for containers running in swarm clusters.

I assume that readers already know how to deploy swarm clusters and start services in Docker Swarm. At the end of the article, I also list several resources for exploring these topics and their context in more depth. As before, I look forward to your opinions in the comments.


Docker Swarm and Overlay networks

Overlay networks are used in clusters (Docker Swarm), where the virtual network that containers use spans several physical hosts running Docker. When you run a container on a swarm cluster (as part of a service), it is attached to several networks by default, and each of them serves a different communication need.

For example, I have a Docker Swarm cluster with three nodes:

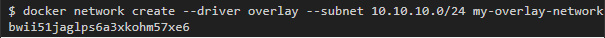

First, I will create an overlay network called my-overlay-network:
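As a minimal sketch, here is a small Python helper that assembles a docker network create command with the overlay driver. The 10.10.10.0/24 subnet is an assumption inferred from the container addresses that appear later, and the script only executes the command when given a --run argument (a convention of this sketch, not of Docker).

```python
import shlex
import subprocess
import sys


def overlay_create_cmd(name, subnet=None, attachable=False):
    """Build the argv for `docker network create` using the overlay driver."""
    cmd = ["docker", "network", "create", "--driver", "overlay"]
    if subnet is not None:
        # Pin the address range instead of letting Docker pick one.
        cmd += ["--subnet", subnet]
    if attachable:
        # Also let standalone containers (not only services) join the network.
        cmd.append("--attachable")
    cmd.append(name)
    return cmd


if __name__ == "__main__":
    # Assumed subnet, inferred from the 10.10.10.x addresses seen later.
    cmd = overlay_create_cmd("my-overlay-network", subnet="10.10.10.0/24")
    print(shlex.join(cmd))
    if "--run" in sys.argv:
        # Must be executed on a swarm manager node.
        subprocess.run(cmd, check=True)
```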

Next, I start a service whose container runs a simple web server published to the outside world on port 8080. The service has 3 replicas, and note that I attach it to only one network (my-overlay-network):

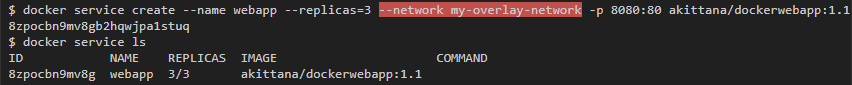
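A comparable sketch for the service: the helper builds a docker service create command with three replicas, one explicitly attached network, and port 8080 published to port 80 in the containers. The service name web and the image nginx:alpine are hypothetical placeholders, not the ones used in the article.

```python
import shlex
import subprocess
import sys


def service_create_cmd(name, image, replicas, network, published, target):
    """Build the argv for `docker service create` with one published port."""
    return [
        "docker", "service", "create",
        "--name", name,
        "--replicas", str(replicas),
        # The only network attached explicitly; Swarm adds the others
        # (ingress, docker_gwbridge) by itself.
        "--network", network,
        # Long-form publish syntax: port on the hosts -> port in the containers.
        "--publish", f"published={published},target={target}",
        image,
    ]


if __name__ == "__main__":
    # "web" and "nginx:alpine" are hypothetical stand-ins.
    cmd = service_create_cmd("web", "nginx:alpine", 3, "my-overlay-network", 8080, 80)
    print(shlex.join(cmd))
    if "--run" in sys.argv:
        subprocess.run(cmd, check=True)
```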

If you now list the interfaces available in any of the running containers, you will find three of them, even though a container attached to a single network would normally have only one:

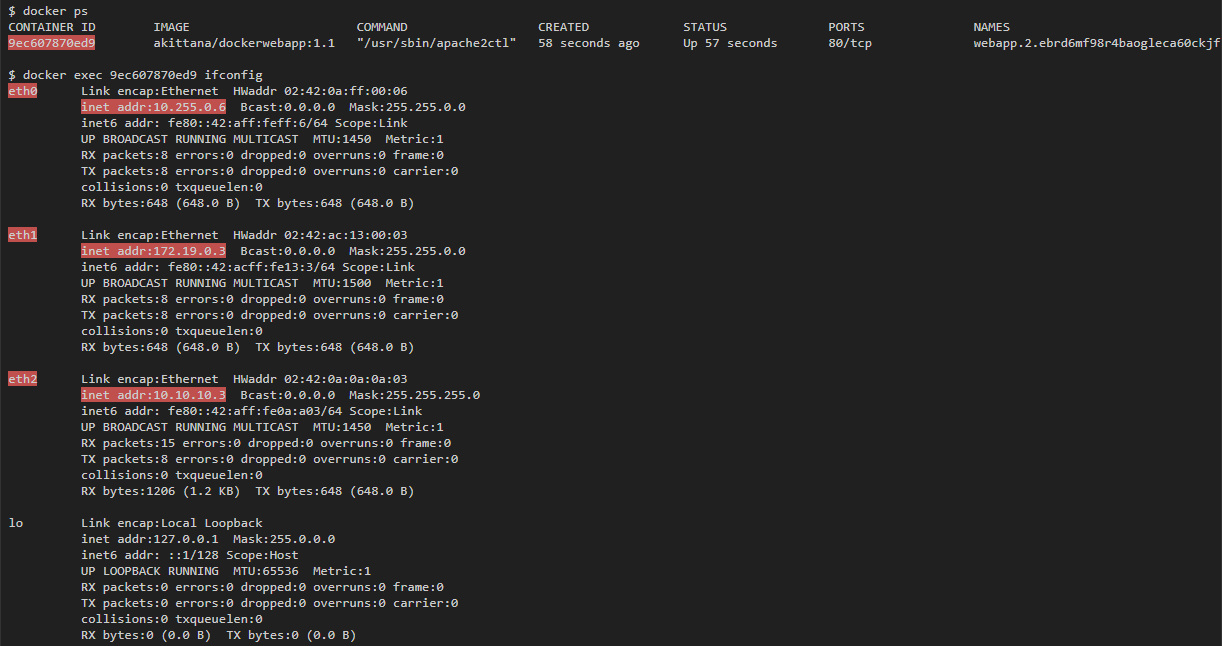

The container is connected to my-overlay-network through eth2, as its IP address shows; eth0 and eth1 are connected to other networks. Running docker network ls reveals two additional networks, docker_gwbridge and ingress, and their subnet addresses show that they correspond to eth0 and eth1:
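Matching interfaces to networks by subnet, as described above, is a simple containment check. The sketch below uses Python's ipaddress module; all subnets and interface addresses in it are hypothetical example values that should be replaced with the output of docker network inspect and ip addr on your own cluster.

```python
import ipaddress

# Hypothetical values: read the real ones from `docker network inspect`
# and from `ip addr` inside a container.
NETWORK_SUBNETS = {
    "ingress": "10.255.0.0/16",
    "docker_gwbridge": "172.18.0.0/16",
    "my-overlay-network": "10.10.10.0/24",
}
INTERFACE_IPS = {"eth0": "10.255.0.6", "eth1": "172.18.0.3", "eth2": "10.10.10.5"}


def network_for(ip, subnets):
    """Return the name of the network whose subnet contains ip, or None."""
    addr = ipaddress.ip_address(ip)
    for name, cidr in subnets.items():
        if addr in ipaddress.ip_network(cidr):
            return name
    return None


if __name__ == "__main__":
    for iface, ip in sorted(INTERFACE_IPS.items()):
        print(iface, ip, "->", network_for(ip, NETWORK_SUBNETS))
```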

Overlay

An overlay network creates a subnet that containers on different hosts of the swarm cluster can share. Containers on different physical hosts can exchange data over the overlay network as long as they are all attached to it.

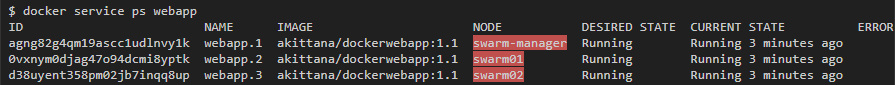

For example, for the web application that we launched, you can see one container on each host in the swarm cluster:

I can get each container's overlay IP address with ifconfig eth2 (eth2 is the interface attached to the overlay network).

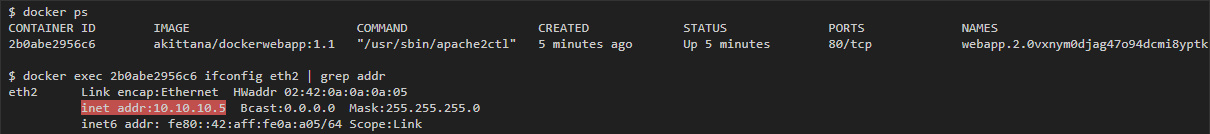

On swarm01:

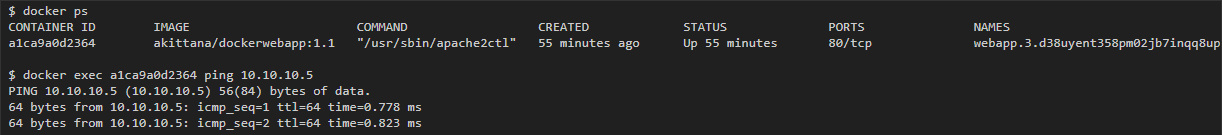

Then, from the container on swarm02, I should be able to ping 10.10.10.5 (the IP address of the container on swarm01):

vxlan

The overlay network uses vxlan technology, which encapsulates layer 2 frames in layer 4 packets (UDP/IP). This lets Docker build virtual networks on top of the existing connections between hosts, whether or not those hosts share a subnet. Endpoints on this virtual network see each other as if they were connected to the same switch, regardless of how the underlying physical network is laid out.

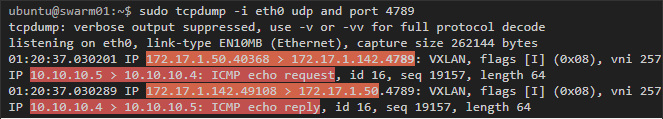
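To make the encapsulation concrete, here is a minimal sketch of the 8-byte VXLAN header defined in RFC 7348: a flags byte with the I bit set, reserved bytes, and a 24-bit VXLAN Network Identifier (VNI) that tells the receiving host which virtual network a frame belongs to. It only builds the UDP payload; which VNI values Docker actually assigns is not covered here, and the value used in the test is arbitrary.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def vxlan_header(vni):
    """Build the 8-byte VXLAN header from RFC 7348.

    Layout: flags (1 byte), reserved (3), VNI (3), reserved (1).
    """
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def vxlan_encapsulate(vni, inner_frame):
    """Return the UDP payload: VXLAN header followed by the layer 2 frame.

    The kernel then adds the outer UDP (port 4789) and IP headers that
    carry this payload between the two hosts.
    """
    return vxlan_header(vni) + inner_frame
```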

To see this process in action, you can capture traffic on the hosts that are part of an overlay network. In the previous example, capturing traffic on swarm01 or swarm02 reveals the ICMP traffic between the containers running on them (vxlan uses UDP port 4789):

The captured packets contain two layers: the outer one is the UDP vxlan tunnel traffic between the hosts on port 4789, and inside it is the ICMP traffic carrying the containers' IP addresses.
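The two layers can also be peeled apart programmatically. This sketch assumes it receives the UDP payload of a packet sent to port 4789 (for example, exported from a capture tool), with an untagged Ethernet frame and IPv4 inside; it returns the VNI together with the inner source, destination and protocol number (1 means ICMP).

```python
import ipaddress
import struct

ETHERTYPE_IPV4 = 0x0800


def decode_vxlan(payload):
    """Split a VXLAN UDP payload into its outer and inner layers.

    Assumes an untagged Ethernet frame carrying IPv4 inside the tunnel.
    """
    if len(payload) < 8 + 14 + 20:
        raise ValueError("too short for VXLAN + Ethernet + IPv4 headers")
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & 0x08:
        raise ValueError("VXLAN I flag not set")
    frame = payload[8:]  # inner layer 2 frame
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype != ETHERTYPE_IPV4:
        raise ValueError(f"unsupported inner ethertype {ethertype:#06x}")
    ip = frame[14:]  # inner IPv4 header
    return {
        "vni": vni_word >> 8,
        "inner_src": str(ipaddress.IPv4Address(ip[12:16])),
        "inner_dst": str(ipaddress.IPv4Address(ip[16:20])),
        "inner_proto": ip[9],  # 1 = ICMP
    }
```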

Encryption

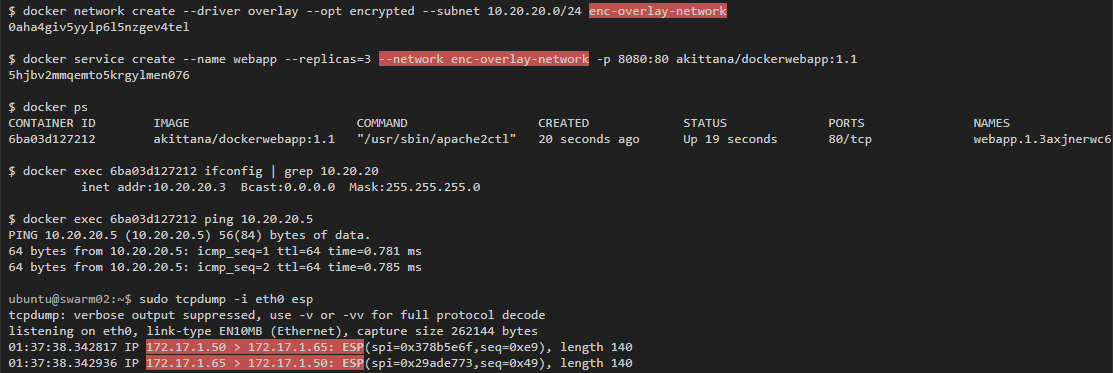

The traffic capture in the previous example shows that anyone who can see the traffic between hosts can also see the container traffic carried over the overlay network. That is why Docker offers an encryption option: adding --opt encrypted when creating a network enables automatic IPsec encryption of the vxlan tunnels.

If you run the same test over an encrypted overlay network, you will see only encrypted packets between the hosts:
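A sketch of the encrypted variant, under the same conventions as before: the network name is hypothetical, and the two pcap filter strings are what I would pass to tcpdump to compare the plain case (VXLAN on UDP port 4789) with the encrypted case, where the tunnel traffic appears as IPsec ESP (IP protocol 50).

```python
import shlex
import subprocess
import sys

# Capture filters (pcap syntax) for comparing the two cases on a host's
# physical interface, e.g. with tcpdump.
PLAIN_OVERLAY_FILTER = "udp port 4789"    # plain overlay: VXLAN is readable
ENCRYPTED_OVERLAY_FILTER = "ip proto 50"  # encrypted overlay: IPsec ESP


def encrypted_overlay_cmd(name):
    """Build the argv for an overlay network with IPsec-encrypted tunnels."""
    return ["docker", "network", "create", "--driver", "overlay",
            "--opt", "encrypted", name]


if __name__ == "__main__":
    # "my-encrypted-network" is a hypothetical name.
    cmd = encrypted_overlay_cmd("my-encrypted-network")
    print(shlex.join(cmd))
    if "--run" in sys.argv:
        subprocess.run(cmd, check=True)
```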

Inspecting Vxlan Tunnel Interfaces

As with bridge networks, Docker creates a bridge interface for each overlay network; this bridge connects the virtual tunnel interfaces that carry the vxlan traffic between hosts. However, these interfaces (the bridge and the vxlan interface) are not created directly in the host's own network namespace. Instead, they live in a separate network namespace that Docker creates for each overlay network.

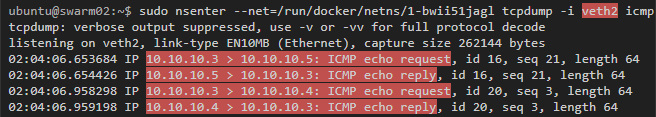

To inspect these interfaces, use nsenter to run commands inside the network namespace that holds the tunnels and virtual interfaces. Run it on a host that has containers participating in the overlay network.

You also need to edit /etc/systemd/system/multi-user.target.wants/docker.service on the host and comment out MountFlags=slave, as described in this discussion.
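Finding the right namespace to enter is easier with a small helper. This sketch assumes Docker keeps its network namespace handles under /var/run/docker/netns (the usual location on Linux hosts, but verify it on yours) and prints one nsenter --net=... command per namespace that runs ip -d link show, whose detailed output includes the vxlan interface parameters. Listing the directory and running nsenter both require root.

```python
import os
import shlex

# Assumed default location of Docker's network namespace handles.
DOCKER_NETNS_DIR = "/var/run/docker/netns"


def list_docker_netns(netns_dir=DOCKER_NETNS_DIR):
    """Return the namespace names in netns_dir, or [] if it is unreadable."""
    try:
        return sorted(os.listdir(netns_dir))
    except (FileNotFoundError, PermissionError):
        return []


def nsenter_cmd(ns_name, *command, netns_dir=DOCKER_NETNS_DIR):
    """Build an argv that runs `command` inside the given network namespace."""
    return ["nsenter", f"--net={os.path.join(netns_dir, ns_name)}", *command]


if __name__ == "__main__":
    for ns in list_docker_netns():
        # -d (details) shows vxlan parameters such as the VNI and UDP port.
        print(shlex.join(nsenter_cmd(ns, "ip", "-d", "link", "show")))
```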

Finally, capturing traffic on the veth interface shows the traffic leaving the container before it is sent into the vxlan tunnel (the ping from above is still running):

ingress

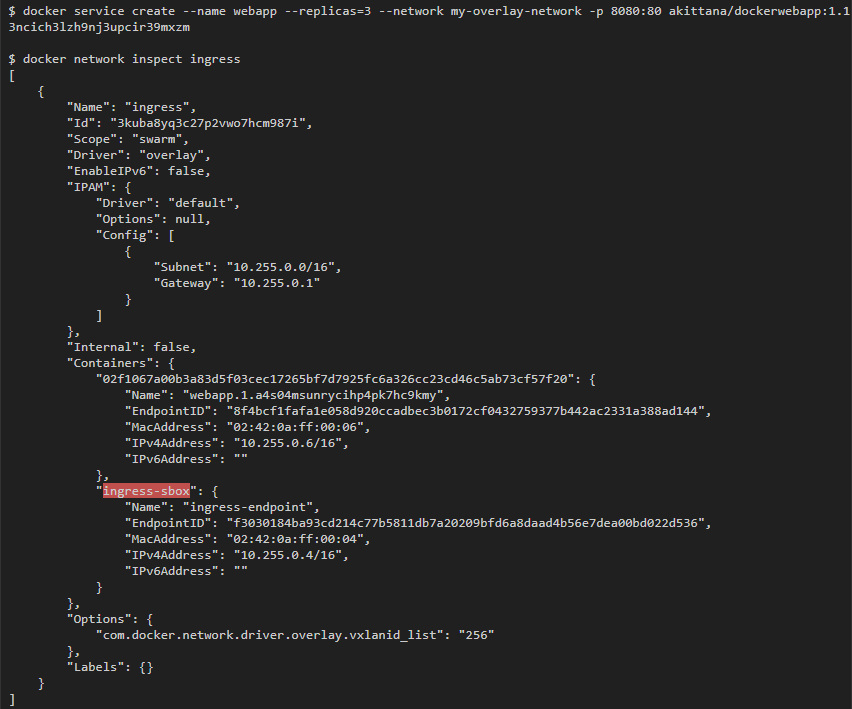

The second network the containers were attached to is the ingress network. It is also an overlay network, but Docker creates it by default as soon as the swarm cluster is initialized. This network handles connections from the outside world to the containers, and it is also where the swarm cluster's load balancing takes place.

Load balancing is performed by IPVS inside a special container that Docker Swarm runs by default. You can see that this container is attached to the ingress network (I used the same web service as before: it publishes port 8080, which maps to port 80 in the containers):

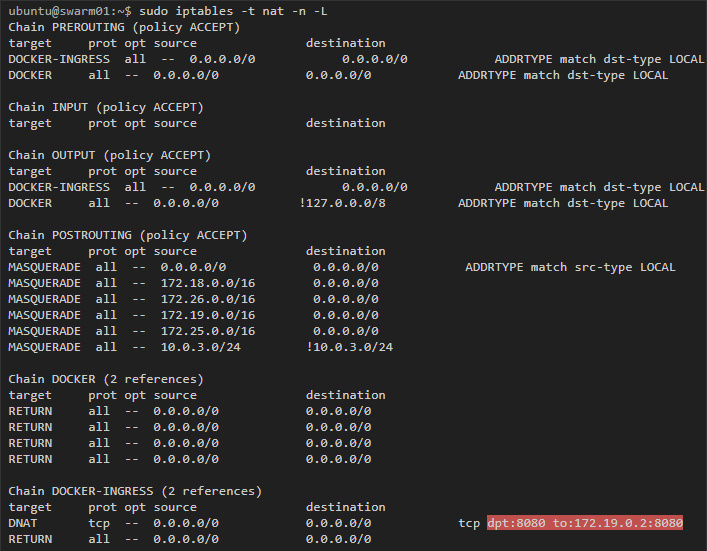

First, take a look at any host that participates in the swarm cluster:

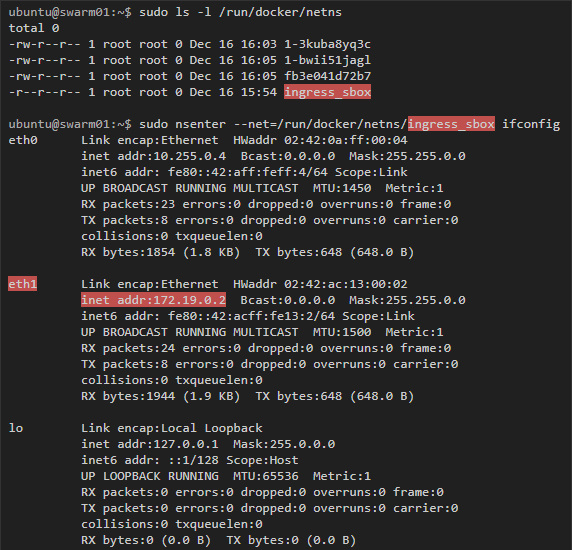

Here we see a rule that redirects traffic destined for port 8080 to 172.19.0.2. This address belongs to the ingress-sbox container; inspecting its interfaces gives the following:

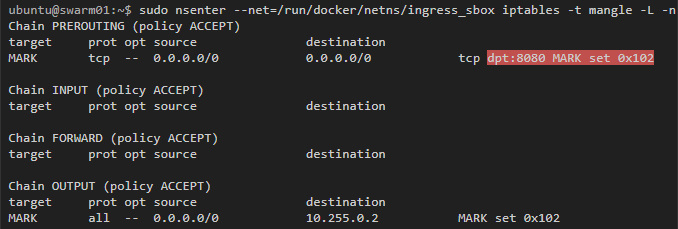

Docker uses iptables mangle rules to assign a specific number (a firewall mark) to packets destined for port 8080. IPVS then uses this mark to balance the load across the matching containers:
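The mark-then-balance pipeline can be illustrated with a toy model. This is a simulation, not Docker's code: it maps the published port to a firewall mark, as the mangle rule does, and then picks a backend for that mark in round-robin order, which I assume here as the IPVS scheduling method. The mark value and backend addresses are hypothetical.

```python
from itertools import cycle


class FwmarkBalancer:
    """Toy model of mangle-rule marking followed by IPVS scheduling.

    Step 1 (iptables mangle): a published port is mapped to a firewall mark.
    Step 2 (IPVS): the mark selects a virtual service whose backends are
    picked round-robin.
    """

    def __init__(self):
        self._mark_for_port = {}
        self._backends_for_mark = {}

    def add_service(self, published_port, fwmark, backends):
        self._mark_for_port[published_port] = fwmark
        self._backends_for_mark[fwmark] = cycle(list(backends))

    def route(self, dst_port):
        fwmark = self._mark_for_port[dst_port]        # mangle: tag the packet
        return next(self._backends_for_mark[fwmark])  # IPVS: pick a backend


if __name__ == "__main__":
    lb = FwmarkBalancer()
    # Hypothetical mark and ingress-network addresses of the 3 replicas.
    lb.add_service(8080, 0x100, ["10.255.0.5", "10.255.0.6", "10.255.0.7"])
    print([lb.route(8080) for _ in range(4)])
```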

How Docker swarm uses iptables and IPVS for load balancing containers can be further explored in the Deep Dive into Docker 1.12 Networking video.

docker_gwbridge

Finally, let's talk about the docker_gwbridge network. This is a bridge network with a corresponding interface called docker_gwbridge, which is created on each host of the swarm cluster. The docker_gwbridge network carries traffic from the swarm cluster's containers to the outside world. For example, this is the path a request takes when a container sends it to Google.

I won't go into detail here, since I covered bridge networks thoroughly in the previous article.

Conclusion

A container running on a swarm cluster can be connected to three or more networks by default. The first, docker_gwbridge, lets containers communicate with the outside world. The ingress network is needed only for incoming connections from the outside world. Finally, there are the overlay networks that users create themselves and attach to containers. Each such network provides a shared subnet in which its containers can exchange data directly, even when they run on different physical hosts.

Docker also creates several network namespaces by default on the swarm cluster. These namespaces manage the vxlan tunnels for overlay networks and the load-balancing rules for incoming connections.

Links / Resources


Source: https://habr.com/ru/post/334004/
