Release cycle for Infrastructure as Code

On the Internet you can find quite a few articles on Infrastructure as Code, SaltStack utilities, Kitchen-CI and so on. However, in the IaC examples I have seen, the configurations usually remain just code. As a rule they are split into VCS branches named after the environment type (dev / int, for example), sometimes with tags, and a full-fledged development cycle for configurations is usually out of the question. In any case, I have not found articles on this topic from the companies I know to be in this situation.

Perhaps that is understandable: total Agile, and "straight into production".

In this article I will try to remedy the situation.

Brief description of the work

In this article I will describe how we configure and manage the infrastructure of a platform made up of 20 different components, not counting the analytics unit, with a total of 70 "server units". Systems like this leave more than enough room for creativity and engineering.

To be honest, that is not a large number, especially when everything is configured and managed automatically. I could say more about the system, but frankly it is more of the same thing, just spread across more instances and with more "enterprise" (read: more crutches and workarounds).

Here is an example environment, just to set the scene.

The platform described here is managed entirely with SaltStack. New instances are, naturally, created and launched with the SaltCloud module. Every platform service has to be configured, of course, but the system services must not be forgotten either.

For example, the configuration of the most ordinary web server includes not only nginx / httpd and the site configuration itself, but also system services:

- network configuration

- ssh daemon configuration

- host authorization

- tcp_wrappers configuration

- iptables

- selinux / apparmor

- time synchronization

- logging

- audit

And do not forget the DNS records for the site, or issuing and re-issuing certificates.

If you describe the configuration for each service separately, you can go crazy, even if you just copy-paste it.

Fortunately, SaltStack, like most other configuration management systems, has reusable configuration blocks called Formulas. However, copying formulas, or pulling them directly from the repository, is not quite right from the standpoint of stability and reproducibility, even when you use a tag or a dedicated release branch. A tag can be rewritten and a branch gets updated. Tracking and pinning the correct revision is too difficult, because you have to maintain a mapping of inter-formula dependencies to revisions. Versioning of modules and dependencies was invented long ago for exactly this purpose. Of course, you could track a dependency version alongside the revision, but VCSs were invented to keep the history of file changes, not to pin release artifacts.

Also keep in mind the case where formulas must be used in a customer's environment. To put it mildly, it is not safe to open access to the company's repository server from the customer's environment.

SaltStack provides a component for working with versioned binary packages.

This component is called Salt Package Manager, or SPM for short. At its core, an SPM package is a bzip2-compressed tar archive with metadata, which is described by the FORMULA file. Note that a repository also needs a metadata file generated from all the artifacts stored in it; the principle is the same as for a Yum repository. SPM has a few shortcomings. It cannot list the formulas available in a repository, even though it downloads the metadata file that contains this information, and it cannot pin the version of a formula dependency. This is simply because SPM is still young: if memory serves, it appeared in version 2016.3.
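To make the package naming concrete: judging by the artifact name that appears later in this article (gitbucket-1.0.0-1.spm), a package is named `<name>-<version>-<release>.spm` from the FORMULA fields. The Ruby sketch below builds that name from a FORMULA file. Going the other way, it also lists the latest version of each formula from a directory listing, which is the listing SPM itself lacks. The FORMULA contents and both helpers are hypothetical illustrations, not part of SPM.

```ruby
require 'rubygems'
require 'yaml'

# Assumed minimal FORMULA file; real ones may carry more fields.
FORMULA_TEXT = <<~YAML
  name: gitbucket
  os: RedHat, Debian
  os_family: RedHat, Debian
  version: '1.0.0'
  release: 1
  summary: GitBucket formula
  description: Installs and configures GitBucket
YAML

# Package file name built from FORMULA fields: <name>-<version>-<release>.spm
def spm_file_name(formula)
  "#{formula['name']}-#{formula['version']}-#{formula['release']}.spm"
end

# Names may contain dashes (oracle-jdk), so anchor on the last two fields.
SPM_NAME = /\A(?<name>.+)-(?<version>[^-]+)-(?<release>[^-]+)\.spm\z/

# Map each formula name to its newest "<version>-<release>",
# comparing versions numerically (1.10.0 > 1.9.0), not as strings.
def latest_formulas(file_names)
  matches = file_names.filter_map { |f| SPM_NAME.match(f) }
  matches.group_by { |m| m[:name] }.transform_values { |group|
    best = group.max_by { |m| [Gem::Version.new(m[:version]), m[:release].to_i] }
    "#{best[:version]}-#{best[:release]}"
  }
end

puts spm_file_name(YAML.safe_load(FORMULA_TEXT))  # gitbucket-1.0.0-1.spm

listing = %w[gitbucket-1.0.0-1.spm gitbucket-1.1.0-1.spm oracle-jdk-8.0.0-2.spm SPM-METADATA]
latest_formulas(listing).each { |name, ver| puts "#{name} #{ver}" }
```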

Release object

Let's get down to business. As an example, consider the configuration of the GitBucket service. For those who have not heard of it, it is an open-source git repository management system written in Scala. It has a fairly good API, a plugin system and a pleasant, convenient web interface. It compares well with heavyweights such as Bitbucket, GitHub and GitLab (without CI), and looks especially good next to Beanstalk.

As I said, it is written in Scala and is a web application, so we need at least the following configurations: java, tomcat, nginx.

For testing we use Kitchen-CI with the kitchen-salt provisioner, running the test instances on a Docker host.

Let's take a look at the formula's directory structure, just in case:

```
gitbucket-formula
|-- gitbucket
|   |-- frontend
|   |   |-- templates
|   |   |   |-- nginx-http.conf
|   |   |   |-- nginx-https.conf
|   |   |   `-- upstream.conf
|   |   `-- init.sls
|   |-- plugins
|   |   `-- init.sls
|   |-- templates
|   |   |-- database.conf
|   |   `-- gitbucket.conf
|   |-- init.sls
|   `-- settings.jinja
|-- test
|   `-- integration
|       |-- fe
|       |   `-- serverspec
|       |       `-- frontend_spec.rb
|       |-- fe-ssl
|       |   `-- serverspec
|       |       `-- frontend_ssl_spec.rb
|       |-- gitbucket
|       |   `-- serverspec
|       |       `-- gitbucket_spec.rb
|       `-- helpers
|           `-- serverspec
|               |-- fe_shared_spec.rb
|               |-- fe_ssl_shared_spec.rb
|               `-- gitbucket_shared_spec.rb
|-- .editorconfig
|-- .gitattributes
|-- .gitconfig
|-- .gitignore
|-- .kitchen.yml
|-- pillar.example
|-- FORMULA
`-- README.md
```

As you can see, besides the basic service configuration, the formula contains serverspec tests in the test directory, the FORMULA metadata file, the Kitchen configuration descriptor and the git configuration files for the repository.

For now, we are interested specifically in the .kitchen.yml file:

```yaml
---
driver:
  name: docker
  use_sudo: false
  require_chef_omnibus: false
  socket: tcp://dckhub.platops.org:2375
  volume: /sys/fs/cgroup
  cap_add:
    - SYS_ADMIN

provisioner:
  name: salt_solo
  formula: gitbucket
  salt_bootstrap_options: 'stable 2016.11'
  dependencies:
    - path: ../tomcat-formula
      name: tomcat
    - path: ../oracle-jdk-formula
      name: oracle-jdk
    - path: ../nginx-formula
      name: nginx
  state_top:
    base:
      '*':
        - gitbucket
  pillars:
    top.sls:
      base:
        '*':
          - gitbucket
    gitbucket.sls:
      tomcat:
        instance:
          name: 'gitbucket'
          connport: '8080'
          sslport: '8443'
          xms: '512M'
          xmx: '1G'
          mdmsize: '256M'
    frontend.sls:
      gitbucket:
        frontend:
          enabled: False
          ssl:
            enabled: False
            external: False
            crt: |
              -----BEGIN CERTIFICATE-----
              C ssl localhost
              -----END CERTIFICATE-----
            key: |
              -----BEGIN RSA PRIVATE KEY-----
              -----END RSA PRIVATE KEY-----

platforms:
  - name: debian
    driver_config:
      image: debian:8
      run_command: /sbin/init
      provision_command:
        - apt-get install -y --no-install-recommends wget curl tar mc iproute apt-utils apt-transport-https locales
        - localedef --no-archive -c -i en_US -f UTF-8 en_US.UTF-8 && localedef --no-archive -c -i ru_RU -f UTF-8 ru_RU.UTF-8
  - name: centos
    driver_config:
      image: dckreg.platops.org/centos-systemd:7.3.1611
      run_command: /sbin/init
      provision_command:
        - yum install -y -q wget curl tar mc iproute
        - localedef --no-archive -c -i en_US -f UTF-8 en_US.UTF-8 && localedef --no-archive -c -i ru_RU -f UTF-8 ru_RU.UTF-8
        - sed -i -r 's/^(.*pam_nologin.so)/#\1/' /etc/pam.d/sshd
        - sed -i 's/tsflags=nodocs//g' /etc/yum.conf

suites:
  - name: gitbucket
  - name: fe
    provisioner:
      pillars:
        top.sls:
          base:
            '*':
              - gitbucket
              - frontend
        frontend.sls:
          gitbucket:
            frontend:
              enabled: True
  - name: fe-ssl
    provisioner:
      pillars:
        top.sls:
          base:
            '*':
              - gitbucket
              - frontend
        frontend.sls:
          gitbucket:
            frontend:
              enabled: True
              url: localhost
              ssl:
                enabled: True
```

The driver section specifies the parameters for connecting to the Docker host; a local socket can be used instead. Other drivers can be used as well, for example Vagrant, EC2 and others.

The provisioner section configures how SaltStack applies the configuration. This is where we declare the dependencies of this formula listed above and the service configuration passed via pillar.

The platforms section defines the platforms, i.e. the operating systems, on which the tests run.

Finally, the suites section defines the configuration types to be tested on the platforms listed above. The Kitchen descriptor above sets up three types of tests:

- the bare service: java + tomcat 8 + gitbucket (suite gitbucket)

- setup with nginx over HTTP only (suite fe)

- setup with nginx over HTTP/HTTPS (suite fe-ssl)

In total, 3 configurations are tested on each OS: Debian Jessie and CentOS 7.
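Kitchen turns every suite/platform pair into a separate instance named roughly `<suite>-<platform>`, so this descriptor yields six instances. The minimal sketch below computes that matrix. The inline YAML is a trimmed stand-in for the real .kitchen.yml that keeps only the names, and the sketch ignores the character normalization Kitchen applies to instance names.

```ruby
require 'yaml'

# Trimmed stand-in for .kitchen.yml: only the names matter here.
KITCHEN_YML = <<~YAML
  platforms:
    - name: debian
    - name: centos
  suites:
    - name: gitbucket
    - name: fe
    - name: fe-ssl
YAML

# Every suite runs on every platform; each pair becomes one instance.
def kitchen_instances(config)
  suites    = config.fetch('suites').map { |s| s['name'] }
  platforms = config.fetch('platforms').map { |p| p['name'] }
  suites.product(platforms).map { |suite, platform| "#{suite}-#{platform}" }
end

instances = kitchen_instances(YAML.safe_load(KITCHEN_YML))
puts instances.size  # 6
puts instances
```

Each added suite or platform multiplies the run time, which is one reason the dependency list is kept to the formulas this service actually needs.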

As the directory tree shows, the test folder is split by test suite: gitbucket, fe and fe-ssl. Note also the helpers folder. It contains shared_examples for each type of test, so that the same tests do not have to be written twice.

Dependencies

Let's focus on the provisioner section. Besides the pillar configuration and the SaltStack bootstrap parameters, it also lists the dependencies of this configuration, which must be available via relative or absolute paths during provisioning. As you can see, only the configurations directly involved in testing this service are listed.

Of course, we could add the 9 system services listed above to cover the instance configuration fully, but there are three problems. First, such tests would take hours. For example, testing the Jenkins formula, including installation of all required plugins, on two OS families with 3 configurations each takes about an hour. Second, the set of system services differs from one infrastructure to another, and the number of such sets tends to infinity. Third, this testing will happen anyway when the configuration is rolled out to the integration environment.

Now that we know what is being released, we can move on to building the release process itself.

We will use the familiar Jenkins as the scheduler and Nexus OSS 2 to store artifacts.

Release Pipeline

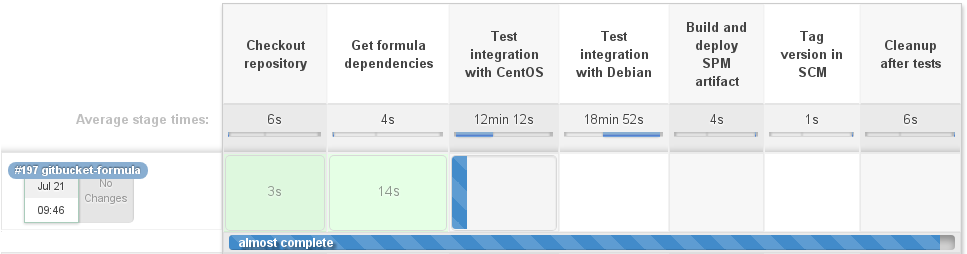

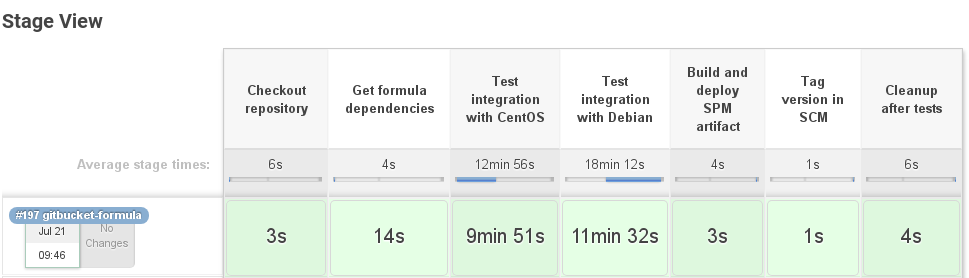

It's time to write a Jenkinsfile that describes everything we need to run, along with those nice little stage squares.

For the build node, we use the Docker-cloud plugin to start a container with all the components we need installed: ruby, test-kitchen, kitchen-salt, kitchen-docker and the docker engine itself, so that it can work with the Docker host.

First, we check out the repository with the main formula into a directory named after the repository. As mentioned, we need other formulas as dependencies, so now we read their list and check them out into the workspace. Note that we follow a standard repository naming scheme, so the relative path to each dependency's directory can be derived from the repository name.
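The naming convention is what makes this work: a dependency path such as ../tomcat-formula maps directly to a repository called tomcat-formula. The Ruby sketch below mirrors what the Groovy expression `dependency['path'] - ~/\.{2}\//` does in the pipeline. The Git server URL is a hypothetical placeholder, and the prefix match is anchored, which is slightly stricter than the Groovy version.

```ruby
require 'yaml'

# Hypothetical Git server; the pipeline takes it from gitRepositoryUrl.
GIT_REPOSITORY_URL = 'https://git.example.org/salt'

DEPENDENCIES_YML = <<~YAML
  provisioner:
    dependencies:
      - path: ../tomcat-formula
        name: tomcat
      - path: ../oracle-jdk-formula
        name: oracle-jdk
      - path: ../nginx-formula
        name: nginx
YAML

# Strip the leading "../" to get the repository name, then build
# the clone URL from the naming convention.
def dependency_checkouts(config, base_url)
  config.dig('provisioner', 'dependencies').map do |dep|
    repo = dep.fetch('path').sub(%r{\A\.\./}, '')
    { repo: repo, url: "#{base_url}/#{repo}.git" }
  end
end

dependency_checkouts(YAML.safe_load(DEPENDENCIES_YML), GIT_REPOSITORY_URL).each do |c|
  puts "#{c[:repo]} <- #{c[:url]}"
end
```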

```groovy
node("kitchen-ci") {
    try {
        stage('Checkout repository') {
            checkout changelog: false, poll: false, scm: [
                $class                           : 'GitSCM',
                branches                         : [[name: "${branch}"]],
                doGenerateSubmoduleConfigurations: false,
                extensions                       : [[$class: 'RelativeTargetDirectory', relativeTargetDir: "${repository}"]],
                userRemoteConfigs                : [[url: "${gitRepositoryUrl}/${repository}.git"]]
            ]
            dir("${repository}") {
                gitCommit = sh(returnStdout: true, script: "git rev-parse HEAD").trim()
            }
            currentBuild.displayName = "#${env.BUILD_NUMBER} ${repository}"
            currentBuild.description = "${branch}(${gitCommit.take(7)})"
        }
    } catch (err) {
        currentBuild.result = failedStatus
    }

    def kitchenConfig = readYaml(file: "${repository}/.kitchen.yml")
    def formulaMetadata = readYaml(file: "${repository}/FORMULA")
    def dependencies = kitchenConfig.provisioner.dependencies
    def platforms = kitchenConfig.platforms

    try {
        stage('Get formula dependencies') {
            for (dependency in dependencies) {
                // "../tomcat-formula" -> "tomcat-formula": the repository name
                depRepo = dependency['path'] - ~/\.{2}\//
                println "Prepare \"${depRepo}\" as dependency"
                checkout changelog: false, poll: false, scm: [
                    $class                           : 'GitSCM',
                    branches                         : [[name: '*/master']],
                    doGenerateSubmoduleConfigurations: false,
                    extensions                       : [[$class: 'RelativeTargetDirectory', relativeTargetDir: "${depRepo}"]],
                    userRemoteConfigs                : [[url: "${gitRepositoryUrl}/${depRepo}.git"]]
                ]
            }
        }
    } catch (err) {
        currentBuild.result = failedStatus
    }
```

For simplicity, we do not pin dependency versions and simply check out the latest stable code from master. Pinning is easy to add, though. It can use the version tags that this pipeline sets on successful completion, or the same binary repository.
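As an illustration of such pinning, here is a sketch that picks the highest tagged version satisfying a constraint instead of always taking master. It borrows RubyGems' `Gem::Version` and `Gem::Requirement` for the comparison logic only. The tag list and the `~>` constraints are hypothetical, and neither SPM nor this pipeline uses them.

```ruby
require 'rubygems'

# Pick the highest tag that satisfies a version constraint.
# Tags that are not plain versions (e.g. "latest") are ignored.
def pick_version(tags, constraint)
  requirement = Gem::Requirement.new(constraint)
  candidates = tags.select { |t| Gem::Version.correct?(t) }
                   .map { |t| Gem::Version.new(t) }
                   .select { |v| requirement.satisfied_by?(v) }
  best = candidates.max
  best&.to_s # nil when nothing satisfies the constraint
end

# Hypothetical tags, e.g. the output of `git tag -l` in tomcat-formula.
tags = %w[1.0.0 1.0.3 1.1.0 2.0.0 latest]
puts pick_version(tags, '~> 1.0')    # 1.1.0
puts pick_version(tags, '~> 1.0.0')  # 1.0.3
```

The chosen tag could then replace `'*/master'` in the dependency checkout.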

Also, we run the tests on only two platforms, simply to keep the view of pretty stage squares compact.

The next step is to actually run the tests on the target platform with kitchen verify. This command builds the container and applies the SaltStack configuration. Then Kitchen runs the tests written in Serverspec:
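`kitchen verify debian` covers every suite on Debian because Kitchen accepts an instance name or a regular expression. An exact name match is used first; otherwise the argument is matched as a regexp against instance names. The minimal sketch below mimics that selection for this formula's instances. It is an approximation, not Kitchen's own code.

```ruby
# Instance names for this formula: <suite>-<platform>.
INSTANCES = %w[
  gitbucket-debian gitbucket-centos
  fe-debian fe-centos
  fe-ssl-debian fe-ssl-centos
].freeze

# Roughly how `kitchen verify <pattern>` picks instances.
def select_instances(instances, pattern)
  return instances if pattern == 'all'
  exact = instances.select { |i| i == pattern }
  return exact unless exact.empty?
  regexp = Regexp.new(pattern)
  instances.select { |i| regexp.match?(i) }
end

puts select_instances(INSTANCES, 'debian').join(', ')
# gitbucket-debian, fe-debian, fe-ssl-debian
```

Note that a pattern such as `fe` also matches the fe-ssl instances. An anchored expression like `^fe-debian$` avoids that.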

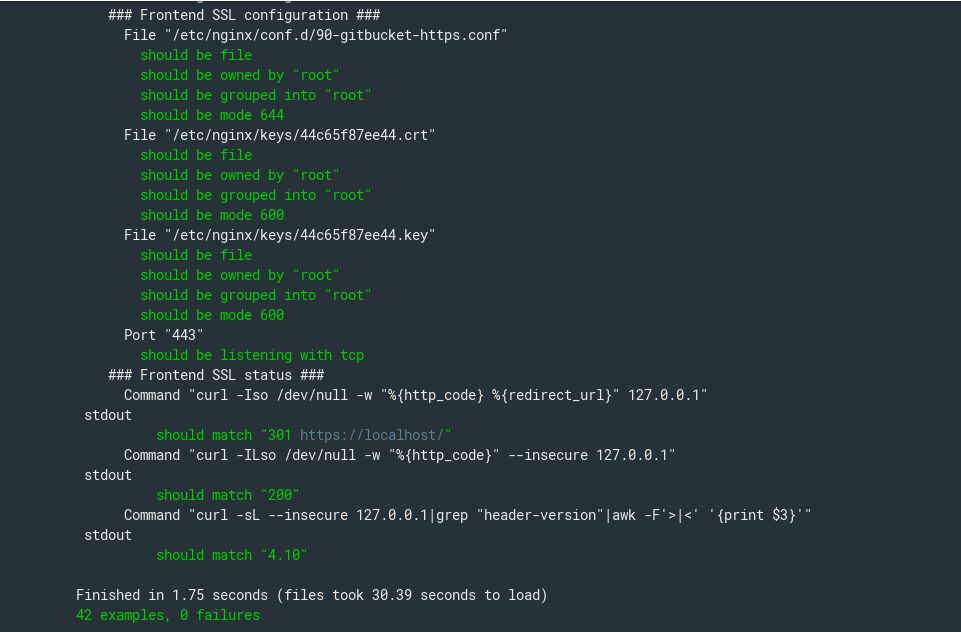

```groovy
    for (targetPlatform in targetPlatforms) {
        try {
            stage("Test integration with ${targetPlatform}") {
                dir("${repository}") {
                    // platforms['name'] collects the platform names from .kitchen.yml
                    if (platforms['name'].contains(targetPlatform.toLowerCase())) {
                        ansiColor('xterm') {
                            sh "kitchen verify ${targetPlatform.toLowerCase()}"
                        }
                    } else {
                        println "Skipping checks for \"${targetPlatform}\" platform"
                    }
                }
            }
        } catch (err) {
            currentBuild.result = failedStatus
        }
    }
```

The fe-ssl configuration runs 42 tests, including the following:

```ruby
require 'serverspec'

set :backend, :exec

host_name         = `cat /etc/hostname`
instance_name     = 'gitbucket'
nginx_confd       = '/etc/nginx/conf.d'
nginx_keyd        = '/etc/nginx/keys'
status_host       = '127.0.0.1'
gitbucket_version = '4.10'

ssl_vhost = nginx_confd + '/90-' + instance_name + '-https.conf'
certs = [
  nginx_keyd + '/' + host_name.chomp + '.crt',
  nginx_keyd + '/' + host_name.chomp + '.key',
]
ports = %w[ 443 ]

shared_examples_for 'gitbucket nginx ssl frontend' do
  describe '### Frontend SSL configuration ###' do
    describe file(ssl_vhost) do
      it { should be_file }
      it { should be_owned_by 'root' }
      it { should be_grouped_into 'root' }
      it { should be_mode 644 }
    end

    certs.each do |cert|
      describe file(cert) do
        it { should be_file }
        it { should be_owned_by 'root' }
        it { should be_grouped_into 'root' }
        it { should be_mode 600 }
      end
    end

    ports.each do |port|
      describe port(port) do
        it { should be_listening.with('tcp') }
      end
    end
  end

  describe '### Frontend SSL status ###' do
    describe command('curl -Iso /dev/null -w "%{http_code} %{redirect_url}" ' + status_host) do
      its(:stdout) { should match '301 https://localhost/' }
    end

    describe command('curl -ILso /dev/null -w "%{http_code}" --insecure ' + status_host) do
      its(:stdout) { should match '200' }
    end

    describe command('curl -sL --insecure ' +
                     status_host +
                     '|grep "header-version"|awk -F\'>|<\' \'{print $3}\'') do
      its(:stdout) { should match gitbucket_version }
    end
  end
end
```

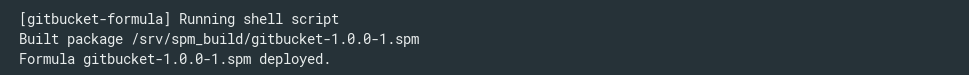

All the tests have passed, so it is time to build the package and upload it to the binary artifact repository.

The artifact is built with spm build. Since we are already in a shell step, we upload it with curl.

```groovy
    try {
        stage('Build and deploy SPM artifact') {
            if (currentBuild.result != failedStatus) {
                withCredentials([[$class          : 'UsernamePasswordMultiBinding',
                                  credentialsId   : binaryRepoCredentials,
                                  usernameVariable: 'USERNAME',
                                  passwordVariable: 'PASSWORD']]) {
                    dir("${repository}") {
                        // The shell reads $USERNAME/$PASSWORD from the environment,
                        // so Groovy does not interpolate the secrets into the script.
                        sh """#!/usr/bin/env bash
                            sudo spm build .
                            formula=\$(ls -1A ${spmBuildDir} | grep '\\.spm\$')
                            if [[ -n \$formula ]]; then
                                response=\$(curl --compressed \\
                                    -u "\$USERNAME:\$PASSWORD" \\
                                    -s -o /dev/null -w "%{http_code}" \\
                                    -A 'Jenkins POST Invoker' \\
                                    --upload-file ${spmBuildDir}/\$formula \\
                                    ${binaryRepoUrl})
                                if [[ \$response -ne 201 ]]; then
                                    echo "Something went wrong! HTTP_CODE: \$response"
                                    exit 1
                                else
                                    echo "Formula \$formula deployed."
                                fi
                            else
                                echo "Couldn't find file"
                                exit 1
                            fi
                        """
                    }
                }
            } else {
                println "We will not package failed formulas"
            }
        }
    } catch (err) {
        currentBuild.result = failedStatus
    }
```
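The same upload can also be sketched in Ruby with Net::HTTP for readers who prefer not to shell out. `curl --upload-file` sends an HTTP PUT, and the script above treats 201 Created as success. The build directory, repository URL and credentials in the example call are placeholders, and `find_spm_artifact`/`deploy_spm` are hypothetical helpers.

```ruby
require 'net/http'
require 'uri'

# Find the single .spm artifact produced by `spm build` in build_dir.
def find_spm_artifact(build_dir)
  files = Dir.glob(File.join(build_dir, '*.spm'))
  raise "Couldn't find file in #{build_dir}" if files.empty?
  raise "Ambiguous artifacts: #{files.join(', ')}" if files.size > 1
  files.first
end

# PUT the artifact under repo_url (what `curl --upload-file` does)
# and fail unless the server answers 201 Created.
def deploy_spm(path, repo_url, user, password)
  base = repo_url.end_with?('/') ? repo_url : "#{repo_url}/"
  uri = URI.join(base, File.basename(path))
  request = Net::HTTP::Put.new(uri)
  request.basic_auth(user, password)
  request.body = File.binread(path)
  response = Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == 'https') do |http|
    http.request(request)
  end
  raise "Something went wrong! HTTP_CODE: #{response.code}" unless response.code == '201'
  puts "Formula #{File.basename(path)} deployed."
end

# Example (placeholders, not executed here):
# deploy_spm(find_spm_artifact('/srv/spm_build'),
#            'https://nexus.example.org/content/sites/org.platops.salt.formulas/',
#            ENV.fetch('USERNAME'), ENV.fetch('PASSWORD'))
```

Failing on more than one artifact is a deliberate choice. It guards against uploading a stale package left over from an earlier build.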

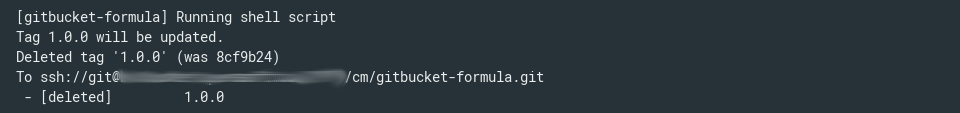

We are almost done. What remains is to tag the current commit and run kitchen destroy to remove all the containers that were created.

```groovy
    try {
        stage('Tag version in SCM') {
            currentTag = formulaMetadata['version']
            if (currentBuild.result != failedStatus) {
                dir("${repository}") {
                    sh """#!/usr/bin/env bash
                        if [[ -n \$(git tag -l ${currentTag}) ]]; then
                            # Compare the commit the tag points to with the tested commit
                            if [[ "\$(git rev-list -n 1 ${currentTag})" != "${gitCommit}" ]]; then
                                echo "Tag \\"${currentTag}\\" will be updated."
                                git tag -d ${currentTag}
                                git push origin :refs/tags/${currentTag}
                                git tag ${currentTag}
                                git push origin ${currentTag}
                            else
                                echo "Tag \\"${currentTag}\\" already exists. Skipping."
                            fi
                        else
                            echo "Creating tag \\"${currentTag}\\""
                            git tag ${currentTag}
                            git push origin ${currentTag}
                        fi
                    """
                }
            } else {
                println "We will not tag revision for failed formulas"
            }
        }
    } catch (err) {
        currentBuild.result = failedStatus
    }

    try {
        stage('Cleanup after tests') {
            dir("${repository}") {
                sh "kitchen destroy"
            }
        }
    } catch (err) {
        currentBuild.result = failedStatus
    }
} // end of node("kitchen-ci")
```
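The tagging step boils down to a three-way decision. Create the tag if it does not exist, skip if it already points at the tested commit, and move it otherwise. The sketch below expresses that decision as a pure Ruby function, which is easier to reason about than inline shell. `tag_action` is a hypothetical helper, and the commit hashes are made-up examples.

```ruby
# Decide what to do with the release tag for the tested commit.
#   tags       - existing tag name => commit SHA
#   version    - version from the FORMULA file, used as the tag name
#   commit_sha - commit the pipeline has just tested
def tag_action(tags, version, commit_sha)
  current = tags[version]
  return :create if current.nil?
  return :skip if current == commit_sha
  :update # delete the old tag locally and remotely, then re-create it
end

tags = { '1.0.0' => 'a1b2c3d4e5f6', '1.1.0' => '0f9e8d7c6b5a' }
puts tag_action(tags, '1.2.0', 'deadbeef0000')  # create
puts tag_action(tags, '1.1.0', '0f9e8d7c6b5a')  # skip
puts tag_action(tags, '1.0.0', 'deadbeef0000')  # update
```

Comparing full SHAs rather than 7-character prefixes rules out accidental prefix collisions.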

Kitchen-CI has a test command that runs the stages destroy, create, converge, setup, verify and destroy again. However, some of our formulas link containers during testing. FreeIPA, for example, first applies the server configuration and checks that it works. Then a container with the client configuration starts and joins the domain of the linked server. With test, the server would come up, be tested and then be destroyed before the client came up. That is why we use the verify command and make cleanup via destroy the very last stage of the pipeline.
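The difference is easiest to see as an ordering problem. The toy Ruby simulation below records which containers are still running when each suite is verified, under the two strategies. The suite names are hypothetical stand-ins for a FreeIPA-like server/client pair, and the model ignores everything except create/verify/destroy ordering.

```ruby
# Record which containers are running when each suite is verified.
#   :test   - `kitchen test` per instance: create, verify, destroy, next
#   :verify - `kitchen verify` for all instances, `kitchen destroy` at the end
def simulate(strategy, suites)
  running = []
  log = []
  suites.each do |suite|
    running << suite                                # create + converge
    log << "verify #{suite}: running #{running.join(', ')}"
    running.delete(suite) if strategy == :test      # test destroys right away
  end
  running.clear                                     # final destroy stage
  log
end

# Hypothetical linked suites: the client must join the server's domain.
suites = %w[ipa-server ipa-client]
puts simulate(:test, suites).last    # verify ipa-client: running ipa-client
puts simulate(:verify, suites).last  # verify ipa-client: running ipa-server, ipa-client
```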

Working with the repository

The formula has been tested, the repository has been tagged and the artifact has been uploaded to the binary repository, but SaltStack cannot use it yet. First the repository metadata must be updated, which requires running spm create_repo $DIR on the repository directory containing all the formulas.

Nexus itself is also configured with SaltStack, so SPM is installed on it locally, and we will use that.

Install the python-inotify module on the Nexus host and configure a SaltStack beacon for salt-minion that checks the formula directory for changes every five seconds:

```yaml
# /etc/salt/minion.d/beacons.conf
beacons:
  inotify:
    interval: 5
    disable_during_state_run: True
    /opt/nexus/data/storage/org.platops.salt.formulas:
      mask:
        - create
        - modify
        - delete
        - attrib
      recurse: True
      auto_add: True
      exclude:
        - /opt/nexus/data/storage/org.platops.salt.formulas/SPM-METADATA
        - /opt/nexus/data/storage/org.platops.salt.formulas/.nexus/
```

The exclusions matter. SPM-METADATA is regenerated by the reactor itself and would otherwise retrigger it in an endless loop, and .nexus/ holds Nexus' own service files. Now restart salt-minion. After that, whenever files in the directory change, salt-master receives an event like this:

```
salt/beacon/nexus.platops.org/inotify//opt/nexus/data/storage/org.platops.salt.formulas {
    "_stamp": "2017-07-09T10:31:11.283771",
    "change": "IN_ATTRIB",
    "id": "nexus.platops.org",
    "path": "/opt/nexus/data/storage/org.platops.salt.formulas/gitbucket-1.0.0-1.spm"
}
```

On salt-master, we set up a reactor that listens for events from this beacon and runs the specified state:

```yaml
# /etc/salt/master.d/reactor.conf
reactor:
  - 'salt/beacon/nexus.platops.org/inotify//opt/nexus/data/storage/org.platops.salt.formulas':
    - /srv/salt/reactor/update-spm-metadata.sls
```

And here is the state itself, which runs the command we need:

```yaml
# /srv/salt/reactor/update-spm-metadata.sls
update spm repository metadata:
  local.cmd.run:
    - tgt: {{ data['id'] }}
    - arg:
      - spm create_repo /opt/nexus/data/storage/org.platops.salt.formulas
```

Don't forget to restart salt-master and salt-minion. That's it: every five seconds the beacon checks the directory for inotify events and triggers an update of the repository metadata.
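Stripped of Salt, the beacon/reactor pair amounts to this: poll the directory, and when anything other than the metadata changes, regenerate the metadata. The minimal Ruby sketch below does the same with polling from the standard library instead of inotify. The directory path comes from the article, the exclusions mirror the beacon config, and `snapshot`, `changed?` and `watch` are hypothetical helpers, not a replacement for the Salt setup.

```ruby
require 'find'

# Snapshot path => [mtime, size, mode], skipping what the beacon excludes:
# the generated SPM-METADATA file and Nexus' .nexus/ directory.
def snapshot(dir)
  state = {}
  Find.find(dir) do |path|
    if File.basename(path) == '.nexus'
      Find.prune # do not descend into .nexus/
    elsif File.file?(path) && File.basename(path) != 'SPM-METADATA'
      st = File.stat(path)
      state[path] = [st.mtime, st.size, st.mode]
    end
  end
  state
end

def changed?(before, after)
  before != after
end

# Poll every `interval` seconds and rebuild the metadata on change,
# running the same command as the reactor state.
def watch(dir, interval: 5)
  last = snapshot(dir)
  loop do
    sleep interval
    current = snapshot(dir)
    next unless changed?(last, current)
    system('spm', 'create_repo', dir) || warn('spm create_repo failed')
    last = current
  end
end

# watch('/opt/nexus/data/storage/org.platops.salt.formulas')
```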

If you use Nexus 3, this can be implemented too: look at Nexus webhooks and Salt-API.

Install formulas

Configure the repository in /etc/salt/spm.repos.d/nexus.repo on salt-master. Note that the password is stored in plain text. Set restrictive permissions on this file (400, for example) and use a proxy user with limited rights, or extend SPM to support encrypted passwords:

```yaml
# /etc/salt/spm.repos.d/nexus.repo
nexus.platops.org:
  url: https://nexus.platops.org/content/sites/org.platops.salt.formulas
  username: saltmaster
  password: supersecret
```

Update the formula metadata on salt-master and install the formula:

```
spm update_repo
spm install gitbucket
Installing packages:
        gitbucket
Proceed? [N/y] y
... installing gitbucket
```

That's all: a tested, tagged and packaged formula is now installed on salt-master.
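Because nexus.repo stores the password in plain text, a small check like the Ruby sketch below could run on salt-master, for example from cron, to make sure the file is not accessible to other users. It is a hypothetical helper, not part of SPM. Mode 400 is the value suggested above, and 600 passes the check too.

```ruby
# True if the file is owned by the current user and grants no
# permissions to group or others (e.g. mode 0400 or 0600).
def credentials_file_safe?(path)
  st = File.stat(path)
  st.owned? && (st.mode & 0o077).zero?
end

repo_file = '/etc/salt/spm.repos.d/nexus.repo'
if File.exist?(repo_file) && !credentials_file_safe?(repo_file)
  warn "#{repo_file} is accessible to group/others; run: chmod 400 #{repo_file}"
end
```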

We have built a fairly simple but effective release cycle for our configurations. Combined with building pull requests, it helps us detect problems in formula dependencies and service configurations early. It also lets us easily distribute configurations across different customer environments.

There is always room for improvement, and much more could be added:

- automatically updating the repository data and the formulas on salt-master
- testing against dependencies pinned to specific versions for better compatibility
- password encryption in SPM
- listing the formulas available in a repository with SPM
- installing specific dependency versions with SPM
- more tested platforms, and so on

By the way, in the tests I cited, the expected values are hard-coded and repeat the values set in pillar in .kitchen.yml, so the same information is duplicated. Thanks to my colleague Alexander Shevchenko, we no longer do this, but I think he will write about that himself.

Thank you all, I hope the article will be useful in your work.


Source: https://habr.com/ru/post/333922/
