Cooking cache correctly

Many believe that the cache, and even distributed, is such an easy way to make everything faster and better. But, as practice shows, incorrect use of the cache often, if not always, makes everything worse.

Under the cut, a couple of stories await you about how an improperly bolted cache killed a performance.

- All the events described in the article are fictitious, any coincidences with real deployments are random.

- The Apache Ignite logic described in the article is intentionally simplified, important details are omitted.

- Do not try to repeat this in production without reading the official documentation thoughtfully!

- Some of the design patterns described are anti-patterns, remember this before implementation :)

My base slows down, and with your cache it slows down even more: (

What does an average web service look like?

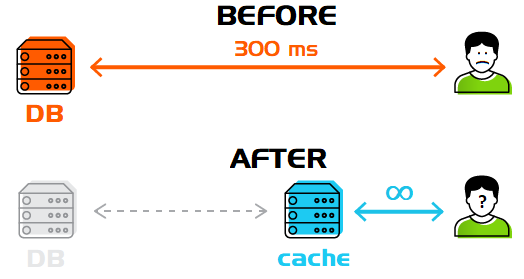

⟷ When the database stops cope need to do what?

Right! Buy a new iron!

And when will not help?

Right! Configure the cache, preferably distributed!

⟷ ⟷ Will it be faster? Is not a fact!

Why? Suppose you have achieved incredible concurrency and can accept thousands of requests at the same time. But, all of them will come in one key - a hot offer from the first page.

Further, all threads will follow the same logic: “there are no values in the cache, I will follow it to the base”.

What will happen in the end? Each thread comes down to the database and updates the value in the cache. As a result, the system will spend more time than if the cache was not in principle.

And the solution to the problem is very simple - synchronization of requests for the same keys.

Apache Ignite offers simple caching via Spring Caching with synchronization support.

@Cacheable("dynamicCache") public String cacheable(Integer key) { // :( return longOp(key); } @Cacheable(value = "dynamicCache", sync = true) public String cacheableSync(Integer key) { // , , // , , // , longOp(key) return longOp(key); } Synchronization functionality was added to Apache Ignite in version 2.1 .

Now fast! But still slowly: (

This story is a direct continuation of the previous one and is intended to show that the developers of caches also do not always use them correctly.

So, the fix adding cache synchronization turned out to be in production and ... did not help.

No one broke for the hot product from the first page, and all 1000 threads went to different keys at the same time, and synchronization mechanisms became a bottleneck.

In the previous article, I talked about how the Apache Ignite synchronization tools worked before .

Information about all synchronization tools was previously stored in the ignite-sys-cache , using the DATA_STRUCTURES_KEY key in the form of Map<String, DataStructureInfo> and each synchronizer addition looked like:

// lock(cache, DATA_STRUCTURES_KEY); // Map<String, DataStructureInfo> map = cache.get(DATA_STRUCTURES_KEY); map.put("Lock785", dsInfo); cache.put(DATA_STRUCTURES_KEY, map); // unlock(cache, DATA_STRUCTURES_KEY) In summary, when creating the necessary synchronizers, all threads sought to change the value with the same key.

Fast again! But is it safe?

Apache Ignite got rid of the “most important” key in version 2.1 , starting to save information about synchronizers separately. Users received an increase of more than 9000% in the real scenario.

And everything was fine until the light blinked, the bespereboiniki failed, and the users lost their warmed cache.

In Apache Ignite , starting from version 2.1, there appeared its own persistence implementation.

But, by itself, Persistence does not give guarantees of obtaining a consistent state during restart, since data is synchronized (flushed to disk) periodically, rather than in real time. The main objective of Persistence is the ability to store and efficiently process more data on one computer than it enters into its memory.

Consistency guarantee is achieved using the Write-ahead logging approach. Simply put, data is first written to disk as a logical operation, and then stored in a distributed cache.

When the server is restarted on disk, we will have a status that is relevant at some point in time. Enough for this state to roll WAL, and the system is again operational and consistent (full ACID). Recovery takes seconds, at worst minutes, but not hours or days required to warm up the cache with actual requests.

Now reliable! Is it fast?

Persistence slows down the system (for writing, not for reading). The included WAL slows down the system even more, it is a payment for reliability.

There are several levels of logging that give different guarantees:

- DEFAULT - full guarantees for saving data at any load level

- LOG_ONLY - full guarantees, except for the case of failure of the operating system

- BACKGROUND - there are no guarantees, but Apache Ignite will try :)

- NONE - the log of operations is not kept.

The difference in speed DEFAULT and NONE on the weighted average system reaches 10 times.

Let's return to our situation. Suppose we chose the BACKGROUND mode, which is 3 times slower than NONE and now we are not afraid to lose the warmed cache (we may lose operations from the last few minutes before the crash, but no more).

In this mode, we worked for several months, anything happened and we easily restored the system after crashes. All around happy and happy.

If it were not for one “but”, today, on December 20, at the peak of sales, we realized that the servers are 80% loaded and are about to crash under load.

Turning off WAL (transfer to NONE) would give us a 3 times reduction in load, but for this you need to restart the entire Apache Ignite cluster and lose the opportunity to recover on such a cluster in case of anything - go back to the “ fast and unreliable ” point?

In Apache Ignite , starting with version 2.4 , it became possible to disable WAL without restarting the cluster, and then enable it with the restoration of all guarantees.

SQL

// ALTER TABLE tableName NOLOGGING // ALTER TABLE tableName LOGGING Java

// ignite.cluster().isWalEnabled(cacheName); // ignite.cluster().disableWal(cacheName); // ignite.cluster().enableWal(cacheName); Now and quickly and reliably, but if that - there are options ...

Now, if we need to disable logging (to hell with the guarantee, the main thing is to relive the load!) We can temporarily disable WAL and enable it at any convenient time.

And to quickly download a huge set of historical data at the start of the system.

It is also worth mentioning that disconnection is possible within only certain caches, and not the entire system, i.e. in which case the data in the caches for which the disconnection was not conducted, will not be affected.

At the same time, after WAL is turned on, the system guarantees the behavior according to the selected WAL mode.

Kesh, even distributed, is not a panacea!

Not a single technology, no matter how sophisticated and advanced it is, can solve all your problems. Incorrectly used technology is likely to make it worse, and used correctly, it is unlikely to close all the gaps.

The cache, including the distributed one, is a mechanism that gives acceleration only with proper use and thoughtful tuning. Keep this in mind before introducing it into your project, make measurements before and after in all the cases connected with it ... and let the performance be with you!

')

Source: https://habr.com/ru/post/333868/

All Articles