What SMT is and how it works in applications: pros and cons

While I indulge my graphomania by writing a detailed technical article about Windows Performance Station, I want to share my thoughts on what SMT brings to AMD and Intel processors, good and bad, and how Windows Performance Station helps here.

For those who are interested in this topic, welcome to…

So, first, let's define SMT.


As Wikipedia tells us, SMT (simultaneous multithreading) means that multiple threads are executed on one core simultaneously rather than in turn, as is the case in “temporal multithreading”.

Many people know this technology as “Intel Hyper-Threading”. Plenty has been written about it over the years, yet I still find that many developers, to say nothing of ordinary users, do not understand what the “simultaneous” execution of several instructions on one processor core really amounts to and what problems it brings.

To begin with, let's talk about temporal multithreading. Before SMT arrived in the form of Hyper-Threading, temporal multithreading was what processors used.

It is simple. Imagine one conveyor and one worker (a CPU core) who performs operations on numbers and records the results. Suppose these operations require a screwdriver and a wrench. The operating system (OS) lays operations on the conveyor in order: one operation for the screwdriver, then one for the wrench. At any moment the worker can use either the wrench or the screwdriver, not both. By laying out different numbers of different blocks, the OS sets the priority of operations from different applications. We set the proportion of one kind of block to another when we set a process's priority. This is exactly what all task managers do, including "Windows Performance Station". This prioritization carries over to SMT and to all further work with pipelines.
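The idea of laying out blocks in proportion to priority can be sketched in a few lines of Python. This is only an illustration of temporal multithreading as described above, not how the Windows scheduler is implemented; the function `interleave`, the task names and the weights are all made up for the example.

```python
def interleave(queues, weights):
    """Lay operations onto a single conveyor, one at a time.

    queues:  {task name: list of operations still to run}
    weights: {task name: how many operations the task gets per round}
    """
    pending = {name: list(ops) for name, ops in queues.items()}
    timeline = []
    while any(pending.values()):
        for name, ops in pending.items():
            # A higher weight means a larger share of every round.
            for _ in range(weights[name]):
                if ops:
                    timeline.append((name, ops.pop(0)))
    return timeline


# Hypothetical workload: the player is given twice the share of the backup.
timeline = interleave(
    {"player": ["p1", "p2", "p3", "p4"], "backup": ["b1", "b2"]},
    {"player": 2, "backup": 1},
)
for task, op in timeline:
    print(task, op)
```

The single worker still handles exactly one block at a time; the weights only change how often each application's blocks appear on the conveyor.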

With the advent of SMT, the situation becomes a bit more complicated.

Imagine a conveyor and two workers who share one screwdriver and one wrench. At any moment each of them can use either the screwdriver or the wrench, not both. The conveyor is notionally split lengthwise into two halves. SMT lets you put two operations on such a conveyor at once, one for the screwdriver and one for the wrench, so the workers act like this:

- The first worker receives a screwdriver operation while the second, standing opposite, receives a wrench operation at the same time, after which both record their results.
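To see why the mix of operations matters, here is a toy Python model of the two workers. It is a sketch of the analogy only: a real core has many execution units and far more complex arbitration, and `run_smt_core` is a hypothetical helper, not a real API.

```python
def run_smt_core(thread_a, thread_b):
    """Count cycles for two hardware threads that share one tool of each kind."""
    queues = [list(thread_a), list(thread_b)]
    cycles = 0
    while any(queues):
        busy = set()  # tools already claimed in this cycle
        # Alternate which thread picks first so that neither is starved.
        order = (0, 1) if cycles % 2 == 0 else (1, 0)
        for t in order:
            if queues[t] and queues[t][0] not in busy:
                busy.add(queues[t].pop(0))
        cycles += 1
    return cycles


mixed = run_smt_core(["screwdriver"] * 4, ["wrench"] * 4)
same = run_smt_core(["screwdriver"] * 4, ["screwdriver"] * 4)
print("different tools:", mixed, "cycles")
print("same tool:      ", same, "cycles")
```

With different tools both workers finish in 4 cycles; when both need the screwdriver they take turns and need 8, which is no better than a single worker.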

So when one side of the conveyor holds the operation (A and B) and the other holds (D and E), everything is fine. But when a chain of calculations is parallelized, two problems arise:

1. One side of the conveyor holds (A and B) = C, and the other holds (D and E) = C,

i.e. one value of C must be written first and the other after it, not both at once (control conflict).

2. One side of the conveyor holds (A and B) = C, and the other holds (A and C) = D,

i.e. C must be calculated first and only then D, not both at once (data conflict).
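The two cases can be told apart mechanically by comparing the inputs and outputs of the operations. The sketch below does that in Python. Note that it uses the usual textbook names, write-after-write for case 1 and read-after-write for case 2, which differ from the labels used above; the `conflict` function and the tuple layout are assumptions made for the example.

```python
def conflict(first, second):
    """Classify how `second` depends on `first`.

    Each operation is (inputs, output), e.g. (("A", "B"), "C").
    """
    first_in, first_out = first
    second_in, second_out = second
    if first_out in second_in:
        return "read-after-write"   # case 2: second needs first's result
    if first_out == second_out:
        return "write-after-write"  # case 1: both write the same place
    return None                     # independent, may run side by side


print(conflict((("A", "B"), "C"), (("D", "E"), "C")))
print(conflict((("A", "B"), "C"), (("A", "C"), "D")))
print(conflict((("A", "B"), "C"), (("D", "E"), "F")))
```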

Both conflicts delay instruction execution and are resolved by executing the commands sequentially. To reduce such delays, processors gained two elements: the branch predictor and the processor cache.

The branch predictor, as the name implies, makes predictions :) It guesses the outcome of a conditional branch before that outcome is known, so that the pipeline can keep being filled instead of standing idle while it waits.
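To make the idea of prediction less abstract, here is a minimal Python sketch of one classic textbook scheme, the two-bit saturating counter. It is an illustration only and does not describe what any particular Intel or AMD core does; `predict_branches` is a hypothetical name.

```python
def predict_branches(outcomes, state=2):
    """Two-bit saturating counter.

    States 0 and 1 predict "not taken", states 2 and 3 predict "taken".
    Returns the number of correct predictions.
    """
    hits = 0
    for taken in outcomes:
        predicted_taken = state >= 2
        hits += predicted_taken == taken
        # Move one step toward the actual outcome, staying within 0..3.
        state = min(3, state + 1) if taken else max(0, state - 1)
    return hits


# A loop branch: taken nine times, then falls through once.
loop = [True] * 9 + [False]
hits = predict_branches(loop)
print(hits, "of", len(loop), "predicted correctly")
```

The counter mispredicts only the final loop exit, which is why regular code is cheap to predict and irregular branching is not.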

In turn, the processor cache provides a quick solution to the second problem: we pause the expression (A and C) = D, write the result of (A and B) = C to the cache, and then immediately calculate (A and C) = D.

In fairness, the problem of parallelizing the pipeline also appears in multi-core processors without SMT. But a multi-core processor has no idle moment when both workers need a screwdriver at once because, in this analogy, each worker has his own screwdriver and his own wrench.

All this dancing around the processor as it guesses how to parallelize the current operations leads to serious energy losses and to noticeable freezes when different types of tasks starve on cores with SMT.

In general, keep in mind that Intel introduced Hyper-Threading on its Xeon processors in the early 2000s, shortly before its first multi-core Xeons. In effect, the technology can be seen as a kind of compromise in which a doubled pipeline is fitted onto one core.

Marketing presentations tend to praise how well one core can perform several tasks at once and how this improves performance "in some usage scenarios", while keeping quiet about the problems inherent in the SMT concept.

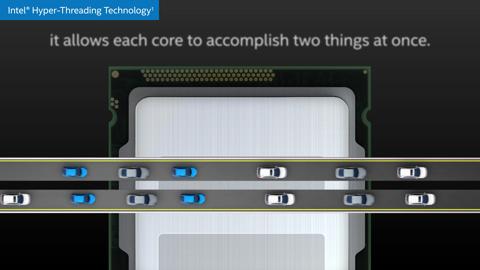

It is telling that the promotional video on the Intel site shows a dual core rather than Hyper-Threading. Anyone who has read this far has probably already guessed why :)

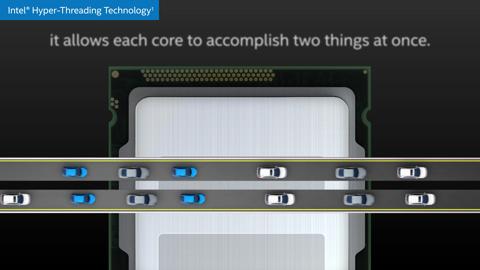

Image from video:

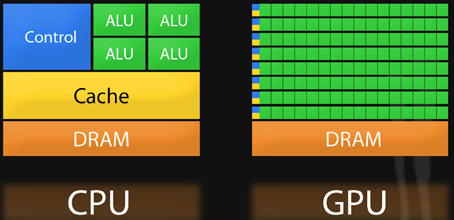

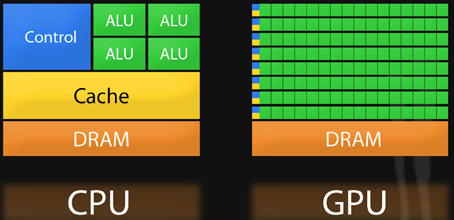

More accurate image:

What conclusion can we draw from this, and what can be improved?

The way Windows displays the load of logical cores confuses the picture of the real core load with SMT. If you see that two neighboring cores are each about 50% busy, this can mean two things:

1) both cores are running two calculations in parallel and each is 50% loaded (everything is OK here);

2) both cores are running one calculation in turn (as if two workers were handing a wrench back and forth every clock cycle).

So if you see that all cores of your SMT processor are loaded at 50% or a little more and the load does not rise further, this most likely means that the processor is in fact 100% utilized, but by a single type of task that it cannot split across the SMT threads!
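The second reading can be turned into a quick worst-case estimate. The Python sketch below assumes that the logical cores are listed so that SMT siblings are adjacent (0 and 1, 2 and 3, and so on) and that the siblings never overlap in time; both are assumptions for illustration, and `worst_case_core_load` is a hypothetical helper, not something Windows reports.

```python
def worst_case_core_load(logical_loads, threads_per_core=2):
    """Upper bound on physical-core occupancy from logical-core loads (%).

    Assumes siblings sit next to each other in the list and take turns
    rather than running at the same time (case 2 above).
    """
    cores = []
    for i in range(0, len(logical_loads), threads_per_core):
        siblings = logical_loads[i:i + threads_per_core]
        cores.append(float(min(100, sum(siblings))))
    return cores


# Eight logical cores as a task manager might show them.
loads = [50, 50, 50, 50, 20, 10, 0, 0]
print(worst_case_core_load(loads))
```

Two siblings at 50% each give a physical core that may already be fully occupied, even though the per-core graphs suggest plenty of headroom.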

Alongside its obvious advantages, SMT brings freezes to time-sensitive tasks (video and music playback, or FPS in games). That is why many gamers see FPS drop when SMT / Hyper-Threading is enabled.

As I wrote earlier, the “Windows Performance Station” application can sort the blocks laid on the pipeline as early as the stage where the OS kernel schedules tasks. Using priorities and by assigning processes to particular processor cores, you can lay blocks on the pipeline in the right quantity and send blocks of different types to different virtual cores, so that tasks of different types do not starve. It is for this dynamic analysis that we combined a neural network with a task manager in “Windows Performance Station”. The neural network analyzes each task and, based on the data it collects, distributes it according to different rules so that the two logical cores of an SMT pair perform different kinds of work.
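As a rough illustration of separating processes by logical core, the Python sketch below builds one affinity bitmask per SMT sibling position. It is not how Windows Performance Station works internally. It assumes that logical CPUs 2k and 2k+1 share a physical core, which is a common layout but not a guaranteed one (on Windows the real topology can be queried with `GetLogicalProcessorInformation`), and `sibling_masks` is a hypothetical helper.

```python
def sibling_masks(logical_cpus, threads_per_core=2):
    """Return one affinity bitmask per SMT sibling position.

    Bit n of a mask stands for logical CPU n. Assumes that consecutive
    logical CPUs are siblings on the same physical core.
    """
    masks = [0] * threads_per_core
    for cpu in range(logical_cpus):
        masks[cpu % threads_per_core] |= 1 << cpu
    return masks


first_siblings, second_siblings = sibling_masks(8)
print(f"first sibling of each core:  {first_siblings:#010b}")
print(f"second sibling of each core: {second_siblings:#010b}")
```

A mask like this is the kind of value that the Win32 `SetProcessAffinityMask` call accepts, so one type of task could be pinned to the first sibling of every core and another type to the second.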

With this approach, SMT processors on Windows can handle multitasking and multi-threaded processes more efficiently. That is why we are very pleased to see SMT in the new AMD Ryzen processors.

The "Windows Performance Station" application is free and contains no advertising. It can be downloaded from our website via the link in the spoiler:

Download

You can read more about Windows Performance Station in my previous article.

Windows Performance Station or how I taught the computer to work effectively

Many thanks to all who read to the end.

Source: https://habr.com/ru/post/333632/
