Testing the Mobile Delphi Application Database

The previous article, “Choosing a DBMS for a Mobile Delphi Application,” covered the first stage of developing the application subsystem responsible for storing its data and for most of its processing. The qualifier “most” is deliberate: InterBase was chosen precisely because it supports stored procedures (SPs), which became the home of the main data-handling logic and left the Delphi code with the simple task of calling them.

To understand why testing was needed in this particular case, note that the project set a rather high quality bar from the start. For the functionality implemented in the procedures, that bar was maintained partly through automated tests that check the key SPs, the ones responsible for the application-critical functionality (the recommendation system). One way to organize such testing, based on DUnitX and XML, is the subject of this article.

Was it worth it?

If you have already used automated tests, or have read the literature explaining their benefits, you can safely skip this semi-introductory section: it contains no theory, only examples of the benefits brought to this particular project.

If we set aside primitive, easily detectable errors, such as a result set that is always empty because of a forgotten SUSPEND (that is, everything that shows up immediately when newly written code gets its mandatory manual check on minimal test data), then the problems successfully caught fall into 2 categories:

- The most obvious category, and the main reason such tests are written at all, is logical errors, where the required algorithm is implemented incorrectly in some way. The tests revealed up to a dozen such flaws, which manual checks would very likely have missed because specially selected test data was required.

- A less fruitful category, but one that also leads to unacceptable errors, is the imperfection of InterBase itself. Thanks to the tests, the author had an unpleasant encounter with quirks such as an unstable cursor and a join with an SP whose parameters are taken from the tables in the query.

Having learned about the delights of automated testing, an inexperienced developer may want to apply it everywhere. World peace is certainly wonderful too, but what is the price of such aspirations? The tests themselves (preparing a test plan and selecting test data) took the author about 3-4 weeks, not counting the time spent on the infrastructure for running them, which is described in the sections below. It is therefore advisable to weigh the benefits against the costs in each individual case.

From words to deeds

Let us move on to the practical part, first formulating the technical requirements that the automated tests should meet:

- Cross-platform: the tests must run on Windows and on mobile devices. The desktop OS is needed for an obvious reason: a test run is many times faster there, so the tests are developed on it, and the final run is then performed on the mobile OS.

- Use of the DUnitX library: unlike DUnit, it is actively developed and has a ready-made graphical interface on FireMonkey (albeit a mediocre one) that is the same on all platforms.

- Separation of the launch infrastructure (the DUnitX parts) from the actual content of the tests: the test data, the list of SPs with their execution parameters, and the expected results should all be stored in an XML file. This requirement complicates the task somewhat, but it brings two advantages: first, the essence of the tests is not obscured by auxiliary Delphi code, and second, you can painlessly switch to another test library if necessary. A small fragment of such XML helps to illustrate this (it is referred to later in the text):

    <?xml version="1.0" encoding="utf-8"?>
    <>
      <__>
        <_>
          < ="SHOPPING_LIST"/>
        </_>
      </__>
      <__/>
      <>
        <!-- .-->
        <>...</>
        ...
        <!--2 , .-->
        <>
          <_>
            < ="SHOPPING_LIST">
              <>
                <ID ="">1</ID>
                <NAME =""> </NAME>
                <ADD_DATE ="__">1.2.2015</ADD_DATE>
              </>
            </>
            < ="LIST_ITEM">
              <>
                <ID ="">1</ID>
                <LIST_ID ="">1</LIST_ID>
                <GOODS_ID ="">107</GOODS_ID>
                <AMOUNT ="">1</AMOUNT>
                <ADD_DATE ="__">25.2.2015 15:12</ADD_DATE>
              </>
              ...
            </>
          </_>
          < ="RECOMMEND_GOODS_TO_EMPTY_LIST" _="">
            <>
              <_>
                <TARGET_DATE ="__">16.9.2014</TARGET_DATE>
              </_>
            </>
            ...
            <>
              <_>
                <TARGET_DATE ="__">1.3.2015</TARGET_DATE>
              </_>
              <>
                <>
                  <GOODS_ID ="">107</GOODS_ID>
                  <RECOMMENDATION_ID ="">0</RECOMMENDATION_ID>
                  <ACCURACY ="">0.75</ACCURACY>
                </>
              </>
            </>
            ...
          </>
        </>
        <>...</>
        ...
      </>
    </>

Real files are much larger than this example, from 3,000 to 4,500 lines, and editing them as plain text is impractical: the chance of making a mistake in the tests themselves is already high. For that reason the specialized Altova XMLSpy editor, which has a tabular mode for working with XML, was used. This tool lets you focus fully on the substance without being distracted by tags, their nesting, and so on.

DUnitX extension

The selected library lets you place tests in any class by marking the class and its relevant methods with special attributes, for example:

    [TestFixture]
    TTestSet = class
    public
      [SetupFixture]
      procedure Setup;
      [TearDownFixture]
      procedure Teardown;

      [Setup]
      procedure TestSetup;
      [TearDown]
      procedure TestTeardown;

      [Test]
      procedure Test1;
      [Test]
      [TestCase(' 1', '1,1')]
      [TestCase(' 2', '2,2')]
      procedure Test2(const IntegerParameter: Integer; const StringParameter: string);
    end;

Here TTestSet is a test suite (a fixture, in the library's terms) with 2 tests, the second of which is executed twice, once for each specified set of parameters. This standard approach does not suit us, however, because the number of tests and the parameter values are fixed statically at compile time, whereas we need to generate dynamically both the list of fixtures (one per XML file) and the list of tests in each of them (taken from the "Test" tags of the corresponding file).

This obstacle is easily overcome thanks to the plug-in mechanism: DUnitX lets you create fixtures flexibly and fill them as needed. Moreover, the built-in attribute-based mechanism is itself implemented as a plug-in (see DUnitX.FixtureProviderPlugin.pas), which means the mechanism is well tested and should hold no surprises.
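To make the static nature of the attribute approach concrete, here is a minimal sketch of a complete fixture unit in the usual DUnitX form. The unit name, the test-case labels 'Case 1' and 'Case 2', and the method body are illustrative assumptions, not part of the original project; the unit is meant to be added to any existing DUnitX runner project.

```pascal
unit StaticFixtureDemo; // hypothetical unit name

interface

uses
  DUnitX.TestFramework;

type
  [TestFixture]
  TTestSet = class
  public
    // Both parameter sets are literals fixed at compile time.
    [Test]
    [TestCase('Case 1', '1,1')]
    [TestCase('Case 2', '2,2')]
    procedure Test2(const IntegerParameter: Integer; const StringParameter: string);
  end;

implementation

uses
  System.SysUtils;

procedure TTestSet.Test2(const IntegerParameter: Integer; const StringParameter: string);
begin
  // Illustrative check: the two values of each case must agree.
  Assert.AreEqual(IntToStr(IntegerParameter), StringParameter);
end;

initialization
  // Explicit registration, so the fixture is found even without RTTI scanning.
  TDUnitX.RegisterTestFixture(TTestSet);

end.
```

Adding a third case here means editing the source and recompiling, which is the limitation that tests driven by XML files need to avoid.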

"Interface" part of the module

We start the review of the unit containing the new plug-in with the resulting classes, leaving the method implementations for a little later:

    unit Tests.XMLFixtureProviderPlugin;

    interface

    implementation

    uses
      DUnitX.TestFramework, DUnitX.Utils, DUnitX.Extensibility,
      ...
      Xml.XMLDoc,
      {$IFDEF MSWINDOWS}
        Xml.Win.msxmldom
      {$ELSE}
        Xml.omnixmldom
      {$ENDIF};

    type
      TXMLFixtureProviderPlugin = class(TInterfacedObject, IPlugin)
      protected
        procedure GetPluginFeatures(const context: IPluginLoadContext);
      end;

      TXMLFixtureProvider = class(TInterfacedObject, IFixtureProvider)
      protected
        procedure GenerateTests(const Fixture: ITestFixture; const FileName: string);
        procedure Execute(const context: IFixtureProviderContext);
      end;

      TDBTests = class abstract
        ...
      public
        procedure Setup; virtual;
        procedure Teardown; virtual;

        procedure TestSetup; virtual; abstract;
        procedure TestTeardown; virtual; abstract;

        procedure Test(const TestIndex: Integer); virtual; abstract;
      end;

      TXMLBasedDBTests = class(TDBTests)
      private
        const
          TestsTag = '';
          ...
      private
        FFileName: string;
        FXML: TXMLDocument;
        ...
      public
        procedure AfterConstruction; override;
        { AfterConstruction – Setup . : https://github.com/VSoftTechnologies/DUnitX/commit/267111f4feec77d51bf2307a194f44106d499680#diff-745fb4ee38a43631f57d1b6ef88e0ffcR212 }
        destructor Destroy; override;

        [SetupFixture]
        procedure Setup; override;
        [TearDownFixture]
        procedure Teardown; override;

        [Setup]
        procedure TestSetup; override;
        [TearDown]
        procedure TestTeardown; override;

        [Test]
        procedure Test(const TestIndex: Integer); override;

        function DetermineTestIndexes: TArray<Integer>;

        property FileName: string read FFileName write FFileName;
      end;

    // ...

    initialization
      TDUnitX.RegisterPlugin(TXMLFixtureProviderPlugin.Create);

    end.

The first two classes, TXMLFixtureProviderPlugin and TXMLFixtureProvider, are needed to plug into the existing system and are interesting only for the implementation of their methods. The next one, TDBTests, is also of little interest, since it exists in the hierarchy mainly to encapsulate database-specific things, so we go straight to its descendant, TXMLBasedDBTests. Its responsibilities are tied to the stages of its life cycle:

- Immediately after the application that runs the tests starts, DUnitX builds the list of fixtures. At this point objects of this class are created and their DetermineTestIndexes method is called, which returns the indices of the child nodes of the Tests node (see the XML fragment above). Its implementation cannot take the easy route of counting the child nodes and returning, say, a sequence of indices from 1 to N: some of the nodes are comments, and a test can also be temporarily disabled (instead of being removed from the file). As a result, a test (a test case, strictly speaking) is added to the fixture for each index obtained.

- This stage may not happen at all, but if the user gives the command to run the tests (by pressing a button), the following chain of calls takes place:

  - Setup
  - Test is executed repeatedly, receiving the indices obtained at the first stage. Each call is preceded by TestSetup and followed by TestTeardown.
  - Teardown
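The filtering that DetermineTestIndexes has to perform can be sketched as a small console program. The article's listing elides the actual condition, so everything specific here is an assumption made for illustration: the tag names Root, Tests and Test, the hypothetical Disabled="true" attribute used to switch a test off, and the helper IsRunnableTest.

```pascal
program FilterTestNodes; // hypothetical demo program

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.Generics.Collections,
  Xml.xmldom, Xml.XMLIntf, Xml.XMLDoc, Xml.omnixmldom;

const
  // Assumed sample document: one comment, two active tests, one disabled test.
  SampleXML =
    '<Root><Tests>' +
    '<!-- a comment node -->' +
    '<Test/>' +
    '<Test Disabled="true"/>' +
    '<Test/>' +
    '</Tests></Root>';

// Assumed rule: only element nodes are tests, and Disabled="true" skips one.
function IsRunnableTest(const Node: IXMLNode): Boolean;
begin
  Result := (Node.NodeType = ntElement) and
    not (Node.HasAttribute('Disabled') and
         SameText(Node.Attributes['Disabled'], 'true'));
end;

// Collects the positions of runnable tests among all child nodes.
function DetermineTestIndexes(const TestsNode: IXMLNode): TArray<Integer>;
var
  TestIndex: Integer;
  TestIndexList: TList<Integer>;
begin
  TestIndexList := TList<Integer>.Create;
  try
    for TestIndex := 0 to TestsNode.ChildNodes.Count - 1 do
      if IsRunnableTest(TestsNode.ChildNodes[TestIndex]) then
        TestIndexList.Add(TestIndex);
    Result := TestIndexList.ToArray;
  finally
    TestIndexList.Free;
  end;
end;

var
  Doc: IXMLDocument;
  Index: Integer;
begin
  // OmniXML is the vendor the article uses off Windows; it needs no COM setup.
  DefaultDOMVendor := sOmniXmlVendor;
  Doc := LoadXMLData(SampleXML);
  for Index in DetermineTestIndexes(Doc.DocumentElement.ChildNodes['Tests']) do
    Writeln(Index);
end.
```

Because the returned values are positions among all child nodes, Test can later address a node directly through ChildNodes[TestIndex] without repeating the filtering.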

Method implementations

Now that the purpose of the classes is clear, it remains to show the rest of the code:

TXMLFixtureProviderPlugin

    procedure TXMLFixtureProviderPlugin.GetPluginFeatures(const context: IPluginLoadContext);
    begin
      context.RegisterFixtureProvider(TXMLFixtureProvider.Create);
    end;

TXMLFixtureProvider

    procedure TXMLFixtureProvider.Execute(const context: IFixtureProviderContext);
    var
      XMLDirectory, XMLFile: string;
    begin
      {$IFDEF MSWINDOWS}
      XMLDirectory := { .};
      {$ELSE}
      XMLDirectory := TPath.GetDocumentsPath;
      {$ENDIF}

      for XMLFile in TDirectory.GetFiles(XMLDirectory, '*.xml') do
        GenerateTests
        (
          context.CreateFixture(TXMLBasedDBTests, TPath.GetFileNameWithoutExtension(XMLFile), ''),
          XMLFile
        );
    end;

    procedure TXMLFixtureProvider.GenerateTests(const Fixture: ITestFixture; const FileName: string);

      procedure FillSetupAndTeardownMethods(const RTTIMethod: TRttiMethod);
      var
        Method: TMethod;
        TestMethod: TTestMethod;
      begin
        Method.Data := Fixture.FixtureInstance;
        Method.Code := RTTIMethod.CodeAddress;
        TestMethod := TTestMethod(Method);

        if RTTIMethod.HasAttributeOfType<SetupFixtureAttribute> then
          Fixture.SetSetupFixtureMethod(RTTIMethod.Name, TestMethod);
        if RTTIMethod.HasAttributeOfType<TearDownFixtureAttribute> then
          Fixture.SetTearDownFixtureMethod(RTTIMethod.Name, TestMethod, RTTIMethod.IsDestructor);
        if RTTIMethod.HasAttributeOfType<SetupAttribute> then
          Fixture.SetSetupTestMethod(RTTIMethod.Name, TestMethod);
        if RTTIMethod.HasAttributeOfType<TearDownAttribute> then
          Fixture.SetTearDownTestMethod(RTTIMethod.Name, TestMethod);
      end;

    var
      XMLTests: TXMLBasedDBTests;
      RTTIContext: TRttiContext;
      RTTIMethod: TRttiMethod;
      TestIndex: Integer;
    begin
      XMLTests := Fixture.FixtureInstance as TXMLBasedDBTests;
      XMLTests.FileName := FileName;

      RTTIContext := TRttiContext.Create;
      try
        for RTTIMethod in RTTIContext.GetType(Fixture.TestClass).GetMethods do
        begin
          FillSetupAndTeardownMethods(RTTIMethod);

          if RTTIMethod.HasAttributeOfType<TestAttribute> then
            for TestIndex in XMLTests.DetermineTestIndexes do
              Fixture.AddTestCase(
                RTTIMethod.Name,
                TestIndex.ToString,
                '',
                '',
                RTTIMethod,
                True,
                [TestIndex]
              );
        end;
      finally
        RTTIContext.Free;
      end;
    end;

TDBTests

    procedure TDBTests.Setup;
    begin
      ...
    end;

    procedure TDBTests.Teardown;
    begin
      ...
    end;

TXMLBasedDBTests

    procedure TXMLBasedDBTests.AfterConstruction;
    begin
      inherited;

      // FXML.
      ...
      FXML.DOMVendor := GetDOMVendor({$IFDEF MSWINDOWS} SMSXML {$ELSE} sOmniXmlVendor {$ENDIF});
      ...
    end;

    destructor TXMLBasedDBTests.Destroy;
    begin
      ...
      inherited;
    end;

    function TXMLBasedDBTests.DetermineTestIndexes: TArray<Integer>;
    var
      TestsNode: IXMLNode;
      TestIndex: Integer;
      TestIndexList: TList<Integer>;
    begin
      FXML.LoadFromFile(FFileName);
      try
        TestsNode := FXML.DocumentElement.ChildNodes[TestsTag];

        TestIndexList := TList<Integer>.Create;
        try
          for TestIndex := 0 to TestsNode.ChildNodes.Count - 1 do
            if { ?} then
              TestIndexList.Add(TestIndex);

          Result := TestIndexList.ToArray;
        finally
          TestIndexList.Free;
        end;
      finally
        FXML.Active := False;
      end;
    end;

    procedure TXMLBasedDBTests.Setup;
    begin
      inherited;
      FXML.LoadFromFile(FFileName);
    end;

    procedure TXMLBasedDBTests.Teardown;
    begin
      FXML.Active := False;
      inherited;
    end;

    procedure TXMLBasedDBTests.TestSetup;
    begin
      inherited;
      ...
    end;

    procedure TXMLBasedDBTests.TestTeardown;
    begin
      inherited;
      ...
    end;

    procedure TXMLBasedDBTests.Test(const TestIndex: Integer);
    var
      TestNode: IXMLNode;
    begin
      inherited;

      TestNode := FXML.DocumentElement.ChildNodes[TestsTag].ChildNodes[TestIndex];
      ...
    end;

Graphical interface

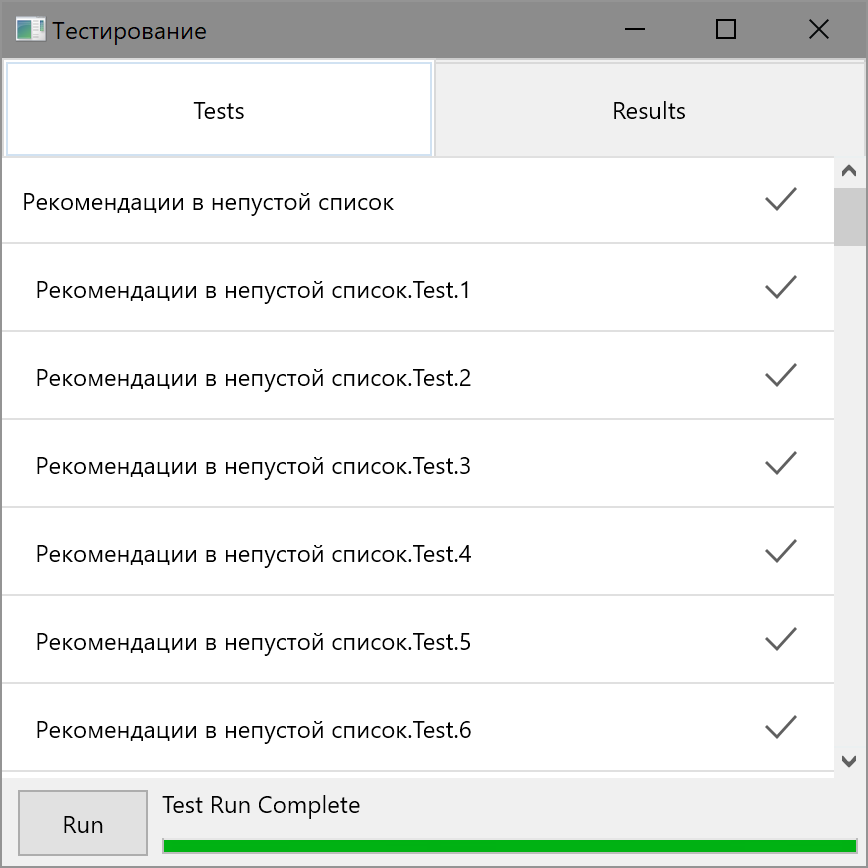

The requirements for the automated tests mentioned a ready-made form for managing the run and viewing its results. This is DUnitX.Loggers.MobileGUI.pas, which was used after minor cosmetic improvements. The results of a run on 3 platforms, with one intentionally failing test, are shown below:

- Windows (run time 7 s)

- Android (run time 35 s)

- iOS (run time 28 s)

Source: https://habr.com/ru/post/333548/
