AWS Deep Learning AMI: why (and how) it is worth using

Sometimes good things come for free ...

What is an AMI?

For those of you who do not know what an AMI is, let me quote the official documentation:

An Amazon Machine Image (AMI) provides the information required to launch an instance, which is a virtual server in the cloud. You specify an AMI when you launch an instance, and you can launch as many instances from the AMI as you need. You can also launch instances from as many different AMIs as you need.

This should be enough to follow the rest of the article, but I would advise you to spend some time with the official AMI documentation.

Deep learning is a set of machine learning algorithms that attempt to model high-level abstractions in data using architectures composed of many non-linear transformations.

Deep learning is part of a wider family of machine learning methods based on learning representations of data. An observation (for example, an image) can be represented in many ways, such as a vector of intensity values per pixel, or (in a more abstract form) as a set of primitives, regions of a particular shape, and so on.

On the other hand, some argue that deep learning is nothing more than a buzzword or a rebranding of neural networks (source: Wikipedia).

What is the AWS Deep Learning AMI (aka DLAMI) and why should you use it?

Neural networks can be trained in two ways: on a CPU or on a GPU. It is no secret that training on a GPU is faster (and, as a result, cheaper) than training on a CPU, which is why all modern machine learning frameworks support GPUs. However, simply having a GPU is not enough to get these benefits; you also have to jump through a few hoops:

- Get the GPU itself.

- Install and configure its driver.

- Find libraries that can use all the features of your GPU. They must be compatible with the hardware and driver from the first two steps.

- Have a neural network framework that was compiled against the libraries you found.

So what does it take to clear all four of these hurdles? There are two options:

- Download the source code of the framework and of the GPU libraries, then build everything yourself in the desired configuration (in practice, this is even harder than it sounds).

- Find a build of the framework with GPU support, install everything it needs, and hope that it works.

Each option has its own pros and cons, but they share one big drawback: both require some technical knowledge from the user. This is the main reason why fewer people train neural networks on GPUs than we would like.

How does DLAMI solve this problem? Quite simply: DLAMI is the first free solution that includes everything you need right out of the box:

- Drivers for the latest NVIDIA GPUs;

- Recent versions of the CUDA and cuDNN libraries;

- Prebuilt frameworks with GPU support (compiled against the versions of CUDA and cuDNN that ship in the AMI).

Here, by the way, is the list of frameworks that work out of the box:

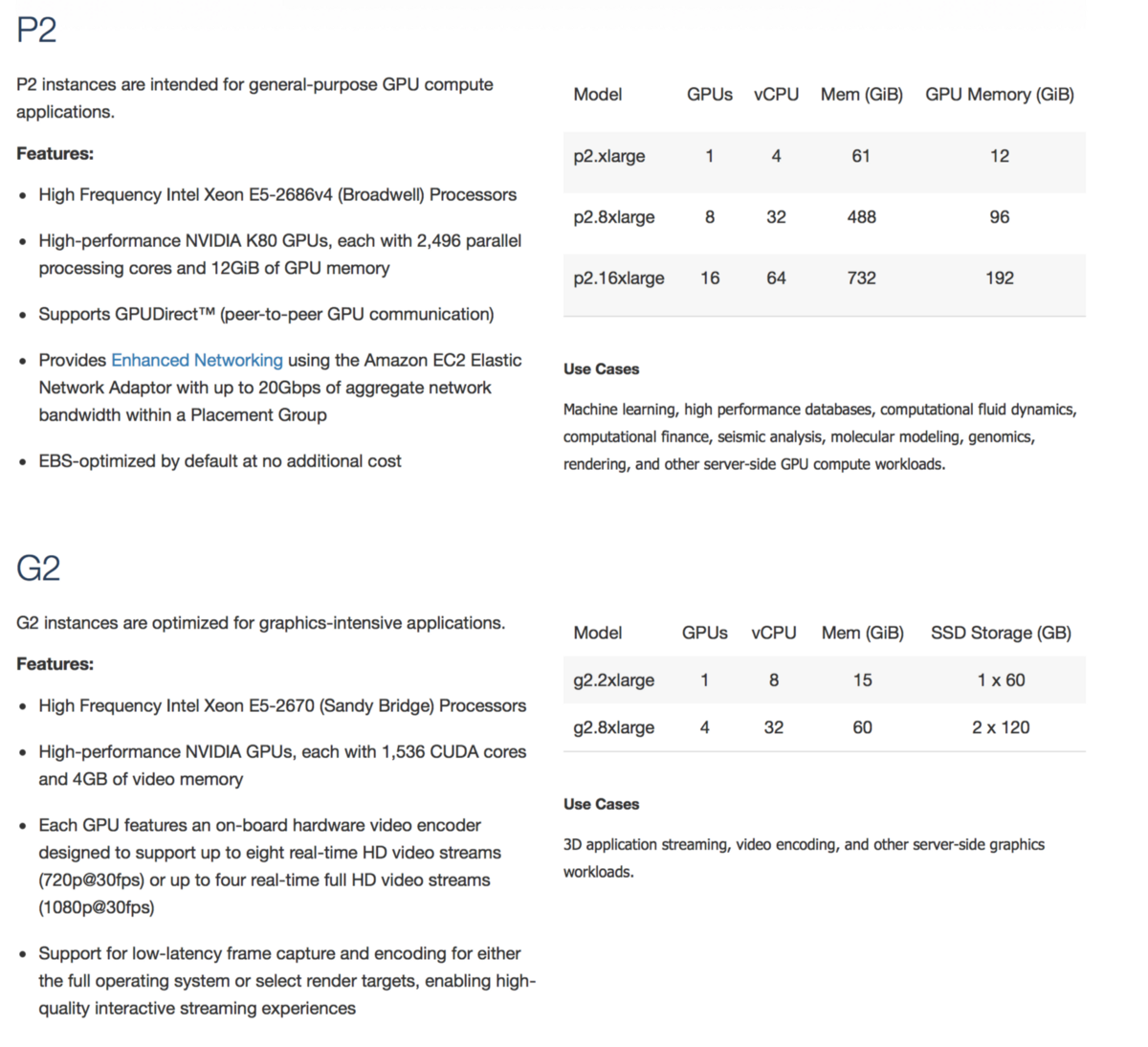
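On a running instance, a quick sanity check of the pieces listed above might look like the following. This is only a minimal sketch: the function names and the default framework module names (`tensorflow`, `mxnet`, `torch`) are assumptions chosen for illustration, not the official DLAMI list, and `nvcc` may be installed without being on the `PATH`.

```python
import importlib.util
import shutil
import subprocess


def gpu_stack_report(frameworks=("tensorflow", "mxnet", "torch")):
    """Report which parts of the GPU stack are visible on this machine.

    The default framework names are illustrative assumptions.
    """
    report = {
        # nvidia-smi is installed together with the NVIDIA driver.
        "nvidia-smi": shutil.which("nvidia-smi") is not None,
        # nvcc is the compiler shipped with the CUDA toolkit.
        "nvcc": shutil.which("nvcc") is not None,
    }
    for name in frameworks:
        # find_spec locates a top-level module without importing it.
        report[name] = importlib.util.find_spec(name) is not None
    return report


def driver_summary():
    """Return the GPU list printed by `nvidia-smi -L`, or None if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "-L"], capture_output=True, text=True
    )
    return result.stdout.strip() if result.returncode == 0 else None


if __name__ == "__main__":
    for component, present in gpu_stack_report().items():
        print(f"{component}: {'found' if present else 'missing'}")
    print(driver_summary() or "No NVIDIA driver detected")
```

Note that finding a framework module only shows that it is installed; whether that particular build actually uses the GPU still has to be checked with the framework's own tools.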

DLAMI can be used with GPU instances on AWS, for example P2 or G2.

You can also try playing around with the newly released G3 instances.

I hope we now have an answer to the question of why, and for whom, DLAMI is worth using. Now let's move on to the next question...

How exactly do you create a machine with DLAMI?

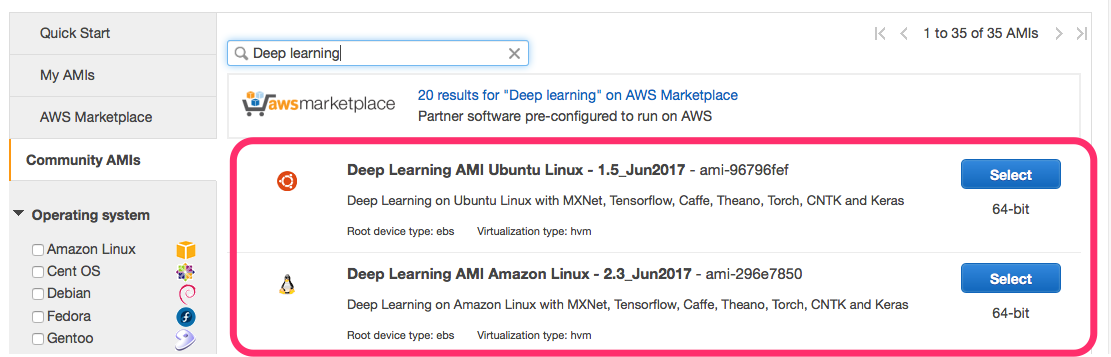

To do this, you first need to choose which flavor of DLAMI you prefer:

- Ubuntu-based (can be used with any Ubuntu packages).

- Amazon Linux-based (includes all the AWS tools, such as awscli, out of the box).

Once you have settled on the DLAMI flavor, there are several ways to create a DLAMI-based machine:

- Using the AWS Marketplace:

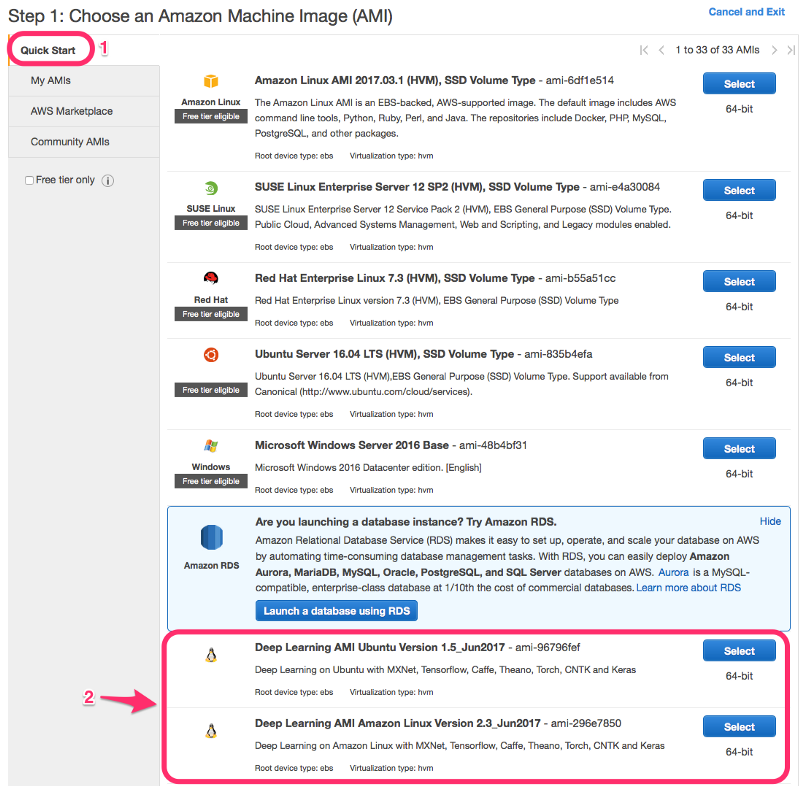

- Using the EC2 console.

The EC2 console actually offers two ways to do this: the regular launch flow, and a quick launch that applies the default configuration.
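Besides the console, an instance can also be launched from code. The following is a minimal sketch using the boto3 SDK, under several assumptions: boto3 is installed and AWS credentials are configured, the helper names are made up for this example, and the AMI ID, key pair name, and region are placeholders that you must replace with the values for the DLAMI you chose.

```python
def dlami_launch_params(image_id, key_name, instance_type="p2.xlarge"):
    """Build the keyword arguments for the EC2 run_instances call.

    image_id and key_name are placeholders supplied by the caller;
    p2.xlarge is just one example of a GPU instance type.
    """
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "KeyName": key_name,
        "MinCount": 1,  # launch exactly one instance
        "MaxCount": 1,
    }


def launch_dlami(image_id, key_name, region="us-east-1"):
    """Launch one instance from the given AMI and return its instance ID."""
    # boto3 is a third-party package; it is imported lazily so that the
    # parameter helper above can be used without it.
    import boto3

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**dlami_launch_params(image_id, key_name))
    return response["Instances"][0]["InstanceId"]


if __name__ == "__main__":
    # "ami-xxxxxxxx" and "my-key" are hypothetical placeholders.
    print(dlami_launch_params("ami-xxxxxxxx", "my-key"))
```

Keeping the parameters in a separate function makes it easy to inspect what will be requested before anything is launched (and billed).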

Update issues

One caveat needs to be discussed. Since all the frameworks are built from source, you cannot simply upgrade a framework to its latest version: you risk ending up with a build that lacks GPU support (or that is incompatible with the installed CUDA version). So upgrade packages at your own risk!

Admittedly, this makes moving to new framework versions harder, since you have to switch to a new AMI rather than just upgrade a package, and switching to a new AMI can be painful. Keep this in mind when creating a new instance: I would advise you to keep your data on a separate EBS volume, which you can easily detach and attach to a new instance running an updated version of the AMI. Alternatively, store the data in a repository.

In practice, I have found that this is not much of a problem for machines that are used for research only for a short time. Besides, DLAMI usually includes fairly recent versions of the frameworks.
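The separate data volume suggested above could be created and attached roughly as follows. Again, this is only a sketch with boto3: the helper names, the 100 GiB size, the `gp2` volume type, the `/dev/sdf` device name, and the region are assumptions for illustration. The volume must be created in the same availability zone as the instance it will be attached to.

```python
def data_volume_params(availability_zone, size_gib=100):
    """Build the keyword arguments for the EC2 create_volume call.

    The size and volume type are illustrative defaults, not recommendations.
    """
    return {
        "AvailabilityZone": availability_zone,
        "Size": size_gib,  # size in GiB
        "VolumeType": "gp2",
    }


def create_and_attach(instance_id, availability_zone,
                      device="/dev/sdf", region="us-east-1"):
    """Create a data volume, wait until it is available, and attach it."""
    # boto3 is a third-party package, imported lazily.
    import boto3

    ec2 = boto3.client("ec2", region_name=region)
    volume = ec2.create_volume(**data_volume_params(availability_zone))
    volume_id = volume["VolumeId"]
    # Block until the new volume can be attached.
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])
    ec2.attach_volume(
        VolumeId=volume_id, InstanceId=instance_id, Device=device
    )
    return volume_id


if __name__ == "__main__":
    # "us-east-1a" is a hypothetical availability zone.
    print(data_volume_params("us-east-1a"))
```

When moving to a newer AMI, the same volume can be detached from the old instance and attached to the new one, so the data outlives any single machine.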


Source: https://habr.com/ru/post/333380/
