Integrating Oculus Rift into a desktop Direct3D application, using Renga as an example

Hello! In this article I want to walk through the process of connecting a virtual reality headset to a Windows desktop application. The headset in question is the Oculus Rift.

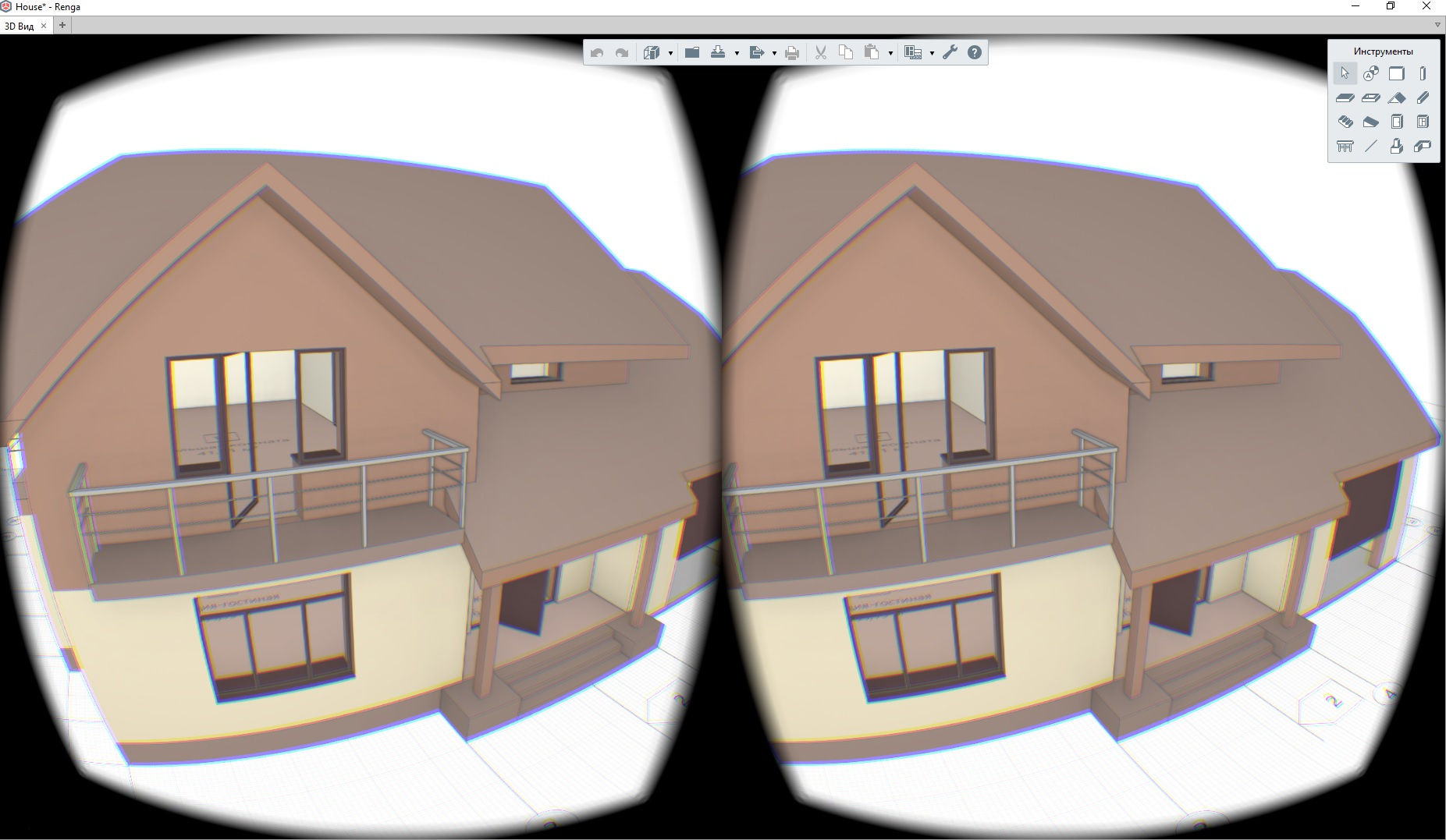

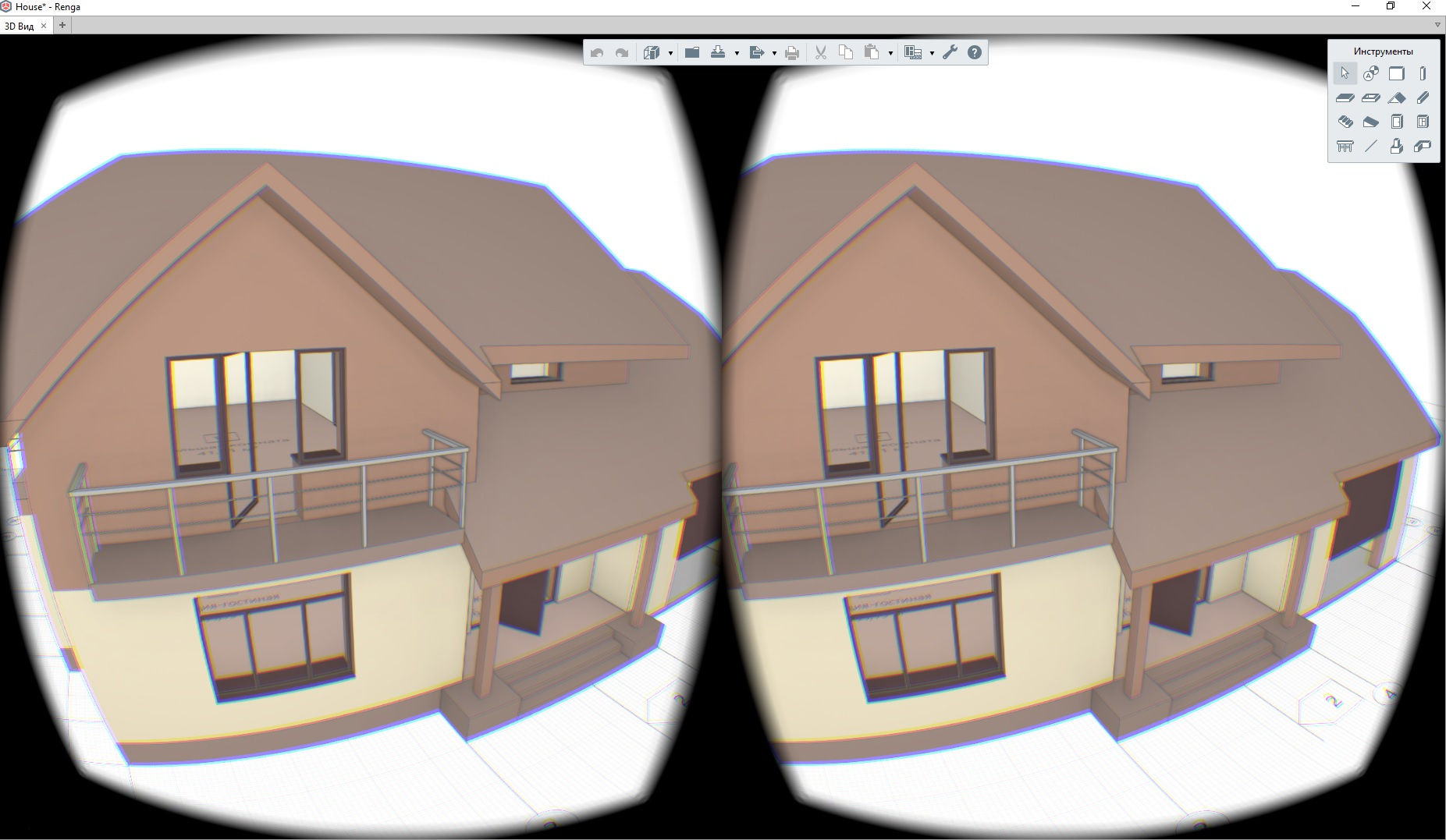

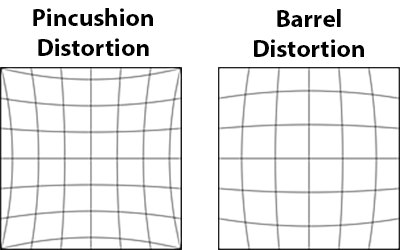

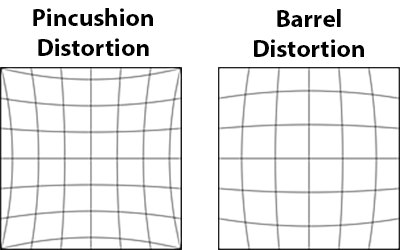

Architectural visualization is fertile ground for all kinds of experiments, and we decided to keep up with the trend. One of the upcoming versions of our BIM systems (a reminder: I work at Renga Software, a joint venture of ASCON and 1C), Renga Architecture for architectural and construction design and Renga Structure for designing the structural part of buildings, will let you walk through the designed building in a virtual reality headset. This is very convenient for demonstrating a project to the customer and for evaluating design decisions from an ergonomics standpoint.


The SDK is available for download from the headset developer's website. At the time of writing, the latest available version is 1.16. There is also OpenVR from Valve. I have not tried it myself, but I suspect it works worse with the Oculus than the native SDK.

Let us go through the main stages of connecting the headset to the application. First you need to initialize the device:

```cpp
#define OVR_D3D_VERSION 11 // when using Direct3D 11
#include "OVR_CAPI_D3D.h"

// On success, session and luid are filled in for the rest of the application.
bool InitOculus(ovrSession& session, ovrGraphicsLuid& luid)
{
    // Initializes LibOVR and the Rift
    ovrInitParams initParams = { ovrInit_RequestVersion, OVR_MINOR_VERSION, NULL, 0, 0 };
    if (!OVR_SUCCESS(ovr_Initialize(&initParams)))
        return false;

    if (!OVR_SUCCESS(ovr_Create(&session, &luid)))
    {
        ovr_Shutdown();
        return false;
    }

    // EyeLevel places the tracking origin at eye (headset) height;
    // FloorLevel would instead give tracking poses where the floor height is 0
    if (!OVR_SUCCESS(ovr_SetTrackingOriginType(session, ovrTrackingOrigin_EyeLevel)))
        return false;

    return true;
}
```

Initialization is complete. We now have a session, which is a pointer to the internal ovrHmdStruct structure. We will use it for all requests to the Oculus runtime. luid is the identifier of the graphics adapter the headset is connected to. It matters on configurations with several video cards and on laptops: the application must render on the same adapter.
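To make the adapter requirement concrete, here is a minimal sketch of matching the LUID reported by the runtime against a list of adapters. It is not taken from Renga. GraphicsLuid and AdapterLuid are stand-in types so that the sketch compiles without the SDK or Windows headers, and FindAdapterIndex, MakeLuid and AdapterSelectionExample are hypothetical names. In a real application the list would come from IDXGIFactory::EnumAdapters, with each LUID read from DXGI_ADAPTER_DESC::AdapterLuid.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Stand-in for ovrGraphicsLuid: the SDK exposes the LUID as 8 opaque bytes.
struct GraphicsLuid {
    char Reserved[8];
};

// Stand-in for the LUID stored in a DXGI adapter description (hypothetical type).
struct AdapterLuid {
    std::uint32_t LowPart;
    std::int32_t HighPart;
};

// Returns the index of the adapter whose LUID matches the one reported by the
// headset runtime, or -1 if none matches.
int FindAdapterIndex(const std::vector<AdapterLuid>& adapters, const GraphicsLuid& luid)
{
    static_assert(sizeof(AdapterLuid) == sizeof(GraphicsLuid), "both LUIDs must be 8 bytes");
    for (std::size_t i = 0; i < adapters.size(); ++i) {
        if (std::memcmp(&adapters[i], &luid, sizeof(luid)) == 0)
            return static_cast<int>(i);
    }
    return -1;
}

// Helper for the example: packs a LUID into the opaque 8-byte form.
GraphicsLuid MakeLuid(std::uint32_t low, std::int32_t high)
{
    const AdapterLuid src{low, high};
    GraphicsLuid out{};
    std::memcpy(&out, &src, sizeof(out));
    return out;
}

// Usage example with made-up LUID values: the headset sits on the second adapter.
void AdapterSelectionExample()
{
    const std::vector<AdapterLuid> adapters = { {0x1111u, 0}, {0x2222u, 0} };
    const GraphicsLuid headsetLuid = MakeLuid(0x2222u, 0);
    assert(FindAdapterIndex(adapters, headsetLuid) == 1);
}
```

The matching adapter is the one to pass to D3D11CreateDevice when creating the device used for rendering.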

Rendering a frame for the Oculus Rift is not very different from rendering one in the usual mode.

For each eye, we need to create a texture with a SwapChain and a RenderTarget.

The Oculus SDK provides a set of functions for creating these textures.

An example of a wrapper for creating and storing the SwapChain and RenderTarget for each eye:

```cpp
#include <vector>
#include <d3d11.h>

// pDevice is the application's ID3D11Device*, created on the adapter identified by luid.
struct EyeTexture
{
    ovrSession Session;
    ovrTextureSwapChain TextureChain;
    std::vector<ID3D11RenderTargetView*> TexRtv;

    EyeTexture() : Session(nullptr), TextureChain(nullptr) { }

    bool Create(ovrSession session, int sizeW, int sizeH)
    {
        Session = session;

        ovrTextureSwapChainDesc desc = {};
        desc.Type = ovrTexture_2D;
        desc.ArraySize = 1;
        desc.Format = OVR_FORMAT_R8G8B8A8_UNORM_SRGB;
        desc.Width = sizeW;
        desc.Height = sizeH;
        desc.MipLevels = 1;
        desc.SampleCount = 1;
        desc.MiscFlags = ovrTextureMisc_DX_Typeless;
        desc.BindFlags = ovrTextureBind_DX_RenderTarget;
        desc.StaticImage = ovrFalse;

        ovrResult result = ovr_CreateTextureSwapChainDX(Session, pDevice, &desc, &TextureChain);
        if (!OVR_SUCCESS(result))
            return false;

        int textureCount = 0;
        ovr_GetTextureSwapChainLength(Session, TextureChain, &textureCount);
        for (int i = 0; i < textureCount; ++i)
        {
            ID3D11Texture2D* tex = nullptr;
            ovr_GetTextureSwapChainBufferDX(Session, TextureChain, i, IID_PPV_ARGS(&tex));

            // The swap chain is typeless, so the view picks the non-sRGB format
            D3D11_RENDER_TARGET_VIEW_DESC rtvd = {};
            rtvd.Format = DXGI_FORMAT_R8G8B8A8_UNORM;
            rtvd.ViewDimension = D3D11_RTV_DIMENSION_TEXTURE2D;

            ID3D11RenderTargetView* rtv = nullptr;
            HRESULT hr = pDevice->CreateRenderTargetView(tex, &rtvd, &rtv);
            tex->Release();
            if (FAILED(hr))
                return false;
            TexRtv.push_back(rtv);
        }
        return true;
    }

    ~EyeTexture()
    {
        for (int i = 0; i < (int)TexRtv.size(); ++i)
        {
            TexRtv[i]->Release();
        }
        if (TextureChain)
        {
            ovr_DestroyTextureSwapChain(Session, TextureChain);
        }
    }

    // Render target view for the texture the runtime expects us to draw into next
    ID3D11RenderTargetView* GetRTV()
    {
        int index = 0;
        ovr_GetTextureSwapChainCurrentIndex(Session, TextureChain, &index);
        return TexRtv[index];
    }

    // Call after rendering into the current texture
    void Commit()
    {
        ovr_CommitTextureSwapChain(Session, TextureChain);
    }
};
```

Using this wrapper, we create a texture for each eye, into which we will draw the 3D scene. The texture size has to be obtained from the Oculus runtime. To do this, get the device description and call ovr_GetFovTextureSize to get the required texture size for each eye:

```cpp
ovrHmdDesc hmdDesc = ovr_GetHmdDesc(session);
ovrSizei idealSize = ovr_GetFovTextureSize(session, (ovrEyeType)eye, hmdDesc.DefaultEyeFov[eye], 1.0f);
```

It is also convenient to create a so-called mirror texture. This texture can be displayed in the application window. The Oculus runtime copies the post-processed image for both eyes into it.

Without post-processing, the person wearing the headset would see the image through an optical system with positive (pincushion) distortion (image on the left). To compensate, the Oculus runtime applies negative (barrel) distortion (image on the right).
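The idea of compensating one distortion with the opposite one can be illustrated with a toy single-coefficient radial model. This is only a sketch under my own assumptions: the model, the coefficient 0.2 and the names Distort, PreDistort and DistortionExample are made up for illustration and are not the Rift's actual lens parameters or the runtime's algorithm.

```cpp
#include <cassert>
#include <cmath>

// Toy radial model: r is the distance of a point from the lens center.
// k > 0 pushes points outward (pincushion), k < 0 pulls them inward (barrel).
double Distort(double r, double k)
{
    return r * (1.0 + k * r * r);
}

// Finds the pre-distorted radius that a lens with coefficient k >= 0 maps back
// to r, using fixed-point iteration on rPre = r / (1 + k * rPre^2).
// Intended for small k and r, where the iteration converges quickly.
double PreDistort(double r, double k)
{
    assert(r >= 0.0 && k >= 0.0);
    double rPre = r;
    for (int i = 0; i < 32; ++i)
        rPre = r / (1.0 + k * rPre * rPre);
    return rPre;
}

// Usage example: a point at the edge of the image (r = 1) is pulled inward
// before display, so that the lens pushes it back to where it should be.
void DistortionExample()
{
    const double k = 0.2;                    // made-up lens coefficient
    const double rPre = PreDistort(1.0, k);
    assert(rPre < 1.0);                      // barrel-like: moved toward the center
    assert(std::fabs(Distort(rPre, k) - 1.0) < 1e-9);
}
```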

The code for creating the mirror texture:

```cpp
// Create a mirror to see on the monitor.
// width and height are the size of the application window.
ovrMirrorTexture mirrorTexture = nullptr;
ovrMirrorTextureDesc mirrorDesc = {};
mirrorDesc.Format = OVR_FORMAT_R8G8B8A8_UNORM_SRGB;
mirrorDesc.Width = width;
mirrorDesc.Height = height;
ovr_CreateMirrorTextureDX(session, pDXDevice, &mirrorDesc, &mirrorTexture);
```

There is an important point to keep in mind when creating textures. When creating a SwapChain with ovr_CreateTextureSwapChainDX, we pass in the desired texture format. The Oculus runtime later uses this format for post-processing.

For everything to work correctly, the application must create the swap chain in the sRGB color space, for example OVR_FORMAT_R8G8B8A8_UNORM_SRGB. If your application does not do gamma correction itself, you still create the swap chain in an sRGB format, but set the ovrTextureMisc_DX_Typeless flag in ovrTextureSwapChainDesc and create the Render Target in the DXGI_FORMAT_R8G8B8A8_UNORM format. Otherwise the image on the screen will be too light.
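Here is a small numeric sketch of why the image ends up too light, as I understand it. Writing through an sRGB render target view applies the linear-to-sRGB encoding to whatever the shader outputs. If the application's colors are already gamma-encoded, they get encoded a second time. The functions below implement the standard sRGB transfer curves. The names LinearToSrgb, SrgbToLinear and GammaExample are mine, and the value 0.5 is only an example.

```cpp
#include <cassert>
#include <cmath>

// Standard linear -> sRGB encoding for one color channel in [0, 1].
double LinearToSrgb(double c)
{
    assert(c >= 0.0 && c <= 1.0);
    return c <= 0.0031308 ? 12.92 * c : 1.055 * std::pow(c, 1.0 / 2.4) - 0.055;
}

// Standard sRGB -> linear decoding for one color channel in [0, 1].
double SrgbToLinear(double c)
{
    assert(c >= 0.0 && c <= 1.0);
    return c <= 0.04045 ? c / 12.92 : std::pow((c + 0.055) / 1.055, 2.4);
}

// Usage example: an application without gamma correction outputs a mid-gray
// that is already gamma-encoded.
void GammaExample()
{
    const double appOutput = 0.5;

    // UNORM view over a typeless texture: the value is stored unchanged.
    const double storedViaUnorm = appOutput;

    // sRGB view: the value is encoded again and becomes noticeably lighter.
    const double storedViaSrgb = LinearToSrgb(appOutput);

    assert(storedViaSrgb > storedViaUnorm);
    assert(std::fabs(SrgbToLinear(storedViaSrgb) - appOutput) < 1e-12);
}
```

With the typeless swap chain and the UNORM view, the stored value stays at 0.5 and the runtime, which treats the texture as sRGB, interprets it as intended.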

Once the headset is connected and a texture has been created for each eye, we draw the scene into the corresponding texture. This is done in the usual Direct3D way, so there is nothing interesting here. The only extra step is getting the headset's pose, which is done like this:

```cpp
ovrHmdDesc hmdDesc = ovr_GetHmdDesc(session);
ovrEyeRenderDesc eyeRenderDesc[2];
eyeRenderDesc[0] = ovr_GetRenderDesc(session, ovrEye_Left, hmdDesc.DefaultEyeFov[0]);
eyeRenderDesc[1] = ovr_GetRenderDesc(session, ovrEye_Right, hmdDesc.DefaultEyeFov[1]);

// Get both eye poses simultaneously, with IPD offset already included.
ovrPosef EyeRenderPose[2];
ovrVector3f HmdToEyeOffset[2] = { eyeRenderDesc[0].HmdToEyeOffset, eyeRenderDesc[1].HmdToEyeOffset };

double sensorSampleTime; // sensorSampleTime is fed into the layer later
// frameIndex is the application's frame counter
ovr_GetEyePoses(session, frameIndex, ovrTrue, HmdToEyeOffset, EyeRenderPose, &sensorSampleTime);
```

Now, when rendering a frame, remember to set the correct view matrix for each eye, taking the headset's pose into account.
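As an illustration of that last step, here is a minimal sketch of turning an eye pose into a view matrix by inverting the pose's rigid transform. It is not the code used in Renga. Vec3, Quat and Pose are stand-in types that mirror the position and orientation layout of ovrPosef so the sketch compiles without the SDK, and Mat4, ViewFromPose and TransformPoint are hypothetical names. The sketch assumes a right-handed coordinate system and column vectors. A real application would also combine the headset pose with its own camera position in the scene.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };
struct Quat { float x, y, z, w; };                 // unit quaternion
struct Pose { Quat Orientation; Vec3 Position; };  // stand-in for ovrPosef
struct Mat4 { float m[4][4]; };                    // m[row][col], column vectors

// Builds the view matrix for a pose: the inverse of "rotate by Orientation,
// then translate by Position", i.e. [R^T | -R^T * p].
Mat4 ViewFromPose(const Pose& pose)
{
    const Quat& q = pose.Orientation;
    // Rotation matrix of the unit quaternion
    const float r[3][3] = {
        { 1 - 2 * (q.y * q.y + q.z * q.z), 2 * (q.x * q.y - q.w * q.z), 2 * (q.x * q.z + q.w * q.y) },
        { 2 * (q.x * q.y + q.w * q.z), 1 - 2 * (q.x * q.x + q.z * q.z), 2 * (q.y * q.z - q.w * q.x) },
        { 2 * (q.x * q.z - q.w * q.y), 2 * (q.y * q.z + q.w * q.x), 1 - 2 * (q.x * q.x + q.y * q.y) },
    };
    const float p[3] = { pose.Position.x, pose.Position.y, pose.Position.z };

    Mat4 view = {};
    for (int row = 0; row < 3; ++row) {
        for (int col = 0; col < 3; ++col)
            view.m[row][col] = r[col][row];  // transpose of the rotation
        view.m[row][3] = -(r[0][row] * p[0] + r[1][row] * p[1] + r[2][row] * p[2]);
    }
    view.m[3][3] = 1.0f;
    return view;
}

// Applies the matrix to a point (w = 1).
Vec3 TransformPoint(const Mat4& a, const Vec3& v)
{
    return {
        a.m[0][0] * v.x + a.m[0][1] * v.y + a.m[0][2] * v.z + a.m[0][3],
        a.m[1][0] * v.x + a.m[1][1] * v.y + a.m[1][2] * v.z + a.m[1][3],
        a.m[2][0] * v.x + a.m[2][1] * v.y + a.m[2][2] * v.z + a.m[2][3],
    };
}

// Usage example: the eye's own position must land at the view-space origin.
void ViewMatrixExample()
{
    const Pose pose = { {0.0f, 0.0f, 0.0f, 1.0f}, {1.0f, 2.0f, 3.0f} };
    const Vec3 eye = TransformPoint(ViewFromPose(pose), pose.Position);
    assert(std::fabs(eye.x) < 1e-6f && std::fabs(eye.y) < 1e-6f && std::fabs(eye.z) < 1e-6f);
}
```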

After the scene has been rendered for each eye, we hand the resulting textures to the Oculus runtime for post-processing.

This is done as follows:

```cpp
EyeTexture* pEyeTexture[2] = { nullptr, nullptr };
// ...
// Draw into eye textures, then call Commit() on each of them
// ...

// Initialize our single full screen Fov layer.
ovrLayerEyeFov ld = {};
ld.Header.Type = ovrLayerType_EyeFov;
ld.Header.Flags = 0;
ld.SensorSampleTime = sensorSampleTime;

for (int eye = 0; eye < 2; ++eye)
{
    ld.ColorTexture[eye] = pEyeTexture[eye]->TextureChain;
    ld.Viewport[eye] = eyeRenderViewport[eye]; // the part of the eye texture that was rendered into
    ld.Fov[eye] = hmdDesc.DefaultEyeFov[eye];
    ld.RenderPose[eye] = EyeRenderPose[eye];
}

ovrLayerHeader* layers = &ld.Header;
ovr_SubmitFrame(session, frameIndex, nullptr, &layers, 1);
```

After that, the finished image appears in the headset. To show in the application what the person wearing the headset sees, you can copy the contents of the mirror texture created earlier into the back buffer of the application window:

```cpp
ID3D11Texture2D* tex = nullptr;
ovr_GetMirrorTextureBufferDX(session, mirrorTexture, IID_PPV_ARGS(&tex));
pDXContext->CopyResource(backBufferTexture, tex);
tex->Release(); // release the reference obtained above
```

That's all. Many good examples can be found in the Oculus SDK.

Source: https://habr.com/ru/post/332806/
