How to make the complicated simple: the story behind "Project1917"

At the end of June 2016, the team behind "Project 1917", dedicated to the centenary of the October Revolution in Russia, asked us to help build the web part of their project. The idea was a social network where Nicholas II would post his photos, Lenin would like them and Trotsky would comment. We were not the first team they had approached: others had told them it was impossible in such a short time, or that it would be very expensive.

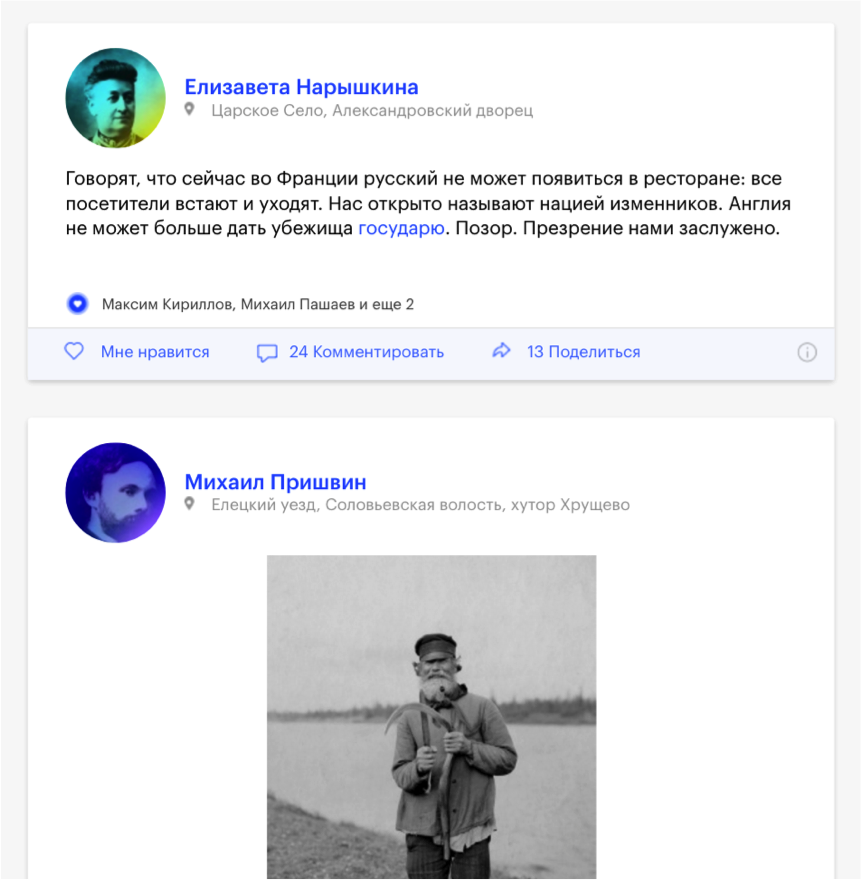

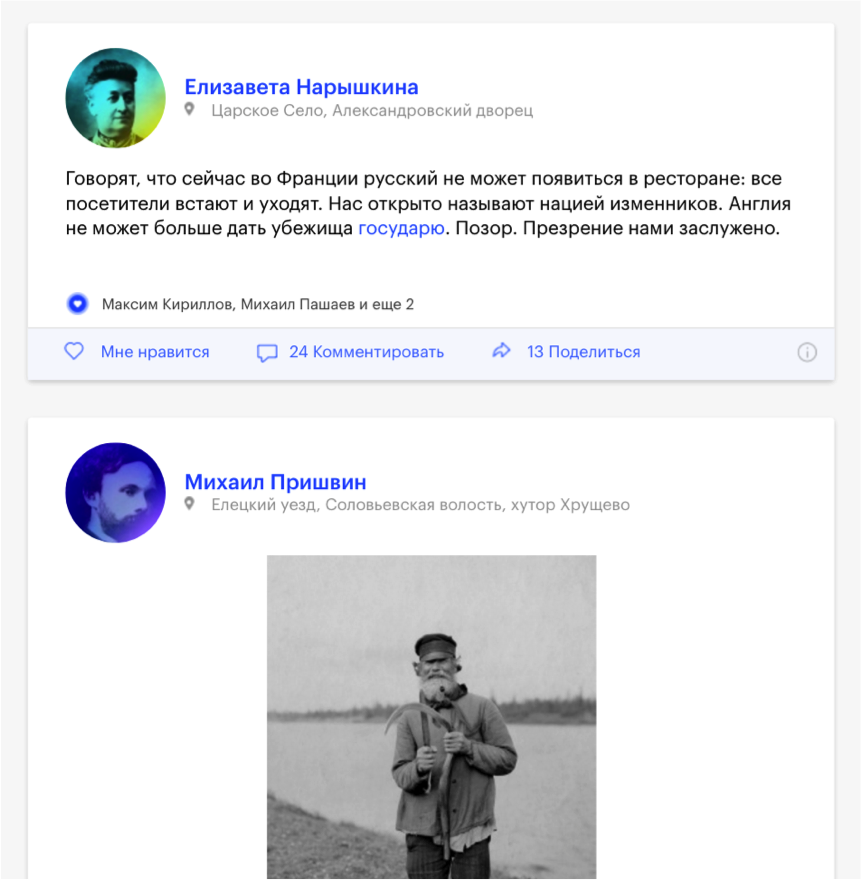

The creators of the project call it the best social network in history, because all of its users died long ago. It is a website in the format of a social network whose users are real historical figures writing about events that happened exactly one hundred years earlier. All materials are based on real historical documents, letters and journalism, along with photo and video archives. Present-day readers can comment on these posts, like them and share them on social networks.


Having studied the task, we concluded that it was entirely feasible and that we could deliver within the agreed time frame. Although our main specialty is administering and supporting high-load projects, we started out in web development and still take on development work from time to time (who knows better than we do how site infrastructure should be designed for high traffic?).

The key point: our deadline was fixed to the minute, 15:00 on November 14. At that moment press releases would go out to the media, Yandex would feature the project on its own resources, and a man with a megaphone would officially announce the project on Red Square. The chance of moving the deadline by even an hour was zero.

While coordinating the work with the client, we set a clear schedule. There was a lot of material, and the site had to be filled with content well before release, not an hour before it. In early August we would roll out the admin panel and start the layout. In September we would refine the admin panel and start binding data. In October we would implement interaction with present-day users (authorization, shares, likes, comments). Load testing was scheduled for November 7 (a fitting date). November 14 was launch day, and a week after launch we would add push notifications to bring users back to the site. In December the site was to be translated into English.

We form a team and get to work

On July 3 we signed the contract and the work plan and made the first commit to the repository.

In a complex project with a hard deadline, it is very important that the team does not look like one Vasya doing the work with ten managers standing over him.

Our first principle was "no rocket science", even though the deadlines were tight. We were not building a rocket to fly to Mars and rescue a man who had run out of potatoes. We were just making a social network.

If a certain sticker appeared in one of the work chats while someone was solving a problem, it meant: "Guys, something is wrong here. Break the task into smaller subtasks, solve them separately and don't complicate your life."

The team consisted of a project manager, three programmers, two administrators and two testers. At some point we decided to split the team across two locations. Some of the guys moved from Irkutsk to Moscow so that, across the two time zones, the working day would be longer. Our main office is in Irkutsk, and development initially took place there.

Which stack did we choose? New technologies appear every day, and it is very easy to get lost among them and pull in something unnecessary just because it is fashionable. Don't do that. Remember the old Russian saying: "The fancier the jeep, the farther you have to walk to fetch a tractor." It applies to programming too.

How did we build the stack? For the web server we set up Nginx with PHP-FPM. We chose MySQL 5.7 for the database and Laravel, our everyday framework, for the backend. For the frontend we chose Angular 1.5.

We chose Angular 1.5 because of one of our main principles: use only what we know well, or what we can quickly find answers about. The first more or less stable release of Angular 2 came out two weeks after we started development, and by then we were in no position to rewrite everything. We had worked a lot with versions 1.4 and 1.5 and knew all their pitfalls, so we decided to stick with what we knew how to use.

We used ImageMagick for graphics, FFmpeg for video and GIFs, Memcached (of course), and Gulp with our own custom tasks to build and process static assets.

We build the admin panel and do the layout

A month later we delivered the admin panel. How is the admin part built, and how does content get onto the site? On the backend we based it on L5-repository, a Laravel package. With literally three commands it lets you implement a RESTful API, generate all the models, create migrations with complex relationships out of the box and generate a full controller for working with the data. The admin frontend was built as a single-page application that relies heavily on the ngResource service to talk to the API. On the frontend we also implemented our own JS cache, so that admin data, once downloaded, was not requested from the server again but handled on the client. The entire admin panel is built from Angular components.
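The home-grown JS cache boils down to memoizing API responses by resource key. The sketch below is written in Python for illustration rather than the project's JavaScript. The class and method names are hypothetical, and `fetch` stands in for whatever API call the admin actually makes.

```python
from typing import Any, Callable, Dict


class ResourceCache:
    """Client-side cache: each resource is downloaded once, then served locally.

    `fetch` is a hypothetical stand-in for an API call (in the real admin,
    an ngResource request); it only runs on a cache miss.
    """

    def __init__(self, fetch: Callable[[str], Any]) -> None:
        self._fetch = fetch
        self._store: Dict[str, Any] = {}

    def get(self, key: str) -> Any:
        if key not in self._store:
            self._store[key] = self._fetch(key)  # first request goes to the server
        return self._store[key]

    def put(self, key: str, value: Any) -> None:
        # After a successful save, update the local copy instead of re-fetching.
        self._store[key] = value

    def invalidate(self, key: str) -> None:
        # Drop a stale entry so the next get() asks the server again.
        self._store.pop(key, None)


requests_made = []
cache = ResourceCache(lambda key: requests_made.append(key) or {"url": key})
cache.get("/api/heroes")
cache.get("/api/heroes")
print(len(requests_made))  # 1
```

The `put` method reflects one assumption: after saving, the editor's own copy is already current, so re-downloading it would be wasted traffic.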

It was very important to discuss with the client how they wanted to organize the editorial team's work. The ideal case for an admin panel is five people working on the same section at once, with one updating the title and another the text. The client planned for the editors to work in sequence: some would be responsible for the text of a post, others for the order of posts. We built the components so that they do not overlap and each updates only the data it works with. On average a component took about an hour to develop, and a fundamentally new admin section could be rolled out in a day.
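"Each component updates only the data it works with" amounts to a partial update restricted to the fields a component owns. That way, two editors saving different parts of the same post cannot overwrite each other. Here is a minimal Python sketch under that assumption. The function name and the idea of an explicit `owned_fields` set are illustrative, not taken from the project.

```python
from typing import Any, Dict, Iterable


def partial_update(record: Dict[str, Any], changes: Dict[str, Any],
                   owned_fields: Iterable[str]) -> Dict[str, Any]:
    """Apply only the fields one admin component is responsible for.

    `owned_fields` is a hypothetical declaration, e.g. the text editor
    owns {"text"} and the ordering widget owns {"position"}.
    """
    owned = set(owned_fields)
    unexpected = set(changes) - owned
    if unexpected:
        # A component must never send fields that belong to another one.
        raise ValueError(f"component does not own: {sorted(unexpected)}")
    updated = dict(record)  # leave the caller's record untouched
    updated.update(changes)
    return updated


post = {"id": 1, "title": "Old", "text": "Draft", "position": 3}
# The text component and the ordering component save independently.
post = partial_update(post, {"text": "Final"}, owned_fields={"text"})
post = partial_update(post, {"position": 1}, owned_fields={"position"})
print(post)  # {'id': 1, 'title': 'Old', 'text': 'Final', 'position': 1}
```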

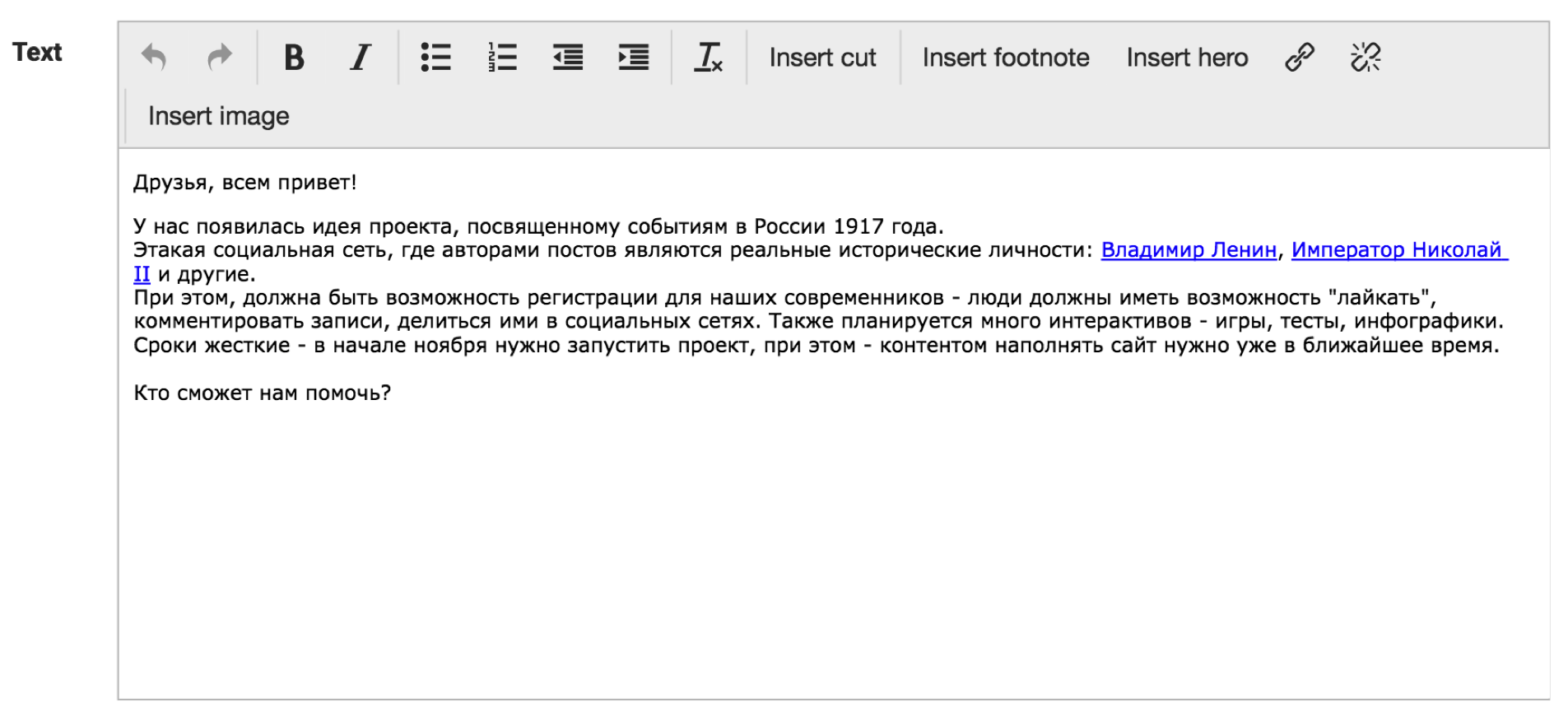

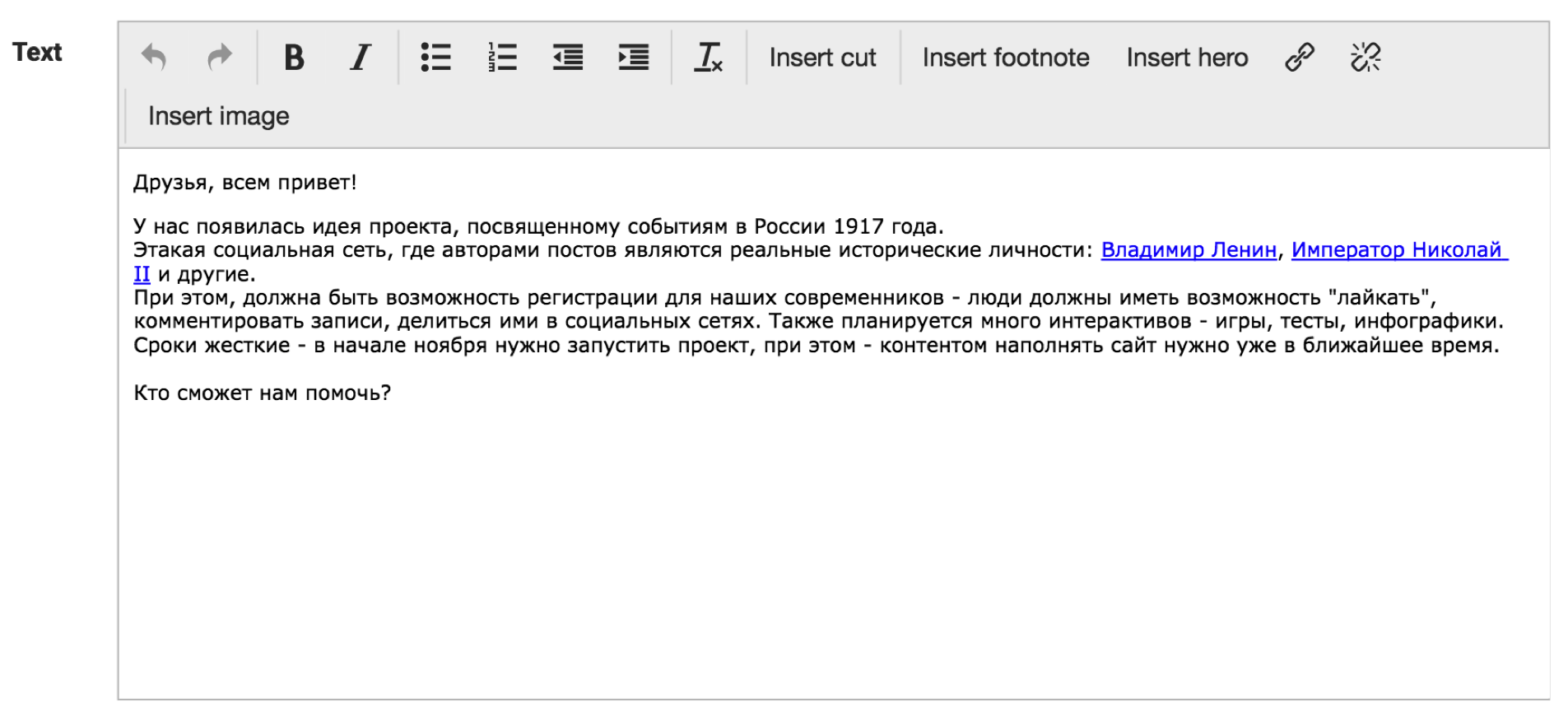

Our simplest component is the text editor: ordinary TinyMCE, slightly modified. It has "insert link" and "insert hero" buttons. Right in the text you can see that a link to Vladimir Lenin will be inserted, which shows a nice popup with his photo, and so on.

The editorial team was very happy when they first saw our admin panel: it was very convenient and so simple that you could teach a dog to use it.

We finish the admin panel and bind data

With the admin panel done, we moved on to the public part of the project, the frontend. The mockups reached us somewhat late, and the tasks changed on the fly every day. This was the first alarm bell: we might not make it in time. We decided to do the layout straight away and adapt it to live data.

How did we implement the frontend? For search, filtering and sorting we used L5-repository, the same package as in the admin panel, so all data is accessed through repositories. On the PHP side, we split some blocks of the site into separate services connected as singletons. Each one had to be built so that it could be pulled out of one part of the site, inserted elsewhere, and still work.

For example, the calendar is a basic component. The "header" component depends directly on it: a beautiful picture with popular posts that can be shared.

Next comes the feed of posts, which "communicates" with the calendar to find out which day to render.

The recommendations block and the subscription block depend on the feed. Blocks such as weather, currency rates and birthdays depend on the calendar. Almost as on real Facebook, you see the whole picture of the day at once.
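The calendar-driven blocks follow what is essentially the observer pattern: one component owns the selected day, and dependent blocks re-render when it changes. This is a Python sketch of that idea. The project implemented it with PHP services and Angular, so the class names here are hypothetical.

```python
from datetime import date
from typing import Callable, List, Optional


class Calendar:
    """Base component: owns the selected day and notifies dependent blocks."""

    def __init__(self, day: date) -> None:
        self.day = day
        self._listeners: List[Callable[[date], None]] = []

    def subscribe(self, listener: Callable[[date], None]) -> None:
        self._listeners.append(listener)
        listener(self.day)  # render right away for the currently selected day

    def select(self, day: date) -> None:
        self.day = day
        for listener in self._listeners:
            listener(day)  # every dependent block re-renders for the new day


class Block:
    """A hypothetical dependent block (feed, weather, birthdays, ...)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.rendered_for: Optional[date] = None

    def render(self, day: date) -> None:
        # A real block would load and draw that day's data here.
        self.rendered_for = day


calendar = Calendar(date(1917, 11, 7))
feed, weather = Block("feed"), Block("weather")
calendar.subscribe(feed.render)
calendar.subscribe(weather.render)
calendar.select(date(1917, 11, 8))
print(feed.rendered_for, weather.rendered_for)  # 1917-11-08 1917-11-08
```

Because blocks only know about the calendar's callback, any of them can be moved to another page without changes, which matches the portability goal described above.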

On the backend (the server), the load could be predicted: we specified a target RPS figure in the technical specification. What we could not predict was which devices people would use to view the site. So we decided to make the public frontend as simple as possible.

First, all static content is served straight from the cache, while dynamic, user-specific information comes from an uncached API. All dynamic loading works through HTML. The server renders the feed, and when the user scrolls, the next page arrives as HTML rather than as JSON data. Where a page still needs JavaScript, we optimize or minimize our use of AngularJS and rely heavily on the Bindonce library for one-time bindings. All images are optimized in Nginx with the http_image_filter module, and during peak loads we offload static content to a CDN.

We build likes, shares and comments

They were implemented in a week. First, what is a like or a comment? From the database's point of view, it is an ordinary table implementing a many-to-many relationship: a post id and a user id. We took a different approach for comments. Instead of treating them as a mere relationship ("a post has comments"), we made a comment a separate entity with one post and one author. Meanwhile, searching Google for "how to implement likes" suggests a polymorphic relationship (in Laravel, morphToMany/morphedByMany). There, the table stores not just the ids of the two objects it links but like_type and like_id: if you want likes on both posts and videos, you are told to add those two columns. We decided this was unnecessary and kept a single, simple likes table.
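Here is what a single, non-polymorphic likes table could look like. This sketch uses SQLite through Python's `sqlite3` so it is self-contained; the project itself ran MySQL. The table and column names are assumptions. The composite primary key lets the database itself enforce one like per user per post.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE posts (id INTEGER PRIMARY KEY);
CREATE TABLE users (id INTEGER PRIMARY KEY);
-- Plain many-to-many pivot: no like_type/like_id columns,
-- because only posts can be liked.
CREATE TABLE likes (
    post_id INTEGER NOT NULL REFERENCES posts(id),
    user_id INTEGER NOT NULL REFERENCES users(id),
    PRIMARY KEY (post_id, user_id)  -- at most one like per user per post
);
""")


def toggle_like(conn: sqlite3.Connection, post_id: int, user_id: int) -> bool:
    """Like the post if not yet liked, otherwise unlike it; return the new state."""
    cur = conn.execute(
        "DELETE FROM likes WHERE post_id = ? AND user_id = ?", (post_id, user_id))
    if cur.rowcount:  # a row was deleted, so the post was liked before
        return False
    conn.execute("INSERT INTO likes (post_id, user_id) VALUES (?, ?)",
                 (post_id, user_id))
    return True


def like_count(conn: sqlite3.Connection, post_id: int) -> int:
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM likes WHERE post_id = ?", (post_id,)).fetchone()
    return count


conn.execute("INSERT INTO posts (id) VALUES (1)")
conn.executemany("INSERT INTO users (id) VALUES (?)", [(10,), (11,)])
toggle_like(conn, 1, 10)
toggle_like(conn, 1, 11)
toggle_like(conn, 1, 10)  # user 10 takes the like back
print(like_count(conn, 1))  # 1
```

Comments, by contrast, would get their own table with their own id, post_id and author_id, since they are a full entity rather than a link.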

Next, we made heavy use of Eloquent accessor and mutator methods, for example to get all of a post's share counts (VK, OK and Facebook) in a single line. On the frontend we use localStorage, which falls back to cookies if the browser does not support it. There we store, for instance, the fact that the user liked a post, so we don't have to query the database for that every time we load a list of posts. Because this is already in localStorage, the heart is filled in as soon as the page renders, without an extra database request.
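An accessor of this kind exposes several stored columns as one computed attribute. This Python sketch uses a dataclass property in place of an Eloquent accessor. The per-network column names (`vk_shares`, `ok_shares`, `fb_shares`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Post:
    id: int
    # Hypothetical per-network counters, stored as separate columns.
    vk_shares: int = 0
    ok_shares: int = 0
    fb_shares: int = 0

    @property
    def shares(self) -> Dict[str, int]:
        """Accessor-style attribute: every share count plus the total at once."""
        counts = {"vk": self.vk_shares, "ok": self.ok_shares, "fb": self.fb_shares}
        counts["total"] = sum(counts.values())
        return counts


print(Post(1, vk_shares=5, ok_shares=2, fb_shares=3).shares)
# {'vk': 5, 'ok': 2, 'fb': 3, 'total': 10}
```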

We do not cache the user-facing API, because it must return things like comments in real time. You can't tell a user: "Sorry, we have a five-minute cache, your comment will appear in five minutes." If we ever need real-time communication with the server, we can safely use Laravel's broadcast events and channels, which already work with Node.js.

Now about sharing. Many people ask why we don't use a ready-made solution. The reason is that, besides the content, we have many special projects. In them, a person takes a test or uploads a photo, gets a generated postcard and posts it to a social network. If someone else sees that picture in a friend's feed and clicks it, they should land on the test page. So at the Nginx level we serve bots stub pages instead of redirecting them, while people are redirected to where they need to go.
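The project made this decision in Nginx. The Python sketch below only illustrates the logic. The crawler User-Agent substrings are an assumed, incomplete list, and the function and URLs are hypothetical. Crawlers get a stub page whose `og:image` is the generated postcard, and people get an HTTP redirect to the test.

```python
from dataclasses import dataclass
from html import escape

# Assumed crawler User-Agent substrings (lowercase); a real list needs upkeep.
BOT_MARKERS = ("facebookexternalhit", "vkshare", "twitterbot", "telegrambot")


@dataclass
class Response:
    status: int
    location: str = ""
    body: str = ""


def is_bot(user_agent: str) -> bool:
    ua = user_agent.lower()
    return any(marker in ua for marker in BOT_MARKERS)


def serve_share_link(user_agent: str, image_url: str, target_url: str) -> Response:
    """Crawlers get a stub with the postcard as og:image; people are redirected."""
    if is_bot(user_agent):
        body = (
            "<html><head>"
            f'<meta property="og:image" content="{escape(image_url)}">'
            "</head><body></body></html>"
        )
        return Response(status=200, body=body)
    return Response(status=302, location=target_url)


print(serve_share_link("facebookexternalhit/1.1", "https://example.com/card.png",
                       "https://example.com/test").status)  # 200
print(serve_share_link("Mozilla/5.0", "https://example.com/card.png",
                       "https://example.com/test").location)  # https://example.com/test
```

The stub deliberately has no `og:url` pointing at the test page: crawlers treat `og:url` as canonical and could replace the postcard preview with the test page's own.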

We also built our own counting mechanism into the sharing system. For any such system it is important to display up-to-date repost counts and to store them in the database for statistics, ratings and so on. Programmers implementing sharing for the first time very often count like this: a cron job makes the backend request fresh numbers from the social networks and save them. This approach has two serious drawbacks. First, the numbers may be stale at any given moment (for example, when the cron job updates 1,000 posts every 10 minutes). Second, social networks may ban you for frequent requests from a single IP address.

We went the other way. When a user shares a post to a social network, we send a request to that network's API from the JS code alongside the sharing popup, so the request comes from the client's IP address. We get the current number of reposts, add 1 (the user has not actually posted yet) and save the result to the database. This keeps the share counts in the database constantly up to date, and it even has a nice effect. You share, the number jumps by 10 because other people are sharing at the same moment, and you think: "Oh, cool, everything works in real time, just the way I like it!"
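On the server side, this scheme reduces to storing the client-reported count plus one. The Python sketch below uses a dict in place of the database table, and all names are hypothetical. The `max()` guard is our own addition, not something the original describes: it keeps a stale, lower report from overwriting a newer value.

```python
from typing import Dict, Tuple

# (post_id, network) -> stored share count; a dict stands in for the DB table.
ShareCounts = Dict[Tuple[int, str], int]


def record_share(counts: ShareCounts, post_id: int, network: str,
                 reported: int) -> int:
    """Store the count the browser fetched from the network's API, plus one.

    The +1 accounts for the share the user is about to publish. Taking max()
    with the stored value is an extra guard against stale reports.
    """
    if reported < 0:
        raise ValueError("reported count cannot be negative")
    key = (post_id, network)
    new_value = max(counts.get(key, 0), reported + 1)
    counts[key] = new_value
    return new_value


counts: ShareCounts = {}
print(record_share(counts, 1, "vk", 41))  # 42
print(record_share(counts, 1, "vk", 51))  # 52: others shared in the meantime
print(record_share(counts, 1, "vk", 30))  # 52: stale report ignored
```

Since the number comes from the client, a production endpoint would likely also need some sanity checks against inflated values.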

We develop push notifications and launch

As November 14 approached, nothing foreshadowed trouble. We had calmly passed load testing and fully prepared the site for release when, at 9 p.m. Moscow time, a message arrived: the client had changed their mind and wanted push notifications built right now. Peak traffic was expected on launch day itself, so the subscriber base had to be collected that day. People who develop push notifications often overlook the main problem: a mistake can cost you a new domain and the subscriber base you lose with the old one. Notification permission is granted per site, so the only way to ask everyone again is a new domain. Say you have been tinkering for a long time and finally got the browser to show the "Allow notifications" prompt. Most people never see that prompt again unless they reset notification permissions in their settings.

If your code at that moment only printed the token, you have not saved it; you have simply thrown it away.

How did we implement push? We based it on Google Firebase. Since little time was left, we followed the documentation to the letter. For visitors not logged in to the site, we kept it very simple: we generate a unique cookie for the person and save their token with it in the database. When the person later logs in, we see their cookie and the token stored with it, reassign the token to that user, and can then send them personal notifications.
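The cookie-then-login flow can be sketched as a small token store. The Python sketch below is in-memory; the schema and method names are hypothetical. The key point it illustrates is that the token is persisted the moment permission is granted, keyed by an anonymous cookie id, and later reassigned to the logged-in user.

```python
import secrets
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class TokenRecord:
    token: str
    cookie_id: str
    user_id: Optional[int] = None


@dataclass
class PushTokenStore:
    """In-memory stand-in for the push token table (hypothetical schema)."""
    records: Dict[str, TokenRecord] = field(default_factory=dict)

    @staticmethod
    def new_cookie_id() -> str:
        # Unique id set as a cookie for a visitor who is not logged in.
        return secrets.token_hex(16)

    def save_token(self, token: str, cookie_id: str) -> None:
        # Persist the token as soon as the browser grants permission.
        self.records[token] = TokenRecord(token=token, cookie_id=cookie_id)

    def attach_user(self, cookie_id: str, user_id: int) -> int:
        """On login, reassign every token saved under this cookie to the user."""
        moved = 0
        for record in self.records.values():
            if record.cookie_id == cookie_id:
                record.user_id = user_id
                moved += 1
        return moved

    def tokens_for_user(self, user_id: int) -> List[str]:
        return [r.token for r in self.records.values() if r.user_id == user_id]


store = PushTokenStore()
cookie = store.new_cookie_id()
store.save_token("fcm-token-abc", cookie)
store.attach_user(cookie, user_id=7)
print(store.tokens_for_user(7))  # ['fcm-token-abc']
```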

We chose the Laravel-FCM package for sending. Anyone who has worked with FCM (Firebase Cloud Messaging) knows that sending a message means splitting the tokens into batches of a thousand and making a separate request for each. Laravel-FCM takes care of that. It also makes it very easy to build the push payload with a personal picture, title, text, link and so on.
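The batching that Laravel-FCM handles boils down to slicing the token list into chunks of at most 1,000 and sending one request per chunk. This sketch shows only the chunking, in Python. The actual send call is left out rather than guessed at.

```python
from typing import Iterator, List, Sequence

FCM_BATCH_SIZE = 1000  # per-request token limit described above


def batches(tokens: Sequence[str], size: int = FCM_BATCH_SIZE) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` tokens, one per request."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(tokens), size):
        yield list(tokens[start:start + size])


tokens = [f"token-{i}" for i in range(2500)]
print([len(batch) for batch in batches(tokens)])  # [1000, 1000, 500]
```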

We made it: push notifications were rolled out an hour before release. The next evening The Village, Meduza, CNN and the BBC wrote about the project, and we were invited to a media banquet at the Tretyakov Gallery. A small irony: until December 2016, our company's head office was officially located in the middle of a forest. Imagine: you built a project that CNN wrote about, and you did it in the forest.

We do localization

So the project had launched, it was working, the bugs were fixed, December had arrived, and it was time for localization. We localized the project in two days.

Here is how we stored the two language versions in the database. For each entity that can be translated (post, hero, group, video, geolocation), we added two fields. The lang field stores the language (Russian or English). The mirror_id field stores the id of the "twin" record created when the entity is copied.

When an object is copied, all its related entities (for example, a post's author and geolocation) are copied too. If a related entity has already been copied (its mirror_id is not empty), the new copy is simply linked to that existing twin. If it has not, it is copied first and then linked.

For example, suppose the Russian version has two posts written by Nicholas II. Copying the first post copies both the post and its author. Copying the second copies only the post, and the already-copied Nicholas II becomes its author.
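The mirror_id copying rule translates naturally into a recursive function that copies an entity once and reuses its twin afterwards. Below is a Python sketch with an in-memory dict as the "database". The `Entity` shape and the `relations` mapping are assumptions. Pointing the twin's mirror_id back at the original is also our choice, not something the source states.

```python
from dataclasses import dataclass, field, replace
from itertools import count
from typing import Dict, Optional

_ids = count(1)


@dataclass
class Entity:
    kind: str                      # "post", "hero", "geolocation", ...
    title: str                     # copied as-is, translated later by editors
    lang: str = "ru"
    mirror_id: Optional[int] = None
    relations: Dict[str, int] = field(default_factory=dict)  # name -> entity id
    id: int = field(default_factory=lambda: next(_ids))


def copy_to_lang(db: Dict[int, Entity], entity_id: int, lang: str) -> int:
    """Copy an entity into `lang`, reusing related entities already copied."""
    original = db[entity_id]
    if original.mirror_id is not None:
        return original.mirror_id  # already has a twin: just link to it
    twin = replace(original, lang=lang, mirror_id=original.id, relations={},
                   id=next(_ids))
    db[twin.id] = twin
    original.mirror_id = twin.id   # set before recursing, so shared children copy once
    for name, related_id in original.relations.items():
        twin.relations[name] = copy_to_lang(db, related_id, lang)
    return twin.id


db: Dict[int, Entity] = {}
nicholas = Entity("hero", "Nicholas II")
db[nicholas.id] = nicholas
p1 = Entity("post", "Diary, day 1", relations={"author": nicholas.id})
p2 = Entity("post", "Diary, day 2", relations={"author": nicholas.id})
db[p1.id], db[p2.id] = p1, p2

en1 = copy_to_lang(db, p1.id, "en")  # copies the post and Nicholas II
en2 = copy_to_lang(db, p2.id, "en")  # copies only the post
print(db[en1].relations["author"] == db[en2].relations["author"])  # True
print(len(db))  # 6
```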

What's next?

How does the project live now? The main functionality is done and hardly changes. We build special projects (games, tests), and, as usual, everyone wants to rewrite everything. We channel that energy into peaceful purposes: if something in a special project turned out less than perfect, we don't rewrite it but do it better in the next special project.

Among the special projects we built:


- the game "Don't let Lenin into Russia", a version of the well-known logic game about catching a cat, except that you had to catch Lenin;

- "Tinder1917", a dating service with the heroes of the revolution;

- the test "Who are you in 1917?", the most popular one: people came in droves, and about one and a half million results were generated.

Findings

- Turning something complicated into something simple is easy; turning something simple into something complicated is even easier.

- Remember history: revolutions do not always lead to good results.

- Learn from your mistakes. Until you take down a server yourself, you won't understand why it went down. Until you make some complicated mistake yourself, you won't understand why something broke. A domain lost to push notifications is not the worst thing that can happen.

- Don't chase fashion. Keep track of your stack and don't let it turn into a zoo. If you need to solve a new problem, first check whether you already have a suitable tool. Don't grab a fashionable new framework whose documentation exists only in Chinese just because "oh, it's trendy, let's try it". Don't do that.

- And 99.99% uptime is not the limit: you can always improve it.

Source: https://habr.com/ru/post/332786/
