Meanwhile, Proxmox VE has been updated to version 5.0.

The news was not announced loudly, but the team that has been building Proxmox VE for years released a new version of their creation, 5.0, two days ago. Naturally, we are interested in the changes: do they justify a new major version? In my opinion, they do, and the details, as usual, are below the cut.

(For those unfamiliar with Proxmox VE, here is a brief description: “Proxmox Virtual Environment (Proxmox VE) is an open source virtualization system based on Debian GNU/Linux. It uses KVM and LXC as hypervisors. Virtual machines and the server itself are managed through the web interface or through the standard Linux command line interface.”)

The official list of changes is pretty short:


- The build is based on the Debian 9.0 “Stretch” package base (the previous version was based on Debian 8.0 “Jessie”);

- Linux kernel 4.10 is used;

- QEMU 2.9 is used;

- LXC updated to 2.0.8;

- Asynchronous storage replication between cluster nodes has been implemented. The feature works with ZFS and is currently labeled a “technology preview”;

- Updated templates for LXC containers based on Debian, Ubuntu, CentOS, Fedora, OpenSUSE, Arch Linux, Gentoo and Alpine;

- New, significantly improved noVNC remote console;

- Packages for the Ceph 12.1.0 Luminous distributed storage system, built by Proxmox staff, are included (also marked as a “technology preview”), with support for the new BlueStore backend;

- Support for live migration with local storage;

- Improved web interface: better filtering and bulk operations, display of USB and host PCI addresses;

- Improved ISO installation image;

- Added import of virtual machines from other hypervisors, including VMware and Hyper-V;

- Documentation improvements, plus numerous fixes for bugs found in older releases.

I will describe a couple of items in a little more detail; several other changes are clearly shown in the video from the Proxmox VE authors, which is given at the end of the post.

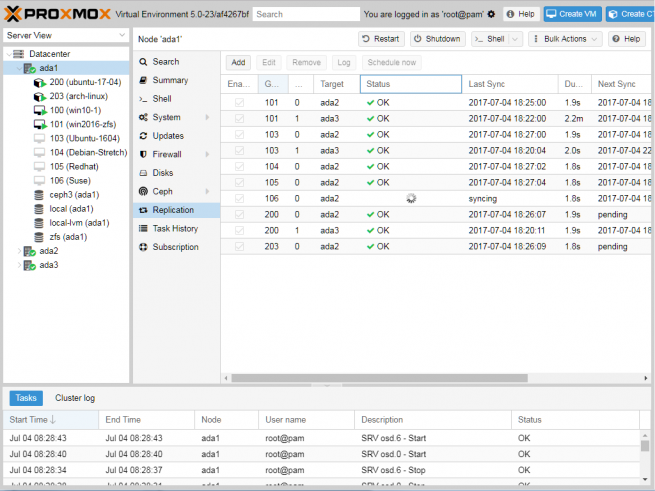

Storage replication

This is asynchronous replication: at set intervals, a snapshot of the storage state is sent to another storage. This reduces network traffic and lowers the hardware requirements.

You can specify what is replicated and how often, and keep a “fairly fresh” copy of the data from the main storage on another storage. It all depends on the task, but in many cases this approach is a great option for quick recovery. Once again, this feature works only between ZFS storages.
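To make the idea of snapshot-based asynchronous replication more concrete, here is a minimal conceptual sketch in Python. It is not how Proxmox VE or ZFS implement the feature; the `Storage` class and the `delta` and `replicate` functions are hypothetical names invented for illustration. The sketch only shows why sending the difference between two snapshots reduces traffic, and why the copy is “fairly fresh” rather than identical between runs.

```python
from dataclasses import dataclass, field


@dataclass
class Storage:
    """Toy block store (hypothetical model): block number -> contents."""
    blocks: dict = field(default_factory=dict)

    def snapshot(self) -> dict:
        # A snapshot is a frozen copy of the state at this moment.
        return dict(self.blocks)


def delta(old: dict, new: dict):
    """Return (changed-or-new blocks, deleted block numbers) between two snapshots."""
    changed = {k: v for k, v in new.items() if k not in old or old[k] != v}
    deleted = set(old) - set(new)
    return changed, deleted


def replicate(source: Storage, target: Storage, last_sent: dict):
    """One replication run: snapshot the source and ship only the difference.

    Returns the new snapshot (to be remembered for the next run) and the
    number of blocks that had to be transferred.
    """
    snap = source.snapshot()
    changed, deleted = delta(last_sent, snap)
    target.blocks.update(changed)
    for block_no in deleted:
        target.blocks.pop(block_no, None)
    return snap, len(changed) + len(deleted)


primary, replica = Storage(), Storage()
primary.blocks.update({0: "a", 1: "b", 2: "c"})

# First run: nothing was sent before, so every block is transferred.
last, sent = replicate(primary, replica, {})
print("first run, blocks sent:", sent)

# A write between runs exists only on the primary until the next run.
primary.blocks[1] = "B"
print("replica is stale:", replica.blocks != primary.blocks)

# Second run: only the changed block is transferred.
last, sent = replicate(primary, replica, last)
print("second run, blocks sent:", sent)
```

The interval between runs determines how much recent data could be missing from the copy after a failure, which is the trade-off against the lower traffic and hardware requirements.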

Importing VMs from other hypervisors

This kind of import is not needed every day, but when you do need it, it takes quite a lot of time to get it working; it seems the process will now go more smoothly. Of course, an import also brings the problem of booting the guest on a new hypervisor (different guest OSes react differently to, say, a changed set of virtual hardware), but the feature itself is useful. Imports are expected to work from the most popular formats (for example, vmdk), and most hypervisors can now export machines to the VMware format. After that, we use the new function of the qm utility (called qm importdisk), and then fight with the OS inside the virtual machine.
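As a rough illustration of the import step, the sketch below assembles a `qm importdisk` command line in Python. It assumes the form `qm importdisk <vmid> <source> <storage>` with an optional `--format` option, so check `qm help importdisk` on your own node before relying on it. The helper name `build_importdisk_cmd`, the VM ID, the disk path and the storage name are all made up for the example, and the command is only printed, not executed.

```python
import shlex
from typing import List, Optional


def build_importdisk_cmd(vmid: int, source: str, storage: str,
                         fmt: Optional[str] = None) -> List[str]:
    """Build the argument list for `qm importdisk` (hypothetical helper).

    vmid    -- ID of an already created, target VM
    source  -- path to the exported disk image, e.g. a .vmdk file
    storage -- name of the Proxmox VE storage to import into
    fmt     -- optional target format, passed as --format (assumed option)
    """
    if vmid <= 0:
        raise ValueError("vmid must be a positive integer")
    cmd = ["qm", "importdisk", str(vmid), source, storage]
    if fmt is not None:
        cmd += ["--format", fmt]
    return cmd


# Example values are placeholders, not taken from the article.
cmd = build_importdisk_cmd(100, "/mnt/export/disk0.vmdk", "local-lvm")
print(shlex.join(cmd))

# On a real Proxmox VE node the list could be passed to
# subprocess.run(cmd, check=True); it is deliberately not run here.
```

After the import, the disk still has to be attached to the VM and the guest may need driver or boot configuration changes, which is the part the article refers to as fighting with the OS.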

Source: https://habr.com/ru/post/332542/
