The emotion detection and recognition systems market: emotions and "emotional computing"

Recognition technologies no longer surprise anyone. Emotion recognition and “emotional computing” belong to a large body of science that also includes such fundamental areas as pattern recognition and visual information processing. With this post we open our blog on Habr with a brief review of the solutions on the emotion recognition market: which companies work in this segment and what exactly they do.

/ Flickr / Britt Selvitelle / CC

Emotion Recognition Systems (EDRS)

The market for emotion detection and recognition systems (EDRS) is developing actively. According to a number of experts, it will grow at an average annual rate of 27.4% and reach $29.1 billion by 2022. Such figures are understandable: emotion recognition software can already determine a user's state at any given moment using a webcam or specialized equipment, while simultaneously analyzing behavioral patterns, physiological parameters and changes in mood.

Systems that read, transmit and recognize emotional data can be grouped by the channel they use to detect reactions: physiological indicators, facial expressions, body language and movements, and voice [we will cover the last two in more detail in future posts].

Physiology is often used as a source of information about human emotions in clinical studies. For example, this way of detecting emotions is built into biofeedback, in which the patient sees on a computer monitor the current values of their physiological parameters as defined by the clinical protocol: electrocardiogram, heart rate, electrodermal activity (EDA), etc.
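
Biofeedback displays values like these as they change. As a minimal sketch (the function names are hypothetical and not taken from any clinical protocol), mean heart rate and RMSSD, a standard short-term heart rate variability measure, can be computed from the intervals between successive R peaks of the cardiogram:

```python
import math

def heart_rate_bpm(rr_intervals_s):
    """Mean heart rate in beats per minute from R-R intervals given in seconds."""
    if not rr_intervals_s:
        raise ValueError("need at least one R-R interval")
    mean_rr = sum(rr_intervals_s) / len(rr_intervals_s)
    return 60.0 / mean_rr

def rmssd_ms(rr_intervals_s):
    """Root mean square of successive R-R differences, in milliseconds."""
    diffs = [(b - a) * 1000.0 for a, b in zip(rr_intervals_s, rr_intervals_s[1:])]
    if not diffs:
        raise ValueError("need at least two R-R intervals")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))

rr = [0.80, 0.82, 0.78, 0.81, 0.79]  # seconds between consecutive R peaks
print(round(heart_rate_bpm(rr), 1))  # 75.0
print(round(rmssd_ms(rr), 1))        # 28.7
```

A real biofeedback loop would recompute these over a sliding window and redraw the display on each new beat.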

Similar techniques are used in other areas. For example, detecting emotions from physiological data is a key function of the NeuroSky MindWave Mobile, a headset with a built-in brain activity sensor. It records a person's level of concentration, relaxation or anxiety on a scale from 1 to 100. MindWave Mobile adapts the EEG recording method used in scientific research, except that it has only one electrode, whereas laboratory setups have more than ten.
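
NeuroSky's own scoring algorithm is not described here. To illustrate how a single EEG channel can be turned into a 1 to 100 score, the sketch below uses an assumed heuristic of our own: the share of alpha-band (8 to 13 Hz) power in combined alpha and beta (13 to 30 Hz) power, estimated with a plain discrete Fourier transform. The function names and band limits are hypothetical choices, not the device's method.

```python
import cmath
import math

def band_power(signal, fs, f_lo, f_hi):
    """Sum of squared DFT magnitudes for frequency bins in [f_lo, f_hi)."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    power = 0.0
    for k in range(1, n // 2):
        freq = k * fs / n
        if f_lo <= freq < f_hi:
            coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            power += abs(coeff) ** 2
    return power

def relaxation_index(signal, fs):
    """Hypothetical 1-100 score: alpha share of alpha+beta power."""
    alpha = band_power(signal, fs, 8.0, 13.0)
    beta = band_power(signal, fs, 13.0, 30.0)
    total = alpha + beta
    if total == 0:
        return 50  # no usable signal: return an arbitrary midpoint
    return max(1, min(100, round(1 + 99 * alpha / total)))

fs = 128
t = [i / fs for i in range(fs)]  # one second of samples
relaxed = [math.sin(2 * math.pi * 10 * s) + 0.2 * math.sin(2 * math.pi * 20 * s) for s in t]
alert = [0.2 * math.sin(2 * math.pi * 10 * s) + math.sin(2 * math.pi * 20 * s) for s in t]
print(relaxation_index(relaxed, fs), relaxation_index(alert, fs))
```

The synthetic "relaxed" trace is dominated by a 10 Hz rhythm and scores high; the "alert" trace is dominated by 20 Hz and scores low.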

An example of detecting emotional reactions from facial expressions is FaceReader by the Dutch company Noldus Information Technology. The program interprets facial expressions and assigns them to seven main categories: joy, sadness, anger, surprise, fear, disgust and neutral. In addition, FaceReader can estimate a person's age and sex from their face with fairly high accuracy.

The program is built on computer vision. In particular, it uses the Active Template method, which fits a deformable template to the face image, and the Active Appearance Model method, which builds a synthetic face model from landmark points while taking surface details into account. According to the developers, classification is performed by neural networks trained on a set of 10,000 photos.

Large corporations are also active in this area. For example, Microsoft is developing Project Oxford, a set of ready-made REST APIs that implement computer vision algorithms (and more). The software can recognize emotions in photographs, such as anger, contempt, disgust, fear, happiness, sadness and surprise, and can also report that a face shows no visibly expressed emotion.
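
A REST service of this kind returns per-face emotion scores that the client then interprets. The sketch below only parses a hard-coded sample; it does not call any service. The field names (`faceRectangle`, `scores`) are modeled on how such responses were commonly shown, but treat them as an assumption rather than the API's documented contract.

```python
import json

# Hypothetical response body: one entry per detected face, with per-emotion scores.
SAMPLE_RESPONSE = """
[
  {"faceRectangle": {"left": 68, "top": 97, "width": 64, "height": 97},
   "scores": {"anger": 0.01, "contempt": 0.00, "disgust": 0.01, "fear": 0.00,
              "happiness": 0.90, "neutral": 0.05, "sadness": 0.01, "surprise": 0.02}},
  {"faceRectangle": {"left": 300, "top": 80, "width": 60, "height": 90},
   "scores": {"anger": 0.02, "contempt": 0.03, "disgust": 0.01, "fear": 0.01,
              "happiness": 0.04, "neutral": 0.85, "sadness": 0.02, "surprise": 0.02}}
]
"""

def dominant_emotions(body):
    """Return the highest-scoring label for each face in the response body."""
    faces = json.loads(body)
    return [max(face["scores"], key=face["scores"].get) for face in faces]

for label in dominant_emotions(SAMPLE_RESPONSE):
    # A dominant 'neutral' score is reported as the absence of visible emotion.
    print("no visibly expressed emotion" if label == "neutral" else label)
```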

Russian companies are also working on emotion recognition systems. For example, the EmoDetect EDR system is on the market. It determines a person's psycho-emotional state from a sample of images (or video). Its classifier detects the six basic emotions already mentioned above: joy, surprise, sadness, anger, fear and disgust.

Recognition is based on 20 informative local facial features (ASM) that characterize a person's psycho-emotional state. The system also computes action units and classifies them using Paul Ekman's Facial Action Coding System (FACS Action Units). In addition, it plots how the intensity of the subject's emotions changes over time and generates reports on the results of video processing.
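
To show how FACS action units can map to basic emotions, here is a simplified sketch using prototype AU combinations often quoted from Ekman and Friesen's EMFACS tables (for example, happiness as AU6 + AU12). It is not EmoDetect's classifier: real systems weigh AU intensities and many variants per emotion, and the 0.75 coverage threshold is a hypothetical choice.

```python
# Simplified prototype Action Unit sets per basic emotion (intensities ignored).
PROTOTYPES = {
    "happiness": {6, 12},
    "sadness": {1, 4, 15},
    "surprise": {1, 2, 5, 26},
    "fear": {1, 2, 4, 5, 7, 20, 26},
    "anger": {4, 5, 7, 23},
    "disgust": {9, 15, 16},
}

def classify_aus(active_aus, min_coverage=0.75):
    """Return (emotion, coverage) for the prototype best covered by the detected AUs.

    Coverage is the fraction of a prototype's AUs present in `active_aus`;
    below `min_coverage` the emotion is reported as None.
    """
    detected = set(active_aus)
    best, best_cov = None, 0.0
    for emotion, proto in PROTOTYPES.items():
        coverage = len(proto & detected) / len(proto)
        if coverage > best_cov:
            best, best_cov = emotion, coverage
    return (best, best_cov) if best_cov >= min_coverage else (None, best_cov)

print(classify_aus({6, 12, 25}))   # cheek raiser + lip corner puller
print(classify_aus([1, 2, 5, 26]))  # brows raised, upper lid raised, jaw drop
```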

Within this post it is also impossible to ignore tracking emotions through eye movements, whose main parameters are fixations and saccades. The most common method of recording them is video-oculography (or eye tracking, the more familiar term borrowed from English), which records eye movement at a high sampling rate. Video-oculography has its own hardware: eye trackers, used in many kinds of experimental studies.
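
Separating fixations from saccades is the first processing step for such recordings. A minimal sketch of the classic velocity-threshold algorithm (I-VT) follows; gaze positions are assumed to be in degrees of visual angle, and the 30 deg/s threshold is an assumed, commonly used value rather than a property of any particular tracker. Each sample is labeled by its velocity from the previous sample.

```python
import math

def ivt_labels(samples, hz, threshold_deg_s=30.0):
    """Label each (x, y) gaze sample 'fixation' or 'saccade' by point-to-point velocity."""
    if len(samples) < 2:
        raise ValueError("need at least two gaze samples")
    labels = []
    for (x0, y0), (x1, y1) in zip(samples, samples[1:]):
        velocity = math.hypot(x1 - x0, y1 - y0) * hz  # degrees per second
        labels.append("saccade" if velocity > threshold_deg_s else "fixation")
    return labels[:1] + labels  # the first sample reuses the first transition's label

def _summarize(run, hz):
    xs, ys = zip(*run)
    return (sum(xs) / len(xs), sum(ys) / len(ys), len(run) / hz)

def fixation_events(samples, hz, threshold_deg_s=30.0):
    """Group consecutive fixation samples into (mean_x, mean_y, duration_s) events."""
    events, run = [], []
    for point, label in zip(samples, ivt_labels(samples, hz, threshold_deg_s)):
        if label == "fixation":
            run.append(point)
        elif run:
            events.append(_summarize(run, hz))
            run = []
    if run:
        events.append(_summarize(run, hz))
    return events

# 100 Hz recording: a fixation at (0, 0), a saccade, then a fixation at (10, 0).
gaze = [(0.0, 0.0)] * 10 + [(3.0, 0.0), (7.0, 0.0)] + [(10.0, 0.0)] * 10
print(fixation_events(gaze, hz=100))
```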

For example, Neurodata Lab, together with the Ocutri development team, created a prototype of Eye Catcher 0.1, software that extracts eye and head movement data from video recorded with an ordinary camera. This technology opens up new horizons in studying human eye movements in natural conditions and significantly expands research capabilities. Eye trackers are also produced by major international companies such as SR Research (EyeLink), Tobii and SMI (recently acquired by Apple), as well as by GazeTracker, Eyezag, Sticky and others; this last group also relies on an ordinary webcam as its main tool.

Today video-oculography is used in science, in the gaming industry and in online marketing (neuromarketing). When a customer shops online, the placement of the product information that drives conversion is crucial, and the position of banners and other visual advertising must be considered carefully.

For example, Google uses eye tracking to study the layout of its search results pages in order to offer advertisers the most effective placements. Oculography provides a sound analysis method that gives web designers significant practical help and helps ensure that information above the fold is better perceived by users.
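
In practice, such page-layout studies often summarize fixations per area of interest (AOI), such as a banner or a price block, and per region of the screen. The sketch below assumes fixations already come as (x, y, duration) in page pixels; the AOI names, coordinates and fold position are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class AOI:
    """A rectangular area of interest in page coordinates (pixels)."""
    name: str
    left: float
    top: float
    width: float
    height: float

    def contains(self, x, y):
        return self.left <= x < self.left + self.width and self.top <= y < self.top + self.height

def dwell_times(fixations, aois):
    """Total fixation duration in seconds per AOI; fixations are (x, y, duration_s)."""
    totals = {aoi.name: 0.0 for aoi in aois}
    for x, y, duration in fixations:
        for aoi in aois:
            if aoi.contains(x, y):
                totals[aoi.name] += duration
    return totals

def above_fold_share(fixations, fold_y):
    """Fraction of total fixation time spent above the fold line."""
    total = sum(d for _, _, d in fixations)
    above = sum(d for _, y, d in fixations if y < fold_y)
    return above / total if total else 0.0

aois = [AOI("banner", 0, 0, 800, 100), AOI("price", 600, 200, 200, 50)]
fixations = [(100, 50, 0.3), (650, 220, 0.5), (400, 900, 0.2)]
print(dwell_times(fixations, aois))
print(above_fold_share(fixations, fold_y=768))
```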

Affective computing: "emotional computing"

A key direction in the development of new information technologies is improving human-computer interaction (HCI). The emergence of EDR systems gave rise to emotional computing, known in established English terminology as affective computing. Affective computing is a branch of HCI in which a device can detect and respond appropriately to the user's feelings and emotions, as determined from facial expressions, posture, gestures, speech characteristics and even body temperature. In this context, solutions that rely on subcutaneous blood flow (as the Canadian startup NuraLogix does) are particularly interesting.
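
The post does not describe how NuraLogix reads blood flow. A related, openly published technique is remote photoplethysmography (rPPG): blood volume changes under the skin slightly modulate skin color, most visibly in the green channel, so the pulse can be estimated from ordinary video. The sketch below assumes the per-frame mean green value of a skin region has already been extracted and picks the strongest frequency in an assumed 0.7 to 4 Hz (42 to 240 bpm) band.

```python
import cmath
import math

def dominant_pulse_bpm(green_means, fps, lo_hz=0.7, hi_hz=4.0):
    """Estimate pulse in beats per minute from per-frame mean green intensity."""
    n = len(green_means)
    mean = sum(green_means) / n
    x = [g - mean for g in green_means]  # remove the constant skin brightness
    best_k, best_mag = None, -1.0
    for k in range(1, n // 2):
        freq = k * fps / n
        if lo_hz <= freq <= hi_hz:
            mag = abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            if mag > best_mag:
                best_k, best_mag = k, mag
    if best_k is None:
        raise ValueError("clip too short for the requested band")
    return best_k * fps / n * 60.0

# Synthetic 10 s clip at 30 fps: 1.2 Hz pulse plus slow 0.2 Hz lighting drift.
fps = 30
frames = [120 + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps)
          + 2.0 * math.sin(2 * math.pi * 0.2 * t / fps) for t in range(10 * fps)]
print(dominant_pulse_bpm(frames, fps))
```

Frequency resolution is fps / n, so a 10-second clip resolves the pulse only to about 6 bpm; real pipelines use longer windows, detrending and band-pass filtering.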

The amount of research and funding suggests that this direction is extremely promising. According to marketsandmarkets.com, the affective computing market will grow from $12.2 billion in 2016 to $54 billion by 2021, a compound annual growth rate of 34.7%, although, as before, the lion's share will go to the leading players (Apple, IBM, Google, Facebook, Microsoft, etc.).
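
The quoted figures are internally consistent, which a short compound-growth calculation confirms. The helper names are ours, for illustration only.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

def project(start_value, rate, years):
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years

rate = cagr(12.2, 54.0, 2021 - 2016)  # billions of dollars, 2016 -> 2021
print(f"implied CAGR: {rate:.2%}")
print(f"12.2 at 34.7% for 5 years: {project(12.2, 0.347, 5):.1f}")
```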

Emotional computing has been recognized as an independent research niche, and public interest in it has grown, since the late 1990s, when Rosalind Picard published her book with the telling title “Affective Computing” (1997). The monograph launched dedicated research at MIT, and scientists from other countries later joined in.

Information processing in our brain is emotionally colored, and we often make decisions simply on an emotional impulse. That is why, in her book, Picard proposed building machines that would be directly connected to human emotions and even able to influence them.

The most widely discussed and used approach to building affective computing applications is a cognitive model of emotions. Such a system generates emotional states and their corresponding expressions from a set of principles [that give rise to emotions] rather than from a fixed set of signal-emotion pairs. It is often combined with emotion recognition technology, which focuses on the signs and signals that appear on the face, body, skin, etc. The image below shows several emotions classified through the facial expression channel:

Emotions: anger, fear, disgust, surprise, happiness and sadness (source)

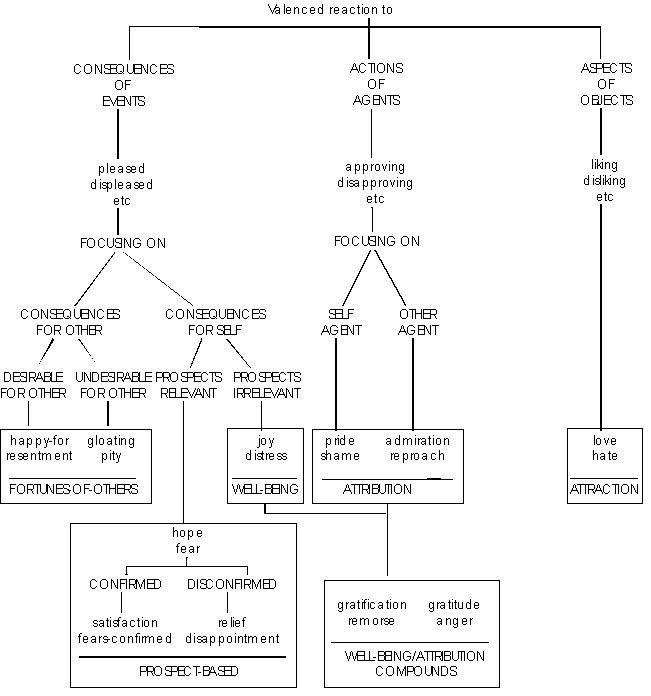

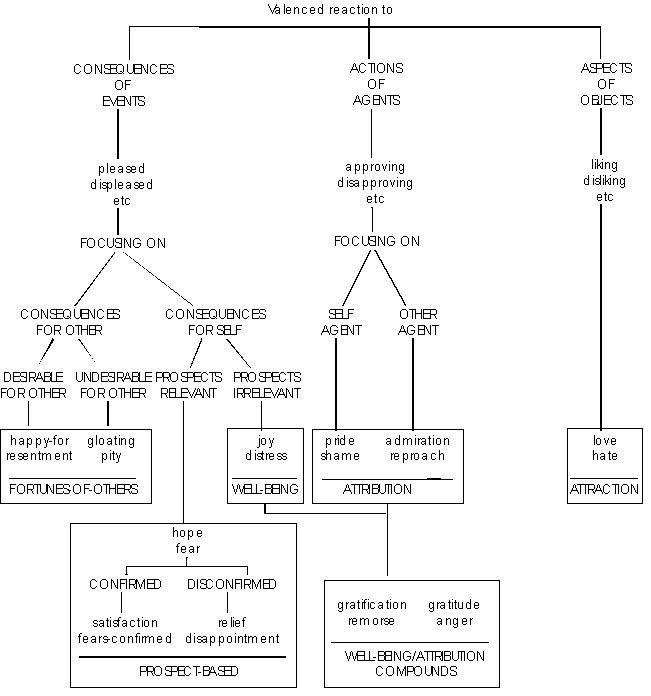

Emotions are well-defined processes. The task of affective computing is therefore to make interaction with the user resemble everyday human communication: the machine must adapt to the user's emotional state and influence it. This approach even makes use of rules formulated by the American researchers Ortony, Clore and Collins (the OCC model).

A rule from the OCC model (source)

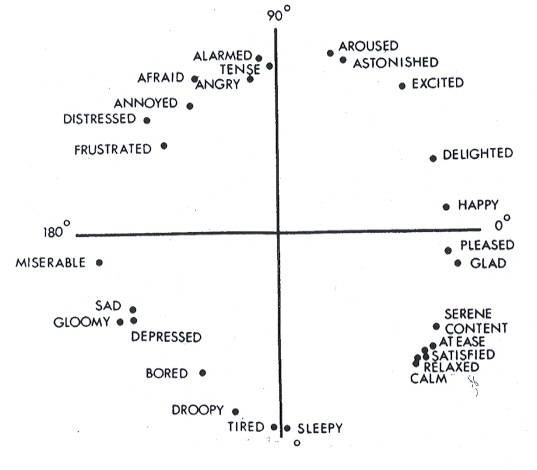

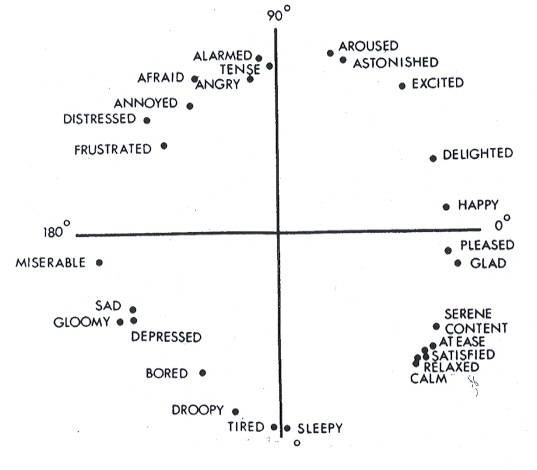
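
To make "emotions generated from principles rather than signal-emotion pairs" concrete, here is a sketch of a small subset of OCC-style appraisal rules: event consequences for the self yield joy or distress (hope or fear when the event is only a prospect), actions of agents yield pride, shame, admiration or reproach, and aspects of objects yield liking or disliking. The `Appraisal` fields, value ranges and threshold are hypothetical; the full OCC model has many more emotion types and intensity variables.

```python
from dataclasses import dataclass

@dataclass
class Appraisal:
    """Hypothetical appraisal of a situation along a few OCC variables (-1..1)."""
    desirability: float = 0.0      # consequences of an event for the self
    is_prospect: bool = False      # the event is anticipated, not yet confirmed
    praiseworthiness: float = 0.0  # an agent's action judged against standards
    agent_is_self: bool = True     # whether the acting agent is the self
    appealingness: float = 0.0     # an aspect of an object judged against tastes

def occ_emotions(a: Appraisal, threshold: float = 0.1):
    """Return the emotion types triggered by a simplified subset of OCC rules."""
    emotions = []
    if abs(a.desirability) > threshold:
        if a.is_prospect:
            emotions.append("hope" if a.desirability > 0 else "fear")
        else:
            emotions.append("joy" if a.desirability > 0 else "distress")
    if abs(a.praiseworthiness) > threshold:
        if a.agent_is_self:
            emotions.append("pride" if a.praiseworthiness > 0 else "shame")
        else:
            emotions.append("admiration" if a.praiseworthiness > 0 else "reproach")
    if abs(a.appealingness) > threshold:
        emotions.append("liking" if a.appealingness > 0 else "disliking")
    return emotions

# A student expects to fail an exam: an undesirable prospect.
print(occ_emotions(Appraisal(desirability=-0.8, is_prospect=True)))
```

The same rules produce different emotions for different appraisals, which is the difference from a fixed lookup table of signals.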

Relevant examples of “emotional computing” systems include the work of Rosalind Picard and her colleagues. To make student learning more effective, the researchers proposed an original emotional model based on Russell's circumplex model. Their ultimate goal was an electronic companion that tracks the student's emotional state and determines whether the student needs help while learning new material.

Russell's circumplex model (source)
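
Russell's circumplex arranges affect on two axes, valence (pleasant to unpleasant) and arousal (activated to deactivated), so an emotional state becomes a point whose angle indicates the type of emotion and whose distance from the center indicates its intensity. A minimal sketch, assuming both coordinates are normalized to [-1, 1]; the quadrant labels are coarse illustrative names.

```python
import math

def circumplex(valence, arousal):
    """Map a (valence, arousal) point to (angle_deg, intensity, quadrant label)."""
    angle = math.degrees(math.atan2(arousal, valence)) % 360.0
    intensity = min(1.0, math.hypot(valence, arousal))
    if valence >= 0 and arousal >= 0:
        quadrant = "excited / happy"
    elif valence < 0 and arousal >= 0:
        quadrant = "tense / angry"
    elif valence < 0:
        quadrant = "sad / depressed"
    else:
        quadrant = "calm / relaxed"
    return angle, intensity, quadrant

# A tutoring companion might offer help when a student drifts into the lower-left quadrant.
print(circumplex(-0.5, -0.5))
```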

An interesting application of the software developed by Picard's group at MIT is teaching autistic children to identify their own emotions and the emotional states of people around them. This line of work also gave rise to Empatica, which offers consumers (including people with epilepsy) Embrace wearable wristbands that record galvanic skin response (GSR) and allow real-time monitoring of sleep quality, stress levels and physical activity.
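
Empatica's signal processing is not described in the post. As a generic illustration of what a GSR recording yields, the sketch below detects skin conductance responses (SCRs) as trough-to-peak rises. The 0.05 µS minimum amplitude is an assumed value of a commonly used order of magnitude, and real signals would first need low-pass filtering.

```python
def detect_scrs(eda_us, fs, min_amplitude_us=0.05):
    """Find skin conductance responses as trough-to-peak rises above a threshold.

    eda_us: skin conductance samples in microsiemens; fs: sampling rate in Hz.
    Returns a list of (onset_time_s, peak_time_s, amplitude_us).
    """
    responses = []
    trough = 0
    for i in range(1, len(eda_us) - 1):
        if eda_us[i] <= eda_us[i - 1] and eda_us[i] < eda_us[i + 1]:
            trough = i  # local minimum: candidate onset of the next response
        elif eda_us[i] >= eda_us[i - 1] and eda_us[i] > eda_us[i + 1]:
            amplitude = eda_us[i] - eda_us[trough]
            if amplitude >= min_amplitude_us:
                responses.append((trough / fs, i / fs, amplitude))
    return responses

# 4 Hz recording: one clear response and one ripple below the threshold.
eda = [2.0, 2.0, 2.0, 2.1, 2.3, 2.4, 2.35, 2.3, 2.3, 2.31, 2.32, 2.31, 2.3]
print(detect_scrs(eda, fs=4))
```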

Another company, co-founded by Rosalind Picard and her MIT colleague Rana el Kaliouby, is Affectiva. The company released its SDK for the Unity platform, opening it to third-party developers for experimenting, testing and building all sorts of small projects. Its assets currently include the world's largest database of analyzed faces, more than five million, as well as pioneering experience in a number of industries where emotion recognition technology had hardly been considered before. The analysis, however, is still limited to six basic emotions and a single channel (facial microexpressions).

Many laboratories and startups work in this vein today. For example, Sentio Solutions is developing Feel, a wristband that tracks, recognizes and collects data about a person's emotions throughout the day, while a companion mobile app offers recommendations intended to build emotionally positive habits. Sensors in the wristband monitor several physiological signals, such as pulse, galvanic skin response and skin temperature, and the system's algorithms translate these biological signals into the “language” of emotions.
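
How Feel performs this translation is not public in the post. One simple, commonly used first step is to compare each signal with the wearer's own resting baseline. The sketch below, which is entirely hypothetical in its names and weights, combines baseline z-scores into a single arousal index; skin temperature gets a negative weight on the assumption that peripheral temperature tends to drop under acute stress.

```python
from statistics import mean, stdev

# Hypothetical weights: higher pulse and skin conductance raise the index,
# while a drop in peripheral skin temperature also raises it.
DEFAULT_WEIGHTS = {"pulse_bpm": 1.0, "gsr_us": 1.0, "skin_temp_c": -1.0}

def zscore(value, baseline):
    """Standard score of `value` relative to a list of resting measurements."""
    sd = stdev(baseline)
    return 0.0 if sd == 0 else (value - mean(baseline)) / sd

def arousal_index(sample, baselines, weights=DEFAULT_WEIGHTS):
    """Weighted mean of per-signal z-scores against a personal baseline."""
    names = [n for n in sample if weights.get(n, 0.0) != 0.0]
    if not names:
        raise ValueError("no weighted signals in sample")
    norm = sum(abs(weights[n]) for n in names)
    return sum(weights[n] * zscore(sample[n], baselines[n]) for n in names) / norm

baselines = {
    "pulse_bpm": [70, 72, 68, 70, 70],
    "gsr_us": [2.0, 2.2, 1.8, 2.0, 2.0],
    "skin_temp_c": [33.0, 33.2, 32.8, 33.0, 33.0],
}
calm = {"pulse_bpm": 70, "gsr_us": 2.0, "skin_temp_c": 33.0}
stressed = {"pulse_bpm": 80, "gsr_us": 2.5, "skin_temp_c": 32.5}
print(arousal_index(calm, baselines), arousal_index(stressed, baselines))
```

Mapping such an index to named emotions would still require a model of valence, which physiological signals alone capture poorly.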

Emteq is also worth mentioning. Its Faceteq platform can not only track a driver's condition [fatigue] but is also used for medical purposes: a dedicated application helps people with facial paralysis. Work is also underway to bring the solution to virtual reality, so that a VR headset can transfer the user's emotional responses to their avatar.
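
Faceteq's fatigue detection method is not described here. For comparison, a widely used camera-based drowsiness measure is PERCLOS, the proportion of time the eyelids are at least 80% closed. A minimal sketch, assuming per-frame eye openness is already normalized so that 1.0 is the subject's fully open eye; the 0.15 alarm level is a hypothetical value.

```python
def perclos(eye_openness, closed_below=0.2):
    """Fraction of frames in which the eye is at least 80% closed."""
    if not eye_openness:
        raise ValueError("no frames")
    closed = sum(1 for o in eye_openness if o <= closed_below)
    return closed / len(eye_openness)

def is_drowsy(eye_openness, limit=0.15):
    """Flag drowsiness when PERCLOS over the window reaches `limit`."""
    return perclos(eye_openness) >= limit

alert_window = [1.0] * 95 + [0.1] * 5     # brief blinks only
drowsy_window = [1.0] * 70 + [0.1] * 30   # long or frequent closures
print(perclos(alert_window), is_drowsy(alert_window))
print(perclos(drowsy_window), is_drowsy(drowsy_window))
```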

Future

As of 2017, the strategic goal of research in “emotional computing” and EDRS is to move beyond the narrow framework of single- or dual-channel (mono- or bimodal) logic. This would bring the field closer to genuinely multimodal technologies and methods for recognizing emotional states, as opposed to merely declared ones (such as a mechanical combination of microexpression analysis with wearable biosensors or eye tracking).
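
For reference, here is the simplest baseline that "mechanically combining" channels usually means: weighted late fusion, where each modality produces its own probability distribution and the results are averaged. Genuinely multimodal methods model interactions between channels instead. The modality names, weights and scores below are hypothetical.

```python
EMOTIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral")

def late_fusion(per_modality, weights):
    """Weighted average of per-modality probability distributions over EMOTIONS.

    Modalities absent from `per_modality` (e.g. no face in the frame) are skipped,
    and the remaining weights are renormalized.
    """
    available = [m for m in per_modality if weights.get(m, 0) > 0]
    if not available:
        raise ValueError("no usable modality")
    total = sum(weights[m] for m in available)
    fused = {e: sum(weights[m] * per_modality[m].get(e, 0.0) for m in available) / total
             for e in EMOTIONS}
    return max(fused, key=fused.get), fused

weights = {"face": 0.5, "voice": 0.3, "physio": 0.2}
scores = {
    "face": {"happiness": 0.6, "neutral": 0.4},   # a polite smile
    "voice": {"anger": 0.7, "neutral": 0.3},
    "physio": {"anger": 0.5, "fear": 0.5},
}
print(late_fusion(scores, weights)[0])
```

Here the face channel alone would report happiness, while the fused estimate leans to anger; late fusion cannot, however, reason about why the channels disagree.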

Neurodata Lab specializes in developing advanced technological solutions for emotion recognition and in introducing EDRS technologies into various sectors of the economy: mass-market projects, the Internet of Things (IoT), robotics, the entertainment industry, intelligent transport systems and digital medicine.

At the same time, the field is gradually shifting from analyzing static photos and images to the dynamics of the audio/video (A/V) stream and the communicative environment in its various forms. These tasks are non-trivial: solving them addresses many of the industry's key problems and requires in-depth research, vast amounts of data and a comprehensive interpretation of the body's verbal and non-verbal reactions.
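
One basic difference between photo and stream analysis is that per-frame predictions are noisy and must be integrated over time. A minimal sketch, using an exponential moving average as one simple choice among many (the `alpha` values are illustrative):

```python
def smooth_stream(frame_scores, alpha=0.3):
    """Exponential moving average of per-frame emotion scores.

    frame_scores: iterable of {emotion: score} dicts, one per frame.
    Yields a smoothed dict per frame; a higher alpha reacts faster to change.
    """
    state = None
    for scores in frame_scores:
        if state is None:
            state = dict(scores)
        else:
            keys = set(state) | set(scores)
            state = {k: alpha * scores.get(k, 0.0) + (1 - alpha) * state.get(k, 0.0)
                     for k in keys}
        yield dict(state)

# A one-frame "surprise" spike (e.g. a misdetection) is damped in the smoothed output.
frames = [{"surprise": 0.0}, {"surprise": 1.0}, {"surprise": 0.0}, {"surprise": 0.0}]
for smoothed in smooth_stream(frames):
    print(round(smoothed["surprise"], 3))
```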

People are not interested in communicating with unemotional agents that give no emotional feedback or response. Researchers are putting considerable effort into changing this, but they face certain difficulties. One of them is that computing systems have no neurons: inside them there are only algorithms, and this is an objective reality.

Researchers are trying to understand how various psychological phenomena arise in order to reproduce them in computing systems. For example, with funding from the US government agency DARPA (the SyNAPSE program), IBM developed TrueNorth, an architecture based on neurobiological principles. The processor has a non-classical architecture: it does not follow the von Neumann design but is inspired by models of how the neocortex works (which Kurzweil describes in detail in his writings).
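
Neuromorphic chips compute with spiking neurons rather than a fetch-execute loop. TrueNorth's neurons are digital and configurable; the sketch below is only the textbook continuous leaky integrate-and-fire (LIF) model, integrated with forward Euler, to illustrate event-driven computation. All parameter values are arbitrary choices for the example.

```python
def lif_spike_times(input_current, dt=1e-3, tau=0.02, v_rest=0.0,
                    v_threshold=1.0, v_reset=0.0, r=1.0):
    """Simulate a leaky integrate-and-fire neuron and return spike times in seconds.

    dV/dt = (-(V - v_rest) + r * I(t)) / tau; when V reaches v_threshold
    the neuron emits a spike and V is reset to v_reset.
    """
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        v += dt * (-(v - v_rest) + r * current) / tau  # leak toward rest, integrate input
        if v >= v_threshold:
            spikes.append(step * dt)
            v = v_reset
    return spikes

one_second = 1000  # steps of 1 ms
print(len(lif_spike_times([0.5] * one_second)))  # below threshold: the neuron stays silent
print(len(lif_spike_times([2.0] * one_second)))  # strong input: regular firing
```

The neuron only produces output (a spike) when its integrated input crosses the threshold, which is what lets such hardware stay idle most of the time.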

In the future [which may turn out to be closer than it seems at first glance], progress in such technologies will make it possible to design self-learning systems that do not need to be programmed. They will require completely different training techniques, and we cannot rule out that computing technology will then develop in an entirely different direction.

P.S. In upcoming posts we plan to cover speech recognition and face recognition, discuss how physiological features affect emotion recognition, and look at the tasks that modern neural systems solve. Subscribe to our blog so you don't miss them, friends!

Source: https://habr.com/ru/post/332466/
