Kubernetes Network Performance Comparison

Kubernetes requires that each container in a cluster has a unique, routable IP. Kubernetes does not assign IP addresses by itself, leaving this task to third-party solutions.

The purpose of this study is to find a solution with the lowest latency, the highest throughput, and the smallest tuning cost. Since our load depends on delays, we measure delays of high percentiles with a fairly active network load. In particular, we focused on performance in the region of 30-50 percent of the maximum load, since this best reflects typical situations for non-overloaded systems.

')

Options

Docker with --net=host

Our exemplary installation. All other options were compared with her.

The option

--net=host means that containers inherit the IP addresses of their host machines, i.e. there is no network containerization.Lack of network containerization a priori provides better performance than the presence of any implementation - for this reason we used this installation as a reference.

Flannel

Flannel is a virtual network solution supported by the CoreOS project. Well tested and ready for production, so the cost of implementation is minimal.

When you add a flannel machine to a cluster, the flannel does three things:

- Assigns a subnet to a new machine using etcd .

- Creates a virtual bridge interface on the machine (

docker0 bridge). - Configures the packet forwarding backend :

aws-vpc- registers a machine's subnet in the Amazon AWS instance table. The number of entries in this table is limited to 50, i.e. You cannot have more than 50 machines in a cluster if you are using a flannel withaws-vpc. In addition, this backend only works with Amazon AWS;host-gw- creates IP routes to subnets via the IP addresses of the remote machine. Requires direct L2 connectivity between hosts running the flannel;vxlan- creates a virtual interface VXLAN .

Because flannel uses the bridge interface to forward packets, each packet passes through two network stacks when sent from one container to another.

Ipvlan

IPvlan is a driver in the Linux kernel that allows you to create virtual interfaces with unique IP addresses without the need to use a bridge interface.

To assign an IP address to a container using IPvlan requires:

- Create a container with no network interface at all.

- Create an ipvlan interface in a standard network namespace.

- Move the interface to the container namespace.

IPvlan is a relatively new solution, so there are no tools to automate this process yet. Thus, IPvlan deploy on multiple machines and containers becomes more complicated, that is, the implementation cost is high. However, IPvlan does not require a bridge interface and forwards packets directly from the NIC to the virtual interface, so we expected better performance than the flannel.

Load test script

For each option we have done the following steps:

- Set up a network on two physical machines.

- We launched tcpkali in a container on the same machine, setting it up to send requests at a constant speed.

- Run nginx in a container on another machine, setting it up to respond with a fixed-size file.

- They removed the system metrics and tcpkali results.

We ran this test with different numbers of requests: from 50,000 to 450,000 requests per second (RPS).

For each request, nginx responded with a fixed-size static file: 350 bytes (contents of 100 bytes and headers of 250 bytes) or 4 kilobytes.

results

- IPvlan showed the lowest latency and best maximum throughput. Flannel with

host-gwandaws-vpcfollows it with similar metrics, withhost-gwbetter under maximum load. - Flannel with

vxlanshowed the worst results in all tests. However, we suspect that its exceptionally bad percentile of 99,999 was caused by a bug. - The results for the 4-kilobyte response are similar to the 350-byte case, but there are two noticeable differences:

- the maximum RPS is significantly lower, since 4 270 thousand RPS were required for 4-kilobyte responses to fully load the 10-gigabit NIC;

- IPvlan is much closer to

--net=hostwhen approaching a bandwidth limit.

Our current choice is flannel with

host-gw . It has few dependencies (in particular, it does not require AWS or a new version of the Linux kernel), it is easy to install compared to IPvlan and offers sufficient performance. IPvlan is our fallback. If at some point the flannel gets support for IPvlan, we will switch to that option.Despite the fact that the performance of

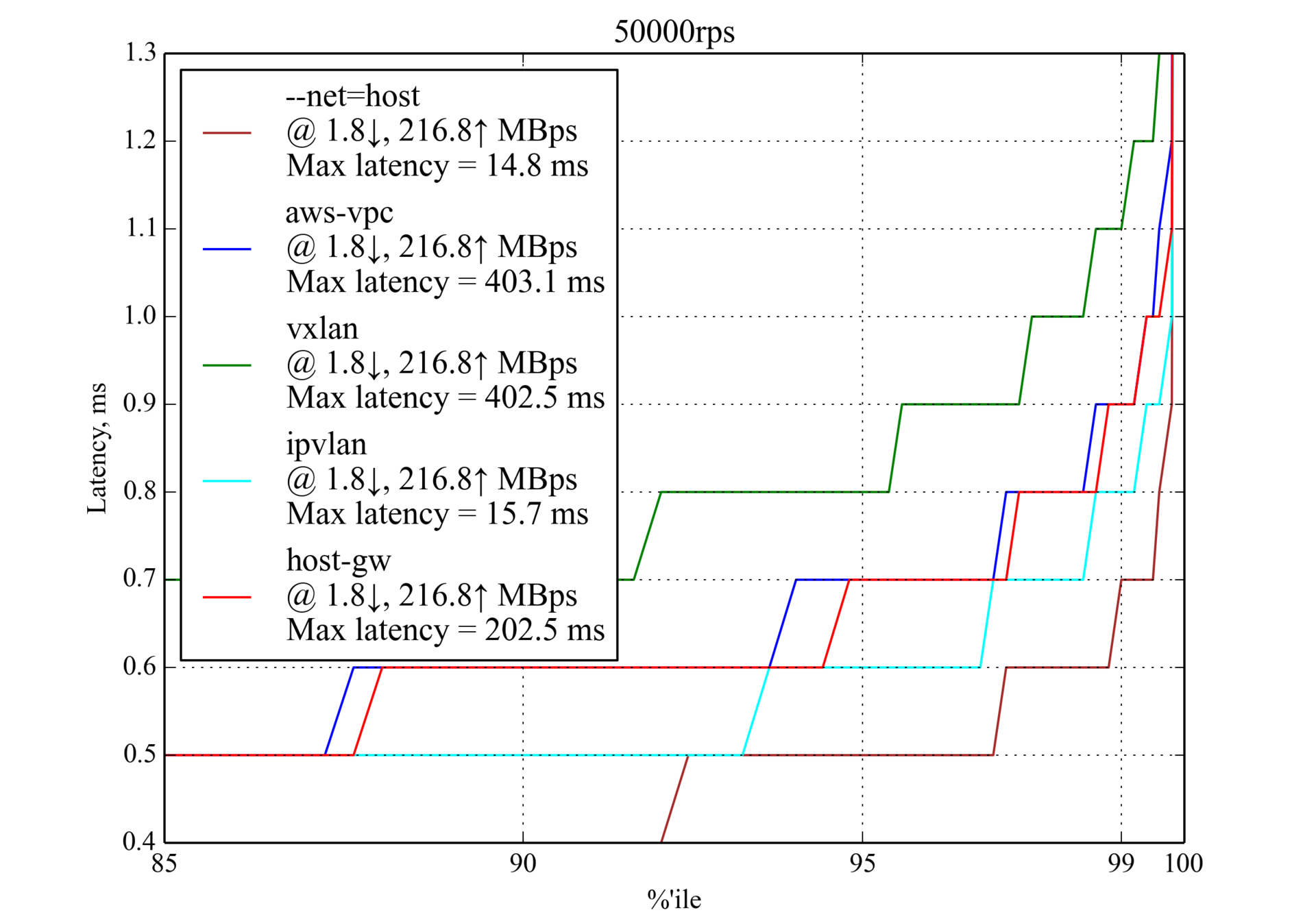

aws-vpc turned out to be a little better than host-gw , the limitation of 50 machines and the fact that it was tied to Amazon AWS became decisive factors for us.50,000 RPS, 350 bytes

At 50,000 requests per second, all candidates showed acceptable performance. You can already notice the main trend: IPvlan shows the best results,

host-gw and aws-vpc follow it, and vxlan - the worst.150,000 RPS, 350 bytes

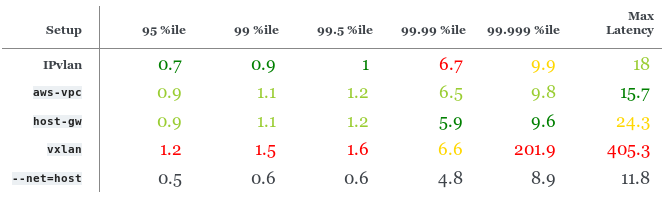

Percentages of delay for 150 000 RPS (≈30% of the maximum RPS), ms

IPvlan is slightly better than

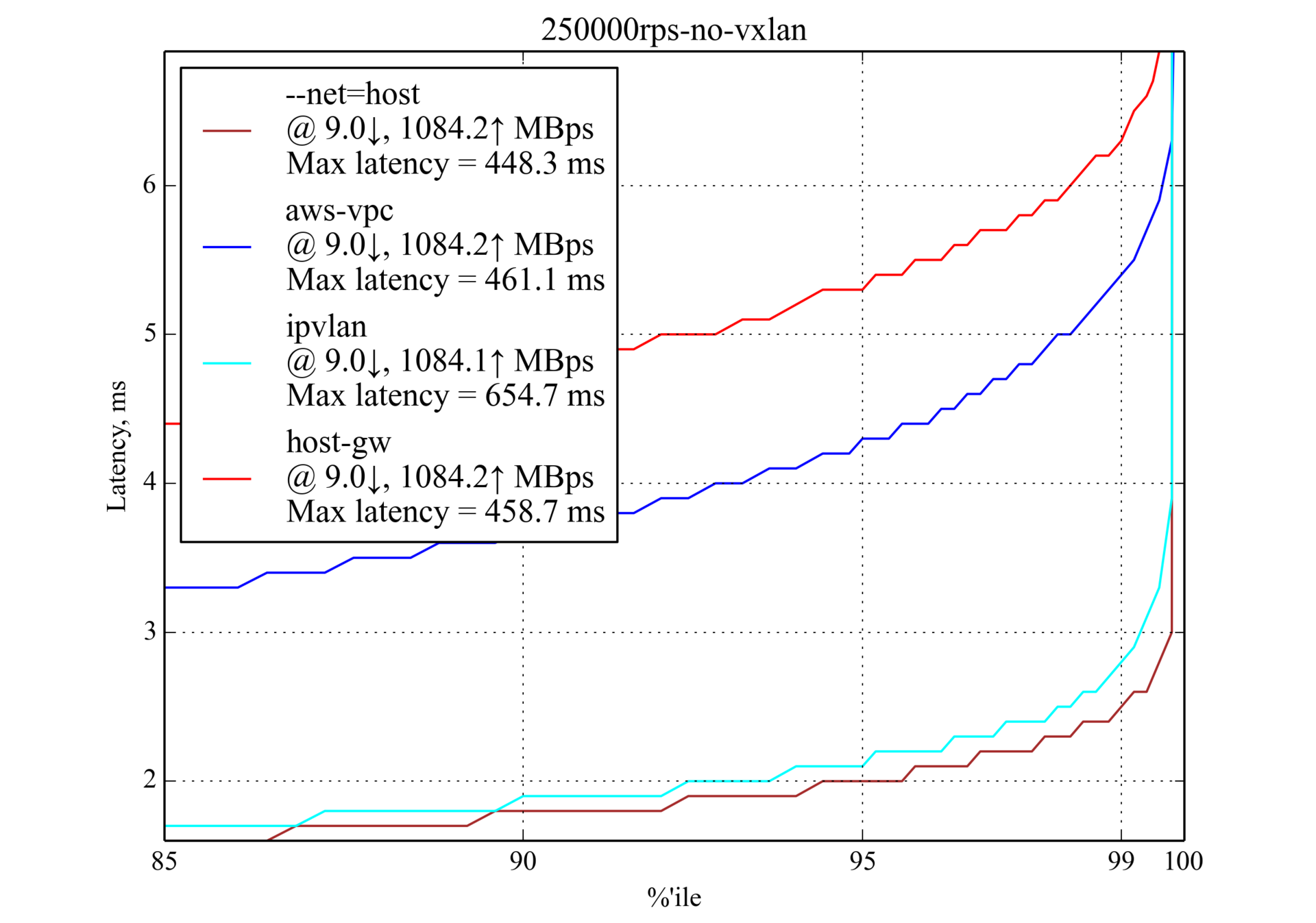

host-gw and aws-vpc , but it has the worst percentile of 99.99. host-gw slightly better performance than aws-vpc .250,000 RPS, 350 bytes

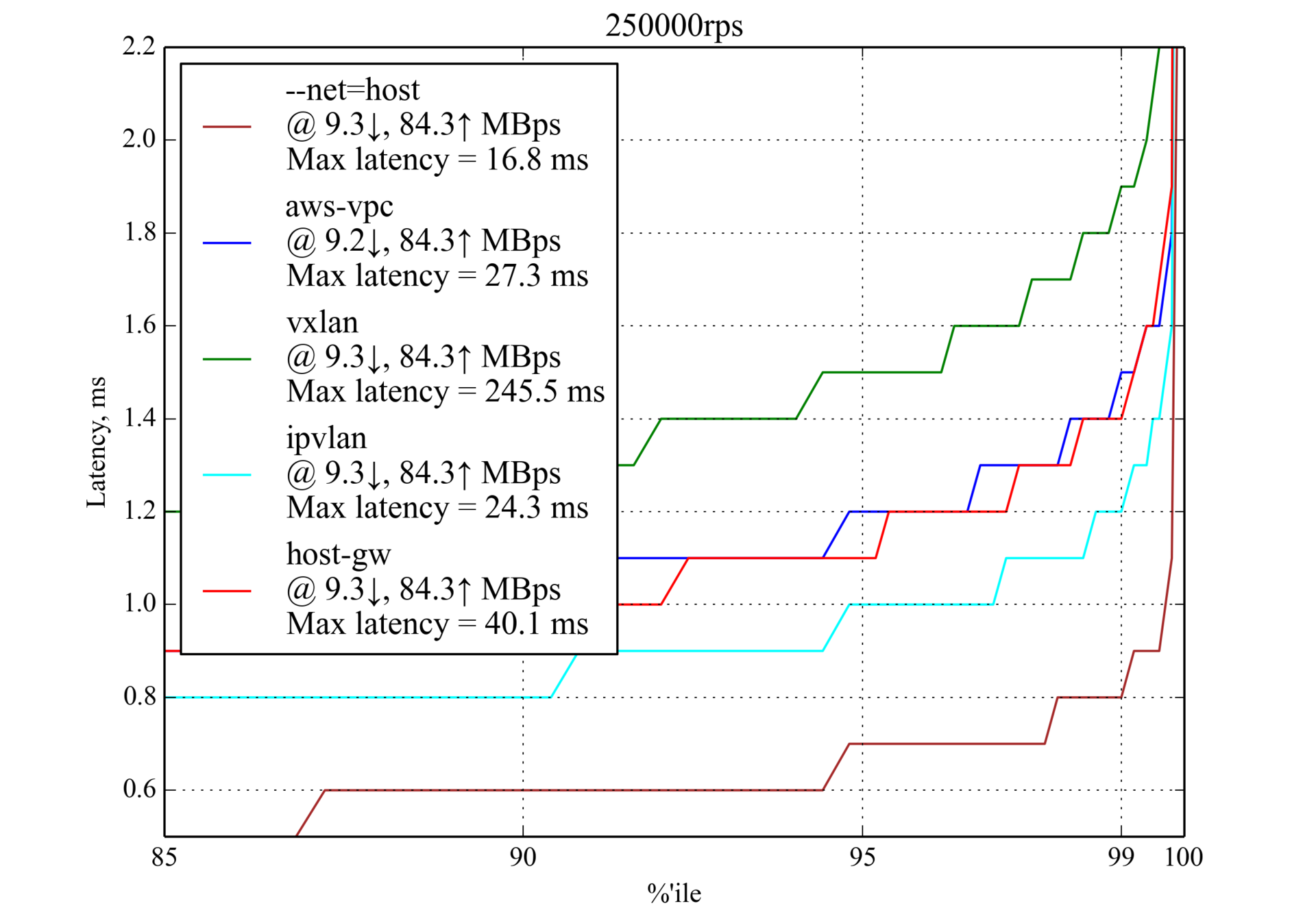

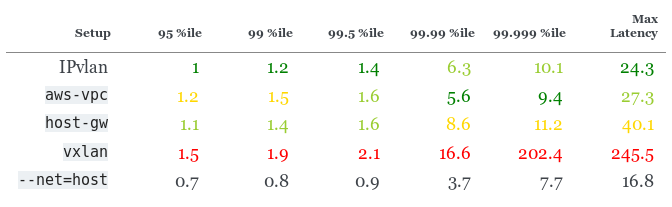

It is assumed that such a load is normal for production, so the results are especially important.

Percentile delays for 250,000 RPS (≈50% of maximum RPS), ms

IPvlan again shows better performance, but

aws-vpc best result in percentiles of 99.99 and 99.999. host-gw superior to aws-vpc in percentiles 95 and 99.350,000 RPS, 350 bytes

In most cases, the delay is close to the results for 250,000 RPS (350 bytes), but it is growing rapidly after the 99.5 percentile, which means an approximation to the maximum RPS.

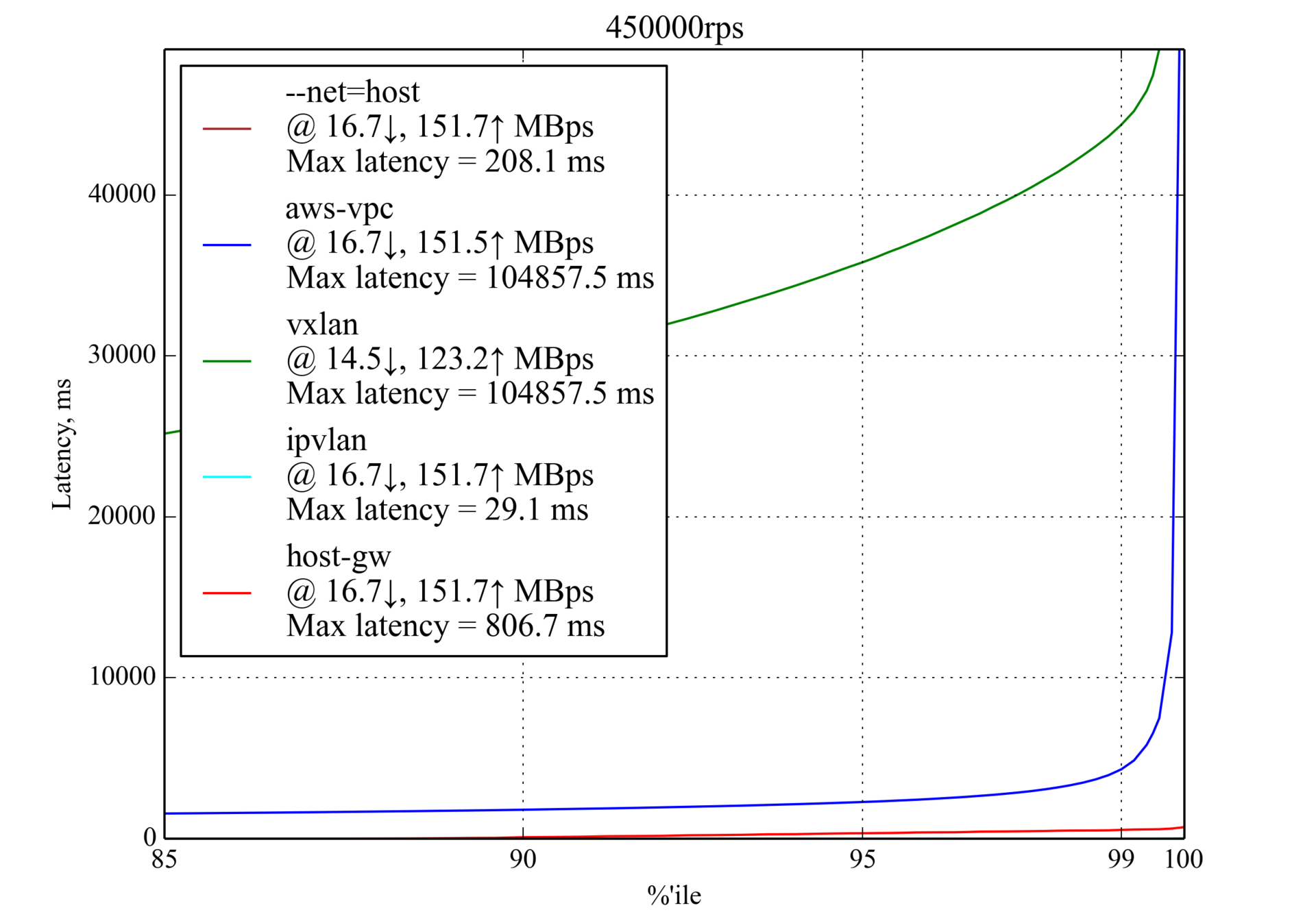

450,000 RPS, 350 bytes

Interestingly,

host-gw shows much better performance than aws-vpc :

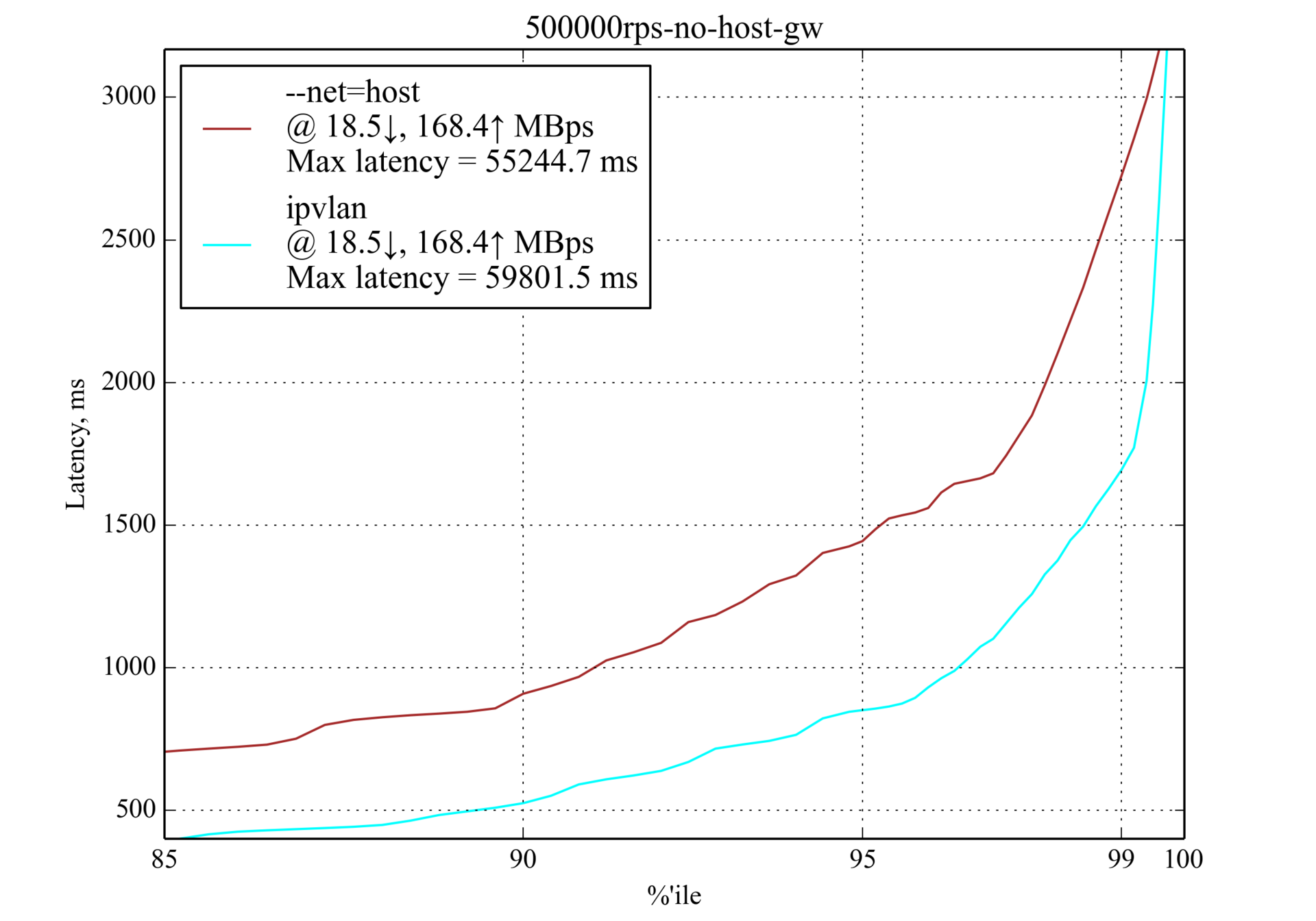

500,000 RPS, 350 bytes

With a load of 500,000 RPS, only IPvlan still works and even surpasses

--net=host , but the delay is so high that we cannot call it valid for applications that are sensitive to delays.

50,000 RPS, 4 kilobytes

Large results of requests (4 kilobytes against 350 bytes tested earlier) lead to a greater network load, but the list of leaders practically does not change:

Percentile delays at 50,000 RPS (≈20% of maximum RPS), ms

150,000 RPS, 4 kilobytes

host-gw a surprisingly poor percentile of 99.999, but it still shows good results for smaller percentiles.Percentages of delay for 150,000 RPS (≈60% of maximum RPS), ms

250,000 RPS, 4 kilobytes

This is the maximum RPS with the greatest answer (4 Kb).

aws-vpc far superior to host-gw , unlike in the case with a small response (350 bytes).Vxlan was again excluded from the schedule.Environment for testing

The basics

To better understand this article and reproduce our test environment, you need to be familiar with the basics of high performance.

These articles contain useful information on this topic:

- How to receive a million packets per second from CloudFlare;

- How to achieve low latency with 10Gbps Ethernet from CloudFlare;

- Scaling in the Linux Networking Stack from the Linux kernel documentation.

Cars

- We used two instances of c4.8xlarge in Amazon AWS EC2 with CentOS 7.

- Enhanced networking is enabled on both machines.

- Each machine is NUMA with 2 processors, each processor has 9 cores, each core has 2 threads (hyperthreads), which ensures the effective launch of 36 threads on each machine.

- Each machine on the network card 10Gbps (NIC) and 60 GB of RAM.

- To support enhanced networking and IPvlan, we installed Linux 4.3.0 kernel with Intel ixgbevf driver.

Configuration

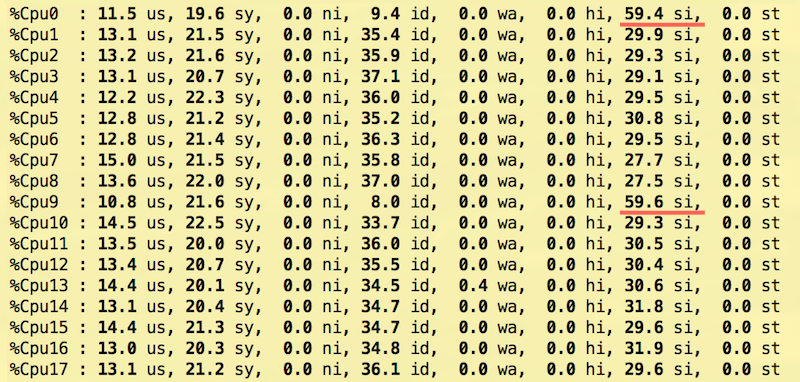

Modern NICs use Receive Side Scaling (RSS) over multiple interrupt request ( IRQ ) lines. EC2 offers only two such lines in a virtualized environment, so we tested several configurations with RSS and Receive Packet Steering (RPS) and came up with the following settings, partly recommended by the Linux kernel documentation:

- IRQ . The first core of each of the two NUMA nodes is configured to receive interrupts from the NIC. To map a CPU to a NUMA node, use

lscpu:$ lscpu | grep NUMA NUMA node(s): 2 NUMA node0 CPU(s): 0-8,18-26 NUMA node1 CPU(s): 9-17,27-35

This setting is done by writing0and9to/proc/irq/<num>/smp_affinity_list, where the IRQ numbers are obtained viagrep eth0 /proc/interrupts:$ echo 0 > /proc/irq/265/smp_affinity_list $ echo 9 > /proc/irq/266/smp_affinity_list - Receive Packet Steering (RPS) . Several combinations have been tested for RPS. To reduce the delay, we unloaded the processors from IRQ processing, using only CPU numbers 1–8 and 10–17. Unlike

smp_affinityin the IRQ, therps_cpussysfs filerps_cpusnot have arps_cpuspostfix, sorps_cpusare_listto enumerate CPUs to which RPS can send traffic (see Linux kernel documentation: RPS Configuration ) :$ echo "00000000,0003fdfe" > /sys/class/net/eth0/queues/rx-0/rps_cpus $ echo "00000000,0003fdfe" > /sys/class/net/eth0/queues/rx-1/rps_cpus - Transmit Packet Steering (XPS) . All NUMA 0 processors (including HyperThreading, i.e. CPUs numbered 0-8, 18-26) were tuned to tx-0, and NUMA 1 processors (9-17, 27-37) were tuned to tx-1 (more see Linux kernel documentation: XPS Configuration ) :

$ echo "00000000,07fc01ff" > /sys/class/net/eth0/queues/tx-0/xps_cpus $ echo "0000000f,f803fe00" > /sys/class/net/eth0/queues/tx-1/xps_cpus - Receive Flow Steering (RFS) . We planned to use 60 thousand permanent connections, and official documentation recommends that this number be rounded to the nearest power of two:

$ echo 65536 > /proc/sys/net/core/rps_sock_flow_entries $ echo 32768 > /sys/class/net/eth0/queues/rx-0/rps_flow_cnt $ echo 32768 > /sys/class/net/eth0/queues/rx-1/rps_flow_cnt - Nginx Nginx used 18 workflows, each with its own CPU (0-17). This is configured using

worker_cpu_affinity:workers 18; worker_cpu_affinity 1 10 100 1000 10000 ...; - Tcpkali Tcpkali does not have built-in support for binding to specific CPUs. To use RFS, we ran tcpkali on the

tasksetand set up the scheduler for the rare reassignment of threads:$ echo 10000000 > /proc/sys/kernel/sched_migration_cost_ns $ taskset -ac 0-17 tcpkali --threads 18 ...

This configuration allowed us to evenly distribute the interrupt load across the processor cores and achieve better throughput while maintaining the same latency as in the other tested configurations.

Kernels 0 and 9 serve only network interrupt (NIC) and do not work with packets, but they remain the most busy:

I also used tuned from Red Hat with the network-latency profile enabled.

To minimize the effect of nf_conntrack, NOTRACK rules have been added.

The sysctl configuration has been configured to support a large number of TCP connections:

fs.file-max = 1024000 net.ipv4.ip_local_port_range = "2000 65535" net.ipv4.tcp_max_tw_buckets = 2000000 net.ipv4.tcp_tw_reuse = 1 net.ipv4.tcp_tw_recycle = 1 net.ipv4.tcp_fin_timeout = 10 net.ipv4.tcp_slow_start_after_idle = 0 net.ipv4.tcp_low_latency = 1 From the translator : Many thanks to my colleagues from Machine Zone, Inc. for testing! It helped us, so we wanted to share it with others.

PS Perhaps you will also be interested in our article " Container Networking Interface (CNI) - network interface and standard for Linux-containers ."

Source: https://habr.com/ru/post/332432/

All Articles