OpenStack. A detective story, or where does the connection get lost? Part two. IPv6 and other things

An adventure with IPv6

Let me say this up front: folks, I don't understand why IPv6 is needed on a platform that does not depend on this protocol at all, yet still assigns itself whatever addresses it likes. My colleague and I asked ourselves the old Russian question, "what does a goat need an accordion for?" (that is, why keep something useless), and disabled IPv6. And we disabled it thoroughly: in three places, at the kernel and module level. At the same time, we updated the software to the latest stable version.

- In /etc/default/grub, we added the parameter ipv6.disable=1 to the GRUB_CMDLINE_LINUX_DEFAULT line.

- In /etc/modprobe.d/aliases, we set alias net-pf-10 off and alias ipv6 off.

- In /etc/sysctl.conf, we set net.ipv6.conf.all.disable_ipv6 = 1 and net.ipv6.conf.default.disable_ipv6 = 1.
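Since the switch was flipped in three separate files, it helps to check all three at once before rebooting (or before blaming dnsmasq). Below is a minimal sketch, assuming the file contents are passed in as strings; all function names are hypothetical, and it does simple line matching rather than full GRUB, modprobe or sysctl parsing.

```python
from pathlib import Path

# Hypothetical audit helpers for the three IPv6 "kill switches" listed above.
# Simple line matching only; not a full parser for any of these formats.


def grub_disables_ipv6(grub_text: str) -> bool:
    """True if GRUB_CMDLINE_LINUX_DEFAULT contains the token ipv6.disable=1."""
    for raw in grub_text.splitlines():
        line = raw.strip()
        if line.startswith("GRUB_CMDLINE_LINUX_DEFAULT="):
            value = line.split("=", 1)[1].strip().strip("\"'")
            # Kernel parameters are whitespace-separated, so a spaced-out
            # "ipv6.disable = 1" does not count as the parameter.
            return "ipv6.disable=1" in value.split()
    return False


def modprobe_disables_ipv6(aliases_text: str) -> bool:
    """True if both 'alias net-pf-10 off' and 'alias ipv6 off' are present."""
    disabled = set()
    for raw in aliases_text.splitlines():
        tokens = raw.split()
        if len(tokens) == 3 and tokens[0] == "alias" and tokens[2] == "off":
            disabled.add(tokens[1])
    return {"net-pf-10", "ipv6"} <= disabled


def sysctl_disables_ipv6(sysctl_text: str) -> bool:
    """True if both disable_ipv6 keys are set to 1 (sysctl.conf syntax)."""
    settings = {}
    for raw in sysctl_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")) or "=" not in line:
            continue  # skip blanks and comments
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return all(
        settings.get(key) == "1"
        for key in ("net.ipv6.conf.all.disable_ipv6",
                    "net.ipv6.conf.default.disable_ipv6")
    )


def audit_ipv6_disable(grub_text: str, aliases_text: str, sysctl_text: str) -> dict:
    """Report which of the three switches is active."""
    return {
        "grub": grub_disables_ipv6(grub_text),
        "modprobe": modprobe_disables_ipv6(aliases_text),
        "sysctl": sysctl_disables_ipv6(sysctl_text),
    }


def audit_system() -> dict:
    """Run the audit on the file paths from the article; a missing file counts as unset."""
    def read(path: str) -> str:
        p = Path(path)
        return p.read_text() if p.exists() else ""
    return audit_ipv6_disable(read("/etc/default/grub"),
                              read("/etc/modprobe.d/aliases"),
                              read("/etc/sysctl.conf"))
```

Calling audit_system() on a node gives a quick dictionary of which switches are on. Given the conclusion further down, it is perhaps more useful on an OpenStack node as a check that none of them is set.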

And then DHCP address assignment broke on us. Along with it, naturally, passwords stopped being passed into new machines.

What had changed? Apparently dnsmasq was not starting. I dug the old software versions out of the backups and installed them. But, lo and behold, it still didn't work!

What kind of miracle is this? The logs are full of messages, but nothing points to what is actually going on. I started combing through every log in turn, including the system ones. By chance I noticed that logs explaining what happened do exist, but not in /var/log, as one would expect. No, for some reason they are tucked away in /var/lib/neutron/ha_confs, in a subdirectory whose name includes the subnet name. That is where the neutron-generated config and the startup logs for keepalived live. Our configuration is a "stable" one, and the automatically generated config contains IPv6 addresses. The keepalived daemon fails to start because the system rejects those addresses: we had disabled IPv6, after all. As a result, dnsmasq no longer starts on the required address, because that address does not exist; keepalived was supposed to bring it up. I had to roll everything back.
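To see which IPv6 addresses neutron has written into the keepalived configs (the ones keepalived cannot bring up once IPv6 is disabled), a small scan of the text is enough. A minimal sketch, assuming each config is a file named keepalived.conf one directory below /var/lib/neutron/ha_confs; that file layout is an assumption, as are the function names. Anything that parses as an IPv6 address or address/prefix is reported.

```python
import ipaddress
from pathlib import Path


def ipv6_entries(keepalived_conf: str) -> list[str]:
    """Return every whitespace-separated token that parses as an IPv6
    address or address/prefix, in the order it appears."""
    found = []
    for token in keepalived_conf.split():
        try:
            iface = ipaddress.ip_interface(token)
        except ValueError:
            continue  # keywords, braces, device names, plain numbers
        if iface.version == 6:
            found.append(str(iface))
    return found


def scan_ha_confs(root: str = "/var/lib/neutron/ha_confs") -> dict[str, list[str]]:
    """Map each keepalived.conf under root to the IPv6 entries it contains.
    ASSUMPTION: configs live at <root>/<subdirectory>/keepalived.conf;
    adjust the glob pattern if your neutron version lays them out differently."""
    result = {}
    for conf in sorted(Path(root).glob("*/keepalived.conf")):
        entries = ipv6_entries(conf.read_text())
        if entries:
            result[str(conf)] = entries
    return result
```

A non-empty result from scan_ha_confs() on a node with IPv6 disabled in the kernel would match the failure described above.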

Conclusion: If we disable IPv6 at the kernel level, then dnsmasq stops running.

Do not disable IPv6 on OpenStack Mitaka!

Old problem


But the old story continues.

In some cases the problem appears right after a virtual machine is created, in others it does not. As a reminder: from time to time, some of the packets start to disappear. Sometimes it even looks like the picture below.

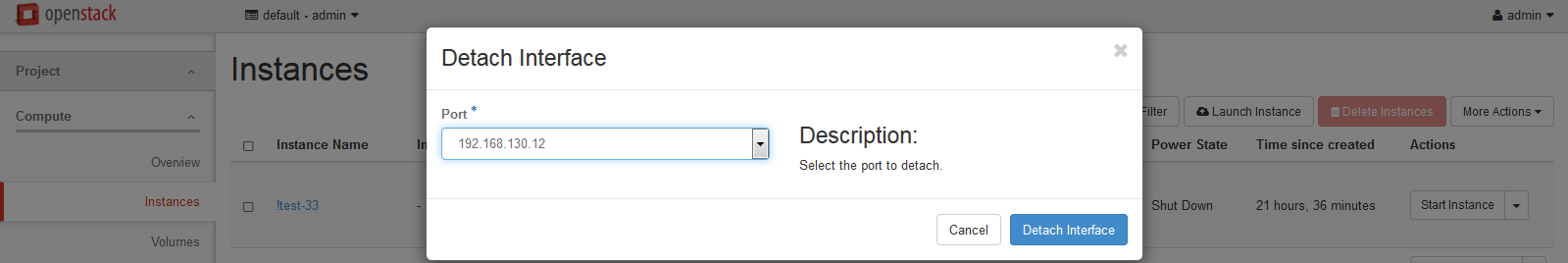
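Because the loss comes and goes, it helps to put a number on it per VM rather than eyeballing ping output. A minimal sketch, assuming Linux iputils-style ping output (the "N packets transmitted, M received" summary line; the optional "packets" word also covers the BSD wording). The function names are hypothetical, and measure_loss assumes a ping binary that accepts -c.

```python
import re
import subprocess

# Matches e.g. "20 packets transmitted, 13 received, 35% packet loss"
# and the BSD form "10 packets transmitted, 7 packets received, ...".
SUMMARY_RE = re.compile(r"(\d+) packets transmitted, (\d+) (?:packets )?received")


def packet_loss_percent(ping_output: str) -> float:
    """Compute packet loss (%) from the ping summary line."""
    m = SUMMARY_RE.search(ping_output)
    if m is None:
        raise ValueError("no ping summary line found")
    sent, received = int(m.group(1)), int(m.group(2))
    if sent == 0:
        raise ValueError("no packets were transmitted")
    return 100.0 * (sent - received) / sent


def measure_loss(host: str, count: int = 20) -> float:
    """Run the system ping and return the loss percentage.
    check=False: ping exits non-zero on loss, but the summary is still printed."""
    proc = subprocess.run(["ping", "-c", str(count), host],
                          capture_output=True, text=True, check=False)
    return packet_loss_percent(proc.stdout)
```

Running measure_loss() periodically against each VM's internal address gives a rough loss history, which makes it easier to tell whether a workaround actually helped.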

We found a relatively simple way to work around the problem. For now, we strip the machine's internal IP address and assign it a new one from a different subnet, which means we often have to add new subnets. The problem goes away for a while, and some of the machines keep working fine for the time being. A real "dance with a tambourine", as Russians call shamanic trial-and-error troubleshooting.

New Horizons

Recently I proved to myself that the problem lies deep inside OpenStack. We built a new platform in the image and likeness of the old one, for now on test address ranges. Everything worked beautifully with up to 300 machines created in the farm, and without a single failure!

We were delighted and started preparing it for "production". That meant introducing "ragged" (non-contiguous) IP address ranges; that is simply how things had worked out. We cleaned up the farm, removing those three hundred machines. And suddenly the three test VMs suffered the same fate as the old farm: packets started disappearing, and in large numbers. It seems the cause lies in OpenStack's network configuration.

Yes, yes! It all comes down to how the virtual router is set up. Unfortunately, examples of a proper network design for more than 20 virtual machines are nowhere to be found online. So it looks like this story will be continued...

Source: https://habr.com/ru/post/332400/
