Continuous protection of web applications: the view from researchers and operators

Today we have an unusual article. As the title suggests, it is devoted to the problems of continuous protection of web applications and is split into two parts reflecting two views of the problem: that of WAF developers (Andrei Petukhov, SolidLab) and that of a center for monitoring and countering cyber attacks that uses a WAF to provide services to its customers (@avpavlov, Solar JSOC).

We will start with the developers, and in a week we will give the floor to the operators of WAF.

')

We are from SolidLab. Our main expertise is application security in all aspects - offensive (analysis of application security, infrastructures, processes) and defensive (building SDLC and certain aspects of it - for example, manual code analysis, training courses and seminars, the introduction of protection in general and our WAF in particular).

To begin, we would like to talk about trends in the world of developing and operating web applications that define the landscape of threats and methods of protection. We believe that arguments about SOC, continuous monitoring and WAFs will soon be inseparable from arguments about continuous development and features of this process for a particular application / service. Let's start with an obvious tendency to reduce the length of the release cycle. Interesting Facts:

Short release cycles lead to a rethinking of the reasons and motives that made it appropriate to use traditional events - to attract external consultants to find flaws (no matter what method is a white or black box), manual testing with your own QA or security team: "I checked the release code and live calmly for half a year until the next one" does not work anymore. In the development with short release cycles, the listed “traditional” events are considered more maturely: as a source of feedback on the processes and their provision with all necessary tools, people, methods, and not as a way to get some protected state of the application and fix it.

Note that the continuity of processes (especially in terms of testing and deployment) implies a transition to a high degree of automation of routine tasks. Automation perfectly helps to reduce the frequency of typical (understandable to all development participants) flaws in the product:

We also note that the solution of routine tasks of modern web platforms and frameworks (RoR, Django, Spring, Struts, ASP.NET MVC, etc.) are trying to take over, without giving developers a chance to shoot themselves in the leg, implementing, for example , your own protection against CSRF or find / replace based template engine. Accordingly, as new web platforms are adapted, in the future we can expect a decrease in the likelihood of introducing into the code the flaws that allow conducting attacks like CSRF, SQL injection, XSS. Needless to say, even the majority of XML parsers today by default prohibit the resolution of external entities (that is, they use the safe defaults principle).

From such an ideological point of view, the tactic of dealing with the shortcomings of other types is not so straightforward:

For completeness, it is important to mention solutions to the listed tasks within sSDLC using methods alternative to monitoring.

You can get rid of atypical flaws through an whateverbox-analysis from a third-party organization that is carried out with a certain periodicity, or through mass popular continuous testing aka Bug Bounty. The disadvantages of the third type at the assembly stage can be eliminated by implementing an external dependency analysis (see Vulners, WhiteSource, OWASP dependency-check), and at the operation stage by performing the same checks with the same tools, but as a separate task and with greater frequency. My colleagues and I will talk more about protection against attacks through continuous monitoring and response.

From the point of view of managing the development process, the security parameters of the software / service being created are the targets to be planned. The tactics of achieving these indicators, in an amicable way, is determined at the stage of project initialization (or at the stage of its reform) and depends on the threat model and risk assessment, constraints (budget, time and others), available production resources (qualification, methodological support) and so on. Further. Our vision is that properly organized monitoring of a web application during the operational phase will not only reduce the risks associated with the realization of threats through the flaws of the web application and its environment (which is obvious), but also reduce the risks associated with incorrect management decisions, which are made during the development process, or the improper implementation of good and correct decisions.

In other words, the presence of correct monitoring increases the number of degrees of freedom in determining the tactics of achieving the security parameters of the software / service being created at the initial stage.

From the reasoning above, it is clear that, among others, proper monitoring should address the following key tasks for protecting web applications:

In addition to the listed tasks, which, in our opinion, should be solved by proper monitoring (and by any WAF as the main tool for building such a process), we also offer a list of important features for WAFs.

In contrast to the task of comparing WAFs in general (according to functional criteria or performance criteria) and talking about efficiency in general (more than one benchmark WAFs were not recognized by the community, all were decently criticized), we want to draw attention to the properties of tools will assess whether the tool is suitable for protecting a particular application. The statement of the problem is just that - to assess the applicability of WAF for a specific application on a specific technology stack.

WAF must understand the application protocol of the protected application.

There is a simple rule: if the WAF cannot parse how the parameter values are transmitted, it will not be able to detect and manipulate these values (injection / tampering).

By application protocol, we understand:

Thus, WAF's parsing of the protocol of the protected application is the definition for each HTTP request, which application function is called or which resource is requested, with what parameters and on whose behalf if it is a closed application area that is available after authentication.

As an example of interesting parameter transfer protocols, the following can be cited:

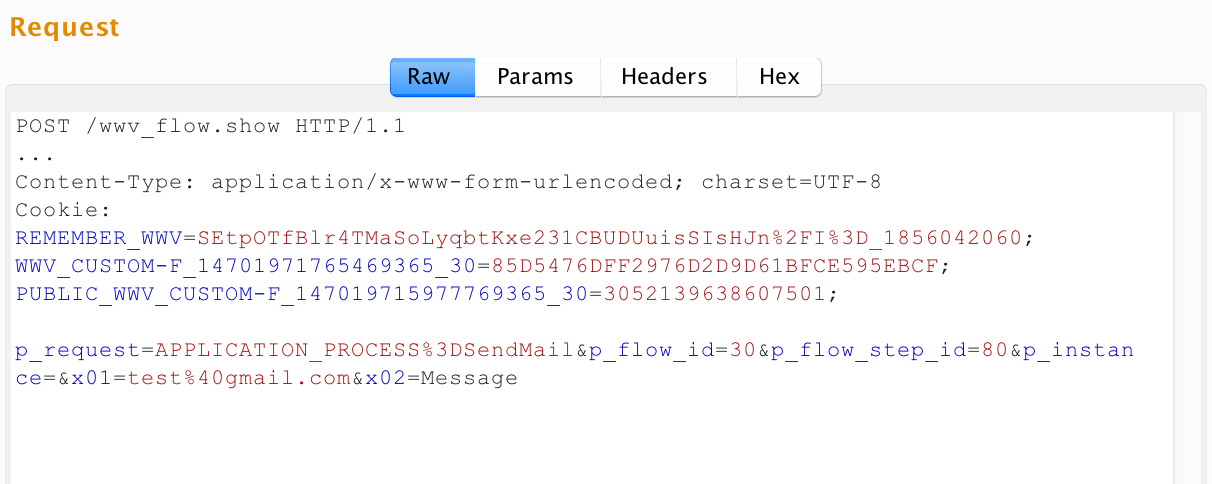

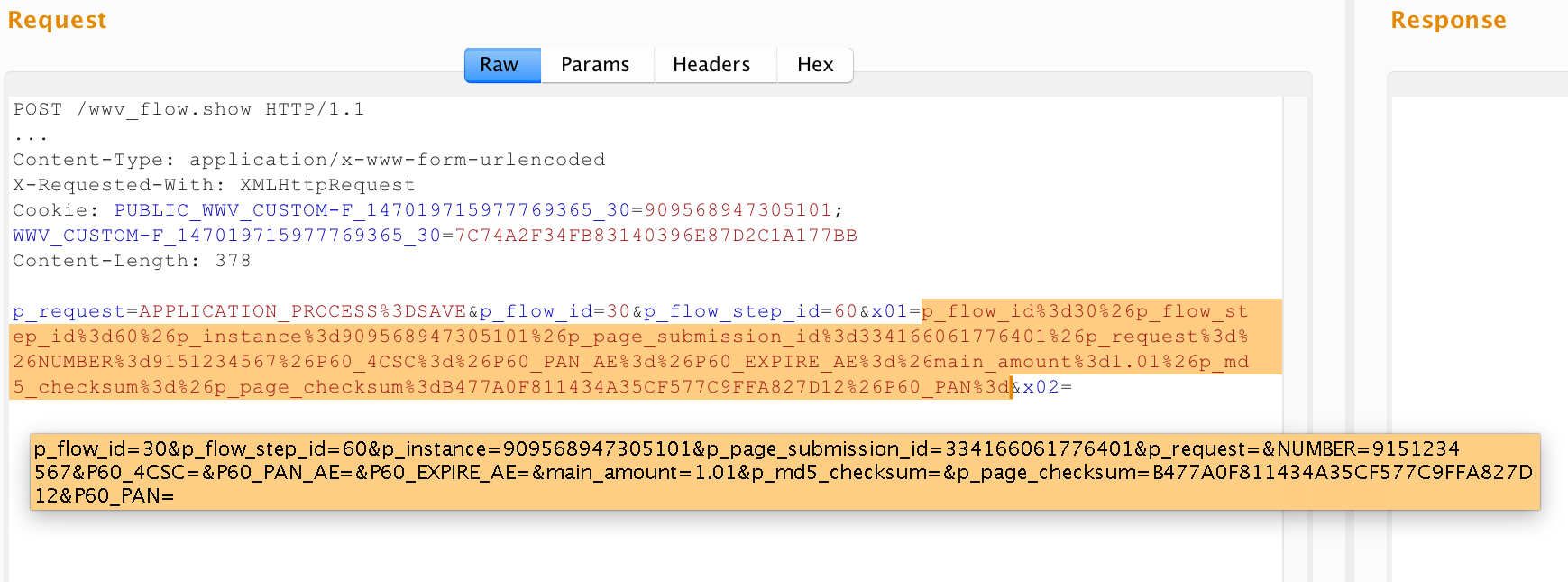

If in the first two examples a protocol parsing was required to determine the values of input parameters, then in applications of this type, WAF must additionally understand which operation or resource is being requested. Note that no one bothers application developers on APEX in the x01, x02, ... parameters to transfer, for example, XML / JSON encoded in base64, or, as in the last snapshot, the X-WWW-URLENCODED parameters serialized.

WAF must have granularity of the level of operations of the protected application.

Applications on APEX perfectly illustrate the following thesis: WAF should apply its mechanisms / policies / rules not with the granularity of HTTP protocol entities (URL sections, headers and their values, parameter names and their values), but with the granularity of the application functions and their input parameters.

Indeed, for an application on APEX, the parameters x01, x02, etc. will be a transport of values for all its functions, but:

It turns out that we want the following from the WAF mechanisms:

The authors of this article encountered a situation where User Tracking [1] did not work on the WAF of one large vendor on an APEX application, just because the rules for sharing successful login actions, unsuccessful login actions, logout actions, and others actions could not be set using the expressive means provided - these were regular expressions over the fields of the HTTP request.

The above reasoning is true, of course, not only for APEX-applications, but also for various applications with complex non-URL-based routing: XML-RPC, JSON-RPC, SOAP, etc.

WAF must be able to set policies at the level of operations and objects of the protected application.

It’s no secret that the main ways of detecting attacks on web applications are parsing for detecting syntax anomalies (corresponds to injection attacks) and statistical analysis for detecting anomalies associated with too many requests (password / token / OTP selection, dirbasting, enumeration of objects applications — for example, existing users, smart DoS, and so on). Attacks that do not cause syntactic or statistical anomalies are much more difficult - a typical example would be a request for someone else's objects (Insecure Direct Object Reference). Such attacks are often called “logical” or attacks on business logic.

A fair question arises - at what level of abstraction do anomalies occur during attacks of this type?

We believe that anomalies arising from attacks on business logic are anomalies of the level of operations and objects of the protected application, which requires understanding application scenarios, life cycle and belonging of its objects to application users, calculating data and object dependencies between individual steps one use case or between different use cases, it is possible to identify anomalies arising from attacks at the logic level.

In our opinion, the perspective of the development of WAFs as monitoring tools is precisely an understanding of the application domain level, working at the level of operations and objects, building dependencies between them and, as a result, detecting attacks on the logic of scenarios in this subject area.

We will start with the developers, and in a week we will give the floor to the WAF operators.

We are from SolidLab. Our core expertise is application security in all its aspects: offensive (security analysis of applications, infrastructures and processes) and defensive (building an SDLC and its individual parts, for example manual code analysis, training courses and seminars, and deploying protection in general and our WAF in particular).

To begin, we would like to talk about the trends in web application development and operation that define the landscape of threats and protection methods. We believe that discussions of SOC, continuous monitoring and WAFs will soon be inseparable from discussions of continuous development and the specifics of that process for a particular application / service. Let's start with the obvious trend toward shorter release cycles. Some interesting facts:

- The shortening of software release cycles was noted as a new trend back in 2001, in a Microsoft Research article (visionaries?).

- The Wikipedia article on Continuous Delivery appeared in December 2011.

- In 2014, the Continuous Delivery Conference appeared.

- Over the past 5 years, a large number of scientific articles have appeared (see Google Scholar) that study the observed phenomena (for example, “Continuous Software Engineering and Beyond: Trends and Challenges”) or analyze personal experience (for example, “Continuous delivery? Easy! Just change everything (well, maybe it is not that easy)”).

Short release cycles force a rethinking of the reasons and motives that used to justify the traditional measures: bringing in external consultants to look for flaws (whether by white-box or black-box methods) and manual testing by an in-house QA or security team. "I checked the release code and can live in peace for half a year until the next one" no longer works. In development with short release cycles, these “traditional” measures are viewed more maturely: as a source of feedback on the processes and on how well they are supplied with the necessary tools, people and methods, rather than as a way to reach some secure state of the application and freeze it.

Note that continuity of processes (especially testing and deployment) implies a high degree of automation of routine tasks. Automation is very effective at reducing the frequency of typical flaws (those understood by all development participants) in the product:

- Flaws for which tests have been written.

- Flaws for which rules have been written for a static / dynamic analyzer.

- Flaws that result from the human factor in environment preparation, configuration and deployment: the probability of introducing them is minimized through automation and competent change management of the automation scripts themselves.

We also note that modern web platforms and frameworks (RoR, Django, Spring, Struts, ASP.NET MVC, etc.) try to take over routine tasks themselves, leaving developers no chance to shoot themselves in the foot by implementing, for example, their own CSRF protection or a template engine based on find / replace. Accordingly, as new web platforms are adopted, we can expect a lower likelihood of flaws that enable attacks such as CSRF, SQL injection and XSS being introduced into the code. Even most XML parsers today prohibit the resolution of external entities by default (that is, they follow the safe defaults principle).

From this point of view, the tactics for dealing with flaws of other types are not so straightforward:

- Atypical logic-level flaws in individual modules (functions that the web platform cannot take over). For example, flaws related to incorrect / insufficient authorization when accessing objects (Insecure Direct Object Reference).

- Flaws that arise at the boundary between the responsibilities of different groups (for example, developers of different services in a microservice architecture in the absence of explicit contracts, or administrators and DevOps). Examples are flaws related to importing or uploading files (Insecure File Upload) and flaws related to incorrect integration (for example, with SSO, a payment system or a cloud chat).

- Flaws related to the use of 3rd-party libraries / platforms / frameworks with published vulnerabilities. This also covers binary libraries for which the application uses wrappers, and utilities that run as separate processes (vivid examples are the ImageMagick vulnerabilities CVE-2016-3714, CVE-2016-3715, CVE-2016-3716, CVE-2016-3717 and CVE-2016-3718, or ffmpeg). The importance of timely protection against attacks on flaws in 3rd-party components can hardly be overestimated. In 2017, the practical feasibility of mass automated attacks across the entire Internet is beyond question. Examples include the recent raids on Joomla (for example, CVE-2016-8870 + CVE-2016-8869) and on Apache Struts (CVE-2017-5638).

For completeness, it is important to mention ways of solving the listed problems within an sSDLC by methods other than monitoring.

Atypical flaws can be addressed through periodic analysis by a third-party organization (white-box, black-box or anything in between), or through crowd-sourced continuous testing, also known as Bug Bounty. Flaws of the third type can be eliminated at the build stage by introducing analysis of external dependencies (see Vulners, WhiteSource, OWASP dependency-check), and at the operation stage by running the same checks with the same tools, but as a separate task and with greater frequency. My colleagues and I will go on to talk in more detail about protection against attacks through continuous monitoring and response.

From the point of view of managing the development process, the security parameters of the software / service being created are targets to be planned for. The tactics for achieving these targets should ideally be determined at the project initiation stage (or when the project is being reorganized) and depend on the threat model and risk assessment, on constraints (budget, time and others), on the available production resources (qualifications, methodological support) and so on. Our vision is that properly organized monitoring of a web application during operation not only reduces the risks associated with threats being realized through flaws in the web application and its environment (which is obvious), but also reduces the risks associated with incorrect management decisions made during development, or with the improper implementation of good and correct decisions.

In other words, having proper monitoring in place increases the number of degrees of freedom available at the initial stage when choosing the tactics for achieving the security parameters of the software / service being created.

From the reasoning above it follows that proper monitoring should address, among others, the following key tasks of web application protection:

- Operational protection against 1-day attacks on application components. The goal is to prevent an attack on a 1-day vulnerability (and preferably a 0-day as well) if, for some reason, the attack arrives before the update is installed.

- Adaptation to the constant changes of the application (both functional and technological). The goal is to be able, at any point in time, to effectively control client interactions with the changed aspects of the application (to see attacks and to be able to block them granularly).

- Detection of logical attacks on the application. The goal is to minimize the consequences of atypical (logical) errors that make it into production. It is rational to assume that such errors are almost impossible to eliminate completely by automated procedures within an sSDLC, and therefore there are only two working options: Bug Bounty and monitoring with response.
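
As an illustration of the first task, a 1-day "virtual patch" can be as small as a single request check. The sketch below assumes the publicly described exploitation vector of CVE-2017-5638 (an OGNL expression placed in the Content-Type header). The function name and the representation of a request as a plain dictionary of headers are hypothetical, and a real rule would have to be tuned against the traffic of the protected application.

```python
import re

# OGNL expressions start with "%{" or "${". Ordinary Content-Type values
# (e.g. "multipart/form-data; boundary=...") are not expected to contain them.
OGNL_MARKER = re.compile(r"[%$]\{")


def violates_struts_virtual_patch(headers: dict) -> bool:
    """Return True if the request should be blocked by the virtual patch."""
    for name, value in headers.items():
        # Header names are case-insensitive in HTTP.
        if name.lower() == "content-type" and OGNL_MARKER.search(value):
            return True
    return False
```

Such a check buys time until the vulnerable component is updated; it does not replace the update.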

In addition to the listed tasks, which in our opinion proper monitoring (and any WAF as the main tool for building such a process) should solve, we also offer a list of important features for WAFs.

Rather than comparing WAFs in general (by functional or performance criteria) or talking about effectiveness in general (no WAF benchmark has been accepted by the community; all of them have been thoroughly criticized), we want to draw attention to the properties that make it possible to assess whether a tool is suitable for protecting a particular application. The problem is stated exactly this way: to assess the applicability of a WAF to a specific application on a specific technology stack.

A WAF must understand the application protocol of the protected application.

There is a simple rule: if the WAF cannot parse how parameter values are transmitted, it will not be able to detect manipulation of those values (injection / tampering).

By application protocol we mean:

- The method of addressing application functions / resources. In the simplest applications, this is part of the path in the URL. Sometimes the URL path is always the same (for example, /do), functions are addressed by the value of a parameter such as “action”, and resources are specified by a parameter such as “page” or “res”. In the general case, a function / resource can be addressed by an arbitrary set of attributes of the HTTP request.

- The method of transmitting the input parameters of the application's functions. Note that the HTTP specification places no limits on the imagination of web developers in choosing how to carry the necessary data within the structure of an HTTP request.

Thus, for a WAF, parsing the protocol of the protected application means determining, for each HTTP request, which application function is called or which resource is requested, with what parameters, and on whose behalf (if the request targets a closed area of the application that is available only after authentication).
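
The first component, addressing of functions, can be sketched as follows. This is a minimal illustration rather than a description of any particular WAF: the function name `resolve_operation`, the `action:` / `path:` labels and the example host are assumptions, and only the two addressing schemes mentioned above (URL path, and a fixed /do entry point with an “action” parameter) are handled.

```python
from urllib.parse import urlsplit, parse_qs


def resolve_operation(method: str, url: str, body: str = "") -> str:
    """Map an HTTP request to the name of the application function it calls."""
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    if method == "POST":
        # A form-encoded body may carry the function selector as well.
        for key, values in parse_qs(body).items():
            params.setdefault(key, []).extend(values)
    if parts.path == "/do" and "action" in params:
        # Single entry point: the function is addressed by a parameter.
        return f"action:{params['action'][0]}"
    # Simplest case: the function is addressed by the URL path.
    return f"path:{parts.path}"
```

With this mapping, `GET /do?action=login` and `POST /do` with `action=reset` in the body resolve to two different operations even though the URL path is the same.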

The following are examples of interesting parameter transfer protocols:

- 3D Secure. At one of the steps of the protocol, the encapsulation looks like this: the server receives a POST request with the content type application/x-www-form-urlencoded, and the request body contains the PaReq parameter. The PaReq parameter is an XML document that has been compressed with the DEFLATE algorithm, then Base64-encoded and URL-encoded. Accordingly, the real parameters of the application are transmitted in the tags and attributes of this XML document. If the WAF cannot open such a “matryoshka” and apply analysis policies to the parameters inside the XML (and / or validate its structure), then the WAF is essentially working in Fail Open mode. Other examples of the same kind are the many cases of XML inside JSON and vice versa, usually with some Base64 packaging along the way.

- Google Web Toolkit and other protocols that use their own serialization (not JSON / XML / YAML / ...). Instead of a thousand words, one sample query:

Accordingly, if the WAF cannot extract from the request the final parameter values that the protected application operates on, the WAF does not work. Note that there are quite a few ways to serialize binary objects (and you can always write your own!).

- Oracle Application Express (APEX). Application URLs in APEX look like this:

http://apex.app:8090/apex/f?p=30:180:3426793174520701::::P180_ARTICLE_ID:5024

POST requests look similar in the URL part, and the parameters are transmitted in the body (x-www-form-urlencoded), but their names are the same regardless of the operation being called: x01=val1&x02=val2..., etc. Here is an example request:

While in the first two examples protocol parsing was needed to determine the values of input parameters, in applications of this type the WAF must additionally work out which operation or resource is being requested. Note that nothing prevents APEX application developers from passing, in the x01, x02, ... parameters, for example XML / JSON encoded in Base64 or, as in the last screenshot, serialized x-www-form-urlencoded parameters.
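
To make the 3D Secure “matryoshka” described above concrete, here is a minimal sketch of unwrapping it with the Python standard library. It assumes the compressed data uses the zlib container (falling back to raw DEFLATE); the helper names, the flattening of the XML into a dictionary and the sample message are all hypothetical and chosen only for illustration. A production parser would also use a hardened XML parser for untrusted input.

```python
import base64
import urllib.parse
import zlib
import xml.etree.ElementTree as ET


def wrap_pareq(xml_text: str) -> str:
    """Build a form body the way the text describes (used here for the demo)."""
    compressed = zlib.compress(xml_text.encode("utf-8"))
    b64 = base64.b64encode(compressed).decode("ascii")
    return urllib.parse.urlencode({"PaReq": b64})


def unwrap_pareq(body: str) -> dict:
    """URL-decode, Base64-decode, inflate and parse PaReq into flat parameters."""
    form = urllib.parse.parse_qs(body)
    raw = base64.b64decode(form["PaReq"][0])
    try:
        xml_bytes = zlib.decompress(raw)                    # zlib container
    except zlib.error:
        xml_bytes = zlib.decompress(raw, -zlib.MAX_WBITS)   # raw DEFLATE
    root = ET.fromstring(xml_bytes)
    params = {}
    for elem in root.iter():
        text = (elem.text or "").strip()
        if text:
            params[elem.tag] = text                 # value carried in a tag
        for name, value in elem.attrib.items():
            params[f"{elem.tag}@{name}"] = value    # value carried in an attribute
    return params


sample = '<Message id="42"><Purchase><amount>100.00</amount></Purchase></Message>'
params = unwrap_pareq(wrap_pareq(sample))
```

Only after this unwrapping do the values that the application actually operates on (here `amount` and `Message@id`) become available for signature checks or positive models.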

A WAF must work at the granularity of the protected application's operations.

APEX applications perfectly illustrate the following thesis: a WAF should apply its mechanisms / policies / rules not at the granularity of HTTP protocol entities (URL sections, headers and their values, parameter names and their values), but at the granularity of the application's functions and their input parameters.

Indeed, for an APEX application, the parameters x01, x02, etc. serve as the transport for the values of all its functions, but:

- The encapsulation of the values of these parameters may differ (see the x01 values in the screenshots above).

- As a result, the types, ranges and semantics of these parameters will also differ for each function of the application.

It turns out that we want the following from the WAF mechanisms:

- The subsystem that builds and applies positive models should build not one common positive model from all observed values of the x01 parameter, but N models, one for each application function that accepts this parameter.

- The signature analysis subsystem should apply to the x01 parameter not one set of signatures but K sets (K < N), depending on how the operator wants to cover x01 values with signatures for individual actions or groups of actions.

- If the operator wants to configure an additional rule for the x01 parameter (for example, to suppress false positives), they should be able to choose the scope of the rule not in terms of the HTTP protocol (a regular expression over the URL, for example), but again in terms of the application functions that accept x01 (for example, apply it in the registration function but not in the password reset function).
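
The first requirement can be sketched as follows. The example relies on the documented APEX URL syntax `f?p=App:Page:Session:...` to derive the operation; everything else is an assumption made for illustration: the class and function names, the deliberately naive model (maximum length plus a digits-only flag), and page 101 standing in for a hypothetical login page.

```python
from collections import defaultdict
from urllib.parse import urlsplit, parse_qs


def apex_operation(url: str) -> tuple:
    """Extract (application id, page id) from an APEX 'f?p=' URL."""
    p_value = parse_qs(urlsplit(url).query)["p"][0]
    fields = p_value.split(":")
    return fields[0], fields[1]


class PerOperationModels:
    """One positive model per (operation, parameter) pair, not per parameter."""

    def __init__(self):
        self._max_len = defaultdict(int)
        self._numeric_only = defaultdict(lambda: True)

    def learn(self, operation, name, value):
        key = (operation, name)
        self._max_len[key] = max(self._max_len[key], len(value))
        self._numeric_only[key] = self._numeric_only[key] and value.isdigit()

    def is_anomalous(self, operation, name, value):
        key = (operation, name)
        if key not in self._max_len:
            return True  # parameter never observed for this operation
        if len(value) > self._max_len[key]:
            return True
        return self._numeric_only[key] and not value.isdigit()


models = PerOperationModels()
article_op = apex_operation(
    "http://apex.app:8090/apex/f?p=30:180:3426793174520701::::P180_ARTICLE_ID:5024"
)
login_op = ("30", "101")  # hypothetical login page of the same application
models.learn(article_op, "x01", "5024")
models.learn(login_op, "x01", "alice@example.com")
```

Because the models are keyed by operation, a value such as `1 OR 1=1` in x01 is anomalous for the article page, while an e-mail address in x01 remains normal for the login page. A single shared model for x01 would have to accept both.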

The authors of this article have encountered a situation where User Tracking [1] did not work in the WAF of one large vendor on an APEX application, simply because the rules for distinguishing successful logins, failed logins, logouts and other actions could not be expressed with the means provided, which were regular expressions over the fields of the HTTP request.

The above reasoning is true, of course, not only for APEX applications, but also for various applications with complex non-URL-based routing: XML-RPC, JSON-RPC, SOAP, etc.

A WAF must be able to set policies at the level of the operations and objects of the protected application.

It is no secret that the main ways of detecting attacks on web applications are parsing, to detect syntactic anomalies (which correspond to injection attacks), and statistical analysis, to detect anomalies associated with too many requests (password / token / OTP guessing, dirbusting, enumeration of application objects such as existing users, smart DoS, and so on). Attacks that cause neither syntactic nor statistical anomalies are much harder to detect; a typical example is a request for someone else's objects (Insecure Direct Object Reference). Such attacks are often called “logical” attacks, or attacks on business logic.
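
For contrast with logical attacks, the statistical side is comparatively easy to sketch. Below is a minimal sliding-window counter kept per client and per operation; the class name, the choice of key and the thresholds are hypothetical and serve only as an illustration.

```python
from collections import defaultdict, deque


class RateAnomalyDetector:
    """Flags a client that calls one operation too often within a time window."""

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window = window_seconds
        self._events = defaultdict(deque)  # (client, operation) -> timestamps

    def observe(self, client: str, operation: str, now: float) -> bool:
        """Record a request; return True if the client exceeded the limit."""
        events = self._events[(client, operation)]
        events.append(now)
        # Drop timestamps that have fallen out of the window.
        while events and events[0] <= now - self.window:
            events.popleft()
        return len(events) > self.limit


detector = RateAnomalyDetector(limit=3, window_seconds=60)
flags = [detector.observe("10.0.0.1", "login", float(t)) for t in (0, 1, 2, 3)]
```

Here the fourth login attempt within a minute is flagged. A request for someone else's object, by contrast, would pass such a check unnoticed.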

A fair question arises: at what level of abstraction do anomalies occur during attacks of this type?

We believe that anomalies arising from attacks on business logic are anomalies at the level of the operations and objects of the protected application. Only by understanding the application's scenarios, the life cycle of its objects and their ownership by application users, and by computing data and object dependencies between the steps of one use case or between different use cases, is it possible to identify the anomalies that arise from logic-level attacks.
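
A very reduced sketch of one such object-level dependency is shown below: object identifiers that the application has shown to a user are remembered, and a later request for an identifier that was never shown to that user is treated as an anomaly. The class and method names are hypothetical, and the sketch assumes the monitor can already extract the user and the object identifiers from requests and responses.

```python
from collections import defaultdict


class ObjectOwnershipMonitor:
    """Tracks which object ids each user has legitimately been shown."""

    def __init__(self):
        self._visible = defaultdict(set)  # user -> object ids shown to that user

    def on_response(self, user: str, object_ids) -> None:
        """Remember the ids the application returned to this user."""
        self._visible[user].update(object_ids)

    def on_request(self, user: str, object_id: str) -> bool:
        """Return True if the request is anomalous at the object level."""
        return object_id not in self._visible[user]


monitor = ObjectOwnershipMonitor()
monitor.on_response("alice", {"5024", "5025"})
monitor.on_response("bob", {"6001"})
```

In this setup a request by bob for object 5024 is flagged, even though it is syntactically identical to the same request made by alice and causes no statistical anomaly.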

In our opinion, the future of WAFs as monitoring tools lies precisely in understanding the application domain: working at the level of operations and objects, building dependencies between them and, as a result, detecting attacks on the logic of scenarios in that domain.

[1] The User Tracking mechanism associates the requests that application users send after authentication with their usernames. To configure it, tools usually require the criteria for a successful login, a failed login and session invalidation (inactivity timeout if present, a new login as the same user, a logout request sent to a given URL, etc.).

- The picture shown before the cut is taken from gamer.ru.

Source: https://habr.com/ru/post/331786/
