Static analysis as part of the Unreal Engine development process

The Unreal Engine project is developing - a new code is added and the already written will be changed. The inevitable consequence of the development of the project is the appearance in the code of new errors that it is desirable to detect as soon as possible. One way to reduce the number of errors is to use the PVS-Studio static code analyzer. Moreover, the analyzer is also developing rapidly and is learning to find new error patterns, some of which will be discussed in this article. If you are concerned about the quality of the code of your projects, then this article is for you.

The publication was prepared by Andrei Karpov, code samples were provided by Ilya Ivanov and Sergey Vasiliev from the PVS-Studio team. The original source is the Unreal Engine Blog: " Static Analysis as part of the Development Process ".

Static code analysis, theory

Static code analysis is the process of identifying errors and shortcomings in the source code of programs. Static analysis can be viewed as an automated code review process. Let us dwell on the review of the code in more detail.

Code review is one of the oldest and most useful methods for detecting defects. It consists in joint attentive reading of the source code and making recommendations for its improvement. In the process of reading the code, errors or sections of code that may become erroneous in the future are detected. It is also considered that the author of the code during the review should not give an explanation of how this or that part of the program works. The algorithm must be understood directly from the program text and comments. If this condition is not met, then the code should be refined.

')

As a rule, code review works well, because programmers are much easier to notice errors in someone else's code. More details on the code review methodology can be found in Steve McConnell's excellent book “Code Complete”.

The code review methodology has two drawbacks:

- Extremely high price. It is necessary to regularly distract several programmers from their main work in order to review a new code or re-review a code after making recommendations. In this case, programmers should regularly take breaks for rest. If you try to view large fragments of code at once, then the attention is quickly dulled, and the benefits of reviewing the code quickly disappear.

- It is difficult for people to detect errors that are not directly related to the new / modified code. Considering the freshly written fragment, it is difficult to assume that the malloc function is working incorrectly in it due to the fact that the stdlib.h header file is not included . This situation is described in more detail in the article " Beautiful 64-bit error in the C language ". Another example: changing the type of function or variable in the header file. In theory, after such changes, you must look through all the code in which this function or variable is used. In practice, this is too time consuming and, as a rule, the review is limited only to those places where the programmer has changed something.

On the one hand, I want to regularly review the code. On the other hand, it is too expensive. A compromise solution is static code analysis tools. They process the source code of the programs and give the programmer recommendations to pay increased attention to certain areas of the code. At the same time, the analyzers do not get tired and check all the code affected by the edits in the header files. Of course, the program will not replace a full review of the code performed by a team of programmers. However, the benefit / price ratio makes using static analysis a very useful practice used by many companies.

Like any other error detection methodology, static analysis has its strengths and weaknesses. There is no one ideal program testing method. The best result can be obtained using a combination of different approaches, such as: good coding style, static code analysis, dynamic code analysis, unit testing, regression testing, and so on.

An important advantage of static analysis is the ability to find many errors immediately after they appear in the code, which means that their correction will be very cheap.

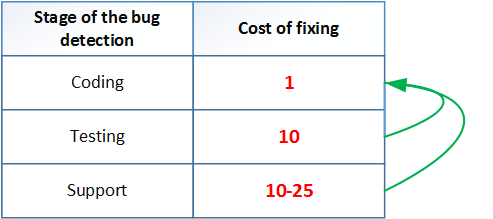

The fact is that the earlier the error is detected, the lower the cost of fixing it. So, according to the data given in McConnell's “Perfect Code”, the correction of an error at the testing stage is ten times more expensive than at the coding (writing code) stage:

Table 1. The average cost of correcting defects depending on the time of their detection (data for the table are taken from S. McConnell’s “Perfect Code”).

Static analysis tools allow you to identify a large number of errors characteristic of the stage of writing code, which significantly reduces the cost of developing the entire project.

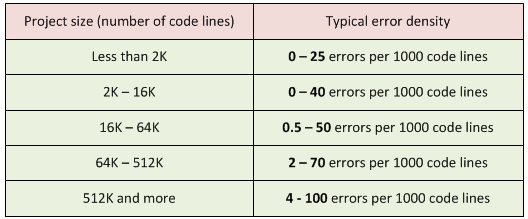

The urgency of using static analyzers will only grow over time. This is due to the gradual increase in the size of the code of modern applications. Programs are getting bigger, getting harder. At the same time, the error density in the code depends on its size nonlinearly.

The larger the project, the more errors per 1000 lines of code it contains. Take a look at this interesting table:

Table 2. Project size and typical error density. Data sources: “Program Quality and Programmer Productivity” (Jones, 1977), “Estimating Software Costs” (Jones, 1998).

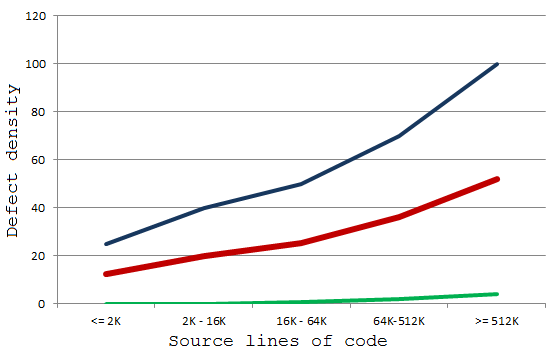

To make it easier to perceive the data, we will construct graphs.

Chart 1. Typical density of errors in the project. Blue is the maximum amount. Red is the average. Green is the least.

It follows from the graph that as the project grows, programmers have to use more and more means that will allow them to maintain the required quality of the project. You can not do everything the same to create high-quality code, which was done, say, 8 years ago. This can be an unpleasant discovery for the team: they seem to be writing code as always, and the quality situation is getting worse.

It is necessary to master new methodologies and tools, otherwise, with the growth of the project, the old technologies will not be enough. And one of the extremely useful techniques that should be used is the static code analysis that we reviewed.

If the reader was not yet familiar with the methodology of static code analysis, I hope I managed to interest him. For a more detailed acquaintance with her, I offer several links:

- John Carmack. Static code analysis .

- Wikipedia. Static code analysis .

- Wikipedia. List of tools for static code analysis .

- Al Bessey, Ken Block, Ben Chelf, Andy Chou, Bryan Fulton, Seth Hallem, Charles Henri-Gros, Asya Kamsky, Scott McPeak, Dawson Engler. A Few Billion Lines Of Code Of Conduction Of The Real World .

- Catherine Milovidova. A selection of videos about static code analysis .

- Blog of the PVS-Studio team.

Now is the time to move from theory to practice and see how static analysis helps a project like the Unreal Engine.

Unreal engine

Our team again had the honor of working with the Unreal Engine code!

Although we already did this two years ago, but in the intervening time, we have recruited more work on editing and improving the code. It is always useful and interesting to look at the code base of such a project after a two-year break. There are several reasons for this.

First of all, we are interested to look at the false alarms of the analyzer. Something can be corrected in our tool, and this will reduce the number of unnecessary messages. The fight against false positives is a constant task for developers of any code analyzers. Those who are interested in this topic, I propose to read the article " How and why static analyzers are struggling with false positives ."

For two years, the Unreal Engine code base has changed quite a lot. Some fragments disappeared, some were added. Sometimes even whole folders. Therefore, not all parts of the code received enough attention, which means that there is also work for PVS-Studio there.

I would like to praise Epic Games for the fact that it pays great attention to the quality of the code and uses such tools as PVS-Studio. The reader, of course, can smile: "Of course, your team should praise Epic Games, because it is your client." Frankly, we have a motive to leave a positive review about the developers from Epic Games. However, I praised the company sincerely. The fact that static code analysis tools are used indicates the maturity of the project development cycle and the company's concern for the reliability and security of the code.

Why am I sure that using PVS-Studio can significantly improve the quality of the code? Because it is one of the most powerful static analyzers that easily detects errors even in such projects as:

- GCC ( errors )

- Clang ( errors , errors , errors )

- Valgrind ( errors )

- GDB ( errors )

- FreeBSD ( errors , errors )

- and so on .

Using PVS-Studio raises the quality of the code by one extra step. Epic Games takes care of everyone who uses the Unreal Engine in their projects. Each corrected error will reduce someone's headache.

Interesting mistakes

I will not talk about all the errors found and fixed by us in the Unreal Engine, but will highlight only a few that, in my opinion, deserve attention. Those interested can familiarize themselves with other errors by looking at the GitHub pull request . To access the source code and the specified pull request, you must have access to the Unreal Engine repository on GitHub. To do this, you must have GitHub and EpicGames accounts, which must be linked to unrealengine.com . After that, you need to accept an invitation to join the Epic Games community on GitHub. Instructions .

The development of the PVS-Studio analyzer is connected not only with the advent of new diagnostics, but also with the improvement of existing ones. For example, algorithms for calculating possible values of variables are improved. Due to this, about a year ago, the analyzer began to detect errors like this:

uint8* Data = (uint8*)PointerVal; if (Data != nullptr || DataLen == 0) { NUTDebug::LogHexDump(Data, DataLen); } else if (Data == nullptr) { Ar.Logf(TEXT("Invalid Data parameter.")); } else // if (DataLen == 0) { Ar.Logf(TEXT("Invalid DataLen parameter.")); } PVS-Studio analyzer warning: V547 Expression 'Data == nullptr' is always true. unittestmanager.cpp 1924

If the condition (Data! = Nullptr || DataLen == 0) fails, then this means that the Data pointer is exactly equal to nullptr . Therefore, further testing (Data == nullptr) does not make sense.

The correct code is:

if (Data != nullptr && DataLen > 0) Diagnostics V547 exists in the analyzer since 2010. However, the imperfection of the mechanism for calculating the values of variables did not allow finding this error. The analyzer saw the presence in the first condition of checking the value of the DataLen variable, and could not figure out what the values of the variables are equal in different conditions. For a person, the analysis of the above code does not constitute any problems, but from the point of view of writing algorithms to search for such errors, everything is not so simple.

I demonstrated the improvement of the internal mechanisms of PVS-Studio, which allowed us to reveal a new error. Figuratively speaking, these improvements were made "in depth", i.e. the analyzer started working more accurately.

We are also making improvements in breadth, supporting new constructions that appear in new versions of the C ++ language. It is not enough to learn how to parse C ++ 11 code, C ++ 14 code, and so on. It is equally important to improve the old diagnostics and implement new ones in order to detect errors in new language constructs. As an example, let me give you the V714 diagnostics, which is looking for incorrect range-based cycles. In the Unreal Engine, V714 diagnostics indicate the following cycle:

for (TSharedPtr<SWidget> SlateWidget : SlateWidgets) { SlateWidget = nullptr; } PVS-Studio warning: V714 is not passed through the loop. vreditorradialfloatingui.cpp 170

The programmer wanted to assign the value nullptr to all elements in the SlateWidgets container. The error is that SlateWidget is an ordinary local variable, created again at each iteration of the loop. Writing a value to this variable does not change the item in the container. For the code to work correctly , you need to create a link:

for (TSharedPtr<SWidget> &SlateWidget : SlateWidgets) { SlateWidget = nullptr; } We, of course, gradually add to the analyzer and diagnostics that have little to do with the language. For example, V767 diagnostics did not exist in 2015, when our team wrote a previous article about testing the Unreal Engine project. Diagnostics appeared in PVS-Studio version 6.07 (August 8, 2016). Thanks to her appearance, such an error was revealed:

for(int i = 0; i < SelectedObjects.Num(); ++i) { UObject* Obj = SelectedObjects[0].Get(); EdObj = Cast<UEditorSkeletonNotifyObj>(Obj); if(EdObj) { break; } } PVS-Studio warning: V767 Suspicious access to element of SelectedObjects' array by a constant index inside a loop. skeletonnotifydetails.cpp 38

The loop should search for an element that is of the type UEditorSkeletonNotifyObj . Because of a random typo when choosing an element, the numeric literal 0 is written instead of the variable i .

The correct code is:

UObject* Obj = SelectedObjects[i].Get(); Let's look at another recently created diagnostics of the V763, which, like the V767, appeared in PVS-Studio version 6.07. The error is interesting, but in order to show it, I will have to bring in the article a rather long body of the RunTest function:

bool FCreateBPTemplateProjectAutomationTests::RunTest( const FString& Parameters) { TSharedPtr<SNewProjectWizard> NewProjectWizard; NewProjectWizard = SNew(SNewProjectWizard); TMap<FName, TArray<TSharedPtr<FTemplateItem>> >& Templates = NewProjectWizard->FindTemplateProjects(); int32 OutMatchedProjectsDesk = 0; int32 OutCreatedProjectsDesk = 0; GameProjectAutomationUtils::CreateProjectSet(Templates, EHardwareClass::Desktop, EGraphicsPreset::Maximum, EContentSourceCategory::BlueprintFeature, false, OutMatchedProjectsDesk, OutCreatedProjectsDesk); int32 OutMatchedProjectsMob = 0; int32 OutCreatedProjectsMob = 0; GameProjectAutomationUtils::CreateProjectSet(Templates, EHardwareClass::Mobile, EGraphicsPreset::Maximum, EContentSourceCategory::BlueprintFeature, false, OutMatchedProjectsMob, OutCreatedProjectsMob); return ( OutMatchedProjectsDesk == OutCreatedProjectsDesk ) && ( OutMatchedProjectsMob == OutCreatedProjectsMob ); } From the above code, the following is important to us:

- Using the first call to the CreateProjectSet function, they attempt to initialize the OutMatchedProjectsDesk and OutCreatedProjectsDesk variables .

- Using the second call, the CreateProjectSet function attempts to initialize the OutMatchedProjectsMob and OutCreatedProjectsMob variables .

Then check that the values of these variables satisfy the condition:

return ( OutMatchedProjectsDesk == OutCreatedProjectsDesk ) && ( OutMatchedProjectsMob == OutCreatedProjectsMob ); Do not look for errors in the body of the considered function, they are not there. I gave this code to show that the CreateProjectSet function is expected to write values in two variables, passed to it as the last two actual arguments.

The error traps us just in the CreateProjectSet function:

static void CreateProjectSet(.... int32 OutCreatedProjects, int32 OutMatchedProjects) { .... OutCreatedProjects = 0; OutMatchedProjects = 0; .... OutMatchedProjects++; .... OutCreatedProjects++; .... } PVS-Studio tool will issue two warnings here:

- V763 Parameter 'OutCreatedProjects' is always rewritten in function body before being used. gameprojectautomationtests.cpp 88

- V763 Parameter 'OutMatchedProjects' is always rewritten in function body before being used. gameprojectautomationtests.cpp 89

The analyzer is absolutely right when it warns that the values of OutCreatedProjects and OutMatchedProjects are not used at all, but are immediately overwritten with the value 0 .

The error is simple: forgot to make variable references. The correct code is:

static void CreateProjectSet(.... int32 &OutCreatedProjects, int32 &OutMatchedProjects) Above, I cited errors that require at least some care for their detection. But much more quite simple mistakes. For example, the break statements are missing:

{ case EWidgetBlendMode::Opaque: ActualBackgroundColor.A = 1.0f; case EWidgetBlendMode::Masked: ActualBackgroundColor.A = 0.0f; } Or incorrect comparison of several variables for equality:

checkf(GPixelFormats[PixelFormat].BlockSizeX == GPixelFormats[PixelFormat].BlockSizeY == GPixelFormats[PixelFormat].BlockSizeZ == 1, TEXT("Tried to use compressed format?")); If someone is new to C ++ and did not understand that this comparison is wrong, I suggest looking into the description of the V709 diagnostic.

Most of such errors are among the detected by PVS-Studio. But since they look so simple, why go unnoticed?

These errors are simple when they are highlighted in the article for the reader. In the code of real applications it is extremely difficult to notice them. Even with code reviews, a glance can slide along the block.

{ case EWidgetBlendMode::Opaque: ActualBackgroundColor.A = 1.0f; case EWidgetBlendMode::Masked: ActualBackgroundColor.A = 0.0f; } and ignore the mistakes in it. The code seems so simple that the programmer does not even read into it, considering it to be absolutely correct.

Let us now discuss the question a bit: is it possible to somehow reduce the number of such errors?

Recommendation

The errors described in the article were found using PVS-Studio and, most likely, the reader expects me to recommend using static code analysis tools. Yes, I recommend integrating the PVS-Studio static code analyzer into the development process. There is no need to deny yourself the opportunity to find a number of errors immediately after writing the code.

However, in this section I would like to discuss a very important point, which, as a rule, is not mentioned in the articles on the quality of the code.

It is impossible to achieve a high quality project, as long as the team of programmers does not recognize for itself that it makes mistakes, including simple ones.

This phrase sounds trite, but it is very important. As long as the programmer does not realize that this statement does not apply to an abstract programmer, but to himself, no tool or methodology will benefit. In other words, programmers are often too proud to admit that they need assistive tools and techniques for writing quality code.

All programmers know that there are errors in the programs. However, they believe that the rules, recommendations and tools do not exist for them, since they are professional developers and write good code.

This is the problem of a person reassessing his level. About her well written in the article " Programmers 'above average' " ( en ). I will quote the passage:

How do you rate your level as a programmer (below average, average, above average)?

According to psychological surveys among different groups , about 90% of programmers answer "Above average".

Obviously, this cannot be true. In a group of 100 people, 50 will always be higher and 50 - below the average. This effect is known as the illusion of superiority . It is described in many areas, but even if you have heard about it, you still probably answer "above average".

This is a problem that prevents programmers from mastering new technologies and methodologies. And my main recommendation is to try to rethink my attitude towards my own work and the work of the team as a whole. The position “I / we are writing an excellent code” is counterproductive. People can make mistakes, and so can programmers.

Having understood all this, you can take the biggest step in the direction of high-quality software.

Note. Project managers, I additionally suggest to look at this article .

I wanted to warn against a single error of reasoning. Static and dynamic analyzers, in general, find fairly simple errors and typos. Yes, they will not find a high-level error in the algorithm, since artificial intelligence has not yet been invented. However, a simple mistake can cause great harm and take a lot of time / effort / money. More: " A simple error in coding does not mean a non-terrible error ."

And again: do not look for a silver bullet. Use a combination of different elements, such as:

- Out of the head "our team is above average";

- The coding standard that all developers in the team adhere to;

- Code reviews (at least for the most important sites and sites written by new employees);

- Static code analysis;

- Dynamic code analysis;

- Regression testing, smoke testing;

- Using unit tests, TDD;

- and so on.

I do not urge to start using all the above at once. In different projects, something will be more useful, something less. The main thing is not to hope for one thing, but to use a reasonable combination of techniques. Only this will give an increase in the quality and reliability of the code.

Conclusion

The Unreal Engine developers take care of the quality of their code, and the PVS-Studio team, as far as they can, helps them with this.

The PVS-Studio team is ready to work with the code and your projects. In addition to selling the PVS-Studio analyzer and its maintenance, we conduct code audits, code migration, etc.

I wish everyone as few bugs as possible in the programs.

Source: https://habr.com/ru/post/331724/

All Articles