Z-order in 8D

Indexes based on self-similar sweeping curves are suitable not only for organizing the search for spatial data. They are efficient on heterogeneous data laid on an integer lattice.

Under the cut, we will check the possibility of using the Z-curve for the implementation of an 8-dimensional index with an eye on the OLAP cube .

Summary of the previous series.

Earlier ( 1 , 2 , 3 , 4 , 5 ) it was shown that the index based on the Z-curve is quite suitable for organizing spatial search. It is very close in speed (in the absence of restrictions on the buffer cache) to the traditionally used R-tree, but it is noticeably smaller, faster built, not afraid of random data, less reads during the search.

Fears.

Technically, there is nothing difficult in the implementation of such an index, it only took to process an 8-dimensional key. Dimension 8 was chosen for technological reasons, if you need a 7-dimensional index, you can safely add it with zeros in one of the coordinates, it almost does not affect the speed, except that the key will be longer. If necessary, nothing prevents you from making a longer one, for example, a 16-dimensional key.

')

It is worth considering in advance what pitfalls can expect us and what to do about it.

- We will experiment on pseudo-random data. But the real data has internal dependencies that are highly dependent on a specific task and difficult to foresee . Yes, it is, as a simulation, our pseudo-random data will actually be 6-dimensional, and we will simply duplicate the two columns.

In general, this problem is fundamental enough for multidimensional R-trees that have page splitting / merging heuristics. If the dependencies in the data do not fit into the heuristics, it can begin to work counterproductively, which affects both the construction / work time and the size of the index.

For the usual B-tree on which our index is built, this problem is not so terrible - the tree itself maintains a balance. - In reality, different dimensions have different scales, the maximum extent can be flattened in one dimension and stretched in another. Yes, of course, but again, this is quite important when building multidimensional R-trees and much less critical in the case of B-trees.

- What about accuracy? In the current implementation, up to 31 bits are allowed to encode the value. If the data (with a floating point) is obtained naturally, then 20 digits will most likely be enough for their coding. There are of course 32-bit ADCs, but this is more exotic. It is clear that double contains 64 bits, but most of the data without loss (or with minimal losses) can be planted on a 31-bit grid. If this is not the case, the data were processed, for example, they were averaged.

If necessary, the resolution of the described index can be increased. This will cause key swelling and performance degradation. However, as long as more than one key fits on a page, they can be organized as a B-tree.

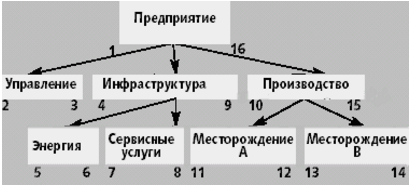

On the other hand, a small loss of accuracy is not critical in our case. After all, we are looking in the interval for each dimension. To get the correct result, it is enough to take an interval with a margin and remove unnecessary post-filtering. - Data may be hierarchical in nature. For example, the number encodes the sensor, but the sensors are grouped, their groups form their own hierarchy. And I want to do a sample of the whole branch of this hierarchy. This problem is solved by traversing and numbering the nodes of the tree in direct order. Then each tree node has an interval of values - from its own identifier to the identifier of its last descendant.

And the intervals are exactly what we need for the search. - Multidimensional rectangles have a developed surface and the Z-curve coding will be very inefficient. There are such concerns at the same time and check.

Benchmark

The following table summarizes the results for several (described below) query types.

Data - 100 000 000 random points, evenly distributed in an 8-dimensional cube with sides [0 ... 1 000 000].

Measurements (as before) were conducted on a virtual machine with two cores and 4 GB of RAM, so the times have no absolute value, but the numbers of the read pages can still be trusted.

The times are shown on the second runs, on the heated server and the virtual machine. The number of buffers read is on a freshly raised server.

Details

- Here is this commit : (SHA-1: 36dd ... 76c7)

- gawk -f gendata.awk〉 data.csv

BEGIN { for (i = 0; i <100000000; i ++) { x = int (1000000 * rand ()); y = int (1000000 * rand ()); z = int (1000000 * rand ()); a = int (1000000 * rand ()); b = int (1000000 * rand ()); c = int (1000000 * rand ()); print "{" x "," y "," z "," a "," b "," c "," a "," b "}"; } }note that “a” and “b” occur twice, we have degenerate data, the real dimension is 6 create table test_points_8d (p integer[8]);COPY test_points_8d from '/home/.../postgresql/contrib/zcurve/data.csv';

10 minutes- \ dt +

Schema | Name | Type | Owner | Size | Description ------- + ---------------- + ------- + ---------- + ------ --- + ------------- public | test_points_8d | table | postgres | 8056 MB |

create index zcurve_test_points_8d on test_points_8d (zcurve_num_from_8coords (p[1], p[2], p[3], p[4], p[5], p[6], p[7], p[8]));

15 minutes- \ di +

Schema | Name | Type | Owner | Table | Size | -------- + ----------------------- + ------- + --------- - + --------------- + ------- + --- public | zcurve_test_points_8d | index | postgres | test_points_8d | 4743 MB

- verification request

select c, t_row from (select c, (select p from test_points_8d t where c = t.ctid) t_row from zcurve_8d_lookup_tidonly('zcurve_test_points_8d', 237000,291000,845000,152000,585000,193000,152000,585000, 247000,301000,855000,162000,595000,203000,162000,595000) as c) x;c | t_row

------+-----------------------------------------------------------

(0,1) | {237787,291065,845813,152208,585537,193475,152208,585537}

as required

| Request type | NPoints | Nreq | Time (ms) | Reads | Shared hits |

|---|---|---|---|---|---|

| all 10,000 each | 1.1e-4 | 100,000 | .46 | 2.0175 | 15.3919 |

| all over 100,000 | 73.9 | 10,000 | 47 | 57.3063 | 1604.18 |

| 3100 000 + 510 000 | 0.0837 | 10,000 | .9 | 8.7637 | 73.8852 |

| 2x100,000 + 610 000 | 0.0096 | 10,000 | .66 | 5.1771 | 40.9089 |

| 1 whole + 1X100,000 + 6X10,000 | 0.0978 | 10,000 | 1.5 | 24.2122 | 199.33 |

| 2 whole + 6X10,000 | 0.9663 | 10,000 | 95 | 115.202 | 1050.8 |

Type of request - one of the following

- all 10,000 each. The total extent side is 1,000,000, requests in the form of a cube with a side of 10,000, and the beginning of the queries are evenly distributed within the total extent. The request party is 1E-2 from the maximum. Since we have 6 independent parameters, we get 1E-12 of the total volume. The number of points is 1E8, which means the theoretical probability of finding a point in such an extent is approximately 1E-4

- all by 100,000. The extent of the query in is a cube with a side of 100,000, which is 1e-1. Since we have 6 independent parameters, we get 1e-6 by volume. The number of points is 1e8, which means the average number of points in such a query is 100. On the other hand, the probability of getting beyond the extent of one coordinate is 10%, for any of 6 - 53% (0.9 ** 6), if the average getting out is 5%, then the probability is 0.735 (0.95 ** 6), which is consistent with the obtained value

- 3100 000 + 510 000. Requests are flattened in 5 dimensions

- 2100 000 + 610 000. Requests are flattened in 6 dimensions

- 1 entirely + 1X100,000 + 6X10,000. Requests are flattened in 6 dimensions, one dimension is taken entirely, one is 100,000. The situation is simulated when one of the values is unknown, you have to take the full interval for it.

- 2 entirely + 6X10 000. Two values are taken entirely, aggravating the situation with the unknown.

NPoints - the average number of points found in the query

Nreq - the size of a series of requests

Time (ms) - the average query time

Reads - the average number of reads per request

Shared Hits - the number of hits in the buffer cache

findings

As expected, the area of request perimeter plays an important role in the cost of the request. In fact, the larger the perimeter, the more pages it will potentially cross, the more it will have to read, the lower the cache efficiency ...

However, for cubic queries, the cost of the query is quite acceptable. In fact, for a query - a cube with a side of 100,000, an average of 57 pages are read with 74 lines of output. In order to read these 74 lines, you will most likely have to read about 74 more pages.

In addition, these data are obtained on evenly distributed data; on clustered data, it is expected that in order to obtain the same output power, query sizes will be much smaller, as will the number of pages read.

It would be interesting to have a look.

PS: on the title picture shows an okterakt - 8-dimensional cube

PPS: thanks to Andrei Borodin (Oktonika, Ekb.) For the tip on

Source: https://habr.com/ru/post/331420/

All Articles