Why connecting to a DDoS neutralization service while under attack is too late

"You should have thought about it earlier." This Russian maxim applies to many situations in life, but when it comes to protecting network resources from denial-of-service attacks, it is doubly true.

Planning ahead is extremely important if you are going to launch a popular and competitive service on the Internet: it can attract the attention not only of attackers but also of competitors. And in the end, an unexpected, large influx of users is essentially no different from a distributed attack.

If you did not take care of protection in advance, you may have to return to this topic under thoroughly suboptimal conditions. We decided to present a few basic points to the reader: the difficulties faced by any service that is under attack and is trying to connect to a system for neutralizing denial-of-service attacks.

Lyrical digression: if you are going on vacation to a tropical country on another continent, then in addition to conventional travel insurance it is worth taking care of vaccinations against the most dangerous and common diseases.

Yes, the chance of a mosquito bite is small if you wear long sleeves and tuck your pants into your socks. And in the worst case, of course, you will be saved. But the process may drag on, and you will spend a lot of nerves and, quite possibly, money on doctors and medication both on site and after your return. Yet all you had to do was get vaccinated against a dangerous disease and make sure your insurance covers all cases.

What are the advantages of connecting to a traffic filtering network before an attack?

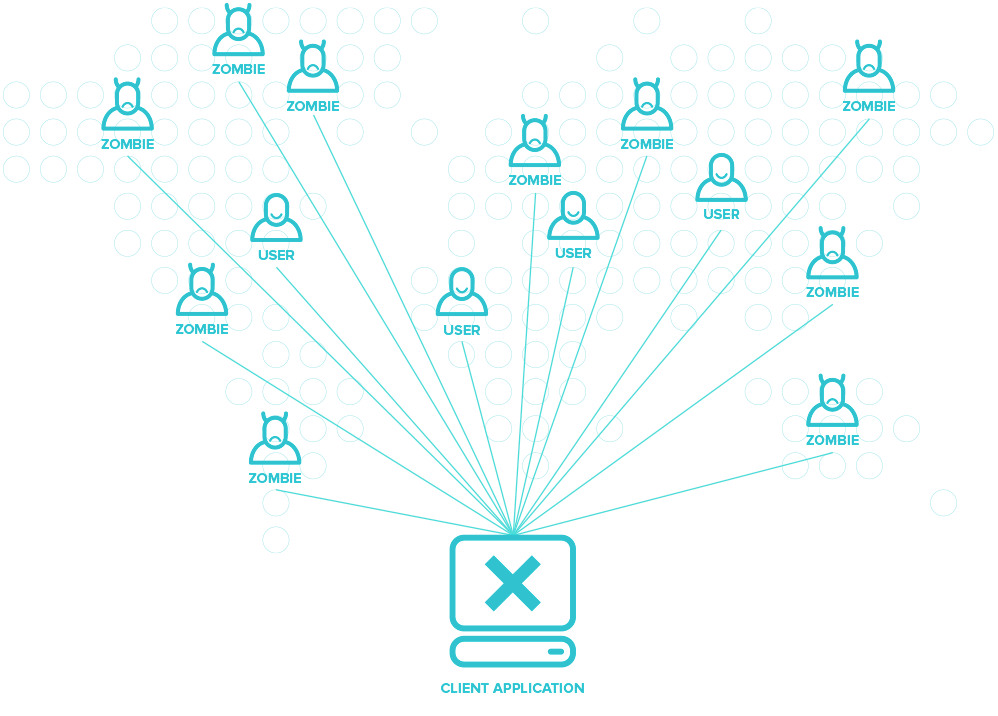

The first and most important advantage is knowledge of user behavior. By observing the traffic of the target resource before it comes under stress, we know well what normal user behavior looks like and what traffic statistics are natural for the resource. Under attack, the service degrades gradually (or quickly, depending on the attackers' actions), and as a result the behavior of normal users changes significantly. In some cases the actions of legitimate users start to look like an attack and worsen its consequences; one example is a user repeatedly refreshing the same page until the browser finally receives it.

The more the service degrades under attack, the harder it is to distinguish legitimate users from malicious bots. If we have not seen how users behave before the attack, when their behavior is normal and known to be legitimate, we do not know in advance which features distinguish a real user from a malicious bot sending garbage requests to the server. Had we been connected earlier, we would also know the volume of traffic that accompanies normal operation of the service.

If the filtering network or equipment was not involved in neutralization from the very beginning of the attack, then while the automation and learning models are being trained, all users clicking “Refresh page” are almost indistinguishable from attack traffic, so the probability that they will be blocked is high. Knowing how the resource's users behaved previously, you can protect both them and yourself from such a situation.

The same goes for traffic volume and traffic statistics under normal conditions. Without this information at the time of the attack, it is very difficult to say with certainty whether traffic has returned to normal after the attack was neutralized, because real users may have been blocked simply for behaving slightly differently from the median while the service was degraded.

Of course, such problems do not always arise, but they become especially acute in some attack scenarios. Depending on the sophistication of the attacking bot and the cunning of the attacker, an attack on the root of the service can become really unpleasant: when the service is degraded, the bots' behavior looks almost indistinguishable from that of legitimate users for whom simply nothing opens. With attacks of other types, as a rule, significant differences remain between legitimate and malicious behavior in terms of data flows.
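The point about knowing normal traffic statistics can be illustrated with a minimal sketch. It assumes that request rates were sampled before the attack and that a band around the median (built from the median absolute deviation) is an acceptable definition of "normal". The function names, the sample values and the tolerance are all hypothetical; a real filtering network would rely on far richer behavioral features.

```python
from statistics import median

def baseline_band(samples, tolerance=3.0):
    """Return (low, high) bounds around the median of pre-attack samples."""
    mid = median(samples)
    # Median absolute deviation: a spread estimate that tolerates outliers.
    mad = median([abs(x - mid) for x in samples])
    # Assumption: if traffic was perfectly flat, allow 5% of the median.
    spread = mad if mad > 0 else 0.05 * mid
    return mid - tolerance * spread, mid + tolerance * spread

def back_to_normal(samples, current_rate, tolerance=3.0):
    """True if the current rate falls inside the pre-attack band."""
    low, high = baseline_band(samples, tolerance)
    return low <= current_rate <= high

# Hypothetical requests-per-second samples recorded before any attack.
baseline_rps = [120, 130, 125, 118, 140, 135, 128]

print(back_to_normal(baseline_rps, 131))  # inside the band
print(back_to_normal(baseline_rps, 40))   # far below: legitimate users may be blocked
print(back_to_normal(baseline_rps, 900))  # far above: attack traffic still gets through
```

Without the `baseline_rps` history there is nothing to compare the post-attack rate against, which is exactly the situation of a service that connects while already under attack.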

Imagine a mundane domestic situation: a burst water pipe, a flood. You were not prepared: no plugs at hand, no shut-off valves on the pipe, and so on, the worst-case scenario. You may quickly plug the hole and stop the flow, but furniture and children's toys are already floating around the apartment, and guests are knocking at the door. Can you let them in? Hardly.

What are the real disadvantages and possible consequences of connecting under attack?

“From a sick head to a healthy one”: that saying is about DDoS neutralization. Oddly enough, even companies that have been on the market for a long time, with professional staff and thousands of customers across the country or even around the world, do not always think about DDoS neutralization before an attack begins. We would like to change this by giving the audience adequate information, but we harbor no illusions: as the saying goes, a peasant will not cross himself until the thunder claps.

Let's look at the main difficulties that have to be dealt with by a resource under attack and by us, as a service that neutralizes distributed attacks.

The first thing that can be said with confidence about a service connecting to the filtering system while under attack is that it is very likely already partially or completely unavailable to its users.

Secondly, if you are being attacked, the attacker most likely already knows not only your domain name but also your IP address. In addition, the IP address of the attacked resource usually belongs to the hosting provider, so we assume a priori that the hosting provider is known as well. In other words, the attacker has enough information to change the attack vector.

Quickly moving to another hosting provider (it may not be you they are attacking) or changing the IP transit provider (perhaps you are just collateral damage in an attack on a larger target) takes time.

Of course, dealing with false positives is not the only difficulty. Connecting under attack does not remove the problem of partial or complete unavailability, and if the attack has already begun and managed to do some harm, then in addition to connecting the resource to the protection system you need “damage control”, that is, work on the consequences of the attack on the side of consumers and customers.

It happens that the attack “went well” and caused considerable damage. In practice this means the following: in addition to the saturated communication channel, through which connectivity with the cloud traffic filtering provider still has to be established as long as there are no dedicated channels, the border router or the load balancer may “die” (deny service). Other infrastructure components may suffer too, for example the firewall, a delicate device that does not like distributed denial-of-service attacks and often responds by cutting itself off from the outside world. A firewall that stops working normally threatens to leave a hole in the network perimeter. Yes, this is cured by rebooting the failed equipment, but someone still has to get to it and wait until it returns to a consistent state. All that time, traffic passes unfiltered.

As for application-layer (L7) attacks, the channel may be fine and connectivity may be there, but the web application is still unwell: it may, for example, lose its connection to the database or go down together with it. Returning it to a normal, consistent state can take from several hours to several days, depending on the architectural difficulties involved.

There are two most common ways to connect to a traffic filtering network: via DNS or via BGP. Let's consider these two scenarios separately.

DNS

Suppose the attack targets only the domain name.

A typical scenario for connecting a site under attack via DNS is as follows: the owner of a network resource represented by a domain name, whose A record holds the current IP address of the attacked web server, contacts us for help. Once all the formalities are settled, Qrator Labs assigns the client a special IP address, with which the client replaces the current one.

As a rule, at this point you have to reckon with a possibly high TTL on the DNS record being changed, which can range from several hours to days (the RFC limit is 2147483647 seconds). During this time the old A record will live on in the caches of recursive DNS resolvers. Therefore, if you realize in advance that an attack is likely, you should keep a low TTL on the A record.

But in some cases even this may not help. After all, attackers check the effectiveness of an attack while it is under way, and, seeing that it is not producing results, a smart villain will quickly switch to attacking the IP address that he “remembers”, bypassing the filtering network.

In this case you need a new IP address that is unknown to the attackers and in no way exposed through the protected domain name, preferably in a different address block from the old one and ideally from another provider. After all, given sufficient power, attackers can always go further and switch the DDoS attack vector to the provider's address block (prefix). Well, in that case you are welcome to come to us. Moving on.
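The TTL arithmetic above can be sketched in a few lines. The example assumes that recursive resolvers honor the published TTL (some clamp or ignore it), so a resolver that cached the old A record just before the change may keep serving it for one full TTL. The function name and the timestamps are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# The ceiling mentioned above: 2147483647 seconds, i.e. 2**31 - 1.
MAX_TTL_SECONDS = 2**31 - 1

def old_record_may_linger_until(changed_at, old_ttl_seconds):
    """Latest moment a resolver that honors the TTL may still serve the old record."""
    if not 0 <= old_ttl_seconds <= MAX_TTL_SECONDS:
        raise ValueError("TTL outside the permitted range")
    return changed_at + timedelta(seconds=old_ttl_seconds)

# Hypothetical moment at which the A record was switched to the filtering network.
changed_at = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)

# A one-day TTL keeps some users on the attacked address until the next day.
print(old_record_may_linger_until(changed_at, 86400))
# A five-minute TTL, set in advance, shrinks that window to minutes.
print(old_record_may_linger_until(changed_at, 300))
```

The TTL that matters is the one published before the change: lowering it during the attack does not help clients that already cached the record with the old, higher value.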
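Whether a candidate address really sits in a different block from the old one is easy to check. Below is a minimal sketch using Python's standard `ipaddress` module; the prefix and addresses come from the documentation ranges and are purely illustrative, and the function name is hypothetical.

```python
import ipaddress

def in_same_block(old_prefix, new_address):
    """True if the new address still falls inside the old address block."""
    network = ipaddress.ip_network(old_prefix)
    return ipaddress.ip_address(new_address) in network

# Hypothetical prefix of the hosting provider already known to the attacker.
old_block = "192.0.2.0/24"

print(in_same_block(old_block, "192.0.2.77"))     # True: a prefix-wide attack still hits it
print(in_same_block(old_block, "198.51.100.10"))  # False: a different block
```

This only checks prefix membership. It says nothing about whether the new address can be discovered by other means, for example through old DNS records or mail headers.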

BGP

In the case of a BGP connection, everything looks different.

Suppose the attacked service has its own address blocks (prefixes) and wants to move its entire infrastructure under protection by announcing its prefixes through the DDoS neutralization provider. What does the process look like?

The autonomous system under attack is added to our AS-SET so that it can announce its prefixes through us, after which the notorious 24 hours begin, during which all our uplinks pick up this information and update their prefix lists. Naturally, in an emergency we try to accommodate such a client by speeding this process up, but that is not possible in every case and is done manually. In light of the above, time is the key stress factor, because the resource needs protection urgently: “make the pain stop right now.”

When the prefix owner prepares for an attack in advance and carries out a number of preliminary settings, the prefixes can be moved to the Qrator filtering network almost instantly, saving the time and nerves of technical specialists working after hours and in emergency mode. Mutual integration in this case is not only a technical process but also a psychological one.

Lyrical digression: your connectivity provider buys IP transit, usually from large and reliable telecom operators, at least regional Tier-1 carriers. They filter the prefixes they receive from their clients against a prefix list, which they build from open databases (RIPE, RADB and others). Some IP transit providers that serve DDoS protection services update these filters regularly, once a day; others do it only on request. A competent DDoS neutralization provider has points of presence around the world, so such a change cannot be rolled out instantly.

The most difficult case of connecting under attack is a request from the owner of a complex infrastructure with a variety of equipment: routers, firewalls, load balancers and other wonders of modern technology. In such scenarios even a routine connection to a DDoS neutralization provider is a complex process that calls for a measured and planned approach. It is almost embarrassing to mention that when such a service is attacked, connecting it to the filtering network in an emergency consumes a lot of effort and leaves no time for careful planning, because under attack every minute counts. Until these problems are solved, the service remains poorly available to users and keeps degrading.
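The filtering described in this digression can be sketched as follows. This is a simplified model, not how any particular carrier implements it. It assumes IPv4, a prefix list built from registry data, and a policy that accepts an announcement if it is covered by a listed prefix and is no more specific than /24. All names and prefixes are hypothetical.

```python
import ipaddress

def build_prefix_list(registered_prefixes):
    """Turn prefixes taken from a registry snapshot into network objects."""
    return [ipaddress.ip_network(p) for p in registered_prefixes]

def accepts(prefix_list, announced_prefix, max_length=24):
    """True if the upstream filter would let this announcement through."""
    announced = ipaddress.ip_network(announced_prefix)
    # Assumed policy: announcements more specific than max_length are dropped.
    if announced.prefixlen > max_length:
        return False
    return any(
        announced.version == allowed.version and announced.subnet_of(allowed)
        for allowed in prefix_list
    )

# Filter built yesterday, before the new client was added to the AS-SET.
yesterday = build_prefix_list(["203.0.113.0/24"])
# Filter rebuilt after the daily update picked up the client's prefix.
today = build_prefix_list(["203.0.113.0/24", "198.51.100.0/24"])

print(accepts(yesterday, "198.51.100.0/24"))  # False: announcement is filtered out
print(accepts(today, "198.51.100.0/24"))      # True: accepted after the update
```

Until every upstream has rebuilt its list, the client's announcement through the filtering network is dropped somewhere along the way, which is where the waiting period comes from.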

Often a DDoS attack is combined with a hacking attack, a break-in attempt, either before or after the denial-of-service attack. The risks here are of a completely different order: leakage of user data, or the attacker gaining full access to the system. These problems have to be solved in parallel with neutralizing the DDoS, which in the worst case leads to even longer unavailability for users and in the best case to increased latency for those trying to request the page they need.

Postscript

Colleagues, we bring the following important news to your attention: www.ietf.org/mail-archive/web/idr/current/msg18258.html

An initiative to introduce automatic protection against route leaks, in whose creation Qrator Labs engineers were directly involved, has successfully passed the adoption call and moved to the Inter-Domain Routing working group (IDR).

The next stage is finalizing the document within IDR, followed by review by the steering group (IESG). If these stages are completed successfully, the draft will become a new network standard, an RFC.

The authors, Alexander Azimov, Evgeny Bogomazov, Randy Bush (Internet Initiative Japan), Kotikalapudi Sriram (US NIST) and Keyur Patel (Arrcus Inc.), realize that the industry has an acute demand for the proposed changes. However, haste in this process is also unacceptable, and the authors will make every effort to make the proposed standard convenient for both transnational operators and small home networks.

We thank the technical specialists who expressed their support during the adoption call. Special thanks go to those who not only expressed their opinion but also sent their comments. We will try to take them into account in subsequent revisions of the draft.

We also want to tell interested engineers that you still have the opportunity to propose additions and clarifications through the IETF mailing list (draft-ymbk-idr-bgp-open-policy@ietf.org) or through the Qrator Labs initiative website.

It is also important to note that by the time the final decision is made, the standard should have two working implementations. One of them is already available: our implementation based on the BIRD routing daemon, available on GitHub: github.com/QratorLabs/bird. We invite vendors and the open source community to join this process.

Source: https://habr.com/ru/post/331080/