Optical character recognition on the microcontroller

Today, optical character recognition is used in applied tasks such as text recognition and digitization, document recognition, license plate recognition, bank card identification, reading utility meters, and identifying house numbers for map creation (Google Street View).

Recognizing a character means analyzing its image to obtain a set of features that can be compared with the features of a class [ 1 ]. Recognition methods differ in how this set is chosen and computed, but most of them need simultaneous access to all pixels of the image.


This last requirement, together with the large amount of computation, rules out low-power computing devices (microcontrollers) for optical character recognition. "But why bother?" the informed reader exclaims. "Computing power keeps growing and prices keep falling!" [ 2 , 3 ]. Let's say the answer is plain curiosity: can the recognition method be simplified enough to run on a microcontroller?

It turned out to be possible. Moreover, things that seem to belong to science fiction turned out to be possible as well, namely:

- recognition regardless of the font;

- recognition of a character string without splitting it into individual characters;

- recognition of "shielded" characters, for example, a character inside another character;

- recognition of "broken" characters;

- recognition of characters consisting of several parts;

- recognition of characters rotated by up to 15° without changing their features;

- recognition of characters rotated by larger angles by changing their features;

- character recognition in a video stream from a single frame;

- handwriting recognition;

- a small number of features per character class: one for Arabic numerals and Latin letters, at most two for Cyrillic (for example, for some variants of writing W);

- simple "manual" definition of features for a new class;

- automatic detection of features for a new class;

- extension of the set of character classes by simply adding the new class's features to the database.

And all this on a microcontroller.

The main idea of the method

Now, more about the method itself. Consider, for example, various styles of the letter A:

Despite the visible differences, one can identify common structural features that are necessary features of the capital letter A (for unbroken characters): if the character in question is a capital A, it contains a closed area and an area open at the bottom.

Once again, we emphasize that these features are necessary but not sufficient: a contour drawn around two areas of this kind does not necessarily belong to a capital A; it could be, for example, D, I, R, a lowercase handwritten a, and so on:

However, using areas as elements of a character makes it possible to generate sufficient features, and for the vast majority of alphanumeric characters a single sufficient feature can be formed! It is very easy to form for each class and, unlike the structural features used by the ABBYY team for handwriting recognition [ 1 ], its variability is very low, so it can be formed automatically! In other words, such features work well for both printed and handwritten characters.

Recognition device

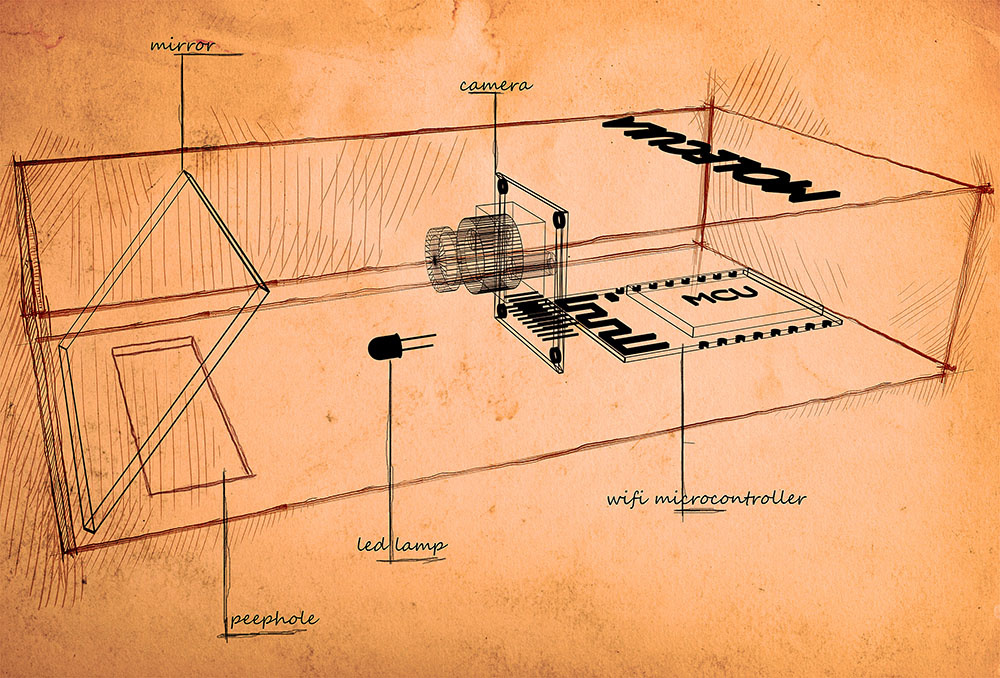

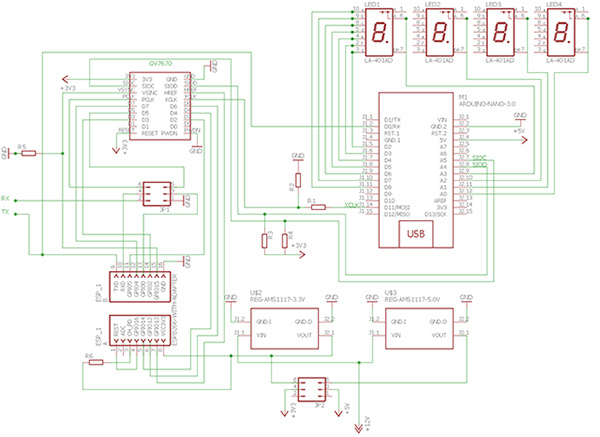

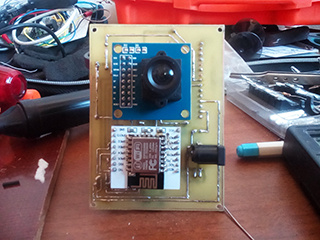

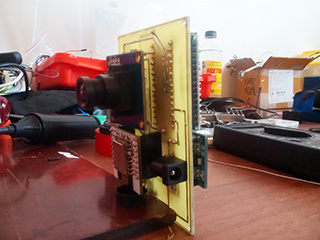

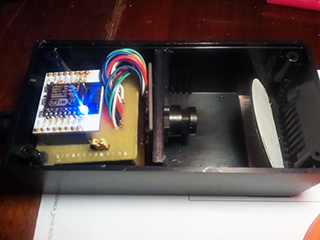

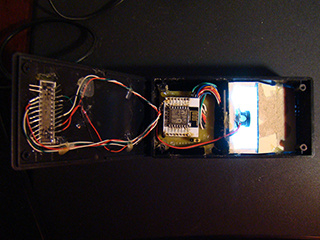

The first test of the method was described in article [ 4 ]. The method was tested on single digits captured by a primitive optical-mouse camera, either from a seven-segment indicator or from paper. After this first success, we naturally wanted to check whether a sequence of characters could be recognized, which required a different camera. We used the OV7670 (0.3 MP). The other main components of the circuit stayed the same, an Arduino and an ESP8266, but their roles changed. The Arduino now initializes the camera, serves as the master clock generator, receives the recognized characters, and shows them on the indicators. The ESP8266 acquires images from the camera and recognizes them; it also sends data to the Arduino for display and transmits the recognized information over Wi-Fi to external devices such as a smartphone. The device circuit is shown in the figure:

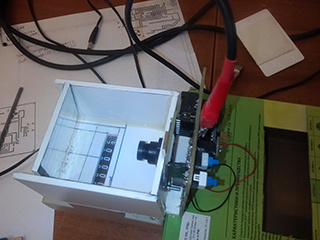

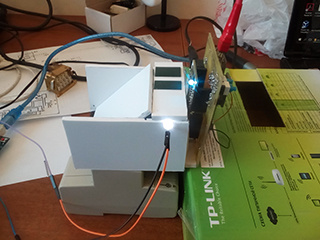

The image enters the device through a slit in its bottom and reaches the camera after reflecting off a mirror. An LED illuminates the text through the same mirror. The mechanical layout of the device is shown in the figure.

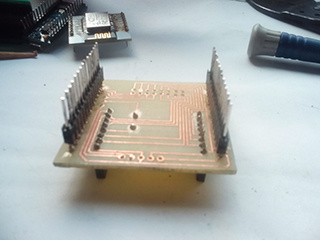

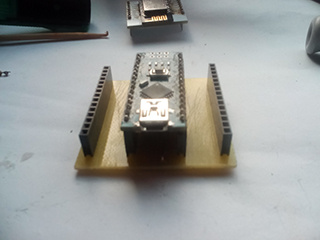

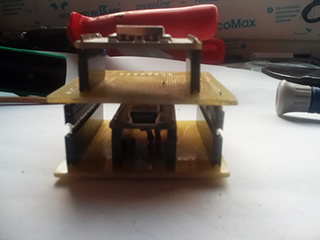

Photos of the working prototype

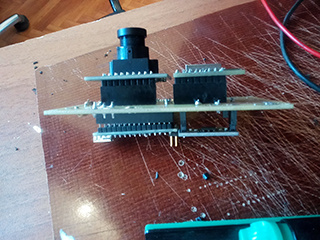

The first version of the working prototype:

The second version of the working prototype:

Image Acquisition on ESP8266

The camera settings applied during initialization are taken from [ 5 ]. The frame rate is approximately 0.4 fps. Because the ESP8266 does not have enough pins, only the 6 high-order bits of each luminance byte are read (the camera is configured in YUV mode). The image is acquired by a state machine.

According to the OV7670 camera datasheet [ 6 ], the following camera states and the signals that cause transitions between them can be distinguished:

| State name, number | State description | Signal to transition to another state | Next state |
|---|---|---|---|
| camoff, 0 | camera not ready to work | vzz (vsync = 1, href = 0, pclk = 0) | frapause, 1 |
| frapause, 1 | pause between frames; waiting for the start of a frame | zzz (vsync = 0, href = 0, pclk = 0) | framebeg, 2 |
| framebeg, 2 | reading a frame; waiting for the start of a line | zhz (vsync = 0, href = 1, pclk = 0) | fbyteread, 3 |
| framebeg, 2 | reading a frame; waiting for the end of the frame after the last pixel has been read | vzz (vsync = 1, href = 0, pclk = 0) | frapause, 1 |
| fbyteread, 3 | luminance byte read; waiting for the pause before the color-difference byte | zhp (vsync = 0, href = 1, pclk = 1) | fpause, 4 |
| fpause, 4 | pause before the color-difference byte; waiting for the color-difference byte to be read | zhz (vsync = 0, href = 1, pclk = 0) | sbyteread, 5 |
| fpause, 4 | pause before the color-difference byte; end of line | zzz (vsync = 0, href = 0, pclk = 0) | framebeg, 2 |
| sbyteread, 5 | color-difference byte read; waiting for the pause before the luminance byte | zhp (vsync = 0, href = 1, pclk = 1) | spause, 6 |
| spause, 6 | pause before the luminance byte; waiting for the end of the line | zzz (vsync = 0, href = 0, pclk = 0) | framebeg, 2 |
| spause, 6 | pause before the luminance byte; waiting for the luminance byte to be read | zhz (vsync = 0, href = 1, pclk = 0) | fbyteread, 3 |

The machine is implemented on the same principles as described in [ 7 ]. The whole machine is described by its "genome": a three-component vector whose first component holds the keys, the second the new states, and the third the handler functions. A key encodes the current state and the transition signal: the 5-bit state occupies the top bits of a 16-bit word, followed by the 8-bit signal. Bit operations are used to form both the key and the signal. The implementation details are clear from the code of the camera reading module.

user_main.c

```c
#include "ets_sys.h"
#include "osapi.h"
#include "os_type.h"
#include <gpio.h>
#include "driver/uart_register.h"
#include "user_config.h"
#include "user_interface.h"
#include "driver/uart.h"
#include "readCam.h"

#define DELAY 5000 /* milliseconds */

LOCAL os_timer_t cam_timer;
uint16_t frN;           /* number of complete frames received */
extern uint8_t pixVal;  /* current pixel value, used by the state machine handlers */
uint8_t rN[10];

LOCAL void ICACHE_FLASH_ATTR getNFrame(void *arg){
    uint16_t sig, sV, sH, sP;
    uint16_t pVal;
    uint16_t d7, d6, d5, d4, d3, d2;
    stateMashine camSM;
    ets_uart_printf("getNFrame...\r\n");
    initSMcm(&camSM);
    while(frN < 20){
        system_soft_wdt_feed();
        pVal = *GPIO_IN;  /* snapshot of all GPIO input levels */
        sV = ((pVal & (1UL<<VSYNC)) >> VSYNC);
        sH = ((pVal & (1UL<<HREF)) >> HREF);
        sP = ((pVal & (1UL<<PCLK)) >> PCLK);
        sig = 4*sV + 2*sH + sP*sH;  /* PCLK is taken into account only while HREF is high */
        d7 = ((pVal & (1UL<<D7)) >> D7);
        d6 = ((pVal & (1UL<<D6)) >> D6);
        d5 = ((pVal & (1UL<<D5)) >> D5);
        d4 = ((pVal & (1UL<<D4)) >> D4);
        d3 = ((pVal & (1UL<<D3)) >> D3);
        d2 = ((pVal & (1UL<<D2)) >> D2);
        /* 6 high-order bits of the byte; the two low-order bits are not read */
        pixVal = 128*d7 + 64*d6 + 32*d5 + 16*d4 + 8*d3 + 4*d2;
        exCAM(&camSM, sig, &frN, rN);
    }
}

uint32 ICACHE_FLASH_ATTR user_rf_cal_sector_set(void)
{
    enum flash_size_map size_map = system_get_flash_size_map();
    uint32 rf_cal_sec = 0;
    switch (size_map) {
        case FLASH_SIZE_4M_MAP_256_256:
            rf_cal_sec = 128 - 8;
            break;
        case FLASH_SIZE_8M_MAP_512_512:
            rf_cal_sec = 256 - 5;
            break;
        case FLASH_SIZE_16M_MAP_512_512:
        case FLASH_SIZE_16M_MAP_1024_1024:
            rf_cal_sec = 512 - 5;
            break;
        case FLASH_SIZE_32M_MAP_512_512:
        case FLASH_SIZE_32M_MAP_1024_1024:
            rf_cal_sec = 1024 - 5;
            break;
        default:
            rf_cal_sec = 0;
            break;
    }
    return rf_cal_sec;
}

void ICACHE_FLASH_ATTR user_init(void){
    void (*cbGetFrame)(void *arg);
    cbGetFrame = (void*)getNFrame;
    UARTInit(BIT_RATE_921600);
    user_gpio_init();
    /* start frame acquisition once, DELAY ms after boot */
    os_timer_disarm(&cam_timer);
    os_timer_setfn(&cam_timer, (os_timer_func_t *)cbGetFrame, NULL);
    os_timer_arm(&cam_timer, DELAY, 0);
}
```

readCam.h

```c
#ifndef INCLUDE_READCAM_H_
#define INCLUDE_READCAM_H_

#define GPIO_IN ((volatile uint32_t*) 0x60000318)  /* ESP8266 GPIO input register */
#define WP 320  /* image width, pixels */
#define HP 240  /* image height, lines */
#define PIXTYP 0
//image __________________________________________
#define IMAGEY0 60
#define IMAGEH (HP/3)
//____________________pins_____________________
#define VSYNC 15
#define HREF 13
#define PCLK 3
#define D7 4
#define D6 12
#define D5 0
#define D4 14
#define D3 2
#define D2 5
//*************signals OV7670*****************
#define ZZZ 0
#define VZZ 4
#define ZHZ 2
#define ZHP 3
//*************states OV7670*******************
#define CAMOFF 0
#define FRAPAUSE 1
#define FRAMEBEG 2
#define FBYTEREAD 3
#define FPAUSE 4
#define SBYTEREAD 5
#define SPAUSE 6
#define SSCC 40 //max state_signal_condition count
#define STATE_L 5
#define STATE_V 0x1F
#define SIG_L 8
#define SIG_V 0xFF

typedef struct {
    uint8 pix[WP];
} linePixel;

typedef struct gen{
    uint8_t state;
    uint8_t sig;
    uint8_t stateX;
    void *fp;
} gen;

typedef struct stateMashine{
    uint8_t count;
    uint16_t ssc[SSCC];
    uint8_t stateX[SSCC];
    void *fPoint[SSCC];
    void *fpd;
} stateMashine;

#endif /* INCLUDE_READCAM_H_ */
```

readCam.c

```c
#include "ets_sys.h"
#include "osapi.h"
#include "os_type.h"
#include <gpio.h>
#include "driver/uart_register.h"
#include "user_config.h"
#include "user_interface.h"
#include "driver/uart.h"
#include "readCam.h"

void sendLine(uint16_t lN);
void ICACHE_FLASH_ATTR sendFramMark(void);
void ICACHE_FLASH_ATTR sendCtr3Byte(uint8_t typ, uint16_t len);
void user_gpio_init(void);
void sendByte(uint8_t bt);
void ICACHE_FLASH_ATTR initSMcm(stateMashine *psm);
void exCAM(stateMashine *psm, uint8_t sig, uint16_t *frameN, uint8_t *rN);
int indexOf(stateMashine *psm, uint16_t ssc);

linePixel lp;
uint8_t pixVal;

void exCAM(stateMashine *psm, uint8_t sig, uint16_t *frameN, uint8_t *rN){
    int16_t ind;
    /* line and pixel counters must survive between calls, so they are static */
    static uint16_t lN = 0;
    static uint16_t pN = 0;
    static uint8_t state = CAMOFF, stateX = CAMOFF;
    static void (*pfun)() = NULL;
    uint16_t stateSigCond = 0;
    /* key: state in the top bits, signal below it */
    stateSigCond |= ((state & STATE_V) << (16-STATE_L)) | ((sig & SIG_V) << (16-STATE_L-SIG_L));
    ind = indexOf(psm, stateSigCond);
    if(ind > -1){
        stateX = (*psm).stateX[ind];
        pfun = (*psm).fPoint[ind];
    } else {
        pfun = (*psm).fpd;  /* no transition for this key: default handler */
    }
    pfun(frameN, &lN, &pN, rN);
    state = stateX;
}

void _cm0(){}
void _cm1(uint16_t *fN, uint16_t *lN, uint16_t *pN){ // new frame
    sendFramMark();
    sendCtr3Byte(PIXTYP, 0);
    (*lN) = 0;
}
void _cm2(uint16_t *fN, uint16_t *lN, uint16_t *pN){ // frame end
    if(*lN == HP-1) (*fN)++;
}
void _cm3(uint16_t *fN, uint16_t *lN, uint16_t *pN){ // new line
    uint16_t pixN;
    (*pN) = 0;
    // pixN = (*pN);    // right image
    pixN = WP-1-(*pN);  // reverse image
    (lp).pix[pixN] = pixVal;
    (*pN)++;
}
void _cm4(uint16_t *fN, uint16_t *lN, uint16_t *pN){ // first byte
    uint16_t pixN;
    // pixN = (*pN);    // right image
    pixN = WP-1-(*pN);  // reverse image
    (lp).pix[pixN] = pixVal;
    // if(pixN < WP-1) (*pN)++;  // right image
    if(pixN) (*pN)++;            // reverse image
}
void _cm5(uint16_t *fN, uint16_t *lN, uint16_t *pN, uint8_t *rN){ // end line
    uint16_t lineN;
    lineN = (*lN);
    sendLine(lineN);
    if((*lN) < HP-1) (*lN)++;
}
void _cm99(){}

int indexOf(stateMashine *psm, uint16_t ssc){
    uint8_t i, count;
    count = (*psm).count;
    for(i = 0; i < count; i++){
        if((*psm).ssc[i] == ssc) return i;
    }
    return -1;
}

void ICACHE_FLASH_ATTR initSMcm(stateMashine *psm){
    uint8_t i, count;
    count = 10;
    gen gen[] = {
        {CAMOFF,    VZZ, FRAPAUSE,  _cm0}, // 0#1
        {FRAPAUSE,  ZZZ, FRAMEBEG,  _cm1}, // 1#2
        {FRAMEBEG,  VZZ, FRAPAUSE,  _cm2}, // 2#1
        {FRAMEBEG,  ZHZ, FBYTEREAD, _cm3}, // 2#3
        {FBYTEREAD, ZHP, FPAUSE,    _cm0}, // 3#4
        {FPAUSE,    ZHZ, SBYTEREAD, _cm0}, // 4#5
        {SBYTEREAD, ZHP, SPAUSE,    _cm0}, // 5#6
        {SPAUSE,    ZHZ, FBYTEREAD, _cm4}, // 6#3
        {SPAUSE,    ZZZ, FRAMEBEG,  _cm5}, // 6#2
        {FPAUSE,    ZZZ, FRAMEBEG,  _cm5}, // 4#2
    };
    (*psm).count = count;
    for(i = 0; i < count; i++){
        (*psm).ssc[i] = 0;
        (*psm).ssc[i] |= ((gen[i].state & STATE_V) << (16-STATE_L)) |
                         ((gen[i].sig & SIG_V) << (16-STATE_L-SIG_L));
        (*psm).stateX[i] = gen[i].stateX;
        (*psm).fPoint[i] = gen[i].fp;
    }
    (*psm).fpd = _cm99;
}

void sendByte(uint8_t bt){
    uint16_t lenBuff;
    uint8_t buf[TX_BUFF_SIZE];
    /* wait until the UART0 TX FIFO is empty */
    do {
        lenBuff = (READ_PERI_REG(UART_STATUS(0)) >> UART_TXFIFO_CNT_S) & UART_TXFIFO_CNT;
    } while(lenBuff);
    buf[lenBuff] = bt;
    uart0_tx_buffer(buf, lenBuff + 1);
}

void sendLine(uint16_t lN){
    uint16_t j;
    uint8_t sByt;
    for(j = 0; j < WP; j++){
        sByt = (lp).pix[j];
        /* lines outside the region of interest are sent as white */
        if(lN < IMAGEY0 || lN > (IMAGEY0+IMAGEH)) sByt = 0xFF;
        sendByte(sByt);
    }
}

void ICACHE_FLASH_ATTR user_gpio_init(void) {
    ets_uart_printf("GPIO initialisation...\r\n");
    PIN_FUNC_SELECT(PERIPHS_IO_MUX_GPIO0_U, FUNC_GPIO0);
    gpio_output_set(0, 0, 0, BIT0);   // Set GPIO0 as input
    PIN_FUNC_SELECT(PERIPHS_IO_MUX_GPIO2_U, FUNC_GPIO2);
    gpio_output_set(0, 0, 0, BIT2);   // Set GPIO2 as input
    PIN_FUNC_SELECT(PERIPHS_IO_MUX_U0RXD_U, FUNC_GPIO3);
    gpio_output_set(0, 0, 0, BIT3);   // Set GPIO3 as input
    PIN_FUNC_SELECT(PERIPHS_IO_MUX_GPIO4_U, FUNC_GPIO4);
    gpio_output_set(0, 0, 0, BIT4);   // Set GPIO4 as input
    PIN_FUNC_SELECT(PERIPHS_IO_MUX_GPIO5_U, FUNC_GPIO5);
    gpio_output_set(0, 0, 0, BIT5);   // Set GPIO5 as input
    PIN_FUNC_SELECT(PERIPHS_IO_MUX_MTDI_U, FUNC_GPIO12);
    gpio_output_set(0, 0, 0, BIT12);  // Set GPIO12 as input
    PIN_FUNC_SELECT(PERIPHS_IO_MUX_MTMS_U, FUNC_GPIO14);
    gpio_output_set(0, 0, 0, BIT14);  // Set GPIO14 as input
    PIN_FUNC_SELECT(PERIPHS_IO_MUX_MTCK_U, FUNC_GPIO13);  // Set GPIO13 function
    gpio_output_set(0, 0, 0, BIT13);  // Set GPIO13 as input
    PIN_FUNC_SELECT(PERIPHS_IO_MUX_MTDO_U, FUNC_GPIO15);
    gpio_output_set(0, 0, 0, BIT15);  // Set GPIO15 as input
    ets_uart_printf("...init done!\r\n");
}

void ICACHE_FLASH_ATTR sendFramMark(void){
    sendByte(42);  // '*'
    sendByte(42);
}

void ICACHE_FLASH_ATTR sendCtr3Byte(uint8_t typ, uint16_t len){
    uint8_t lLen, hLen;
    sendByte(typ);
    lLen = len & 0xFF;
    hLen = (len & (0xFF<<8)) >> 8;
    sendByte(lLen);
    sendByte(hLen);
}
```

Image processing

Image processing consists of progressive binarization, merging the resulting segments, and analyzing and synthesizing the resulting figures. The goal of processing is to form integral features, including the properties of the areas contained in the figures. Despite the simplicity of the main idea, its implementation involves a number of specific points that cannot be disclosed within the scope of this article.

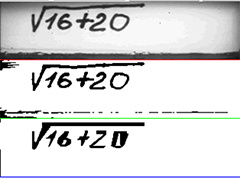

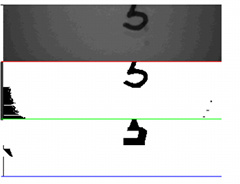

Visualization of the recognition process

To debug recognition, we visualized on a PC the original image, the binarized image, and the image that the microcontroller “sees” or “understands”. Although the latter is not pleasing to the eye, its details are sufficient to recognize the characters. The figure shows examples of the visualization.

Note that camera synchronization errors sometimes cause lines of color-difference bytes, rather than luminance bytes, to appear in the original picture. In this case the picture is blurred and recognition fails. Handling such situations was outside the scope of this stage.

Recognition also fails if the text is positioned incorrectly:

For visualization, we used a small program written in JavaScript on node-webkit.

app.js

* To work with the COM port, the Node.js "serialport" module must be built for node-webkit.

```javascript
var btn = document.getElementById('com');
var gui = require("nw.gui");
var select_com = document.getElementById('select_com');
var bdr = document.getElementById('bdr');
var canvas = document.getElementById('canvas');
var dev = document.getElementById('dev');
var ctx = canvas.getContext('2d');
var width = 320, height = 240;
var byteCount = (width * height)/3;  // pixels in one third of the canvas
var lastStr = byteCount - width;     // start of the last row of each third
var dataArr;
var dataStr;
var indArr = 0;
var dataArrLen = 0;
var byteCounter = 0;
var newStr = 0;                      // 0: waiting for '*', 1: one '*' received, 2: reading data
var sendTyp = 0;

document.addEventListener('DOMContentLoaded', function() {
    btn.addEventListener('click', function() {
        connectCom(function(vector) {
            drawImg(vector);
        });
    });
    dev.addEventListener('click', function(){
        var win = gui.Window.get();
        win.showDevTools();
    });
});

function drawImg(imgArr) {
    var imgData = ctx.createImageData(width, height);
    var ind;
    for (var i = 0; i < imgArr.length; i++) {
        imgData.data[4 * i] = imgArr[i];
        imgData.data[4 * i + 1] = imgArr[i];
        imgData.data[4 * i + 2] = imgArr[i];
        imgData.data[4 * i + 3] = 255;
        if(i<byteCount&&i>lastStr){ //red line
            imgData.data[4 * i] = 255;
            imgData.data[4 * i + 1] = 0;
            imgData.data[4 * i + 2] = 0;
            imgData.data[4 * i + 3] = 255;
        }
        if(i<2*byteCount&&i>byteCount+lastStr){ //green line
            imgData.data[4 * i] = 0;
            imgData.data[4 * i + 1] = 255;
            imgData.data[4 * i + 2] = 0;
            imgData.data[4 * i + 3] = 255;
        }
        if(i<3*byteCount&&i>2*byteCount+lastStr){ //blue line
            imgData.data[4 * i] = 0;
            imgData.data[4 * i + 1] = 0;
            imgData.data[4 * i + 2] = 255;
            imgData.data[4 * i + 3] = 255;
        }
    }
    ctx.putImageData(imgData, 0, 0);
    imgArr.length=0;
}

function connectCom(callback) {
    const PIXTYPE=0,BINTYPE=1,FIGTYPE=2;
    var imgTyp=PIXTYPE;
    var serialport = require('serialport');
    var imgArr = [];
    var framCount=0,strNum,colNum;
    var pix=false;
    var comm = 'COM' + select_com.value;
    var boudrate = +bdr.value;
    var SerialPort = serialport.SerialPort;
    var port = new SerialPort(comm, {
        baudRate: boudrate,
        dataBits: 8,
        stopBits: 1,
        parity: "none",
        bufferSize: 65536,
        parser: SerialPort.parsers.byteLength(1)
    });
    port.on('open', function() {
        console.log('Port ' + comm + ' Open');
    });
    port.on('data', function(data) {
        if(imgTyp==PIXTYPE||imgTyp==BINTYPE){
            if (data[0] == 42 && newStr == 0) {
                newStr = 1;
                data[0]=255;
            }
            if (newStr == 1 && data[0] == 42) {
                newStr = 2;
            }
            if (newStr == 2 && byteCounter <2*byteCount) {
                colNum=byteCounter%width;
                strNum=(byteCounter-colNum)/width;
                if(strNum%2==0){ // even lines go to the top third
                    imgArr[(strNum/2)*width+colNum]=data[0];
                }
                if(strNum%2==1){ // odd lines go to the middle third
                    imgArr[((strNum-1)/2)*width+byteCount+colNum]=data[0];
                }
                byteCounter++;
            }
            if (newStr == 2 && byteCounter == 2*byteCount) {
                newStr = 0;
                byteCounter = 0;
                framCount++;
                console.log('Frame Num ', framCount);
                imgTyp=FIGTYPE;
            }
        }
        if(imgTyp==FIGTYPE){ // figure image goes to the bottom third
            if (data[0] == 42 && newStr == 0) {
                newStr = 1;
                data[0]=255;
            }
            if (newStr == 1 && data[0] == 42) {
                newStr = 2;
            }
            if (newStr == 2 && byteCounter < byteCount) {
                imgArr[byteCounter+2*byteCount] = data[0];
                byteCounter++;
            }
            if (newStr == 2 && byteCounter == byteCount) {
                newStr = 0;
                byteCounter = 0;
                framCount++;
                console.log('Frame Num ', framCount);
                imgTyp=PIXTYPE;
                callback(imgArr);
            }
        }
    });
    port.on('error', function() {
        alert(' ');
    });
}
```

A short video shows the device in operation.

Video with prototype number 1

Video with prototype number 2

Conclusion

The results show that the recognition method is highly efficient even on devices that would seem completely unsuitable for the task. A modest improvement, using information from several frames to take a closer look at areas of interest, would raise recognition quality to the level of commercial products.

It is also clear how to analyze and recognize objects with multiple features, such as handwritten strings or hieroglyphs, but this requires devices with more memory than our ESP8266 (512 KB; the program already exceeds 250 KB).

Thank you for your attention.

References:

1. Text recognition in ABBYY FineReader (2/2)

2. Omega2: the world's smallest microcomputer with Linux and Wi-Fi

3. Orange Pi 2G-IoT - the perfect single board for IoT

4. Digit recognition on a microcontroller

5. Arduino sketch for working with the OV7670 camera

6. OV7670 camera datasheet

7. Reflection of dynamics in the access control model

Source: https://habr.com/ru/post/330936/
