May the Code Review with you

Code review can be a big pain for a team that is just starting to implement it. In any case, you will step on a lot of rakes: you will conduct a review longer than writing code, arrange deadly disputes about the arrangement of brackets and figure out whether it is possible to merge a branch into master before appruva’s commands or not. I have put together a number of practices that will help you make the adaptation process a little less painful - at least they definitely helped me.

This material is a brief squeeze of my experience gained over several years of work in large mobile development teams. The experience is mostly in mobile development, which influenced the examples and references used. For those who prefer not to read, but to watch, within a couple of months there should be a video from the Mobius conference, where I give a talk on the same topic, but with a bunch of detailed practical examples.

To begin with, I will outline the goals that should be pursued when conducting code inspections. It is with these goals and their priority relative to each other that I am guided, advising to implement this or that practice.

')

And now - to the point.

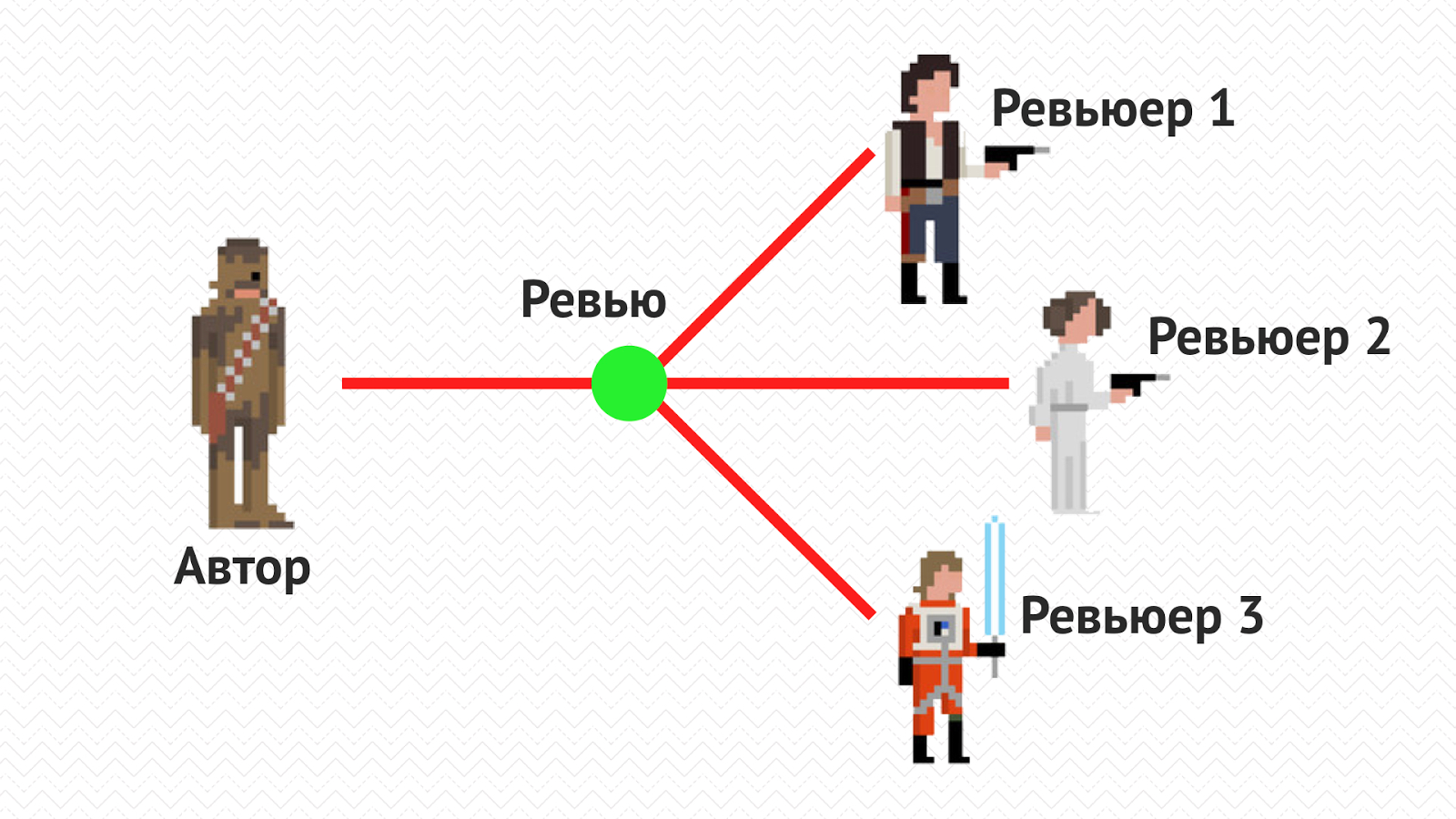

Teams are different - somewhere in the same department there are thirty people who are developing one project, and somewhere the developer alone supports a dozen applications at once.

The first case is probably the most common. This is a team of several people - say, up to four, who work on one project. Here, Git Flow is usually in full swing, tasks are developed independently of each other in separate branches, and when they are completed, they merge into develop.

In this case, the best way is to fly a model in which the approval of all team members is required to merge a branch with a task into the main working branch. This helps to maximize the benefits of all the review objectives listed in the first section.

Scale the previous situation and imagine a large outsourcer, which includes several such small teams, each of which is working on its own project. In such situations, the hierarchical structure is usually complicated and, in addition to the presence of leading developers in each of the teams, a new layer, timlid or technical leader is added, which is responsible for several such projects at once.

The basic approach remains unchanged - for all new tasks, a review is created in which the entire project team takes part, but the final responsibility for the technical decisions taken is the lead developer. In addition, the lead also needs to be connected to an important review - in this way it will stay abreast of what is happening on the project and connect at the right time to solving problems that arise.

Another additional activity goes into the load - periodic cross-project reviews show themselves to be very effective, when the developer becomes familiar with the rest of the projects. This allows everyone to be generally aware of where what technologies and approaches are used, to know who knows what and what. There are different ways to approach a cross-project review - keep a comprehensive list of all projects and sort it by the last review, or set a goal for each developer to inspect all other projects once in a certain period of time. Such reviews are conducted mainly for informational purposes - therefore, the approval of a third-party reviewer is usually not required in order to complete the task - a person who watches the code takes experience from other teams.

Another situation is a big team working on one big project. In such a situation, the establishment of a certain threshold of reviewers, after approval of which it is possible to merge a branch, justifies itself well. This value may depend on the size of the team, but a reasonable framework - 2-4 people.

The big issue is the definition of specific reviewers - they can be selected both randomly, who will respond first, and specifically by the author of the review. You can automate this process to avoid disputes and be more objective. As an example - to determine the owner of the code according to the history of the version control system or the system for code review.

The last case is the saddest. The developer works alone in the team. Firstly, such a goal as collective ownership of the code immediately disappears - there is no collective, there is no ownership either. The remaining goals also become quite degenerate - but, nevertheless, a third-party view of the code will help to find some of the errors and improve the technical level in general.

In this case, the developer needs to seek help in an open community. I advise three channels.

The main problem lies in such a concept as the state of the stream from which to pull a developer away — like death. Imagine the situation yourself: you are immersed in the development of your features, build palaces of abstractions in your head, and suddenly a colleague timidly touches the shoulder and asks to look at its code. Nothing good will come of it - the developer and his task can no longer properly deal with, and for the review is too biased and annoyed.

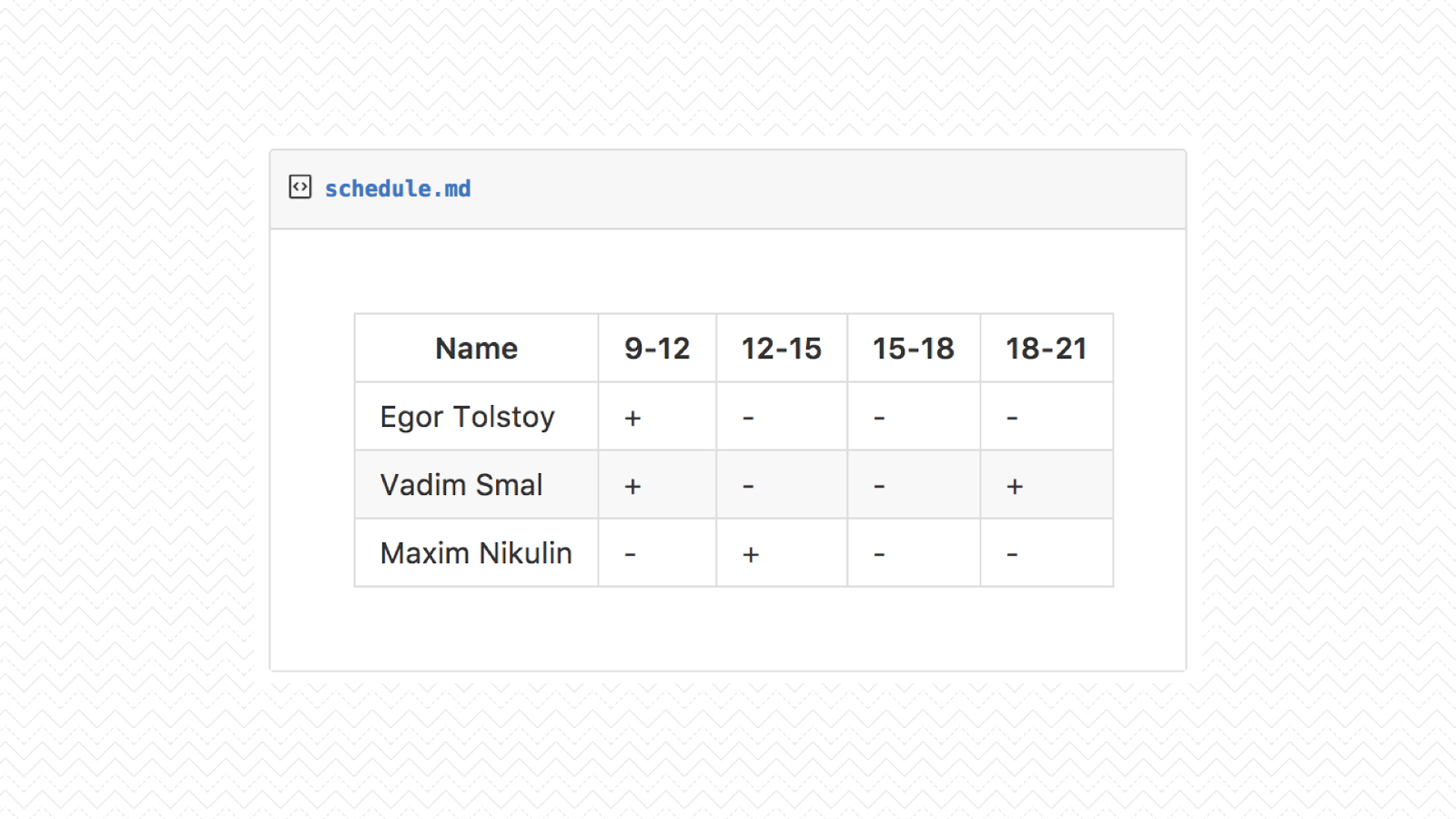

Solves the situation schedule. Each developer in the team determines the period of the day in which he will watch a review. The schedule of the whole team can be kept in one table, so that, guided by this, it was possible to select reviewers for yourself or calculate the time for checking the task. For example, I always set aside an hour of time in the morning, immediately after arriving at work — it just helped me get into a working rhythm.

Of course, there are cases when this mechanism does not work - some reviews really need to be viewed as quickly as possible. The main thing is to adhere to such a regime so that the occurrence of such situations does not become a habit.

I am sure that you are faced with a situation where, after the implementation of the task, it turns out that it was necessary to do it in a completely different way. Code Review is not the best place to argue about global architectural solutions. The code has already been written, the task is almost completed, to rewrite everything from scratch is too expensive. Such things should not be left for later, but discussed in advance.

As an option - conducting an architectural review, protecting your component in front of a team, either in words or as a diagram. Of course, there are cases when it dawns on someone already during the review - then this sentence, if it is really valuable, should be documented in the issue tracker and never returned to it.

As participants you can involve a technical leader of the project, developers who are directly interested in the implementation of this task, and just everyone from the team.

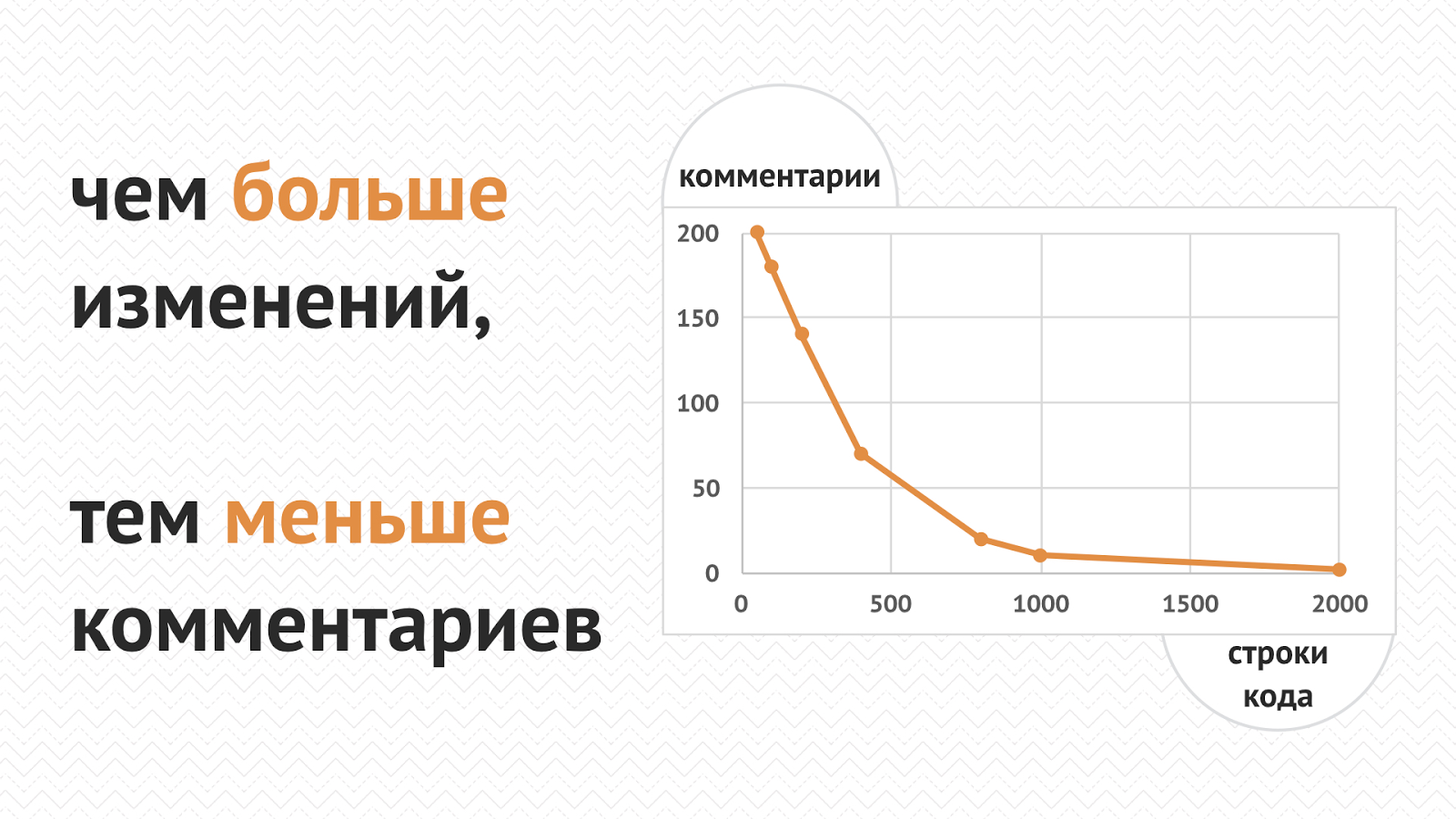

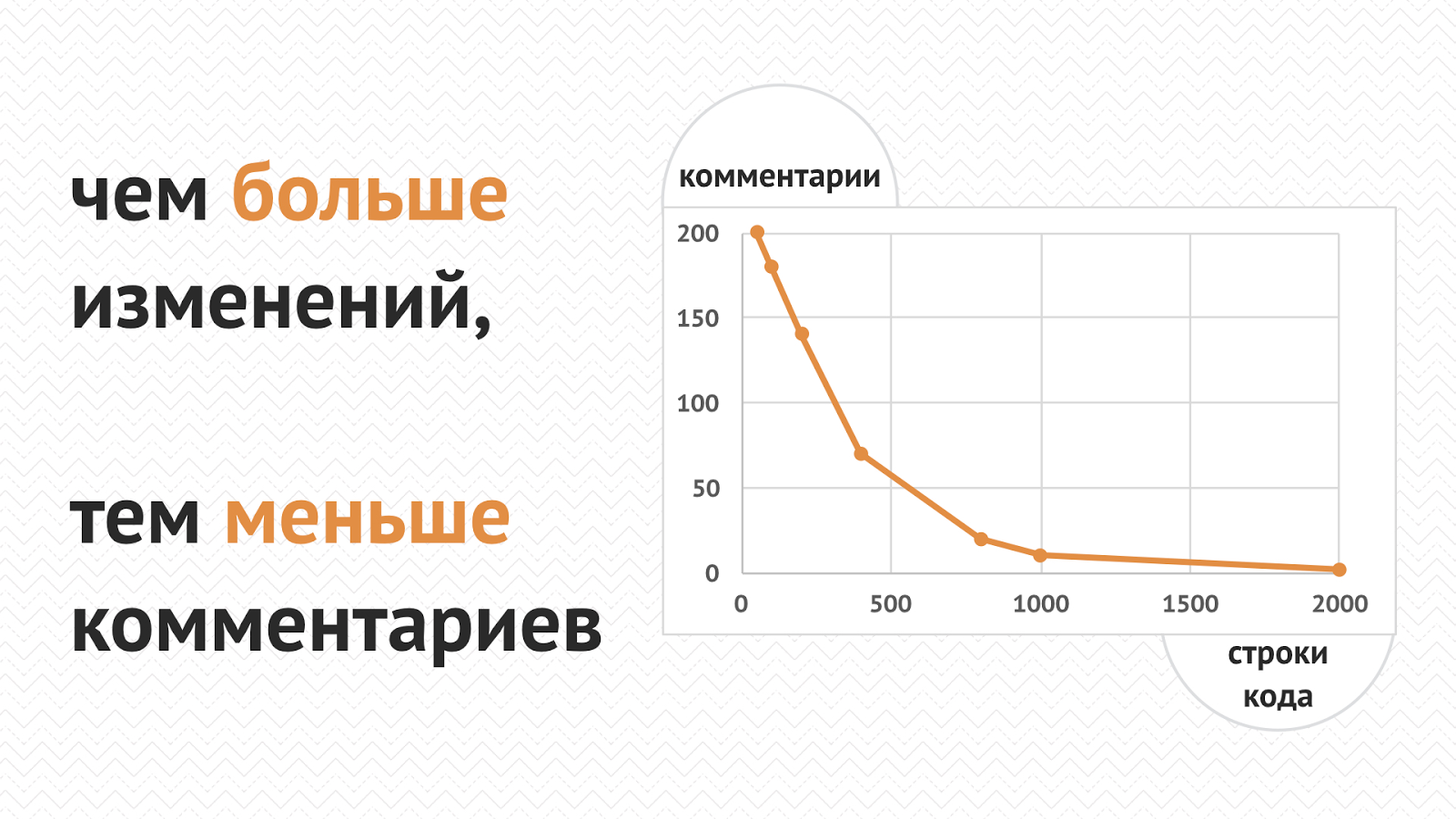

There is one remarkable paradox - the smaller the code for review, the more comments are left to it. This rule remains the same in almost any team. For several lines of code is characterized by the presence of huge and spreading discussions. As the volume of changes increases to several thousand lines, the number of comments drops sharply to one or two marks, on which it remains.

This practice is very simple - you can’t post too many changes at once. This requirement is completely correlated with the standard rules for the decomposition of tasks, each of which should not exceed a working day. If you fill in too much code, then the reviewers will very quickly blur their eyes - and many problems will simply be missed. By the way, an additional requirement can also be included here - remove all information noise that prevents the reviewers from focusing on the actual meaning of the changes.

The author is able to see most of the factual errors in his code himself. Therefore, before you connect your colleagues to the review, you should carefully review your code one or two times. This simple procedure will help to save time and nerves and improve relations with colleagues.

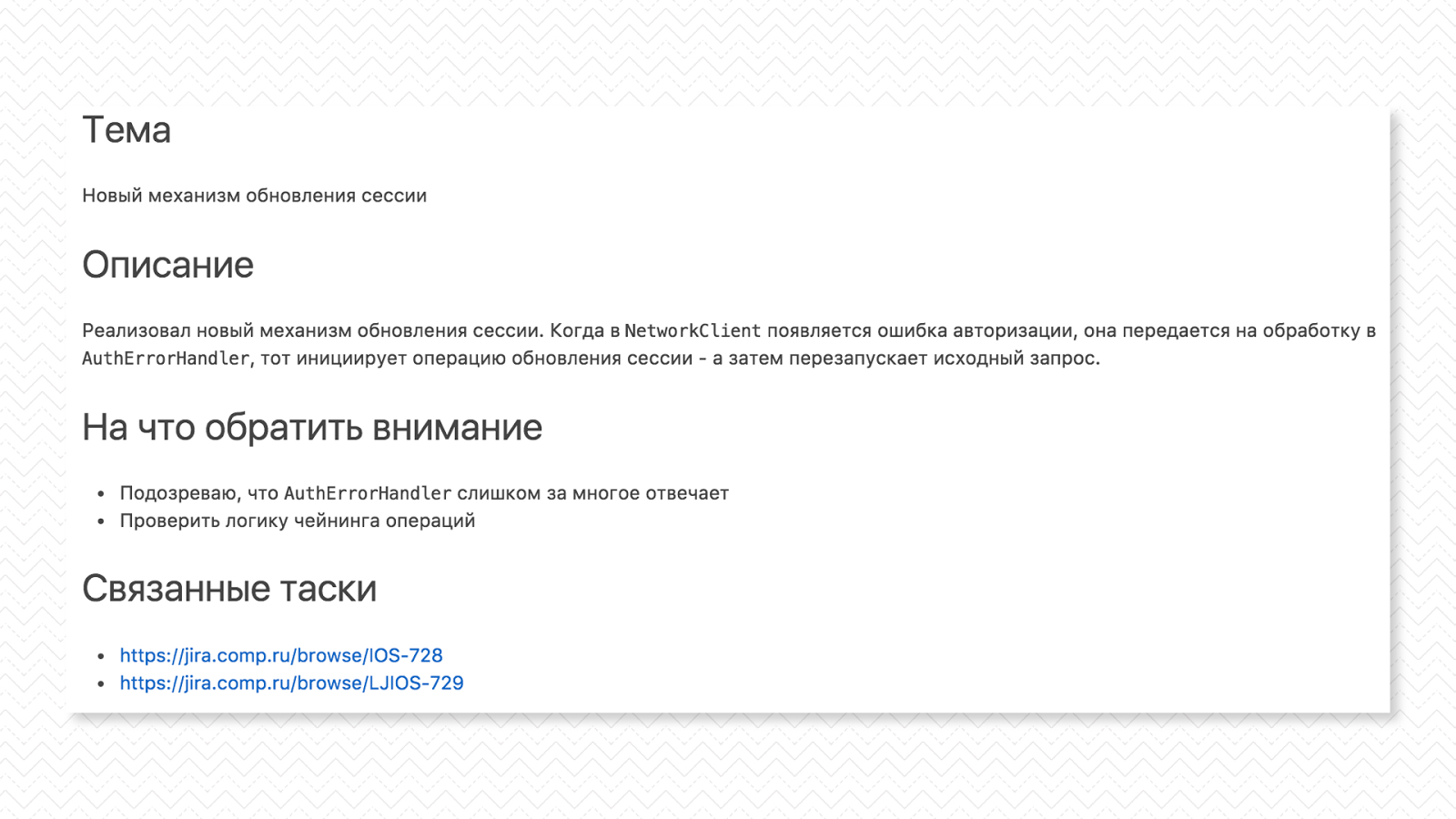

When you conduct a code review on an ongoing basis, it is difficult to focus on the actual meaning of the changes - I already mentioned this. Another way to help reviewers retain focus is to describe in detail the meaning of the changes. I usually use the following template:

Check lists are awesome. Use them for code review. Examples of questions from such a checklist: “Is there any commented out code?”, “Is business logic covered with tests?”, “Are all errors processed?”, “Are the lines in constants rendered?”. Memorize all the questions that often arise for the team to review, and put them in the list.

The main thing - do not give this check-list to grow. Think of ways to automate each of the checks listed there - if you make enough effort, you can close most of your needs. There are a huge number of tools that can help in this work - linters, static analyzers, duplicate code detectors.

The introduction of any process should be accompanied by the collection of metrics of its effectiveness and their further analysis. The process itself is not an end in itself. Code review is not an exception, it is important to be able to understand how much it helps the team in its work.

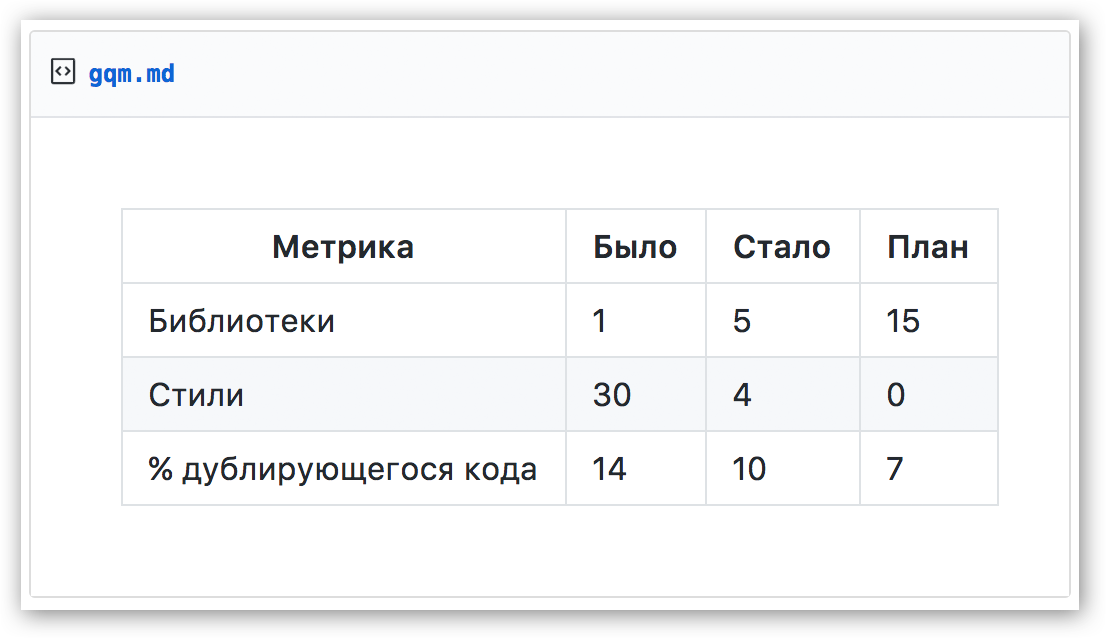

A convenient option is to proceed from the goals we want to achieve when implementing code review. As a cheat sheet, you can use those that I outlined in the first section. One way to measure the effectiveness of achieving such goals is to use the Goal-Question-Metric approach. It consists of three stages — defining the goal we want to measure, asking questions that will help understand the level of goal achievement, and defining metrics that help answer the questions posed. Let us consider an example.

First you need to define the intended target. As an example - “Increase the level of code reuse”. It is possible and even necessary to use several targets at once - and measure them independently of each other.

Then we formulate the questions that need to be answered in order to understand whether we are achieving our goals. In our case, this is “Do we use shared libraries in the project?”, “Are visual styles taken out as a separate component?”, “How much code is duplicated?”.

And finally, the definition of metrics that will help answer the question. In our case, this is the number of shared libraries, the number of places where hard-coded styles remain, the level of code duplication.

Using the framework is quite simple. We measure the indicated metrics before the implementation of the process, we determine the desired result, and periodically, once a month or a quarter, we repeat these measurements. If they can be automated, even cooler. After some time, we analyze the result and determine whether the metrics have changed enough to find the process effective.

Why is this metric really good? Imagine that most of the time, developers were not engaged in re-using code, but holivars on spaces or tabs - with such reviews these key indicators would not have grown much, we would not have achieved the desired result and would understand that something with the process that

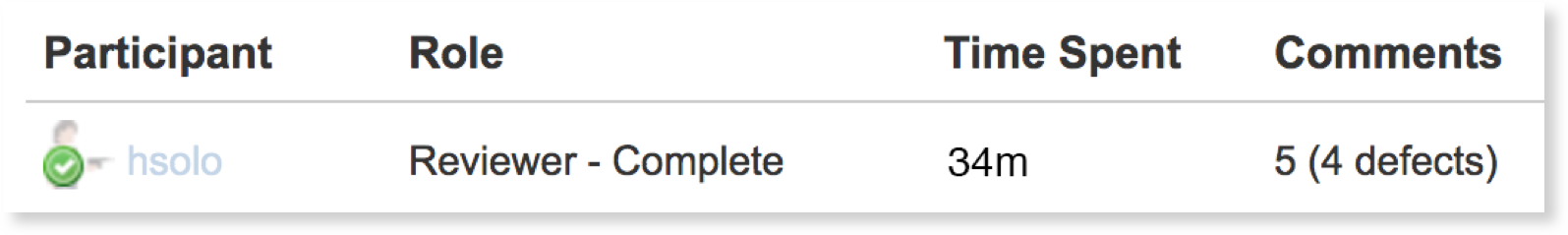

In addition, it is useful to collect some more metrics that may suggest what problems we encountered while using the process.

If you collect such data for each review, then you can quite adequately assess the level of involvement in the process for each team member. A number of systems for conducting code review automatically collect such data - for example, Crucible from Atlassian.

As I mentioned in the introduction, this article is a brief squeeze of my speech, in which I also touch on various tools for automating the process, rules and norms of behavior in a team and other aspects of conducting code review. In order not to miss the appearance of the recording - subscribe to my Telegram channel , where I will definitely post it on readiness. If you have any questions - come to our mitap on Saturday, June 17, and discuss them in an informal setting.

This material is a brief squeeze of my experience gained over several years of work in large mobile development teams. The experience is mostly in mobile development, which influenced the examples and references used. For those who prefer not to read, but to watch, within a couple of months there should be a video from the Mobius conference, where I give a talk on the same topic, but with a bunch of detailed practical examples.

Code Review Objectives

To begin with, I will outline the goals that should be pursued when conducting code inspections. It is with these goals and their priority relative to each other that I am guided, advising to implement this or that practice.

')

- Collective possession of code

Reducing the bus factor due to the fact that each component in the code has seen and analyzed more than one pair of eyes, and the knowledge does not belong to one particular developer. - Reuse code

When several people solving different work tasks and having a different set of knowledge about the product look at each other's code, they can see certain patterns and either suggest re-using one of the ready-made solutions, or select the current one into a separate library. - Sharing knowledge in a team

Everyone in the team has a lot to learn. Someone is good at algorithms, someone understands metaprogramming, and someone is a Jedi MVC. The ability to look at each other's decisions and ask questions is a great way to raise the technical level of the whole team. - Error detection

The most obvious goal is to detect errors of varying degrees of criticality. This is what your manager first thinks about when you offer him to lay a specific time process on code review. - Project uniformity

How many programmers are there in the world, so many different views on how to write code. Tabs and spaces, file structure of the project - in the absence of consistency this can greatly complicate the process of reading and analyzing code.

And now - to the point.

Code Review organization models in different teams

Teams are different - somewhere in the same department there are thirty people who are developing one project, and somewhere the developer alone supports a dozen applications at once.

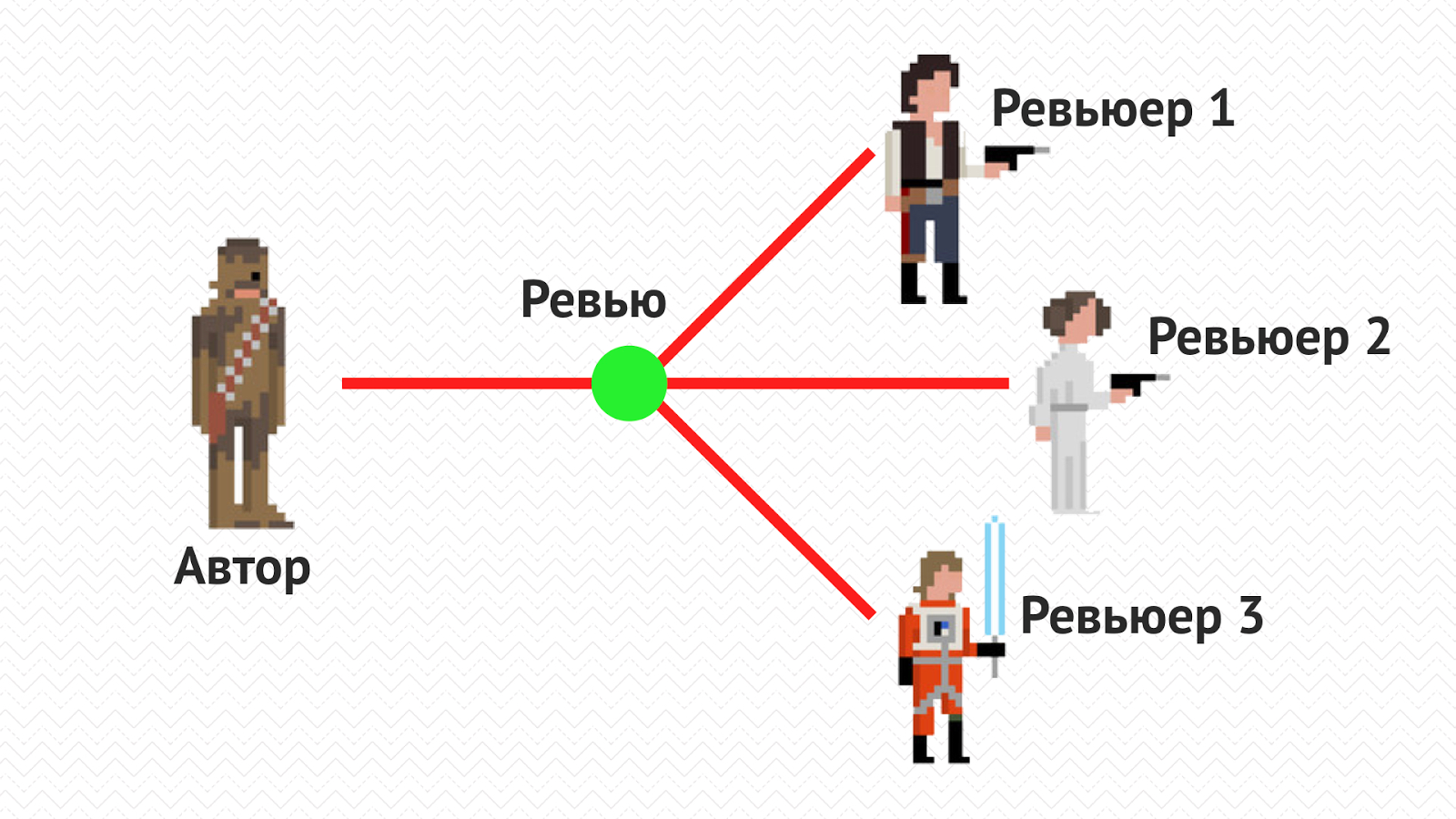

Small team

The first case is probably the most common. This is a team of several people - say, up to four, who work on one project. Here, Git Flow is usually in full swing, tasks are developed independently of each other in separate branches, and when they are completed, they merge into develop.

In this case, the best way is to fly a model in which the approval of all team members is required to merge a branch with a task into the main working branch. This helps to maximize the benefits of all the review objectives listed in the first section.

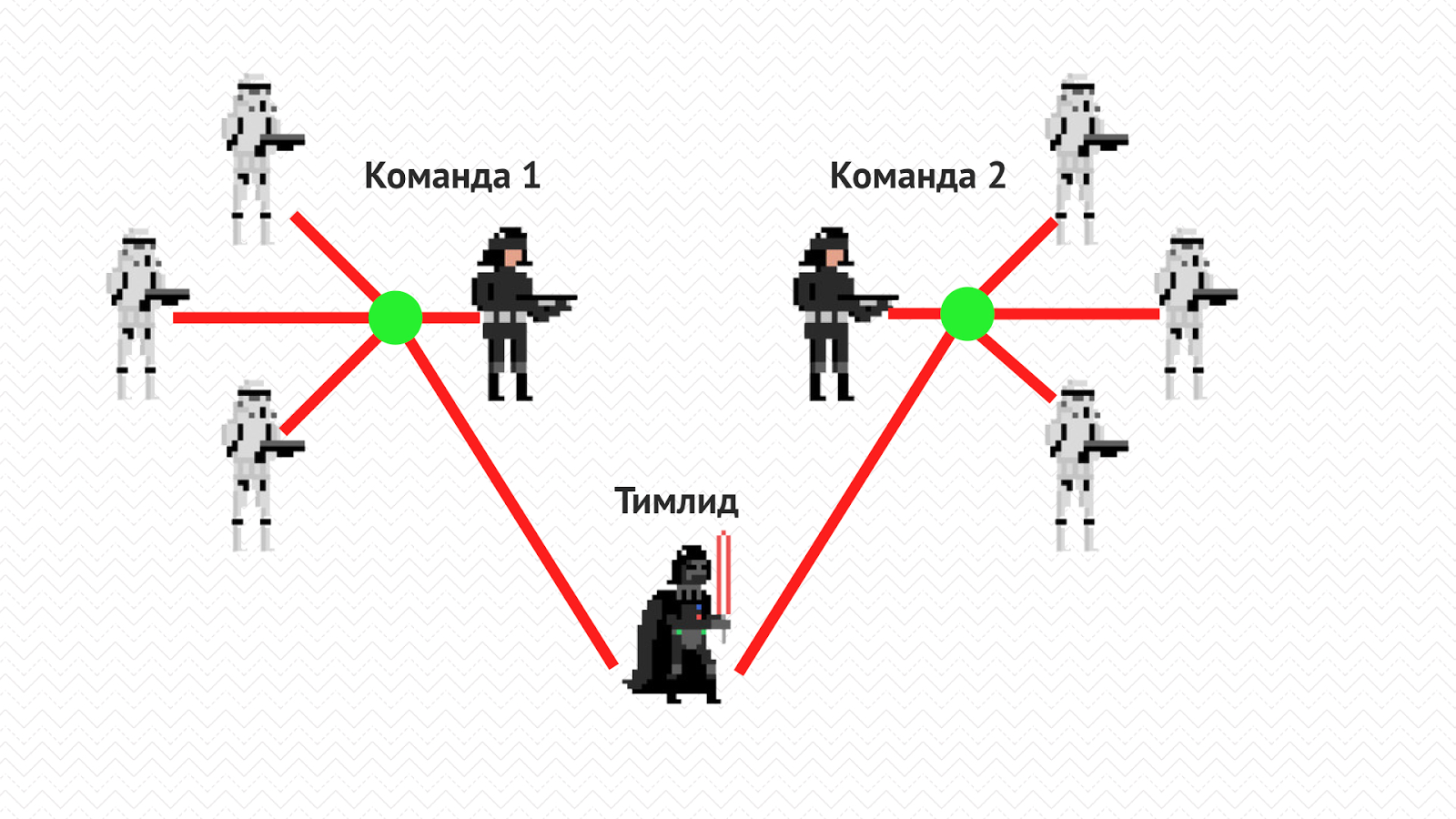

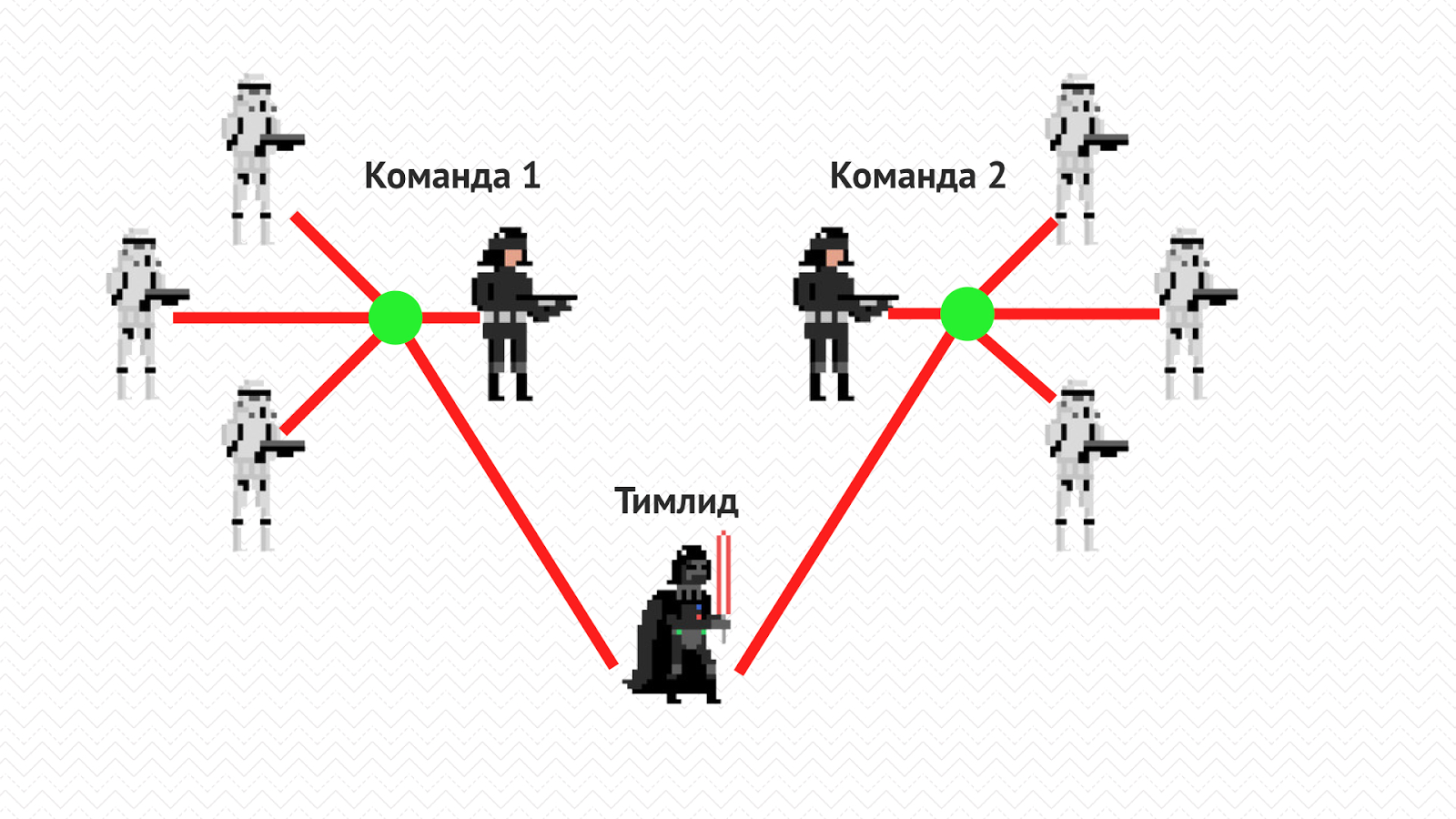

Several separate teams

Scale the previous situation and imagine a large outsourcer, which includes several such small teams, each of which is working on its own project. In such situations, the hierarchical structure is usually complicated and, in addition to the presence of leading developers in each of the teams, a new layer, timlid or technical leader is added, which is responsible for several such projects at once.

The basic approach remains unchanged - for all new tasks, a review is created in which the entire project team takes part, but the final responsibility for the technical decisions taken is the lead developer. In addition, the lead also needs to be connected to an important review - in this way it will stay abreast of what is happening on the project and connect at the right time to solving problems that arise.

Another additional activity goes into the load - periodic cross-project reviews show themselves to be very effective, when the developer becomes familiar with the rest of the projects. This allows everyone to be generally aware of where what technologies and approaches are used, to know who knows what and what. There are different ways to approach a cross-project review - keep a comprehensive list of all projects and sort it by the last review, or set a goal for each developer to inspect all other projects once in a certain period of time. Such reviews are conducted mainly for informational purposes - therefore, the approval of a third-party reviewer is usually not required in order to complete the task - a person who watches the code takes experience from other teams.

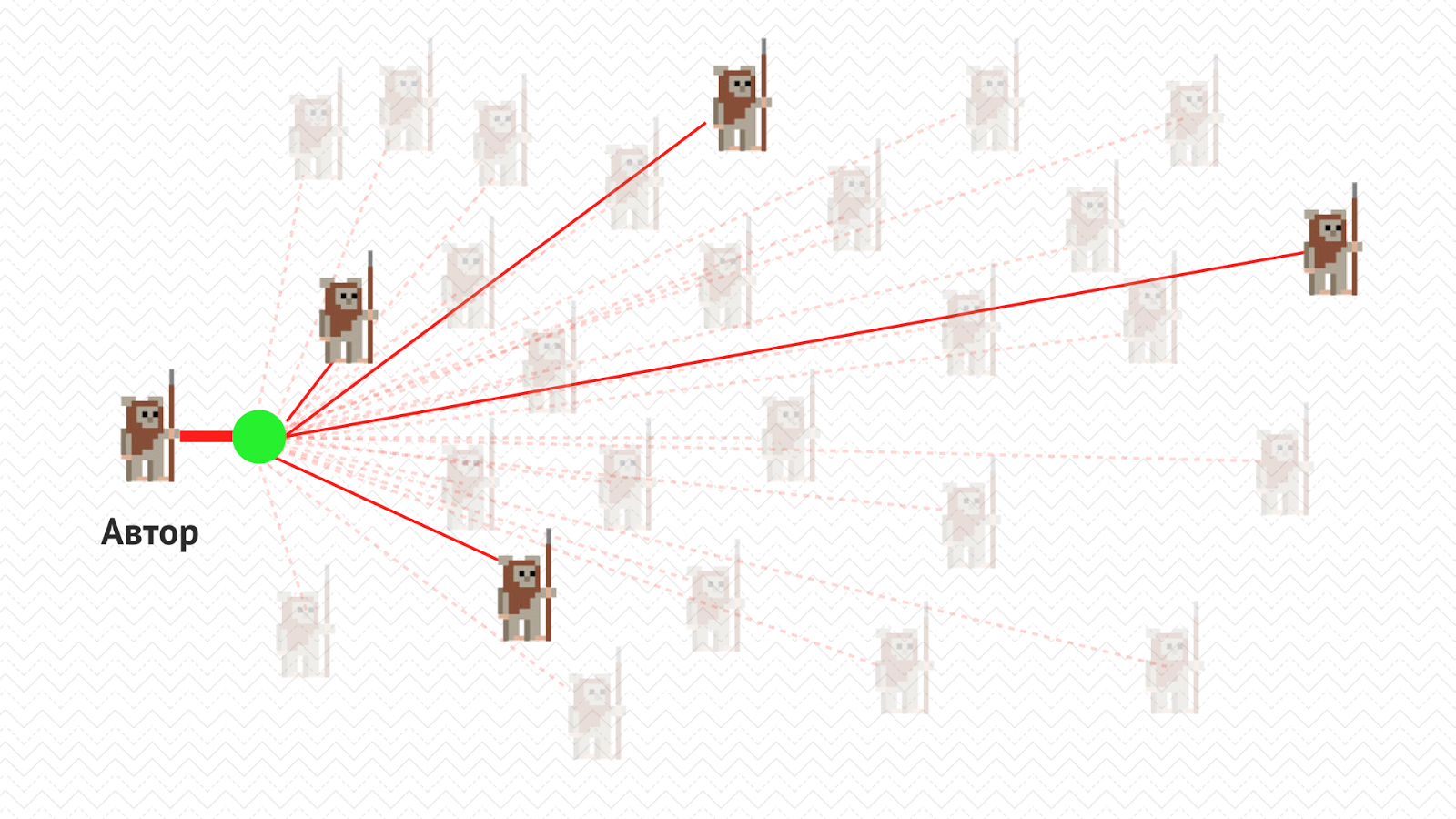

Big team

Another situation is a big team working on one big project. In such a situation, the establishment of a certain threshold of reviewers, after approval of which it is possible to merge a branch, justifies itself well. This value may depend on the size of the team, but a reasonable framework - 2-4 people.

The big issue is the definition of specific reviewers - they can be selected both randomly, who will respond first, and specifically by the author of the review. You can automate this process to avoid disputes and be more objective. As an example - to determine the owner of the code according to the history of the version control system or the system for code review.

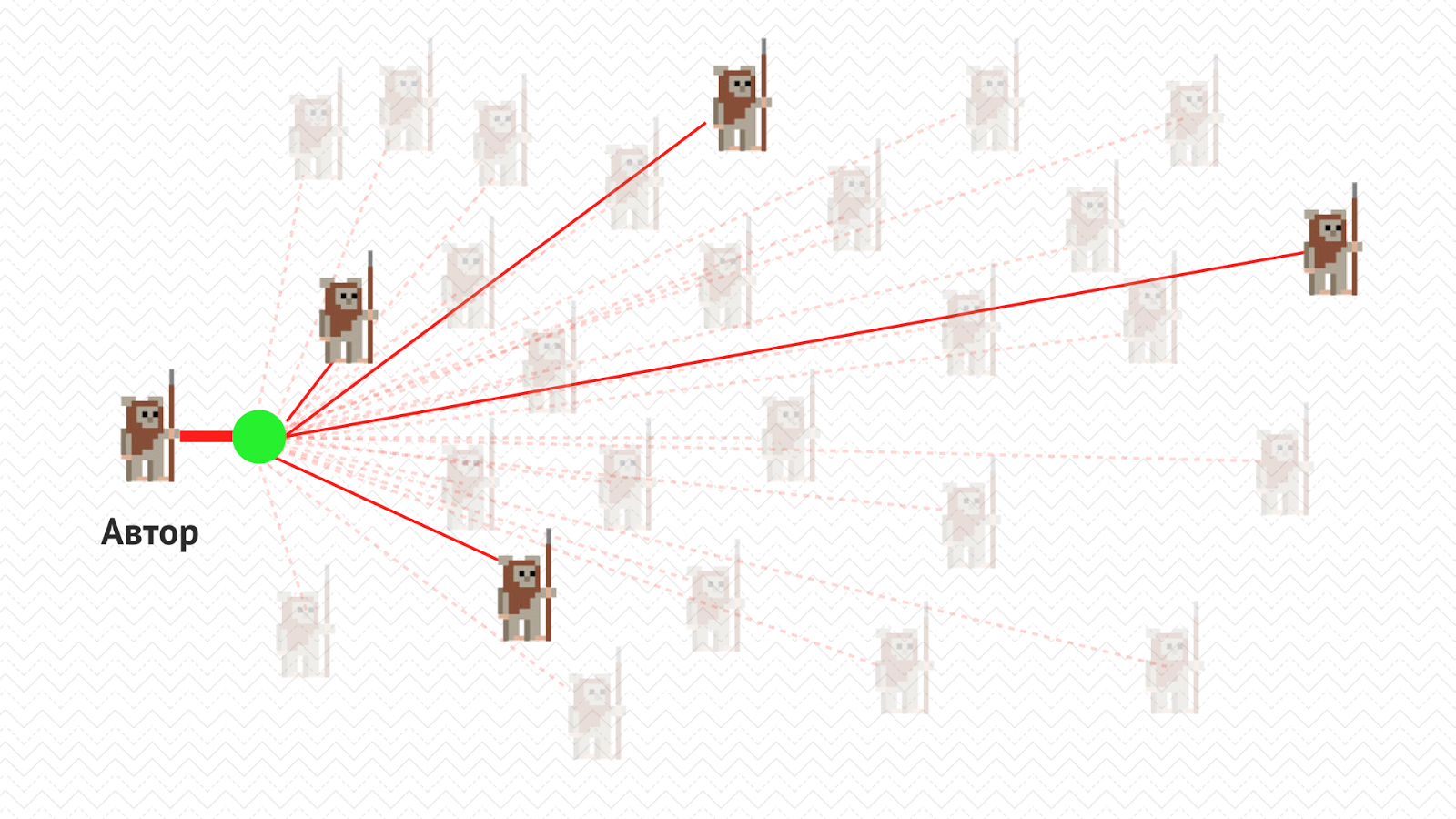

Lone developer

The last case is the saddest. The developer works alone in the team. Firstly, such a goal as collective ownership of the code immediately disappears - there is no collective, there is no ownership either. The remaining goals also become quite degenerate - but, nevertheless, a third-party view of the code will help to find some of the errors and improve the technical level in general.

In this case, the developer needs to seek help in an open community. I advise three channels.

- Different chat rooms and developer communities

Two of my favorites are the Slack chat Cocoa Developers Club and the Telegram chat iOS Good Talks . - Developer Meetings

For example, Peer Lab , which is held in Moscow every week. - Collaboration with a colleague from another function

You can connect your colleague who is developing for a different platform - Android, Web or Backend. But be careful - not everyone is ready and willing to dive into the context of problems from another development area.

Code Review Practices

Work on schedule

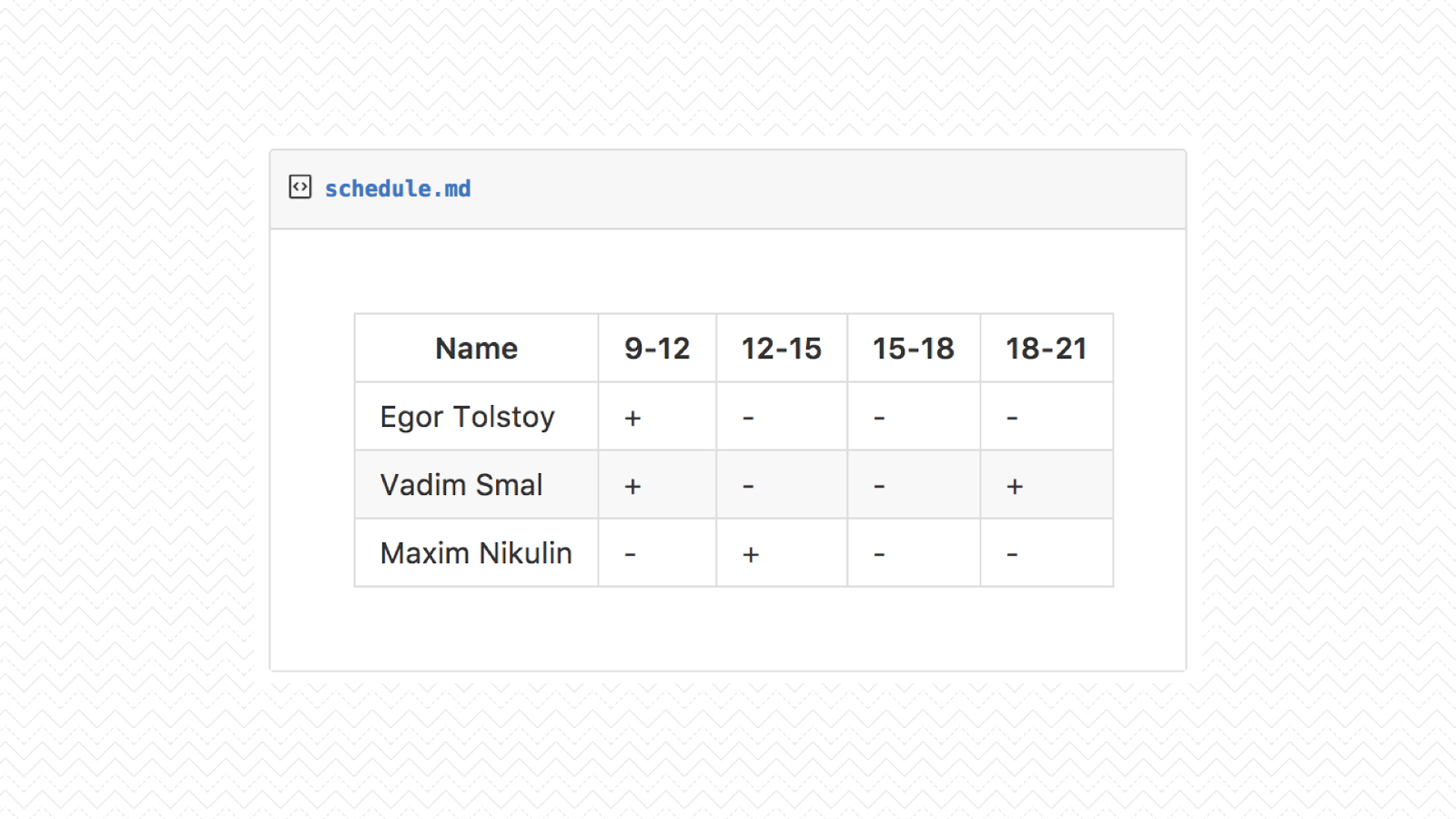

The main problem lies in such a concept as the state of the stream from which to pull a developer away — like death. Imagine the situation yourself: you are immersed in the development of your features, build palaces of abstractions in your head, and suddenly a colleague timidly touches the shoulder and asks to look at its code. Nothing good will come of it - the developer and his task can no longer properly deal with, and for the review is too biased and annoyed.

Solves the situation schedule. Each developer in the team determines the period of the day in which he will watch a review. The schedule of the whole team can be kept in one table, so that, guided by this, it was possible to select reviewers for yourself or calculate the time for checking the task. For example, I always set aside an hour of time in the morning, immediately after arriving at work — it just helped me get into a working rhythm.

Of course, there are cases when this mechanism does not work - some reviews really need to be viewed as quickly as possible. The main thing is to adhere to such a regime so that the occurrence of such situations does not become a habit.

Architectural review

I am sure that you are faced with a situation where, after the implementation of the task, it turns out that it was necessary to do it in a completely different way. Code Review is not the best place to argue about global architectural solutions. The code has already been written, the task is almost completed, to rewrite everything from scratch is too expensive. Such things should not be left for later, but discussed in advance.

As an option - conducting an architectural review, protecting your component in front of a team, either in words or as a diagram. Of course, there are cases when it dawns on someone already during the review - then this sentence, if it is really valuable, should be documented in the issue tracker and never returned to it.

As participants you can involve a technical leader of the project, developers who are directly interested in the implementation of this task, and just everyone from the team.

Size limit review

There is one remarkable paradox - the smaller the code for review, the more comments are left to it. This rule remains the same in almost any team. For several lines of code is characterized by the presence of huge and spreading discussions. As the volume of changes increases to several thousand lines, the number of comments drops sharply to one or two marks, on which it remains.

This practice is very simple - you can’t post too many changes at once. This requirement is completely correlated with the standard rules for the decomposition of tasks, each of which should not exceed a working day. If you fill in too much code, then the reviewers will very quickly blur their eyes - and many problems will simply be missed. By the way, an additional requirement can also be included here - remove all information noise that prevents the reviewers from focusing on the actual meaning of the changes.

Self review

The author is able to see most of the factual errors in his code himself. Therefore, before you connect your colleagues to the review, you should carefully review your code one or two times. This simple procedure will help to save time and nerves and improve relations with colleagues.

Description review

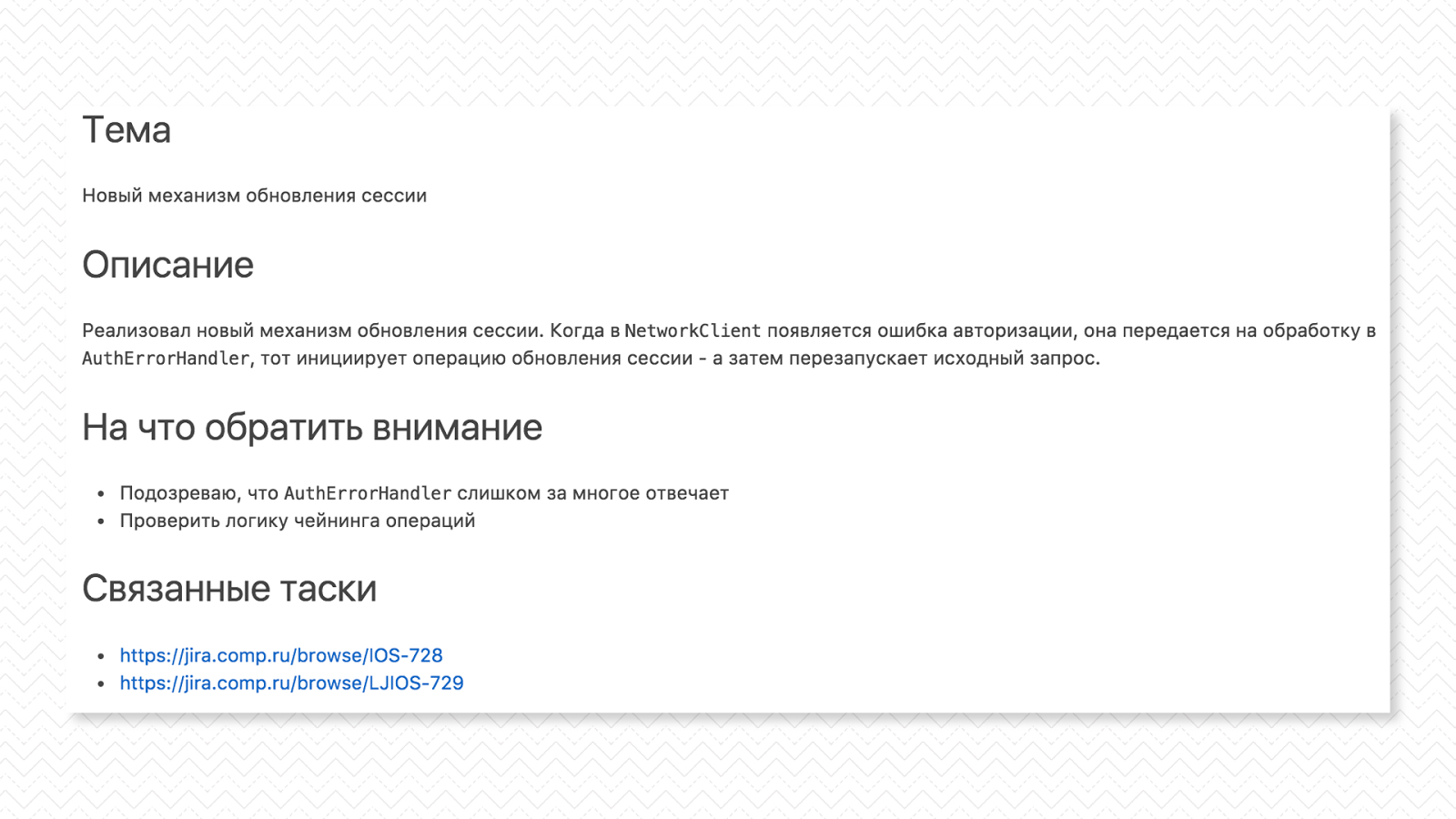

When you conduct a code review on an ongoing basis, it is difficult to focus on the actual meaning of the changes - I already mentioned this. Another way to help reviewers retain focus is to describe in detail the meaning of the changes. I usually use the following template:

- Name review

- Brief Description of Changes

- Things requiring special attention

- Related tasks and materials

Check lists and their automation

Check lists are awesome. Use them for code review. Examples of questions from such a checklist: “Is there any commented out code?”, “Is business logic covered with tests?”, “Are all errors processed?”, “Are the lines in constants rendered?”. Memorize all the questions that often arise for the team to review, and put them in the list.

The main thing - do not give this check-list to grow. Think of ways to automate each of the checks listed there - if you make enough effort, you can close most of your needs. There are a huge number of tools that can help in this work - linters, static analyzers, duplicate code detectors.

Code Review Performance Measurement

The introduction of any process should be accompanied by the collection of metrics of its effectiveness and their further analysis. The process itself is not an end in itself. Code review is not an exception, it is important to be able to understand how much it helps the team in its work.

A convenient option is to proceed from the goals we want to achieve when implementing code review. As a cheat sheet, you can use those that I outlined in the first section. One way to measure the effectiveness of achieving such goals is to use the Goal-Question-Metric approach. It consists of three stages — defining the goal we want to measure, asking questions that will help understand the level of goal achievement, and defining metrics that help answer the questions posed. Let us consider an example.

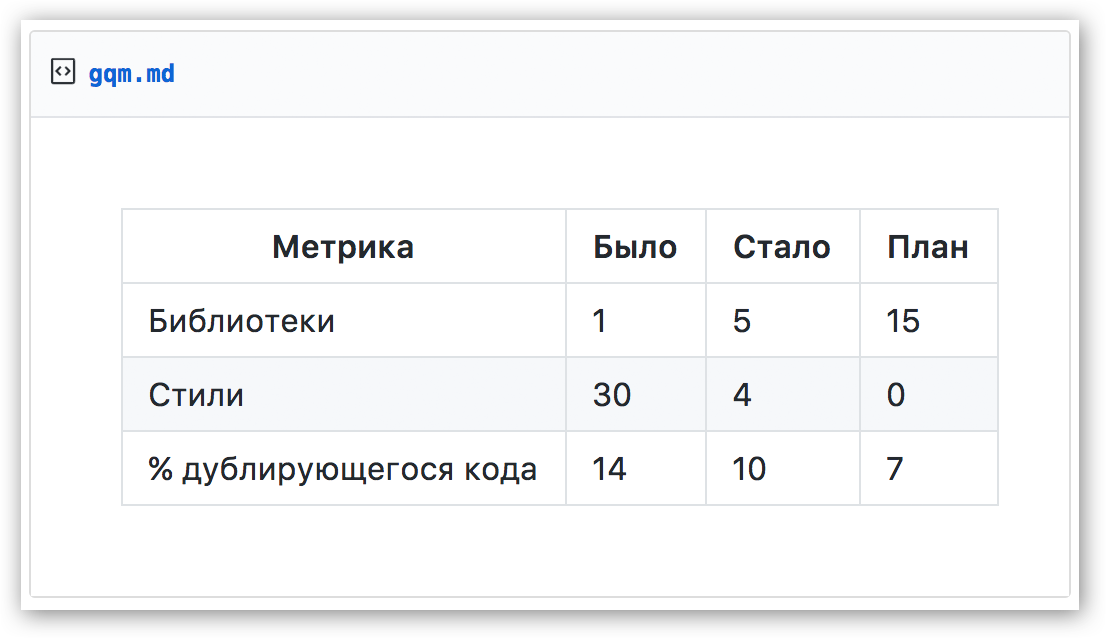

First you need to define the intended target. As an example - “Increase the level of code reuse”. It is possible and even necessary to use several targets at once - and measure them independently of each other.

Then we formulate the questions that need to be answered in order to understand whether we are achieving our goals. In our case, this is “Do we use shared libraries in the project?”, “Are visual styles taken out as a separate component?”, “How much code is duplicated?”.

And finally, the definition of metrics that will help answer the question. In our case, this is the number of shared libraries, the number of places where hard-coded styles remain, the level of code duplication.

Using the framework is quite simple. We measure the indicated metrics before the implementation of the process, we determine the desired result, and periodically, once a month or a quarter, we repeat these measurements. If they can be automated, even cooler. After some time, we analyze the result and determine whether the metrics have changed enough to find the process effective.

Why is this metric really good? Imagine that most of the time, developers were not engaged in re-using code, but holivars on spaces or tabs - with such reviews these key indicators would not have grown much, we would not have achieved the desired result and would understand that something with the process that

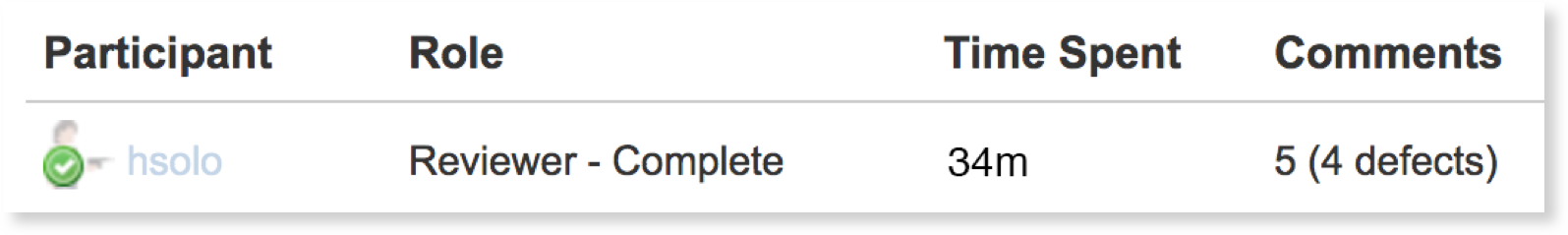

In addition, it is useful to collect some more metrics that may suggest what problems we encountered while using the process.

- Inspection rate - review speed,

- Defect rate - the number of problems found per hour of time spent on the review,

- Defect density - the number of problems found per line of code.

If you collect such data for each review, then you can quite adequately assess the level of involvement in the process for each team member. A number of systems for conducting code review automatically collect such data - for example, Crucible from Atlassian.

Conclusion

As I mentioned in the introduction, this article is a brief squeeze of my speech, in which I also touch on various tools for automating the process, rules and norms of behavior in a team and other aspects of conducting code review. In order not to miss the appearance of the recording - subscribe to my Telegram channel , where I will definitely post it on readiness. If you have any questions - come to our mitap on Saturday, June 17, and discuss them in an informal setting.

useful links

- Examples of a good review: Wire , Artsy , Kickstarter .

- Slack chat Cocoa Developers Club and Telegram chat iOS Good Talks .

- The official site of Peer Lab and Telegram Chat Peer Lab Moscow .

- About Goal-Question-Metric

Source: https://habr.com/ru/post/330846/

All Articles