You Are Not Google

We programmers sometimes go crazy, and for completely ridiculous reasons. We like to think of ourselves as hyper-rational people, but when it comes to choosing the key technology for a new product, a kind of madness takes over. Someone heard about one cool thing, a colleague read a comment about another on Habr, a third person saw a blog post about something similar... and soon we are in a complete stupor, floundering helplessly between completely opposite systems, having forgotten what we were trying to choose and why.

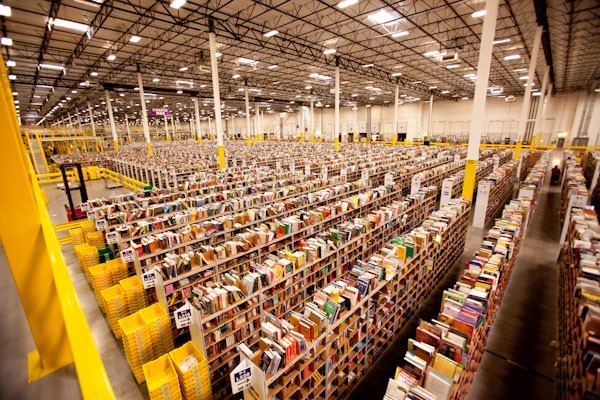

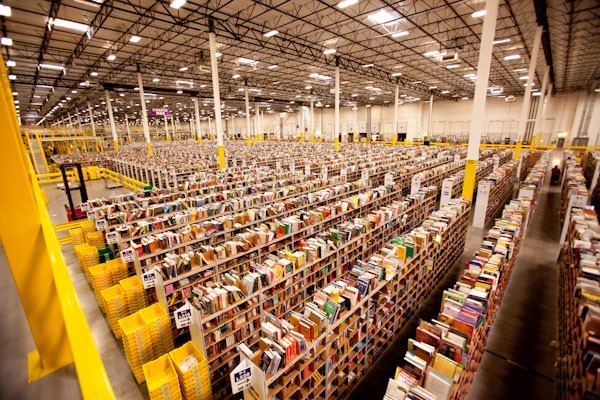

Rational people do not make decisions that way. But this is how programmers often decide to use something like MapReduce.

Here is how Joe Hellerstein put it to his students (at the 54th minute):

The thing is, there are about 5 companies in the world that process data at this scale. Everyone else is shuffling all this data back and forth for the sake of resilience they do not really need. People have suffered from gigantomania and Google-mania since roughly the mid-2000s: “we will do everything the way Google does, because we are building one of the largest (in the future) data processing services in the world!”

How many floors does your datacenter have? Google is now building four-story ones, like this one in Oklahoma.

Getting more resilience than you actually need may seem like a good idea, but only if it comes automatically and for free. It does not; everything has a price. You will not only move far more data between nodes (and pay for it), but also step off the solid ground of classic storage systems (with their transactions, indexes, query optimizers, and so on) onto the shaky soil of immature infrastructure. That is a significant step, and often a step backward. How many Hadoop users took it consciously? How many of them can say, in hindsight, that it was a considered and wise move?

MapReduce and Hadoop are easy targets for criticism here, because even the followers of the cargo cult eventually recognized that their rites did not work very well for attracting airplanes. But the observation generalizes: if you decide to use a technology created by a large corporation, you may well not have arrived at that decision consciously. Quite possibly you were led by a kind of mystical belief that imitating the behavior of giants will take you to the same heights.

Yes, this is another "anti-cargo cult" article. But wait! I have a useful checklist below that will help you make smarter decisions.

Cool technology? Well, let's check it out.

The next time you stumble upon a completely awesome technology and feel the urge to rewrite your whole architecture on it today, I ask you to pause:

- Think about the problem you need to solve in your product. Understand it thoroughly. Your job is to solve that problem with the help of some tools, not to solve "some" problem that the tool you like happens to solve.

- Shortlist several potential tools. Do not pick a favorite until you have a list of alternatives!

- For each candidate, find its "main document" and read it. Not commentary or retellings, but the document itself.

- Determine the historical context that led to the tool being built the way it was.

- Nothing is ideal. Weigh the advantages and disadvantages of each tool. Understand what trade-offs its creators had to make to achieve their stated goals.

- Think! Answer soberly and honestly whether your goals and priorities match those of the creators of the tool you plan to use. What new fact about the tool would make you decide against it? For example, how far would the scale of your workload have to differ from the scale the tool was designed for before using it stops making sense?

And you are not Amazon

The technique above is fairly easy to apply in practice. Recently I discussed with a company their plans to use Cassandra for a new, read-heavy project. I read the original paper describing Dynamo and, knowing that Cassandra is close to Dynamo in design, understood that these systems were built to prioritize writes (Amazon wanted the “add to cart” operation to always succeed). They achieved this by sacrificing the guaranteed consistency and much of the functionality of traditional relational databases. But the company I was talking to had no need to prioritize writes (all their data arrived in a single batch once a day), whereas consistency and fast reads mattered a great deal.

Amazon sells a lot of different goods. If “add to cart” starts failing intermittently, they lose a lot of money. Is your situation the same?

The company wanted to adopt Cassandra because one of their PostgreSQL queries took several minutes, and they assumed they had hit the hardware limits of the platform. After literally a couple of clarifying questions, it turned out that moving the database to SSDs sped this query up by 2 orders of magnitude (down to a few seconds). That was still slow, of course, but consider that a move to SSDs (a trivial change) made the bottleneck about 100 times faster, without any fundamental change to the architecture. It became absolutely clear that moving to Cassandra was not the right path. What was needed was some tuning of the existing solution, perhaps remodeling the database schema, but definitely not an attempt to solve a read problem with a tool built to solve a write problem.

Plus, you're not LinkedIn.

I was very surprised to learn that a company I know had decided to build its architecture around Kafka. This seemed strange to me, since their main task was processing a few dozen (on a good day, a few hundred) fairly important transactions. For that kind of load, even a “grandmother writing each operation into a paper notebook by hand” would be a suitable solution. Kafka, for context, was created to collect all of LinkedIn's analytics data (all those messages, requests, groups, chats, news, ratings, and so on). A huge amount of data. I cannot estimate their volumes today, but a few years ago LinkedIn cited a figure of about 1 trillion events per day, with peaks of up to 10 million per second. Yes, I understand that a few dozen transactions a day can also be processed successfully with it. But why? The gap between the load the tool was designed for and the load actually needed is 10 ORDERS of magnitude!

Our Sun, for example, is only about 6 orders of magnitude larger than the Earth by volume.

Yes, it is quite possible that this company's engineers rationally weighed all the advantages of Kafka and chose it for some reason unknown to me and unrelated to load. But it is more likely that they simply fell under the influence of enthusiastic reviews, articles, community opinion, or something else shouting "take Kafka" at them... Just think about it: 10 orders of magnitude!
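To put a rough number on that gap, here is a minimal back-of-the-envelope sketch in Python. The LinkedIn figure is the one quoted in the text; the value of 100 transactions per day is an assumed stand-in for "a few dozen to a few hundred", and the function name is purely illustrative.

```python
import math


def orders_of_magnitude(design_load: float, actual_load: float) -> float:
    """Return log10 of the ratio between two loads measured in the same unit."""
    if design_load <= 0 or actual_load <= 0:
        raise ValueError("loads must be positive")
    return math.log10(design_load / actual_load)


# Figure quoted in the text: about 1 trillion events per day at LinkedIn.
LINKEDIN_EVENTS_PER_DAY = 1e12
# Assumption for illustration only: roughly 100 transactions on a typical day.
OUR_TRANSACTIONS_PER_DAY = 100

gap = orders_of_magnitude(LINKEDIN_EVENTS_PER_DAY, OUR_TRANSACTIONS_PER_DAY)
print(f"Gap: about {gap:.0f} orders of magnitude")
```

Plugging in your own expected load next to the load a tool was designed for is a quick way to answer the last question on the checklist.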

Did I mention that you are not Amazon?

Even more popular than Amazon's distributed databases is its architectural approach to scaling: service-oriented architecture (SOA). In 2001, Amazon realized that it could not scale its front end effectively and, while working on that problem, arrived at SOA. This success story was passed from one engineer to another, orally and in writing, and now we find ourselves in a situation where every startup of three programmers and zero users begins life by splitting its home page into nanoservices. By the time Amazon recognized the need to move to SOA, it had 7,800 employees and was selling $3 billion worth of goods a year.

This room holds 7,000 people. Amazon had 7,800 when it needed to move to SOA.

I am not saying you should wait until you hire employee number 7,800 before adopting SOA. Just ask yourself: is this the problem that needs solving right now? Are there no others? If you tell me that this really is the case, and that your 50-person company is held back right now precisely by the lack of SOA, then how do you explain the fact that companies dozens of times larger do not suffer from it at all?

And even Google is not Google.

Using highly scalable tools such as Hadoop or Spark can be very interesting. In practice, however, classic tools are better suited to ordinary and even large workloads. Sometimes seemingly “large” volumes fit entirely into the RAM of a single machine. Did you know that today you can get a terabyte of RAM for about $10,000? Even if you had a whole billion users (and you don't), that would give you a whole kilobyte of RAM for each of them. A very fast kilobyte. Perhaps that is not enough for your needs and you will have to read and write to disk. But how many disks will that take: thousands? Hardly. You do not need solutions like GFS and MapReduce, which were created, mind you, to store a search index of the ENTIRE INTERNET.
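The kilobyte-per-user claim is easy to check. The sketch below uses the figures from the text (one terabyte, a billion users, about $10,000); the decimal definition of a terabyte and all names are assumptions made for illustration.

```python
# Figures from the text; a decimal terabyte (10**12 bytes) is assumed here.
TERABYTE_BYTES = 10**12
RAM_PRICE_USD = 10_000
USERS = 10**9  # the hypothetical billion users


def per_user(total: float, users: int) -> float:
    """Split a total (bytes, dollars, ...) evenly across all users."""
    if users <= 0:
        raise ValueError("users must be positive")
    return total / users


ram_per_user = per_user(TERABYTE_BYTES, USERS)
cost_per_user = per_user(RAM_PRICE_USD, USERS)

print(f"RAM per user:  {ram_per_user:.0f} bytes")
print(f"Cost per user: ${cost_per_user:.5f}")
```

With a binary terabyte (2**40 bytes) the result is about 1,100 bytes per user instead of 1,000, which does not change the conclusion.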

Disk space today is much cheaper than in 2003, when the GFS paper was published.

You may have read the GFS and MapReduce papers. If so, you may remember that the main problem Google was trying to solve was not capacity but throughput. They built a distributed system to get to the right data faster. But this is 2017, and device throughput has grown. Keep in mind that you do not need to process as much data as Google does. So maybe buying better drives (perhaps SSDs) will be enough?

Perhaps you expect to grow over time. But have you worked out by how much? Will you accumulate data faster than the cost of SSDs falls? How much will your business have to grow before all your data no longer fits on a single physical machine? In 2016, Stack Exchange, serving 200 million requests per day, used only 4 SQL servers: one for Stack Overflow, one for everything else, and two replicas.

You can go through the checklist above and still settle on Hadoop or Spark. That may even be the right decision. It is important, however, to understand that a decision that is right here and now will not necessarily stay right for long. Google knows this well: as soon as they decided that MapReduce was not good enough for building the search index, they stopped using it.
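These questions can be turned into a quick estimate. The sketch below is only a rough model: the 200 million requests per day comes from the text, while the data size, server capacity, and growth rate are hypothetical numbers chosen for illustration, and the function names are made up.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60


def average_requests_per_second(requests_per_day: float) -> float:
    """Average rate only; real traffic has peaks well above the average."""
    return requests_per_day / SECONDS_PER_DAY


def years_until_outgrown(current_tb: float, capacity_tb: float,
                         yearly_growth: float) -> float:
    """Years until data growing by `yearly_growth` per year
    (1.0 means +100%, i.e. doubling) no longer fits into `capacity_tb`."""
    if current_tb <= 0 or capacity_tb <= 0 or yearly_growth <= 0:
        raise ValueError("all arguments must be positive")
    if current_tb >= capacity_tb:
        return 0.0
    return math.log(capacity_tb / current_tb) / math.log(1 + yearly_growth)


# Figure from the text: 200 million requests per day.
print(f"{average_requests_per_second(200e6):.0f} requests/s on average")

# Hypothetical: 2 TB of data today, 32 TB of SSD in one server,
# data volume doubling every year.
print(f"{years_until_outgrown(2, 32, 1.0):.1f} years of headroom")
```

The model deliberately ignores that the capacity of a single machine also grows as storage gets cheaper, so the real headroom is likely larger than the estimate.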

First understand your problem.

This idea is not mine, and it is far from new. But perhaps in this article's rendition, together with the checklist above, it will reach your heart. If not, take a look at materials such as Hammock Driven Development, How to Solve It, or The Art of Doing Science and Engineering: all of them make the same call to think and to try to understand the problem before rushing to solve it. G. Polya's book contains this line:

It is foolish to answer a question that you do not understand. It is sad to work for an end that you do not desire.

Source: https://habr.com/ru/post/330708/
