MIT develops a photonic chip for deep learning

Deep learning systems, which use artificial neural networks to imitate the way knowledge is accumulated, have learned to absorb information much faster and more efficiently. A joint team of researchers from the Massachusetts Institute of Technology (MIT) and elsewhere has developed a new approach to such learning that uses light instead of electricity. The results were published on June 12 in the journal Nature Photonics by MIT research fellow Yichen Shen, graduate student Nicholas Harris, and professors Marin Soljacic and Dirk Englund.

/ photo by Bill Benzon CC

Traditional computer architectures are not very efficient at the computations that matter most for neural networks: repeated matrix multiplications. The MIT team has come up with an efficient way to perform these operations optically. Moreover, according to Professor Soljacic, a chip tuned this way has practical applications, unlike other photonic concepts.

For example, similar work was carried out earlier by a team of scientists led by Alexander Tait at Princeton University in New Jersey. Those researchers created the first photonic neural network, in which the neurons are represented by optical waveguides.

According to the scientists, the MIT design can perform matrix multiplication almost instantly and with little energy. Some transformations of light, such as focusing with a lens, can be regarded as computations. The new photonic chip approach uses many light beams arranged so that their waves interact with one another. This produces interference patterns that convey the result of the intended operation.
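The idea that interference can carry a result can be illustrated with a minimal sketch. It assumes two idealized, perfectly coherent beams described by complex amplitudes; the function name and parameters are hypothetical and are not taken from the MIT work.

```python
import cmath
import math


def combined_intensity(amplitude_a, amplitude_b, phase_difference):
    """Intensity of two superposed coherent beams (idealized model).

    Each beam is a complex amplitude; the second beam is shifted by
    `phase_difference` radians relative to the first.
    """
    wave_a = complex(amplitude_a)
    wave_b = amplitude_b * cmath.exp(1j * phase_difference)
    # Amplitudes add first; the detector sees the squared magnitude.
    return abs(wave_a + wave_b) ** 2


if __name__ == "__main__":
    for label, phase in [("in phase", 0.0),
                         ("quarter cycle", math.pi / 2),
                         ("opposite phase", math.pi)]:
        print(label, round(combined_intensity(1.0, 1.0, phase), 6))
```

In this model the measured brightness depends on the relative phase of the beams, so setting the phases determines what the output encodes.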

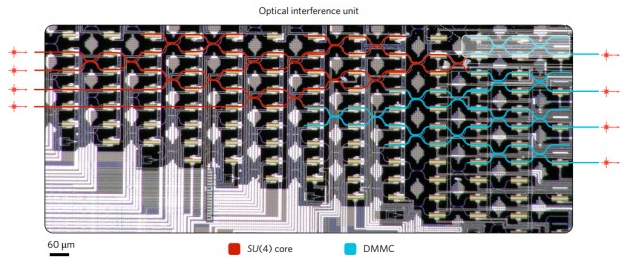

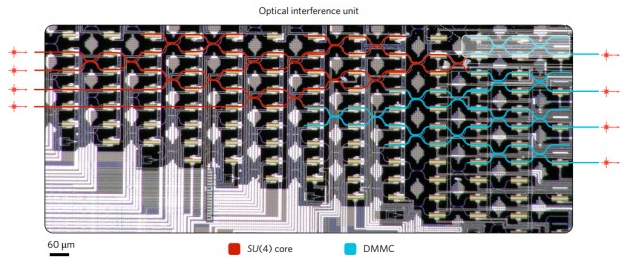

Diagram of the photonic chip for deep learning

Yichen Shen says that chips with this architecture will be able to carry out the calculations used in typical artificial intelligence algorithms much faster, while using less than one-thousandth as much energy per operation as conventional electronic chips.

The scientists call the result a “programmable nanophotonic processor”. It contains an array of waveguides whose interconnections can be changed as needed for a specific calculation. Nicholas Harris explains that it can be configured for any matrix operation.

To perform a calculation, a conventional computer encodes the information into several light beams, which pass through a series of nodes. At each node, an optical element called a Mach-Zehnder interferometer changes the properties of the beams passing through it; this is the equivalent of matrix multiplication. The light then passes through a series of attenuators that slightly reduce its intensity.
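As a rough illustration of why an interferometer acts like a matrix multiplication, the sketch below models one Mach-Zehnder interferometer as a 2x2 matrix applied to the complex amplitudes of two beams, followed by an attenuation step. It assumes a common textbook model (two ideal 50:50 beamsplitters plus two phase shifters, here called `theta` and `phi`); the function names are hypothetical and the code is not the authors' implementation.

```python
import cmath
import math


def matmul2(a, b):
    """Multiply two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def mzi(theta, phi):
    """Transfer matrix of an idealized Mach-Zehnder interferometer.

    Light passes an input phase shifter (phi), a 50:50 beamsplitter,
    an internal phase shifter (theta), and a second 50:50 beamsplitter.
    """
    s = 1 / math.sqrt(2)
    splitter = [[s, 1j * s], [1j * s, s]]
    inner = [[cmath.exp(1j * theta), 0], [0, 1]]
    outer = [[cmath.exp(1j * phi), 0], [0, 1]]
    return matmul2(splitter, matmul2(inner, matmul2(splitter, outer)))


def apply(matrix, amplitudes):
    """Matrix-vector product: the beams leaving the interferometer."""
    return [sum(matrix[i][k] * amplitudes[k] for k in range(2))
            for i in range(2)]


def attenuate(amplitudes, transmissions):
    """Scale each beam so its power drops to the given fraction."""
    return [a * math.sqrt(t) for a, t in zip(amplitudes, transmissions)]


def power(amplitudes):
    """Total optical power carried by the beams."""
    return sum(abs(a) ** 2 for a in amplitudes)


if __name__ == "__main__":
    beams = [1.0, 0.0]                      # all light enters the top port
    out = apply(mzi(0.7, 1.3), beams)       # phases chosen arbitrarily
    print("power after interferometer:", round(power(out), 6))
    print("power after attenuators:",
          round(power(attenuate(out, [0.9, 0.9])), 6))
```

In this idealized model the interferometer only redistributes light between its two outputs, so total power is conserved until the attenuators reduce it.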

These processes make it possible to train the optical neural network. However, keeping it in the trained state still requires a small amount of energy. The authors say they have a solution that would let the chip hold its state without consuming energy. If it works, the only consumers of energy will be the laser, which is the source of the light beams, and the computer that encodes the information.

To demonstrate how the system works, the team used the photonic chip to recognize four basic vowel sounds. Even in this rudimentary version, the system achieved an accuracy of 77%, compared to 90% for conventional models. Soljacic sees no obstacles to improving the system.

Dirk Englund believes that MIT's programmable nanophotonic processor could also be used in signal processing for data transmission. In his view, the group's design can convert a signal into digital form faster than competing approaches, because light is inherently an analog medium.

The team names data centers and security systems, as well as unmanned vehicles, as possible application areas for the technology. In any case, bringing it to mass adoption will take much more effort and time than has already gone into its initial development.

John Timmer, science editor at Ars Technica, argues that the concept has a number of significant limitations. The most important is the size of optical chips: for many commercial tasks they must either be made large or have the light pass through them several times. The latter case requires a suitable algorithm for the calculations. As a result, most of the claimed benefits may be lost for more complex operations. But if the researchers can overcome these obstacles and improve the accuracy of training, the system, according to Timmer, will be able to support deep learning using 100,000 times less energy than traditional GPUs.
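To make the multiple-pass option concrete, here is a minimal sketch of one possible scheme: a large matrix-vector product is split into blocks small enough for a fixed-size chip, and the partial results are summed. The function `chip_matvec` is a hypothetical stand-in for a single optical pass, and the blocking scheme is an assumption chosen for illustration, not something described by Timmer or the MIT team.

```python
def chip_matvec(block, segment):
    """Stand-in for one pass through a small chip (plain arithmetic here)."""
    return [sum(w * x for w, x in zip(row, segment)) for row in block]


def tiled_matvec(matrix, vector, chip_size):
    """Compute matrix @ vector using blocks of at most chip_size x chip_size.

    Returns the result and the number of passes through the chip.
    """
    rows, cols = len(matrix), len(vector)
    result = [0.0] * rows
    passes = 0
    for r in range(0, rows, chip_size):
        for c in range(0, cols, chip_size):
            block = [row[c:c + chip_size] for row in matrix[r:r + chip_size]]
            partial = chip_matvec(block, vector[c:c + chip_size])
            # Partial results from each pass are accumulated outside the chip.
            for i, value in enumerate(partial):
                result[r + i] += value
            passes += 1
    return result, passes


if __name__ == "__main__":
    weights = [[1, 2, 3, 4],
               [5, 6, 7, 8],
               [9, 10, 11, 12],
               [13, 14, 15, 16]]
    inputs = [1, 0, -1, 2]
    print(tiled_matvec(weights, inputs, chip_size=2))
```

In this scheme the number of passes grows with the square of the ratio between matrix size and chip size, and every pass requires re-encoding the inputs and reading out partial results, which is one way the claimed savings could shrink for larger problems.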

P.S. A few other interesting posts from the First Corporate IaaS Blog:

- Why a disk speed test on a laptop can give better results than on an industrial server in the cloud

- What to look for when choosing a cloud PCI DSS hosting service

- Equipment manufacturers and IaaS: the race for the cloud trend

- Choosing between private, public and hybrid cloud

- How VMs consume resources in a virtual environment

Source: https://habr.com/ru/post/330574/
