Brocade Ethernet Switches

Brocade, best known as a manufacturer of storage network switches, also produces Ethernet switches. We got our hands on a set of these switches (ICX and VDX), so I want to give a short review and point out the things that seemed interesting. I will try to avoid marketing language and numbers; for those interested, I will refer to the manufacturer's website. Let's start :)

ICX Switches

These switches are designed for use in the enterprise network (Enterprise Campus). The ICX family consists of access lines (7150/7250/7450), aggregation (7450), and aggregation / kernel (7750).

The ICX 7150 is the youngest and latest addition to the ICX family. In addition to the standard models for 24 and 48 ports, this line has a compact 12-port switch (7150-C12P) and a switch with 802.3bz Multigigabit Ethernet ports (7150-48ZP). Models on 12/24/48 ports are passively cooled, while models on 24/48 ports with PoE can operate without the use of active cooling.

')

The ICX 7250 is a standard model for 24 and 48 ports. The main difference is 8 uplink / stacking ports.

The ICX 7450 is a standard model for 24 and 48 ports. The switches do not have uplink / stacking ports built in but have 3 slots for modules of additional interfaces (1GE, 10GE, 40GE). Also in this line is a switch with 802.3bz Multigigabit Ethernet ports (7450-32ZP) and 7450-48F aggregation switch (48x1GE SFP).

ICX 7750 - aggregation / core. Available in 3 models (48x10BASE-T, 48x1 / 10GE SFP / SFP +, 26x40GE QSFP), all support the installation of an additional module on 6x40GE QSFP ports. All 40GE ports support breakout mode (dividing a 40GE port into 4 10GE ports).

The switches of the ICX line support a standard set of technologies for such positioning, there are no obvious gaps in the functionality. In the 7150/7250/7450 series, some models support Power over HDBaseT in addition to PoE + (up to 90 watts per port).

Software for managing and monitoring switches - Brocade Network Advisor (runs under Windows or Linux, on a separate server or as a virtual machine).

What I want to note of the features:

And now let's see what can be collected on the basis of ICX. In addition to the usual two / three-tier architecture (on standalone + Multi-Chassis Trunking switches), two solutions are available for Enterprise Campus.

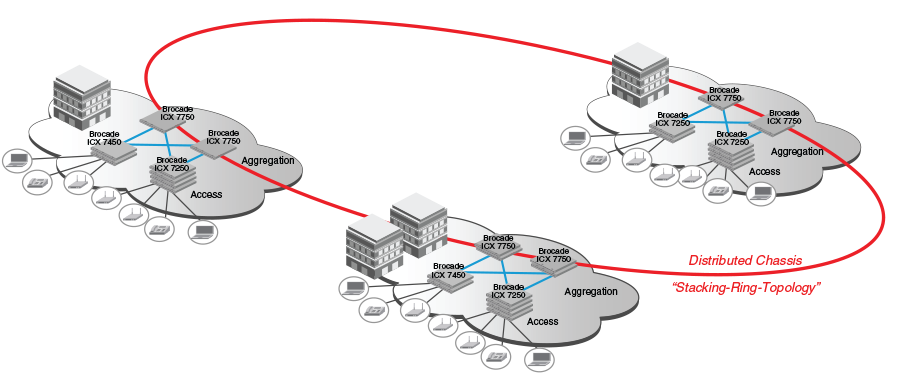

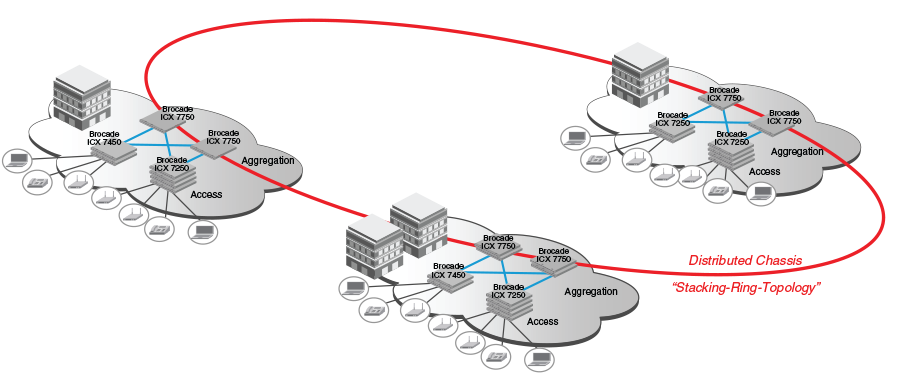

The first, with the use of stacking and, optionally, taking into account the long distance stacking, can look like this:

The scheme, I think, does not require comments.

The second solution is called Campus Fabric. In fact, this is the implementation of the 802.1BR standard (Bridge Port Extension) on ICX switches, where the 7750 acts as a single point of control (Control Bridge - CB), and the other switches as a remote line card (Port Extenders - PE).

What advantages does such an architecture give?

But there are controversial points:

On scaling / backup I will note the following things:

Whether or not to build the entire network on such a decision is a moot point, but as an access solution, the option has the right to life.

VDX Switches

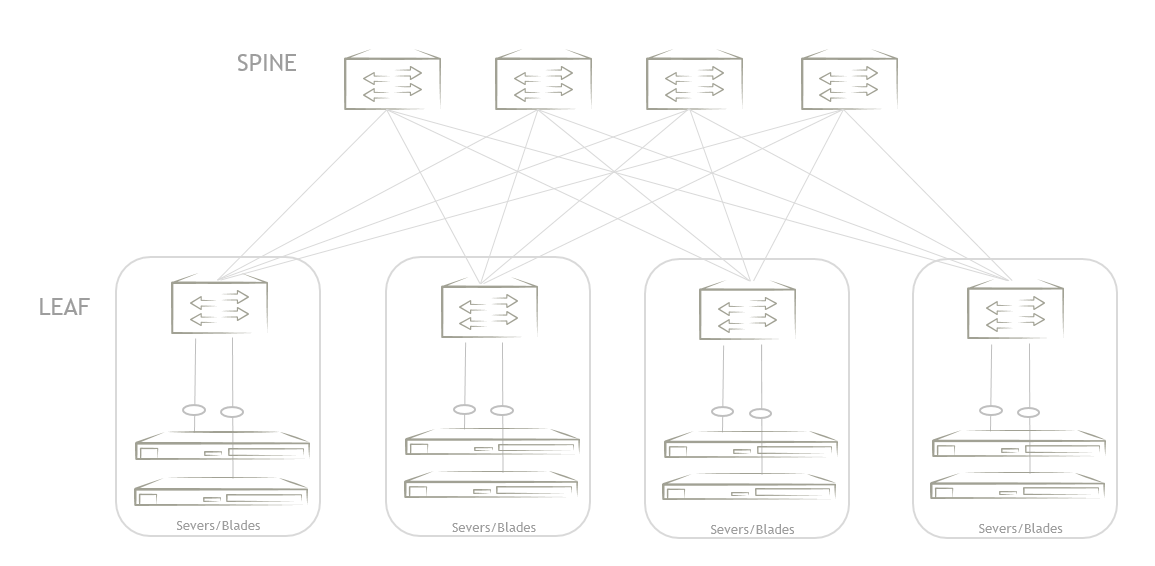

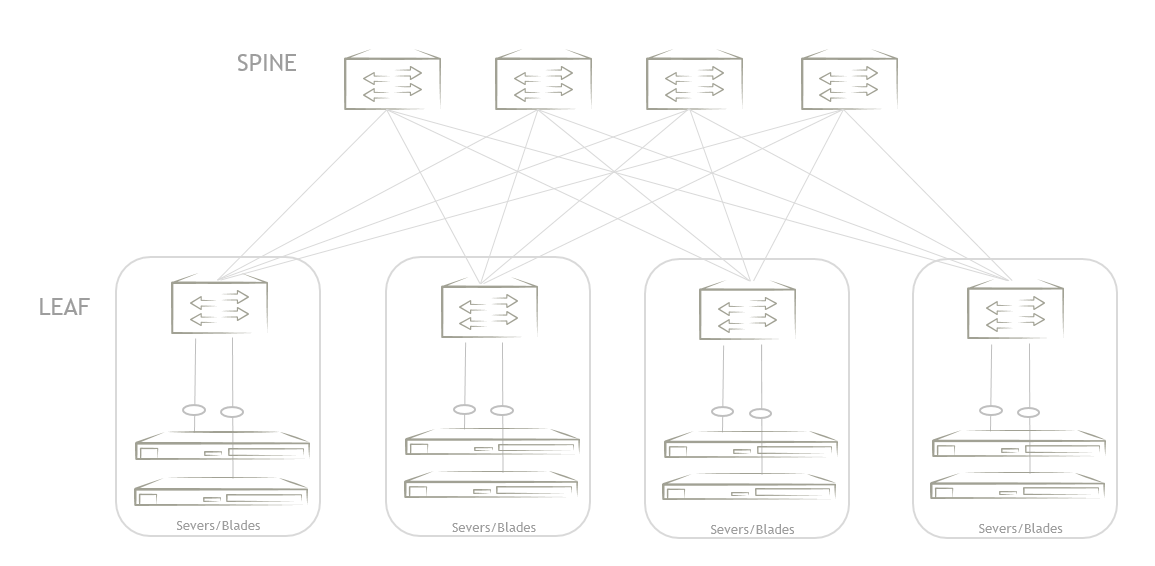

Let me remind you what the folded Clos network looks like:

Leaf switches provide server connectivity, Spine switches provide reserved high-speed connectivity for leaf switches. The picture shows the 3-stage (leaf-spine-leaf) version of such a network, but the Brocade documentation also considers the 5-stage (leaf-spine-superspine-spine-leaf) option.

In the VDX family, I would like to highlight two lines: 6740 and 6940.

VDX 6740 - leaf switches with 48x10GE ports (10GBASE-T or SFP +) + 4x40GE QSFP.

VDX 6940 - leaf / spine switches. 6940-36Q - 36x40GE QSFP ports. 6940-144S - 96x10GE SFP + and 12x40GE QSFP ports (3 40GE ports can be combined into one 100GE port and use QSFP28 transceivers).

These switches are positioned for use in the data center, and have the appropriate characteristics:

I will not deny that the data center network at speeds of 10GE / 40GE is a bit outdated, but Brocade’s 25GE switches in another family (SLX), and they didn’t come to us.

From the features of VDX switches, I note the following:

Architecture

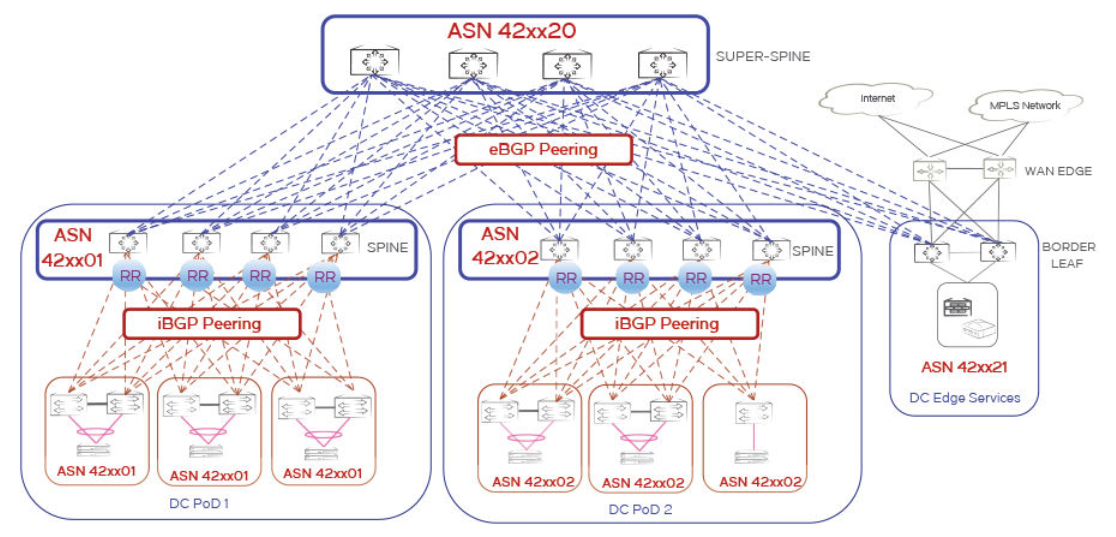

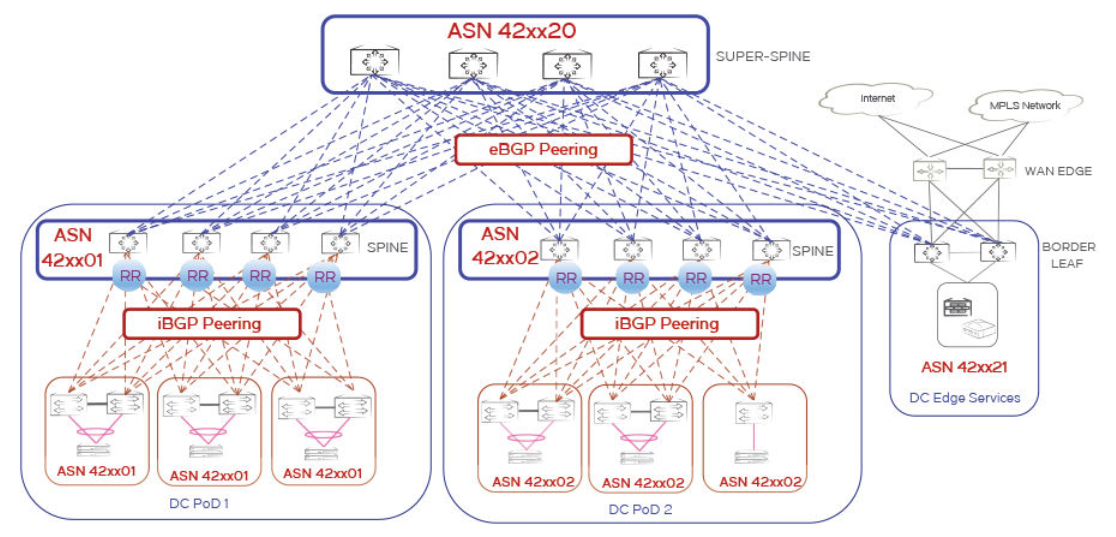

In addition to designs based on VCS factory, Brocade, of course, offers designs based on IP factory. This is a Clos network (3-stage or 5-stage), where the L2 / L3 border is located on leaf switches, and leaf-spine and ECMP connectivity provide dynamic routing protocol (usually BGP).

IP-factory with pervasive eBGP (use eBGP for all types of connectivity).

IP factory with iBGP (eBGP is used only for super-spine <-> spine and super-spine <-> edge leaf connectivity, iBGP is used within DC POD for spine <-> leaf).

In both cases, the VCS factory of two switches (vLAG pair) is used to connect servers to two leaf switches (multihoming).

And do not forget about VXLAN. Brocade supports virtualization with VXLAN as a data-plane, while EVPN can be used as a control plane. So, we take the designs of the IP-factory, add EVPN VXLAN and get the options:

with eBGP

and with iBGP

For multihoming, the same mechanism is used as in the IP factory.

Brocade does not insist on using iBGP or eBGP, both are equivalent. Brocade supports both Integrated Routing and Bridging for EVPN VXLAN: asymmetric and symmetric. Of the EVPN VXLAN implementations that I saw on the VDX is one of the most comprehensive.

Of course, besides VDX there are many other Brocade products for data centers, but they are not covered in this article.

In conclusion, I would like to add that there were no significant complaints about the quality of the equipment and software. The CLI, like many other manufacturers, is very similar to Cisco, in some places it is even more convenient (and we know that part of Cisco's CLI is far from ideal). The documentation for each product is collected in one place (Document Library), and this documentation is written very correctly. Validated Design is generally a pleasure to read. Well, let me remind you once again about the documents “Features and Standards Support Matrix” - it would be nice for someone to adopt this approach. Developed and active community, which is not every manufacturer. Complexity.

And a little about the current status of Brocade.

Broadcom buys the entire Brocade business, while Fiber Channel solutions (i.e. everything for the SAN) remain in the Broadcom solution portfolio, and the remaining Brocade products will be sold to other companies. The Broadcom-Brocade transaction will end in fiscal 3 quarter (ending July 30, 2017).

What will happen to the rest of the products:

ICX Switches

These switches are designed for enterprise networks (Enterprise Campus). The ICX family consists of access switches (7150/7250/7450), aggregation switches (7450), and aggregation/core switches (7750).

The ICX 7150 is the newest addition to the ICX family. In addition to the standard 24- and 48-port models, this line includes a compact 12-port switch (7150-C12P) and a switch with 802.3bz Multigigabit Ethernet ports (7150-48ZP). The 12/24/48-port models are passively cooled, and the 24/48-port PoE models can also operate without active cooling.

The ICX 7250 comes in standard 24- and 48-port models. Its main distinguishing feature is eight uplink/stacking ports.

The ICX 7450 comes in standard 24- and 48-port models. These switches have no built-in uplink/stacking ports but have three slots for additional interface modules (1GE, 10GE, 40GE). This line also includes a switch with 802.3bz Multigigabit Ethernet ports (7450-32ZP) and the 7450-48F aggregation switch (48x1GE SFP).

ICX 7750 - aggregation/core. Available in three models (48x10GBASE-T, 48x1/10GE SFP/SFP+, 26x40GE QSFP), all of which accept an additional module with 6x40GE QSFP ports. All 40GE ports support breakout mode (splitting a 40GE port into four 10GE ports).

The ICX switches support the standard set of technologies for this positioning, with no obvious gaps in functionality. In the 7150/7250/7450 series, some models support Power over HDBaseT (up to 90 watts per port) in addition to PoE+.

The software for managing and monitoring the switches is Brocade Network Advisor (it runs on Windows or Linux, on a separate server or as a virtual machine).

Features I want to highlight:

- Stacking. All ICX switches support stacking via the uplink/stacking ports. A stack can only be built within a single product line (for example, a 7250 cannot be stacked with a 7450). The maximum number of switches in a stack is 12. Stacking uses ordinary 10GE or 40GE ports, and the maximum throughput is 480 Gbit/s. The maximum stack link length is 10 km (long-distance stacking). Both ring and linear topologies are supported.

- For those who do not consider stacking a fault-tolerance option, the 7750 offers an alternative: Multi-Chassis Trunking (aka MC-LAG). That is, a pair of switches is assembled into a cluster, which the devices connected to it see as one logical device.

- Licensing. For the 7150/7250/7450, the main licenses are Port on Demand (a license for 10GE ports) and a license for L3 functionality (dynamic routing protocols, PIM, PBR, VRRP, etc.). The 7750 has only one license, L3, which does not require activation.

- In terms of OS images, there are two types: a switch image and a router image. Each switch has two flash partitions (primary and secondary) and by default contains both image types.

- The combination of OS image and L3 license gives the following feature sets: Layer 2 (switch image without a license), basic Layer 3 (router image without a license), and full Layer 3 (router image with a license). If all you need is static routing, for example, basic Layer 3 is enough.

- Supported features are conveniently listed in the Features and Standards Support Matrix document. I would even say that this is an exemplary document.

- A built-in time domain reflectometer function, which Brocade calls Virtual Cable Test.

- The 7150 is a new line, and some items in its datasheet are still marked as not yet available. A software release planned for July is expected to add, among other things, stacking and L3 functionality.
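
The image/license combinations from the list above can be written down as a small lookup. This is only an illustrative sketch: the names `Image` and `feature_set` are made up here, and the behavior of a switch image on a unit that also holds an L3 license is an assumption, since the article only describes the switch image without a license.

```python
from enum import Enum


class Image(Enum):
    SWITCH = "switch"
    ROUTER = "router"


def feature_set(image: Image, has_l3_license: bool) -> str:
    """Map an OS image type and L3 license state to the resulting feature set."""
    if image is Image.SWITCH:
        # Assumption: the L3 license has no effect while the switch image runs.
        return "Layer 2"
    # Router image: the license decides between basic and full Layer 3.
    return "full Layer 3" if has_l3_license else "basic Layer 3"


if __name__ == "__main__":
    for image in Image:
        for licensed in (False, True):
            print(image.value, licensed, "->", feature_set(image, licensed))
```

For the static-routing case mentioned above, `feature_set(Image.ROUTER, False)` returns `"basic Layer 3"`, so no license would be needed.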

Now let's see what can be built with ICX. In addition to the usual two- or three-tier architecture (on standalone switches plus Multi-Chassis Trunking), two solutions are available for the Enterprise Campus.

The first, which uses stacking and, optionally, long-distance stacking, can look like this:

The diagram, I think, needs no comment.

The second solution is called Campus Fabric. In essence, it is an implementation of the 802.1BR standard (Bridge Port Extension) on ICX switches, where the 7750 acts as the single point of control (Control Bridge, CB) and the other switches act as remote line cards (Port Extenders, PE).

What advantages does this architecture offer?

- It is a distributed chassis whose port capacity is easy to expand.

- All links are active.

- Centralized management plane and control plane.

- L3 licenses, if required, are needed only on the Control Bridge, which saves on licensing.

But there are debatable points:

- The centralized control plane can also be seen as a disadvantage, since the entire fabric is a single failure domain.

- All traffic passes through the fabric core (the Control Bridge). The exceptions are multicast and broadcast replication, which are performed locally on the Port Extender.

- LAG is not supported on Port Extender access ports.

On scaling and redundancy, I will note the following:

- The 7750-based Control Bridge is a stack of up to 4 switches, and the recommended stack topology is a ring. We use at least 2 switches, since Control Bridge redundancy is a must.

- The 7450 and 7250 can be used as Port Extenders. 7150 support will come later.

- PoD licenses are still needed.

- The maximum number of Port Extenders is 36. Each member of a switch stack counts as a separate switch.

- Port Extenders can be connected in a chain of up to 6 devices.
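
The limits listed above lend themselves to a quick sanity check of a planned Campus Fabric. The sketch below is hypothetical (the `FabricPlan` structure and `check_plan` function are invented for illustration and are not part of any Brocade tool); it only encodes the numbers from this list, counting every Port Extender unit individually.

```python
from dataclasses import dataclass, field
from typing import List

MAX_CB_STACK = 4          # Control Bridge: stack of up to 4 ICX 7750
MIN_CB_STACK = 2          # at least 2 for Control Bridge redundancy
MAX_PORT_EXTENDERS = 36   # every unit counts, including stack members
MAX_PE_CHAIN = 6          # Port Extenders connected in a chain
PE_MODELS = {"7250", "7450"}  # 7150 support is announced for later


@dataclass
class FabricPlan:
    control_bridge_units: int
    # Each chain is an ordered list of Port Extender models.
    pe_chains: List[List[str]] = field(default_factory=list)


def check_plan(plan: FabricPlan) -> List[str]:
    """Return a list of human-readable problems; empty means the plan fits."""
    problems = []
    if plan.control_bridge_units > MAX_CB_STACK:
        problems.append("Control Bridge stack exceeds 4 switches")
    elif plan.control_bridge_units < MIN_CB_STACK:
        problems.append("Control Bridge has no redundancy (fewer than 2 switches)")

    total_pes = sum(len(chain) for chain in plan.pe_chains)
    if total_pes > MAX_PORT_EXTENDERS:
        problems.append(f"{total_pes} Port Extenders exceed the limit of 36")

    for index, chain in enumerate(plan.pe_chains):
        if len(chain) > MAX_PE_CHAIN:
            problems.append(f"chain {index} has {len(chain)} devices (max 6)")
        unsupported = sorted(set(chain) - PE_MODELS)
        if unsupported:
            problems.append(f"chain {index} uses unsupported models: {unsupported}")
    return problems


if __name__ == "__main__":
    plan = FabricPlan(control_bridge_units=2, pe_chains=[["7450", "7250"], ["7250"] * 6])
    print(check_plan(plan) or "plan fits the documented limits")
```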

Whether to build an entire network on this solution is debatable, but as an access-layer solution it is a viable option.

VDX Switches

Let me remind you what the folded Clos network looks like:

Leaf switches provide server connectivity, while spine switches provide redundant high-speed connectivity between leaf switches. The picture shows the 3-stage (leaf-spine-leaf) version of such a network, but the Brocade documentation also covers the 5-stage (leaf-spine-superspine-spine-leaf) option.
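
To make the 3-stage case concrete, here is a minimal sketch of the arithmetic, assuming the textbook folded Clos wiring in which every leaf has exactly one link to every spine (the function names are illustrative only).

```python
def leaf_spine_links(leaves: int, spines: int) -> int:
    """Total leaf-to-spine links when every leaf connects once to every spine."""
    return leaves * spines


def leaf_to_leaf_paths(spines: int) -> int:
    """Distinct two-hop paths between any two leaves: one through each spine."""
    return spines


if __name__ == "__main__":
    leaves, spines = 8, 4
    print("links:", leaf_spine_links(leaves, spines))
    print("paths between two leaves:", leaf_to_leaf_paths(spines))
```

Under that assumption, losing one spine removes one of the parallel paths between each pair of leaves but leaves the others usable, which is where the redundancy comes from.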

In the VDX family, I would like to highlight two lines: 6740 and 6940.

VDX 6740 - leaf switches with 48x10GE ports (10GBASE-T or SFP+) plus 4x40GE QSFP.

VDX 6940 - leaf/spine switches. 6940-36Q: 36x40GE QSFP ports. 6940-144S: 96x10GE SFP+ and 12x40GE QSFP ports (three 40GE ports can be combined into one 100GE port that uses a QSFP28 transceiver).

These switches are positioned for the data center and have the corresponding characteristics:
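
As a rough illustration of what these port counts mean for a leaf, the sketch below computes the oversubscription ratio. It assumes that all 10GE ports face servers and all 40GE ports are used as uplinks to the spine, which is a design choice and not something the port list itself dictates.

```python
def oversubscription(down_ports: int, down_gbps: int, up_ports: int, up_gbps: int) -> float:
    """Ratio of server-facing bandwidth to uplink bandwidth on a leaf switch."""
    return (down_ports * down_gbps) / (up_ports * up_gbps)


if __name__ == "__main__":
    # VDX 6740: 48x10GE down, 4x40GE up
    print("VDX 6740:", oversubscription(48, 10, 4, 40))       # 3.0
    # VDX 6940-144S: 96x10GE down, 12x40GE up
    print("VDX 6940-144S:", oversubscription(96, 10, 12, 40))  # 2.0
```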

- All 40GE ports support breakout mode (dividing a 40GE port into 4 10GE ports).

- Both front-to-back and back-to-front cooling are supported.

- Two power supplies.

- Supports FCoE and DCB.

- Automation support, integration with OpenStack and VMware products.

- VXLAN support.

I will not deny that a data center network at 10GE/40GE speeds is a bit outdated, but Brocade's 25GE switches belong to another family (SLX), and we did not get any of those.

Among the features of VDX switches, I would note the following:

- Licensing. There are two types of licenses: Port on Demand (for 10GE and 40GE ports) and a license for FCoE. PoD licenses are automatically "attached" to active ports. That is, you take the switch out of the box, turn it on, and you do not need to check the documentation for which group of ports a license activates; you can simply install transceivers. To return a license to the free license pool, you need to run several commands in the CLI.

- The switches support VCS Fabric, a TRILL-based Ethernet fabric (up to 48 switches). "TRILL-based" because only the data plane is taken from TRILL, while the control plane is Brocade's own. It is undemanding about topology and easy to configure and maintain.

It is unusual that VDX switches are always in Logical Chassis mode, i.e. in effect every switch is a VCS fabric of one switch. And because the configuration is saved automatically within a VCS fabric, you will not find an equivalent of copy run startup on VDX switches.

VCS is base functionality, but building a VCS fabric out of several switches is optional.

- Two flash partitions, but, unlike on the ICX, here they serve fault tolerance and ease of software updates. OS images are not split into variants.

- Supported features are still listed in the Features and Standards Support Matrix document. The approach to writing documentation in this regard is no different from ICX.

- Since this is Brocade, it is no surprise that some SAN switch technologies can be found on the Ethernet switches. Examples are MAPS (advanced monitoring) and part of the VCS fabric technology.

- All branded DAC (copper direct attach) at 10GE and 40GE are active.

Architecture

In addition to designs based on a VCS fabric, Brocade, of course, offers designs based on an IP fabric. This is a Clos network (3-stage or 5-stage) in which the L2/L3 boundary sits on the leaf switches, and a dynamic routing protocol (usually BGP) provides leaf-spine connectivity and ECMP.

IP fabric with pervasive eBGP (eBGP is used for all types of connectivity).

IP fabric with iBGP (eBGP is used only for super-spine <-> spine and super-spine <-> edge leaf connectivity; iBGP is used within a DC POD for spine <-> leaf).

In both cases, a VCS fabric of two switches (a vLAG pair) is used to connect servers to two leaf switches (multihoming).
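
The difference between the two BGP designs comes down to which session type runs on which kind of link. The sketch below restates that rule in code; the design labels (`"pervasive-ebgp"`, `"ibgp-pod"`) and role names are made up for this example and do not correspond to any Brocade configuration keyword.

```python
SPINE_LEAF = frozenset({"spine", "leaf"})
EBGP_LINKS_IN_IBGP_DESIGN = (
    frozenset({"super-spine", "spine"}),
    frozenset({"super-spine", "edge-leaf"}),
)


def bgp_session_type(design: str, role_a: str, role_b: str) -> str:
    """Return 'eBGP' or 'iBGP' for a link between two switch roles."""
    if design == "pervasive-ebgp":
        # eBGP is used for all types of connectivity.
        return "eBGP"
    if design == "ibgp-pod":
        link = frozenset({role_a, role_b})
        if link == SPINE_LEAF:
            return "iBGP"  # inside a DC POD
        if link in EBGP_LINKS_IN_IBGP_DESIGN:
            return "eBGP"  # between the POD or edge and the super-spine
        raise ValueError(f"link {role_a} <-> {role_b} is not described for this design")
    raise ValueError(f"unknown design: {design}")


if __name__ == "__main__":
    print(bgp_session_type("ibgp-pod", "leaf", "spine"))
    print(bgp_session_type("ibgp-pod", "spine", "super-spine"))
    print(bgp_session_type("pervasive-ebgp", "leaf", "spine"))
```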

And let's not forget VXLAN. Brocade supports network virtualization with VXLAN as the data plane, while EVPN can be used as the control plane. So we take the IP fabric designs, add EVPN VXLAN, and get these options:

with eBGP

and with iBGP

For multihoming, the same mechanism is used as in the IP fabric.

Brocade does not insist on either iBGP or eBGP; both are treated as equivalent. Brocade supports both Integrated Routing and Bridging modes for EVPN VXLAN: asymmetric and symmetric. Of the EVPN VXLAN implementations I have seen, the one on VDX is among the most complete.

Of course, besides VDX there are many other Brocade products for data centers, but they are not covered in this article.

In conclusion, I would like to add that we had no significant complaints about the quality of the hardware or software. The CLI, as with many other vendors, is very similar to Cisco's, and in some places it is even more convenient (and we know that parts of Cisco's CLI are far from ideal). The documentation for each product is collected in one place (the Document Library), and it is very well written. The Validated Design documents are a pleasure to read. And let me remind you once again about the Features and Standards Support Matrix documents: it would be nice if other vendors adopted this approach. There is also a well-developed and active community, which not every manufacturer can claim.

And a little about the current status of Brocade.

Broadcom is acquiring all of Brocade. The Fibre Channel solutions (i.e. everything for SAN) stay in Broadcom's portfolio, and the remaining Brocade products will be sold to other companies. The Broadcom-Brocade transaction is expected to close in the third fiscal quarter (ending July 30, 2017).

What will happen to the rest of the products:

- ARRIS is buying Ruckus Wireless and the ICX switch line (which will probably be sold as Ruckus ICX). It has been stated that Ruckus Wireless will keep its structure and sales channel, i.e. the arrangement will resemble the Cisco-Meraki one. The Broadcom-ARRIS transaction should be completed by the end of August 2017.

- Extreme Networks is buying the data center solutions, namely VDX / MLX / SLX, Brocade Workflow Composer, and the Network Visibility & Analytics products. The Broadcom-Extreme deal is due to be completed by the end of September 2017.

- Pulse Secure is buying the vADC product family (virtual Traffic Manager and related products).

- AT&T is buying the Vyatta platform (Vyatta Network OS and related products).

Source: https://habr.com/ru/post/330500/
