A cheap all-flash array: fiction or reality?

2016 was declared the beginning of the flash era: flash is getting cheaper and more accessible. As SSD prices fall, manufacturers are releasing arrays whose logic was designed from the start to work only with fast flash drives. The cost of such solutions still confines them to the corporate segment of the market, keeping small companies from experiencing all the "charms" of the SSD era. And here Synology has made its move: meet the budget all-flash array, the Synology FS3017. What is it: a marketing ploy, or the first budget flash array?

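The reasons for the appearance of flash systems (All Flash Array, AFA) are quite simple: unified hybrid arrays cannot cope with the enormous performance of flash drives, and their logic is built around classic HDDs, which increases system response time and SSD wear. Hence the 3 main distinguishing features of an AFA:

- Increased controller performance, capable of fully loading the installed drives.

- An optimized architecture that reduces system response time.

- Intelligent data processing and write handling that reduces drive wear.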

That is why I was very surprised when my colleagues suggested that I test the Synology FS3017 array. Let's evaluate its parameters together and decide whether it fits the AFA category.


The FS3017 has a standard rack-mount design: 24 SFF drives fit in a 2U chassis, and drives from various manufacturers (Intel, OCZ, Seagate, etc.) are supported, as is typical of all Synology products. What surprised me most was the support for classic HDDs, which calls into question whether the FS3017 belongs to the AFA segment. On the positive side, both enterprise-class SAS drives and more affordable SATA drives are supported.

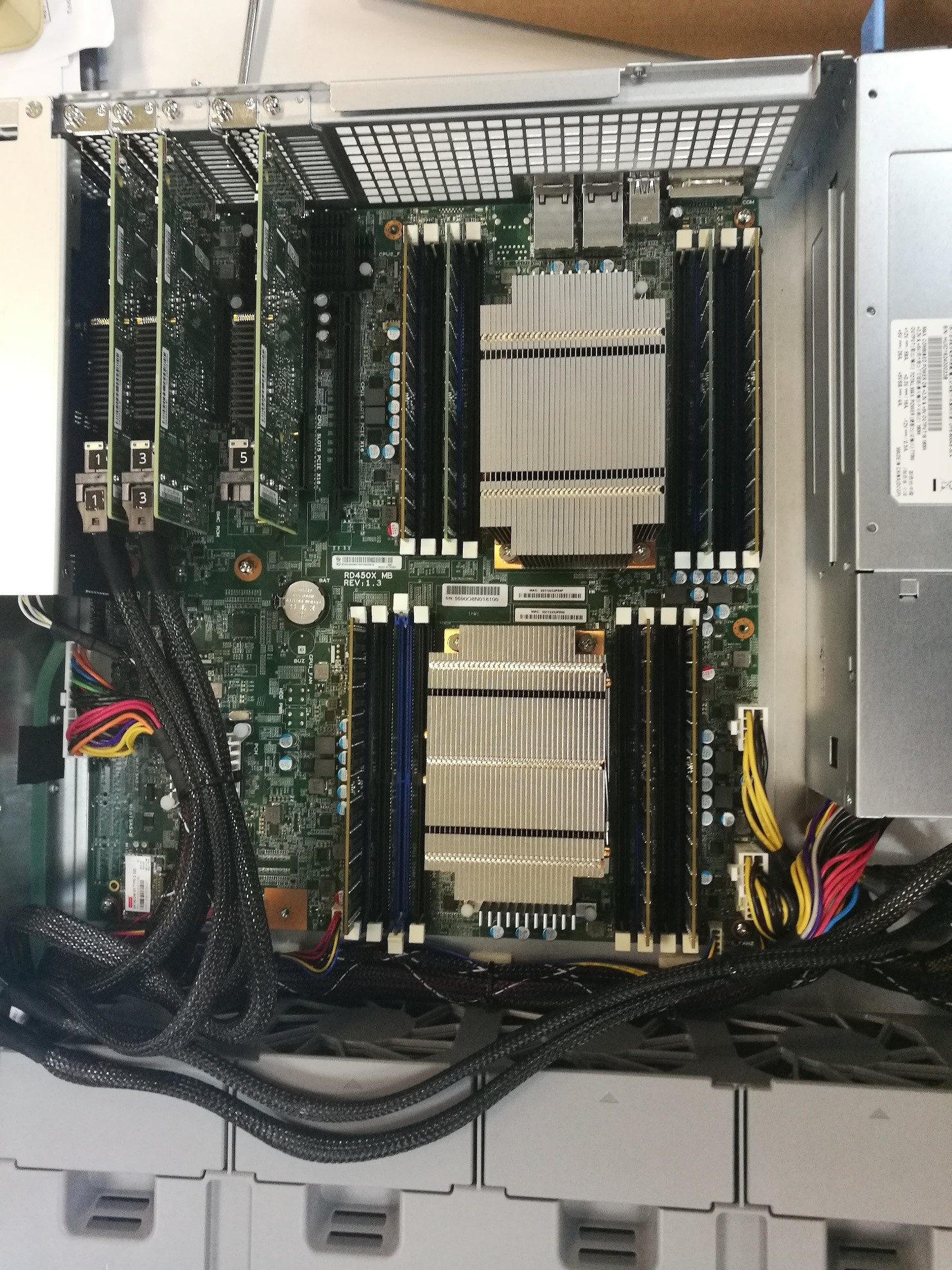

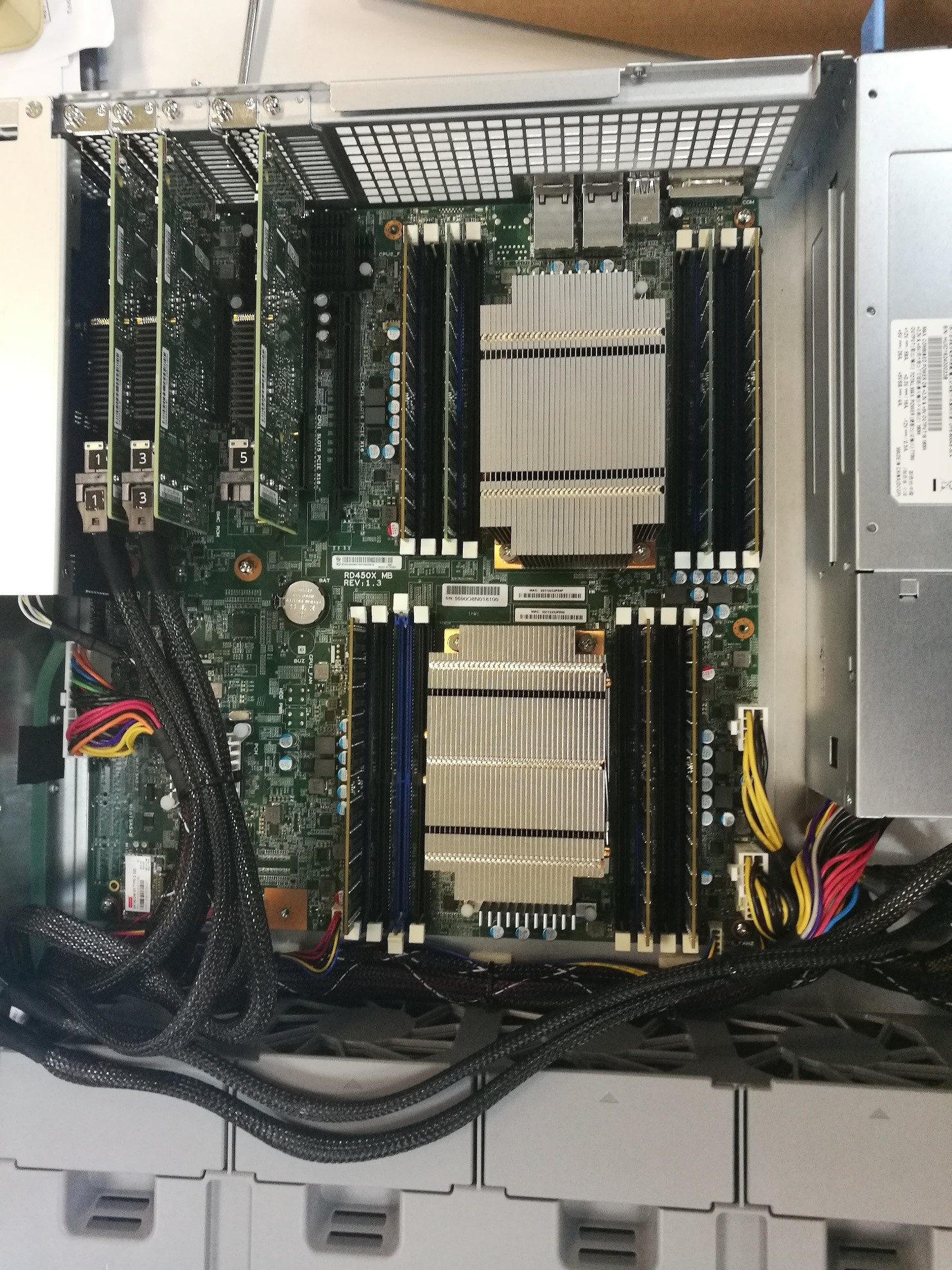

The system is built on 2 x Intel Xeon E5-2620 v3 processors (6 cores, 2.4 GHz). It ships with 64 GB of DDR4 memory, which can be expanded to an impressive 512 GB.

In the basic configuration, the network interface consists of two 10GbE ports with Link Aggregation support, used for both array management and data transfer. In addition, two PCIe slots (x16 and x8) accept expansion cards: a network card (1GbE / 10GbE / 25GbE / 40GbE) or a SAS card for connecting two additional disk shelves (RX1217sas with 12 LFF slots or RX2417sas with 24 SFF slots).

The operating system is Synology DiskStation Manager (DSM) version 6.1, familiar to all Synology users. I expected Synology to release a special firmware version for its AFA, but it is the same DSM as on the desktop and rack-mount models. So what makes this array an AFA? As it turned out, support for RAID F1.

According to the manufacturer, RAID F1 is designed to reduce the likelihood of data loss caused by the failure of an entire RAID group. Unlike HDDs, SSDs typically fail because their endurance is exhausted (cell wear), so it is theoretically possible for all drives in one RAID group to die at the same time. RAID F1 was developed to avoid this situation. It distributes data blocks across the drives like RAID 5, but writes the checksum (parity) block twice to one drive. As a result, that drive's endurance is consumed faster, and the probability of all drives in the group failing at once is reduced. After that drive wears out and is replaced, the system automatically selects the most worn remaining drive and begins writing the duplicate blocks to it.
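The wear argument can be illustrated with a toy model. The sketch below is not Synology's implementation: the exact RAID F1 on-disk layout is not given here, so the `f1_sketch` layout (one extra parity slot per rotation cycle on a designated "hot" drive) and all function names are assumptions made purely for illustration. It counts block writes per drive when every data block is updated once by a small write, where each update rewrites one data block and that stripe's parity block.

```python
from collections import Counter

def parity_cycle(n_drives, layout, hot_drive=0):
    """Return the parity-drive index for each stripe in one layout cycle."""
    cycle = list(range(n_drives))      # RAID 5: parity rotates evenly over all drives
    if layout == "f1_sketch":
        cycle.append(hot_drive)        # ASSUMPTION: one extra parity stripe on the hot drive
    elif layout != "raid5":
        raise ValueError(f"unknown layout: {layout}")
    return cycle

def small_write_wear(n_drives, layout, hot_drive=0):
    """Count block writes per drive when each data block is updated exactly once."""
    writes = Counter()
    for parity in parity_cycle(n_drives, layout, hot_drive):
        for drive in range(n_drives):
            if drive == parity:
                continue               # this drive holds parity in this stripe, not data
            writes[drive] += 1         # the updated data block
            writes[parity] += 1        # the matching parity update
    return [writes[d] for d in range(n_drives)]

print("raid5    :", small_write_wear(11, "raid5"))
print("f1_sketch:", small_write_wear(11, "f1_sketch"))
```

For 11 drives, the even RAID 5 rotation gives every drive the same 20 block writes, while the skewed layout gives the hot drive 30 writes against 21 for each of the others, so in this model it would exhaust its endurance first. The real ratio depends on the actual layout and on the workload.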

Despite all the manufacturers' claims, SSDs remain expensive, and deliberately shortening their lifespan is not the most reasonable solution from a business point of view. While the main players in the AFA market are introducing technologies that balance wear and reduce the amount of data written in order to extend SSD life, Synology has gone in the opposite direction. Only time will tell how justified this approach is and whether the market will accept it.

Otherwise, the FS array differs from the RS line (Synology's rack-mount arrays) in having a more powerful processor (compared with the Intel Xeon D family), a second socket, and RAID F1 support. The FS3017 has also inherited the standard usage scenarios: the list of recommended DSM packages consists of a cloud storage server, a note server, and a video recorder (DVR). There is no sign of any architecture or logic optimized for SSDs, which is confirmed by the presence of the SSD cache function. Since two of the three hallmarks of AFA systems are absent, it remains to check how fully the array can load our SSDs.

I was provided with a test system with memory expanded to 128 GB and 12 Intel SSD DC S3520 Series drives of 480 GB each. Decent performance and an affordable price, thanks to the SATA interface, make them the most interesting choice for anyone planning to build a budget flash system. I set aside 1 drive as a hot spare (a global hot spare across all shelves is supported) and combined the other 11 drives into RAID F1. On the resulting RAID group I created an iSCSI volume and presented it to a server running Windows Server 2016. Server specification: 2 x Intel Xeon E5-2695 v3 (2.3 GHz, 14 cores), 128 GB RAM, a 2-port 10GBASE-T adapter. For technical reasons I could use only one 10GBASE-T port, which clearly affected the array's performance.
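A quick capacity estimate for this group, assuming RAID F1 reserves one drive's worth of space for parity in the same way RAID 5 does (an assumption based on the RAID 5 comparison earlier; the function name is illustrative, and formatting and file system overhead are ignored):

```python
def usable_capacity_gb(n_drives: int, drive_gb: int, parity_drives: int = 1) -> int:
    """Raw usable capacity of a parity RAID group, before any formatting overhead."""
    if n_drives <= parity_drives:
        raise ValueError("a parity group needs more drives than parity drives")
    return (n_drives - parity_drives) * drive_gb

# 11 drives in the RAID group; the 12th drive is the hot spare and holds no data.
print(usable_capacity_gb(11, 480), "GB")
```

Under that assumption the 11-drive group offers about 4800 GB of raw usable space.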

I generated the load with Iometer, the most popular synthetic benchmark on the market. The goal was to test not the disks but the array itself, so I used a 4 KiB, 100% random pattern.

I decided to start with the “ideal” load of 100% reads, to get a look at the “beautiful” figure. The FS3017 acquitted itself well, delivering 156k IOPS.

At the same time, the utilization of the disks and of the system's computing resources did not reach 90%, which indicates that the data channel is the bottleneck. Then I moved on to the "combat" tests. I chose the most common load profile: 67% reads, 33% writes. The load was generated by 25 Workers (Iometer terminology) against 5 iSCSI targets.

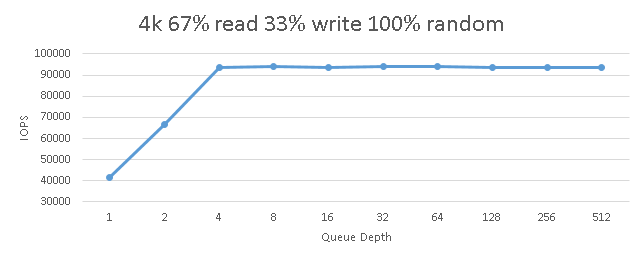

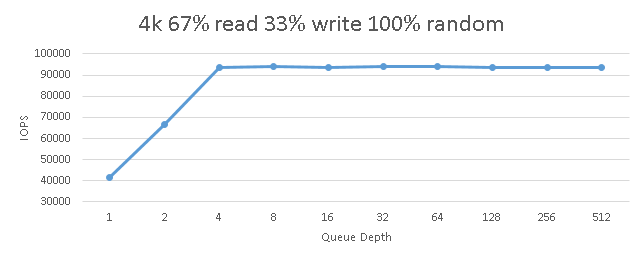

Based on personal experience, an AFA in this configuration with a similar load profile should deliver about 120-170k IOPS. After running cyclic tests with an increasing number of threads, I saw the following picture:

As the graph shows, the limit of ~94,000 IOPS was reached at just 4 I/O threads per Worker. There is no further degradation (which always occurs when the “ceiling” of the array itself is reached), so this value is limited by the channel capacity. This is supported by the utilization of the array's computing resources, which did not exceed 15%.
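As a rough cross-check, the measured IOPS can be converted into payload bandwidth. The sketch below counts only the 4 KiB payloads and ignores iSCSI, TCP, and Ethernet overhead, so it is a lower bound on the traffic actually carried by the link; the function name is illustrative.

```python
def payload_throughput(iops: float, block_bytes: int = 4096):
    """Return (MB/s, Gbit/s) of payload carried at the given IOPS and block size."""
    bytes_per_second = iops * block_bytes
    return bytes_per_second / 1e6, bytes_per_second * 8 / 1e9

for label, iops in [("100% read", 156_000), ("67/33 mixed", 94_000)]:
    mb_s, gbit_s = payload_throughput(iops)
    print(f"{label}: {mb_s:.0f} MB/s payload, {gbit_s:.2f} Gbit/s")
```

The payload alone works out to about 5.1 Gbit/s for the read test and about 3.1 Gbit/s for the mixed test. This does not by itself confirm or refute the channel hypothesis: with small blocks, a single iSCSI path can be limited by per-request overhead and round-trip latency rather than by raw line rate, which is something the planned two-port test should help clarify.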

It is worth noting the explosive growth of latency as the number of threads increases:

At 4 threads per Worker (a total of 100 threads on the system), the latency was ~1.06 ms, after which sharp, exponential degradation began.
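The latency figure is consistent with Little's law, which relates the number of requests in flight to throughput and average response time. The sketch below assumes that each Iometer thread corresponds to one outstanding I/O and that the system is in steady state; the function name is illustrative.

```python
def mean_latency_ms(outstanding_ios: int, iops: float) -> float:
    """Little's law: average latency = requests in flight / throughput."""
    return outstanding_ios / iops * 1000.0

workers = 25
threads_per_worker = 4
in_flight = workers * threads_per_worker   # 100 outstanding I/Os in total

print(f"{mean_latency_ms(in_flight, 94_000):.2f} ms")
```

With 100 I/Os in flight at 94,000 IOPS this gives about 1.06 ms, matching the measurement. The same relation implies that once IOPS stops growing, every additional outstanding I/O only adds to the average latency.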

In the future, I plan to connect the server through both ports to see how much the system can squeeze out of these disks. But even the 94k IOPS achieved here justify calling the FS3017 an AFA system.

The FS3017 left a very strange impression. Judging by the performance achieved in the tests, it can indeed be positioned as an AFA solution. However, its reduced reliability (there is no second controller) and its functionality, which is cut down compared with competitors, rule it out for corporate workloads. And that sector is currently the main and only consumer of AFA systems.

Despite this, I am sure it will find its market segment. One of the most obvious is the development of corporate applications and databases with complex environments. For these tasks, we can afford to place a test copy of a database, or an entire test environment, on a very fast and relatively cheap FS3017 for day-to-day work, without exposing the business to the risk of losing critical data.


Source: https://habr.com/ru/post/330434/
