Journey inside Avito: platform

We have already written about Avito's storage, images, and media picnic, but the main question remained unanswered: what does the platform architecture look like, which components does it consist of, and what stack does it use? You asked us to describe Avito's hardware, the virtualization system we use, storage, and so on. Here are the answers.

Hardware

For a long time, our servers were hosted in the Basefarm data center in Sweden, but in January-February of last year we completed the large-scale task of moving to the Dataspace data center in Moscow. If there is interest, I will cover the migration in a separate article (we have already talked about the database transfer at Highload 2016).

There were several reasons for the move. First, the much-discussed law No. 242- on storing the personal data of citizens of the Russian Federation. Second, we gained more control over our hardware: the staff of the Swedish data center were not always efficient and could take several days to fulfill simple requests. Here the staff do everything quickly, and in any case we can always come to the DC in person and take part in solving whatever problems arise.


Servers

Servers are divided into several functional groups, and each group has its own hardware configuration. For example, the servers for the PHP backend also act as the first level of image storage (more on images below); they have little RAM and small disks, but powerful processors. The servers for the Redis cluster, on the contrary, have a lot of RAM and less powerful processors, and so on. These specialized configurations have allowed us to significantly reduce server costs compared to before, when many servers had a universal configuration and some of their resources always sat idle.

Network

Our network is built according to the classic two-tier scheme: the core plus the access level. For fault tolerance, each access-level switch is connected by optical links to two different root switches, and an LACP link is built on top of these two links (one virtual link over several physical ones makes it possible to fully utilize all the physical links and adds resilience to the failure of any of them).

Software

Virtualization

We do not use hardware virtualization as such, but we make heavy use of operating-system-level virtualization (that is, containers). This is mainly LXC (before that we used OpenVZ for a long time), but we are now looking at Docker (with Kubernetes) with interest and slowly moving to it, and we launch new microservices directly in the Kubernetes cluster.

We have talked about how we use Kubernetes at a specialized meetup and at Codefest 2017:

- “Kubernetes in Avito” - Evgeny Olkov (Kubernetes meetup, 2017)

- “Helm: package manager for Kubernetes” - Sergey Orlov (Kubernetes meetup, 2017)

- “Kubernetes as a platform for microservices” - Sergey Orlov and Mikhail Prokopchuk (Codefest, 2017)

Picture Store

The history of the image store is described in detail in a separate article. It now has a two-level structure. The first level holds small images (those used in search results and therefore requested often; resolution up to 640x640) plus a cache of large images. The second level holds large images, which are accessible only from the ad page. The second-level servers cannot be reached directly from outside: every request goes through the first level (and so ends up in its cache). Because the load profile differs between the levels, so do the configuration and the number of servers: the first level has many servers with small disks, while the second level has few servers (about five times fewer) with large disks.
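The two-level read path described above can be illustrated with a minimal Python sketch. It is not Avito's implementation: the class names, the in-memory dictionaries, and the `reads` counter are assumptions made for illustration. Only the 640x640 threshold and the rule that large images are served through the first level (and cached there) come from the article.

```python
SMALL_MAX_SIDE = 640  # size limit for first-level images, from the article


class SecondLevel:
    """Hypothetical store for large images; reachable only via level one."""

    def __init__(self, images):
        self._images = dict(images)
        self.reads = 0  # counts how often the first level had to ask us

    def get(self, key):
        self.reads += 1
        return self._images[key]


class FirstLevel:
    """Hypothetical store for small images plus a cache of large ones."""

    def __init__(self, small_images, second_level):
        self._small = dict(small_images)
        self._large_cache = {}
        self._second = second_level

    def get(self, key, width, height):
        # Small images live on the first level itself.
        if width <= SMALL_MAX_SIDE and height <= SMALL_MAX_SIDE:
            return self._small[key]
        # Large images: on a cache miss, fetch from level two and keep a copy.
        if key not in self._large_cache:
            self._large_cache[key] = self._second.get(key)
        return self._large_cache[key]


second = SecondLevel({"ad1": b"large-bytes"})
first = FirstLevel({"ad1": b"small-bytes"}, second)
first.get("ad1", 1280, 960)  # miss: goes to the second level
first.get("ad1", 1280, 960)  # hit: served from the first-level cache
```

In this sketch a repeated request for the same large image touches the second level only once, which is consistent with the second level getting by with far fewer servers.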

All the required image resolutions are generated on the backend at upload time. Images in some unpopular sizes are not stored on the servers but are generated by nginx on the fly. Watermarks work similarly: for most resolutions the backend applies them right away, but some resolutions are served to partners without watermarks (and to the site with watermarks), so for those nginx overlays the watermark on the fly.

In more detail, we have 100 virtual image nodes at each of the first and second levels, spread evenly across the physical servers of the corresponding level. The binding of virtual nodes to physical servers is controlled by CNAME records in DNS and, for the first level, by the external IP addresses on the servers.
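The virtual-to-physical binding can be sketched in Python as follows. The article gives only the node count (100) and the use of CNAME records; the `imgNN` names, the `phys-*` hostnames, the modulo choice of a virtual node, and the round-robin spread are all assumptions for illustration, with a dictionary standing in for DNS.

```python
VIRTUAL_NODES = 100  # node count taken from the article


def virtual_node_name(image_id):
    """Pick a virtual node for an image (modulo is an assumed scheme)."""
    return f"img{image_id % VIRTUAL_NODES:02d}"


def build_cname_table(physical_hosts):
    """Spread virtual nodes evenly over physical hosts, round-robin.

    The returned dict plays the role of the CNAME records:
    virtual node name -> physical host name.
    """
    return {
        f"img{n:02d}": physical_hosts[n % len(physical_hosts)]
        for n in range(VIRTUAL_NODES)
    }


def resolve(image_id, cname_table):
    """Find the physical host that currently serves the given image."""
    return cname_table[virtual_node_name(image_id)]


table = build_cname_table(["phys-a", "phys-b", "phys-c"])
host_before = resolve(205, table)

# Moving one virtual node to another server is a single record change;
# image-to-virtual-node assignments stay untouched.
table["img05"] = "phys-d"
host_after = resolve(205, table)
```

The point of the indirection is that rebalancing or replacing a physical server only requires repointing the affected virtual nodes, without touching how images are assigned to virtual nodes.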

To reduce the load on our servers and save traffic and electricity, we use a CDN, but our platform has sufficient resources to work independently, and we are not tied to a particular provider.

Platform architecture

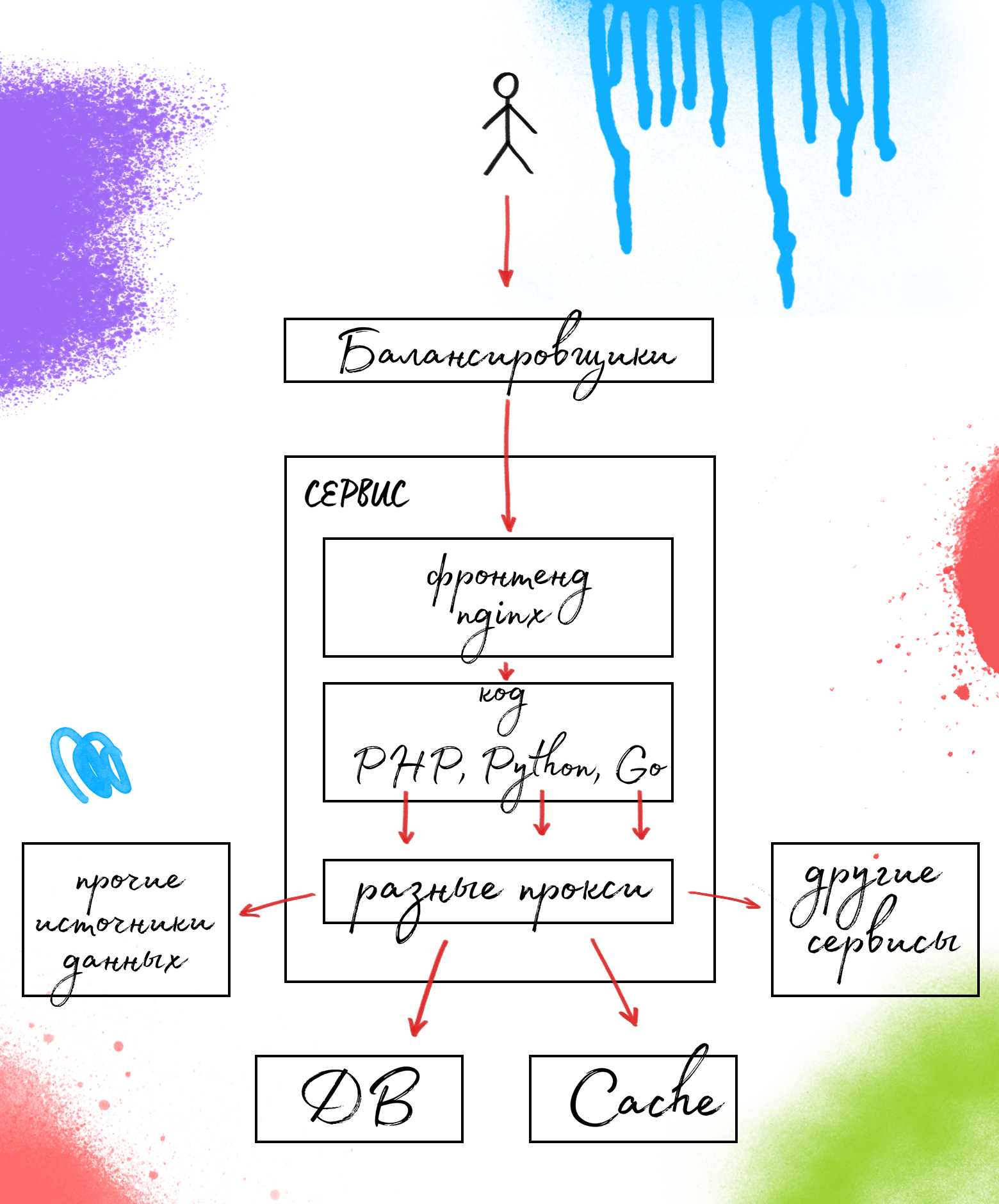

Incoming traffic is balanced at different levels: L3, L4, L7.

The internal structure of the platform can be described as “in transition from a monolith to microservices”. The functionality is divided into pieces that we call “services”: these are not microservices yet, but no longer a monolith either.

A service has a typical structure: an nginx frontend, a backend (the service itself), and a set of proxies to all the data sources it needs, namely databases, caches, and other services.

Some details about the proxies we use can be found in my talk at Highload Junior 2016.

Conclusion

People often expect high-load projects to have complex architecture and multi-layered solutions that require constant support. This is a mistake: the more complex the system, the more trouble even the most insignificant bug can cause. That is why we favor simplicity. We follow the KISS principle, do not multiply entities, and do not complicate what should be simple, whether in development, support, or administration.

This platform design lets us scale it easily and therefore avoid many problems. Now we are in transitional

Source: https://habr.com/ru/post/330414/
