On the road with clouds. Relational databases in a new technological context

Hi, Habr! We are thinking about the publication of an unusual book, the author of which wants to present a very interesting interpretation of the modern technological landscape encompassing Big Data databases and processing technologies. The author believes that without the active use of clouds, there is no way to go, and he tells about this landscape from this perspective.

About the author:

Alexander Vasilyevich Senko , Candidate of Physical and Mathematical Sciences in the field of computer modeling and optimization of powerful superhigh-frequency devices.

')

The author is certified by Microsoft in the field of creating applications in the Microsoft Azure environment: Microsoft Certified Professional and Microsoft Specialist: Developing Microsoft Azure Solutions. In 2008 he graduated from the Belarusian State University of Informatics and Radioelectronics (BSUIR) with a degree in “Modeling and computer design of radio-electronic equipment”. From 2007 to 2012, the author works at the Scientific Research Institute of Nuclear Problems of the BSU as a technician, laboratory technician, and engineer. From 2013 to the present, the author works at ISSoft Solutions for the position of software developer and DevOps with a specialization in the creation of cloud applications based on the Microsoft stack.

Under the cut, you can appreciate the ideas and style of the author. Feel free to vote and comment - and welcome under the cat!

Introduction or why this article should write.

Currently, cloud services allow developers and system administrators to quickly create application infrastructure and just as quickly get rid of it when this is no longer necessary. The fee is charged for the use of cloud resources and for their level of performance, also referred to as the “price level” - pricing tier. You can quickly create resources, “play around” with them, “touch”, master them, and at the same time absolutely do not need to worry about buying / administering servers, reading documentation about installing the required software, and so on. In cloud environments, all typical administration and monitoring tasks consolidated into convenient API or user interface of the web portal management. But there is one complication - if a person does not understand or does not fully understand how cloud environments work, the task “what do I need to do to get a database and connect to it from my application” at first is puzzling. The abundance of cloud services and various “price levels” for each service further complicates the selection task. This article presents a brief overview of one of the most popular cloud services — relational database services.

Relational databases are a traditional data warehouse. Information in them can be presented in the form of a set of tables consisting of rows. These tables are linked to each other using “keys” —special integrity constraints, which match the rows of one table with the rows of another. “Big data” is often more convenient to store and process more quickly in a non-relational form (for example, in key-value databases), but data stored as interconnected tables allow efficient analysis in any dimension — across rows of related tables and across the table, that for the case of NoSQL databases is difficult. In addition, for relational databases there is a very effective query language - SQL. In cloud environments, relational databases are presented in two forms - SQL as a Service and as ready-made images for virtual machines. Databases hosted on virtual machines, in fact, differ little from database servers hosted on traditional virtual and physical hosts. At the same time, the choice of the type of virtual machine, virtual disks, cloud storage for their placement and network interfaces significantly affect its performance, but this is a topic for a separate chapter, and perhaps also a book. Database servers hosted on virtual machines in the clouds have significant limitations associated with scaling, availability, and fault tolerance. For example, Azure VMs at a service level agreement (availability level agreement) at the level of 99.95% only when using a cluster of at least two machines located in one common availability group (Availability Set), and they will physically have network interfaces and power supplies are separated. In order to reach level 99.99 you need to configure a cluster of several Always On virtual machines.

At the same time, SQL has an SLA of 99.99 without the additional difficulties associated with configuring clusters. On the other hand, the requirements of the HIPPA standards correspond to SQL servers hosted on virtual machines, but not the Azure SQL service. The rest of the cloud SQL service has unrivaled capabilities in terms of scaling, availability and ease of administration (replication, backup, export, performance analysis of executed queries). In addition, in some cases, SQL as a Service is significantly cheaper than a similar server that is hosted on a virtual machine (the author is aware that this is a very controversial statement, especially considering the particular use of resources in SQL as a Service and SQL on virtual machines). SQL as a Service does not support all the capabilities of traditional database servers. For example, the execution of queries from tables in one database inside another in the case of an SQL service is associated with a number of difficulties and limitations. Consider examples of implementing SQL as a Service in different cloud environments.

Microsoft's Azure SQL relational database service.

Microsoft's SQL-As-A-Service implementation is a cloud relational database service based on the Microsoft SQL Server engine and is called Azure SQL. The Azure SQL query language implements a subset of T-SQL functions. Azure SQL service instances, which are direct equivalents of MS-SQL databases, are logically grouped into Azure SQL Server “servers”. Each Azure SQL server must have a unique URL, credentials (username and password), and a set of valid IP addresses that can access it (this list is generated in the server’s firewall and regulates the rules for accessing it).

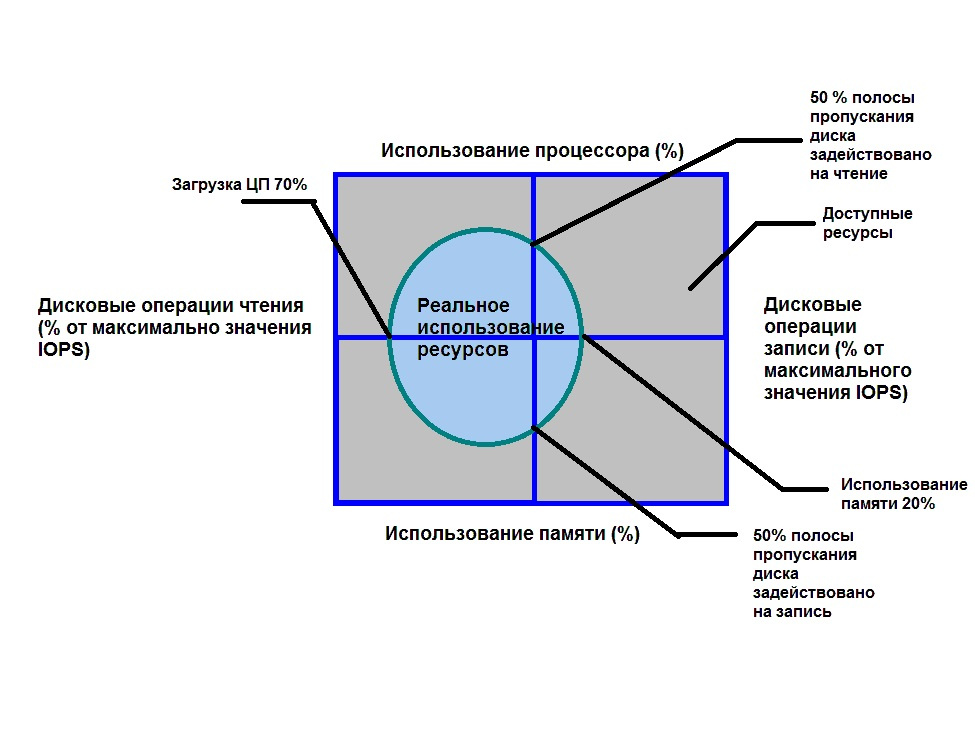

Physically, Azure SQL Server is located in a data center – e located in a specific geographic region. All instances of Azure SQL databases are located in the same region. It is also possible to geographically replicate databases in several regions using the Primary-Secondary or Primary-ReadOnly Replica scheme (the primary server is a read-only replica). With extensive T-SQL support in Azure SQL, you can directly migrate databases from Microsoft SQL Server to Azure SQL. Of course, direct migration from one type of database to another is far from simple and not a quick task, but in this case it is possible in principle and directly supported using special programs from Microsoft, Red Gate, etc. However, it should be borne in mind that this In some cases, the software simply “cuts out” incompatible database objects without trying to adapt them. And it may happen that after such a migration, the database “rose”, but happy developers can “suddenly” miss a number of database objects in Azure SQL. Each database instance has a certain “price level” - pricing tier - which is characterized by its performance, limitations on size, number of recovery points, and replication capabilities. All possible price levels are divided into the following levels: basic (“Basic”), standard (“Standard”) and premium (“Premium”). The most important difference in price levels is manifested in the different values of DTU - a generalized parameter characterizing database performance. What is this parameter? DTU (eDTU) is a generalized characteristic of database performance that includes performance indicators for the central processor, memory, I / O devices, and the network interface. This metric defines a kind of “volume” that can “occupy” Azure SQL performance. If a request is currently being executed in the database, this request consumes a certain amount of resources, which occupy a part of this allowed volume (see Figure 1). It should be borne in mind that not only the volume, but also the specific values of each of the indicators is limited (that is, it will not be possible to “exchange” the extremely small use of the CPU for an extremely large memory value). This is manifested in particular in the fact that one “curve” query can cause reuse of one of the resources and, as a result, weigh the entire database (an instance of the Azure SQL Database service but not the Azure SQL Server) and the exact same query will normally work in the traditional database Microsoft SQL Server. This is because, in Azure SQL, the mechanism for protecting database resources from excessive use is triggered, which forces the process that triggered the request to time out, thereby freeing up database resources.

So, Azure SQL consists of instances of the Azure SQL Database, which are actually relational repositories of information with specific values for size and maximum DTU; an Azure SQL Server instance that groups the Azure SQL Database, providing them with a common connection string, access rules written in the firewall and in some cases providing a common elastic resource pool (Elastic Database Pool, which is described below).

Now let's get acquainted with Elastic Database Pool. This service is built into Azure SQL Server and is used to pool databases into one pool and assign shared resources to all of them. Why do you need it? Consider an example of a SaaS application created to provide certain services to users registered in it (for example, this is a cloud-based CRM system). Each registered user of such a system is allocated its own database of a certain level of performance, expressed by a specific DTU value. Each performance level has a certain monthly cost, which ultimately affects the profit of the SaaS owner - it will be equal to the total monthly fee of all users minus the cost of cloud services underlying the system architecture. Now suppose that user activity, expressed in the use of the DTU of each base, is unrelated, i.e. they load their bases at various random time intervals. This leads to the fact that there are moments of time when the use of the DTU database of a particular user is very small, and moments when it is great. Thus, at the moment of small use of the DTU, the bases are “idle” - but they are charged for by Azure. And what if we take several such databases, that the activity intervals of each coincide with the underload intervals of others, and combine them into a common pool of databases and distribute the total DTU resources between the databases so that the total load is uniform in time; the fee for the price level will be significantly less than the total fee for all databases. This idea is the basis of the Azure Elastic Database Pool service. In addition, ElasticDatabasePool allows you to implement scenarios for dividing one large database into smaller ones, but to perform queries in this divided into “fragments” (shard) database as if it were one monolithic database.

Consider now the case when it is still necessary to perform a query to the data located in different databases. If there is just a set of databases without fragmentation, it is necessary to select one head database and create external data sources and external tables (reference table) in it, which are “reflections” of real tables hosted in other databases. You can also not use a specialized head database, but create tables in each instance of the Azure SQL Database. The disadvantage of this approach is that when changing table schemas in databases, you need to synchronously change the schemas in external tables (if there is no master database, then a lot of work needs to be done on changing the schema in all external tables in all databases where they are present) . In addition, T-SQL implementations in Azure SQL have data type restrictions for external tables (for example, they do not support Foreign Key and nvatrchar (max) type) and currently there are a number of restrictions on executing such queries and databases, where these queries will be executed - for example, it is impossible to export a database to a BACPAC file if it contains references to external tables . Well, we should not forget about the decline in performance for such requests. But what does it all look like?

Let's assume for definiteness that we have two databases - the First database and the Second database. Now let's say that from the Second database we need to execute a query to the First database. To do this, credentials must be created in the Second database that will be used to access the First database:

Then, in the Second database, create an external data source that will be used to link to the tables of the external database:

After that, in the Second database, you need to create an “External Table” (External Table) - a table that is a “reflection” of a similar table located in the First database:

And that's it! Now we can make queries to the table from the First database in the Second database as to an ordinary table located in the Second database. It should be borne in mind that these tables are connected only by data - any changes in the schema in one database will not affect the schema of the table in another.

Another possible case of querying various databases is to use Elastic Database jobs . The essence of this technology is that a group of databases is combined and managed centrally from a common specialized VM head server. By interacting with this server programmatically or through Azure Portal, you can manage all connected databases by creating jobs. These tasks may be of the following nature:

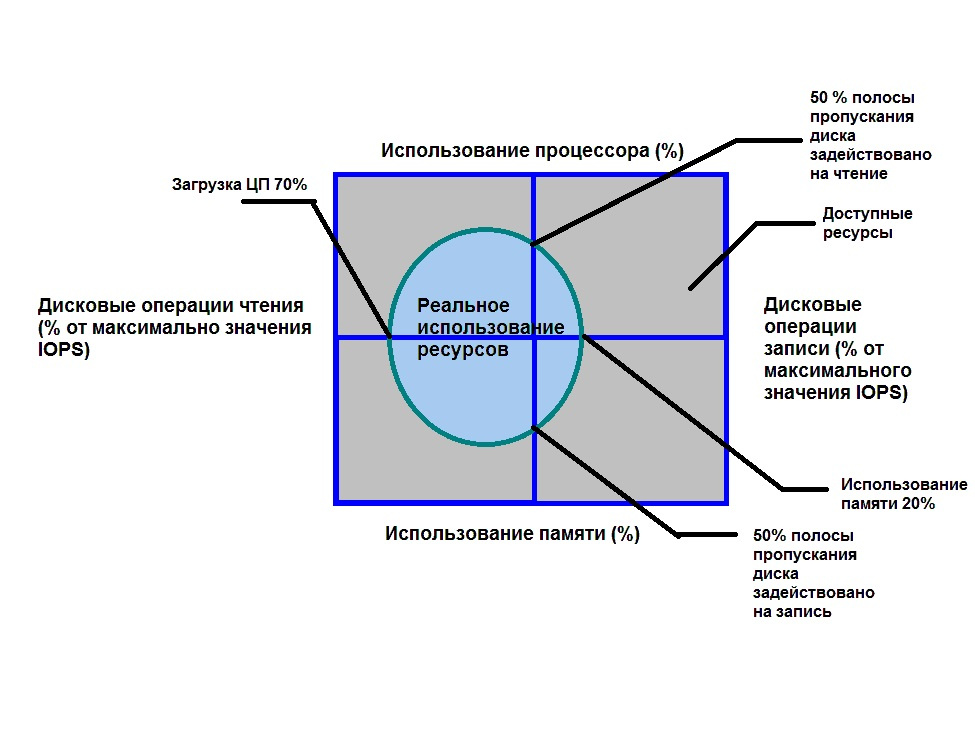

The Elastic Database jobs service contains the following components (Figure 2):

Configuring, using and administering this entire system is a rather complicated topic and will not be discussed in detail. Those interested can read the topic here .

In addition to single databases, AzureSQL allows you to create more complex relational repositories — fragmented databases — Sharded Databases and relational repositories with broad support for massively parallel query execution — Azure SQL DWH.

Consider the first fragmented database. The need for database fragmentation occurs when its size becomes excessively large to fit on a single instance of Azure SQL (currently more than 1 TB for Premium pricing tier). Of course, you can break a large database into smaller databases logically by analyzing its structure (schema). As mentioned earlier, Azure SQL Server is a logical grouping of Azure SQL Database instances, and not a physical union on a single server. And direct queries to database objects from another database are possible only if these objects are External table or these databases are combined in the Elastic Database Pool, and Elastic Database Query queries or Elastic Transactions transactions are used. In this case, it is necessary to apply sharding the database. Database fragmentation is essentially horizontal scaling, different from vertical scaling — increasing the “size” of the database across CPU, RAM, IOPs, and so on. Splitting one large repository into several smaller repositories and parallel processing all of them, then adding them processing results is the key concept of all big data processing technologies, with which we will continue to meet more than once.

About the author:

Alexander Vasilyevich Senko , Candidate of Physical and Mathematical Sciences in the field of computer modeling and optimization of powerful superhigh-frequency devices.

')

The author is certified by Microsoft in the field of creating applications in the Microsoft Azure environment: Microsoft Certified Professional and Microsoft Specialist: Developing Microsoft Azure Solutions. In 2008 he graduated from the Belarusian State University of Informatics and Radioelectronics (BSUIR) with a degree in “Modeling and computer design of radio-electronic equipment”. From 2007 to 2012, the author works at the Scientific Research Institute of Nuclear Problems of the BSU as a technician, laboratory technician, and engineer. From 2013 to the present, the author works at ISSoft Solutions for the position of software developer and DevOps with a specialization in the creation of cloud applications based on the Microsoft stack.

Under the cut, you can appreciate the ideas and style of the author. Feel free to vote and comment - and welcome under the cat!

Introduction or why this article should write.

Currently, cloud services allow developers and system administrators to quickly create application infrastructure and just as quickly get rid of it when this is no longer necessary. The fee is charged for the use of cloud resources and for their level of performance, also referred to as the “price level” - pricing tier. You can quickly create resources, “play around” with them, “touch”, master them, and at the same time absolutely do not need to worry about buying / administering servers, reading documentation about installing the required software, and so on. In cloud environments, all typical administration and monitoring tasks consolidated into convenient API or user interface of the web portal management. But there is one complication - if a person does not understand or does not fully understand how cloud environments work, the task “what do I need to do to get a database and connect to it from my application” at first is puzzling. The abundance of cloud services and various “price levels” for each service further complicates the selection task. This article presents a brief overview of one of the most popular cloud services — relational database services.

Relational databases are a traditional data warehouse. Information in them can be presented in the form of a set of tables consisting of rows. These tables are linked to each other using “keys” —special integrity constraints, which match the rows of one table with the rows of another. “Big data” is often more convenient to store and process more quickly in a non-relational form (for example, in key-value databases), but data stored as interconnected tables allow efficient analysis in any dimension — across rows of related tables and across the table, that for the case of NoSQL databases is difficult. In addition, for relational databases there is a very effective query language - SQL. In cloud environments, relational databases are presented in two forms - SQL as a Service and as ready-made images for virtual machines. Databases hosted on virtual machines, in fact, differ little from database servers hosted on traditional virtual and physical hosts. At the same time, the choice of the type of virtual machine, virtual disks, cloud storage for their placement and network interfaces significantly affect its performance, but this is a topic for a separate chapter, and perhaps also a book. Database servers hosted on virtual machines in the clouds have significant limitations associated with scaling, availability, and fault tolerance. For example, Azure VMs at a service level agreement (availability level agreement) at the level of 99.95% only when using a cluster of at least two machines located in one common availability group (Availability Set), and they will physically have network interfaces and power supplies are separated. In order to reach level 99.99 you need to configure a cluster of several Always On virtual machines.

At the same time, SQL has an SLA of 99.99 without the additional difficulties associated with configuring clusters. On the other hand, the requirements of the HIPPA standards correspond to SQL servers hosted on virtual machines, but not the Azure SQL service. The rest of the cloud SQL service has unrivaled capabilities in terms of scaling, availability and ease of administration (replication, backup, export, performance analysis of executed queries). In addition, in some cases, SQL as a Service is significantly cheaper than a similar server that is hosted on a virtual machine (the author is aware that this is a very controversial statement, especially considering the particular use of resources in SQL as a Service and SQL on virtual machines). SQL as a Service does not support all the capabilities of traditional database servers. For example, the execution of queries from tables in one database inside another in the case of an SQL service is associated with a number of difficulties and limitations. Consider examples of implementing SQL as a Service in different cloud environments.

Microsoft's Azure SQL relational database service.

Microsoft's SQL-As-A-Service implementation is a cloud relational database service based on the Microsoft SQL Server engine and is called Azure SQL. The Azure SQL query language implements a subset of T-SQL functions. Azure SQL service instances, which are direct equivalents of MS-SQL databases, are logically grouped into Azure SQL Server “servers”. Each Azure SQL server must have a unique URL, credentials (username and password), and a set of valid IP addresses that can access it (this list is generated in the server’s firewall and regulates the rules for accessing it).

Physically, Azure SQL Server is located in a data center – e located in a specific geographic region. All instances of Azure SQL databases are located in the same region. It is also possible to geographically replicate databases in several regions using the Primary-Secondary or Primary-ReadOnly Replica scheme (the primary server is a read-only replica). With extensive T-SQL support in Azure SQL, you can directly migrate databases from Microsoft SQL Server to Azure SQL. Of course, direct migration from one type of database to another is far from simple and not a quick task, but in this case it is possible in principle and directly supported using special programs from Microsoft, Red Gate, etc. However, it should be borne in mind that this In some cases, the software simply “cuts out” incompatible database objects without trying to adapt them. And it may happen that after such a migration, the database “rose”, but happy developers can “suddenly” miss a number of database objects in Azure SQL. Each database instance has a certain “price level” - pricing tier - which is characterized by its performance, limitations on size, number of recovery points, and replication capabilities. All possible price levels are divided into the following levels: basic (“Basic”), standard (“Standard”) and premium (“Premium”). The most important difference in price levels is manifested in the different values of DTU - a generalized parameter characterizing database performance. What is this parameter? DTU (eDTU) is a generalized characteristic of database performance that includes performance indicators for the central processor, memory, I / O devices, and the network interface. This metric defines a kind of “volume” that can “occupy” Azure SQL performance. If a request is currently being executed in the database, this request consumes a certain amount of resources, which occupy a part of this allowed volume (see Figure 1). It should be borne in mind that not only the volume, but also the specific values of each of the indicators is limited (that is, it will not be possible to “exchange” the extremely small use of the CPU for an extremely large memory value). This is manifested in particular in the fact that one “curve” query can cause reuse of one of the resources and, as a result, weigh the entire database (an instance of the Azure SQL Database service but not the Azure SQL Server) and the exact same query will normally work in the traditional database Microsoft SQL Server. This is because, in Azure SQL, the mechanism for protecting database resources from excessive use is triggered, which forces the process that triggered the request to time out, thereby freeing up database resources.

So, Azure SQL consists of instances of the Azure SQL Database, which are actually relational repositories of information with specific values for size and maximum DTU; an Azure SQL Server instance that groups the Azure SQL Database, providing them with a common connection string, access rules written in the firewall and in some cases providing a common elastic resource pool (Elastic Database Pool, which is described below).

Now let's get acquainted with Elastic Database Pool. This service is built into Azure SQL Server and is used to pool databases into one pool and assign shared resources to all of them. Why do you need it? Consider an example of a SaaS application created to provide certain services to users registered in it (for example, this is a cloud-based CRM system). Each registered user of such a system is allocated its own database of a certain level of performance, expressed by a specific DTU value. Each performance level has a certain monthly cost, which ultimately affects the profit of the SaaS owner - it will be equal to the total monthly fee of all users minus the cost of cloud services underlying the system architecture. Now suppose that user activity, expressed in the use of the DTU of each base, is unrelated, i.e. they load their bases at various random time intervals. This leads to the fact that there are moments of time when the use of the DTU database of a particular user is very small, and moments when it is great. Thus, at the moment of small use of the DTU, the bases are “idle” - but they are charged for by Azure. And what if we take several such databases, that the activity intervals of each coincide with the underload intervals of others, and combine them into a common pool of databases and distribute the total DTU resources between the databases so that the total load is uniform in time; the fee for the price level will be significantly less than the total fee for all databases. This idea is the basis of the Azure Elastic Database Pool service. In addition, ElasticDatabasePool allows you to implement scenarios for dividing one large database into smaller ones, but to perform queries in this divided into “fragments” (shard) database as if it were one monolithic database.

Consider now the case when it is still necessary to perform a query to the data located in different databases. If there is just a set of databases without fragmentation, it is necessary to select one head database and create external data sources and external tables (reference table) in it, which are “reflections” of real tables hosted in other databases. You can also not use a specialized head database, but create tables in each instance of the Azure SQL Database. The disadvantage of this approach is that when changing table schemas in databases, you need to synchronously change the schemas in external tables (if there is no master database, then a lot of work needs to be done on changing the schema in all external tables in all databases where they are present) . In addition, T-SQL implementations in Azure SQL have data type restrictions for external tables (for example, they do not support Foreign Key and nvatrchar (max) type) and currently there are a number of restrictions on executing such queries and databases, where these queries will be executed - for example, it is impossible to export a database to a BACPAC file if it contains references to external tables . Well, we should not forget about the decline in performance for such requests. But what does it all look like?

Let's assume for definiteness that we have two databases - the First database and the Second database. Now let's say that from the Second database we need to execute a query to the First database. To do this, credentials must be created in the Second database that will be used to access the First database:

CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<0>'; CREATE DATABASE SCOPED CREDENTIAL FirstDBQueryCred -- WITH IDENTITY = '<>', SECRET = '<0>'; Then, in the Second database, create an external data source that will be used to link to the tables of the external database:

CREATE EXTERNAL DATA SOURCE FirstDatabaseDataSource WITH ( TYPE = RDBMS, LOCATION = '<logical_server_name>.database.windows.net', DATABASE_NAME = 'First', CREDENTIAL = FirstDBQueryCred ) After that, in the Second database, you need to create an “External Table” (External Table) - a table that is a “reflection” of a similar table located in the First database:

CREATE EXTERNAL TABLE [dbo].[TableFromFirstDatabase] ( [KeyFieldID] [int] NOT NULL, [DataField] [varchar](50) NOT NULL ) WITH ( DATA_SOURCE = FirstDatabaseDataSource) And that's it! Now we can make queries to the table from the First database in the Second database as to an ordinary table located in the Second database. It should be borne in mind that these tables are connected only by data - any changes in the schema in one database will not affect the schema of the table in another.

Another possible case of querying various databases is to use Elastic Database jobs . The essence of this technology is that a group of databases is combined and managed centrally from a common specialized VM head server. By interacting with this server programmatically or through Azure Portal, you can manage all connected databases by creating jobs. These tasks may be of the following nature:

- administrative (consistent schema change, performing index compilation);

- periodically updating data or collecting data for BI systems, incl. for analyzing large amounts of data.

The Elastic Database jobs service contains the following components (Figure 2):

- host server hosted on an Azure Cloud Service Worker Role instance. In essence, this is specialized software that is hosted (as of now) on the virtual Azure Cloud Service. To ensure high availability, it is recommended to create at least 2 VM instances;

- control database. This is an instance of Azure SQL that serves to store the metadata of all connected databases;

- An Azure Service Bus instance that serves to combine and synchronize all components.

- An instance of the Azure Storage Account cloud storage that serves to store the logs of the entire system.

Configuring, using and administering this entire system is a rather complicated topic and will not be discussed in detail. Those interested can read the topic here .

In addition to single databases, AzureSQL allows you to create more complex relational repositories — fragmented databases — Sharded Databases and relational repositories with broad support for massively parallel query execution — Azure SQL DWH.

Consider the first fragmented database. The need for database fragmentation occurs when its size becomes excessively large to fit on a single instance of Azure SQL (currently more than 1 TB for Premium pricing tier). Of course, you can break a large database into smaller databases logically by analyzing its structure (schema). As mentioned earlier, Azure SQL Server is a logical grouping of Azure SQL Database instances, and not a physical union on a single server. And direct queries to database objects from another database are possible only if these objects are External table or these databases are combined in the Elastic Database Pool, and Elastic Database Query queries or Elastic Transactions transactions are used. In this case, it is necessary to apply sharding the database. Database fragmentation is essentially horizontal scaling, different from vertical scaling — increasing the “size” of the database across CPU, RAM, IOPs, and so on. Splitting one large repository into several smaller repositories and parallel processing all of them, then adding them processing results is the key concept of all big data processing technologies, with which we will continue to meet more than once.

Source: https://habr.com/ru/post/330364/

All Articles