Energy Limit: New Data Center Cooling Technologies

Ken Brill of the Uptime Institute, a data center certification organization, once said that as more and more transistors are packed onto each chip, the heat they generate will eventually reach a point where cooling a data center stops being economically viable without new technologies.

And the industry is gradually moving in this direction, engaging in the prioritization of energy efficiency in the overall process of servicing data centers. For this reason, classic server rooms with traditional cooling systems are becoming an increasingly rare choice for companies, which is explained by economic considerations: the IEEE report of the first quarter of 2016 describes the distribution of energy consumption between the various components of data centers - cooling systems account for 50% of the energy consumed .

In this regard, companies began to look for new methods and solutions for cooling the data center, which we want to talk about in this material.

')

/ photo by Rob Fahey CC

/ photo by Rob Fahey CC

Mark Mills’s work “Clouds start with coal” makes the following conclusion: based on the average estimate, the information and communication technology (ICT) ecosystem consumes 1,500 TWh of electricity annually, which is 10% of its global production.

According to Intel, since 2002, the cost of electricity increases annually by about 5.5%. Organizations spend about $ 0.50 on electricity and cooling from each dollar, aimed at servicing the hardware.

Image: Uptime Institute

Image: Uptime Institute

To minimize the likelihood of an increase in the temperature of data centers, enterprises often use corridor mechanisms at the racks of data centers with air ducts for hot and cold flows. In this case, the systems of organization, management and containment of air flow are used, which capture hot “spent” air, pass it through air-conditioning devices and serve cooled directly to the air intakes of the server equipment.

Some systems use fluids to absorb and transfer heat from the hardware. Fluids generally perform this function more efficiently than air. Systems such as cooling by fluid contact with a radiator and immersion cooling (indirect and direct) are common, in which individual components are immersed in a non-conductive fluid.

Although water cooling in its traditional form is a more economical method, today it still remains secondary, facing a number of obstacles to mass adoption. The following factors slow down the spread:

The rise in energy costs and certain drawbacks of classical cooling methods prompted the data center industry to look for innovative solutions, including those associated with "natural" cooling.

There are a number of regions on the planet whose climate naturally allows saving up to 100% of the energy expended on servicing cooling systems. Such climatic zones, for example, are the northern part of Europe, Russia and several zones in the north of the USA.

In confirmation of this, in 2013, Facebook built its first data center outside the United States in the Swedish city of Luleå with an average annual temperature of 1.3 ° C, and Google invested a billion dollars in building a large data center in Finland.

The cold climate reduces operating costs due to the low temperatures of air and water that are launched into data centers. According to Andrew Donohue of research company 451 Research, natural air cooling allows builders to abandon mechanical chillers, reducing the capital cost of the facility to 40%.

As a logical continuation of the previous solution, natural cooling should be considered. There are three forms of its implementation: air, adiabatic and water.

The air form of natural cooling differs from classic air conditioning in that hot air next to the servers is sent to the environment (fully or partially), and it is replaced by cooled air from the outside.

Back in 2008, Intel conducted a ten-month test to evaluate the effectiveness of using only external air to cool the data center. As a result, it was not possible to fix the increase in the server failure rate due to fluctuations in temperature and humidity. The range of temperature changes in the machine room was 30 degrees, while using only standard household air filter, which removes only large particles from the incoming air.

As a result, the humidity in the data center ranged from 4 to 90%, and the servers were covered with a thin layer of dust. Despite this, the failure rate in the test area was 4.46%, which did not differ much from the 3.83% achieved in the main Intel data center over the same period.

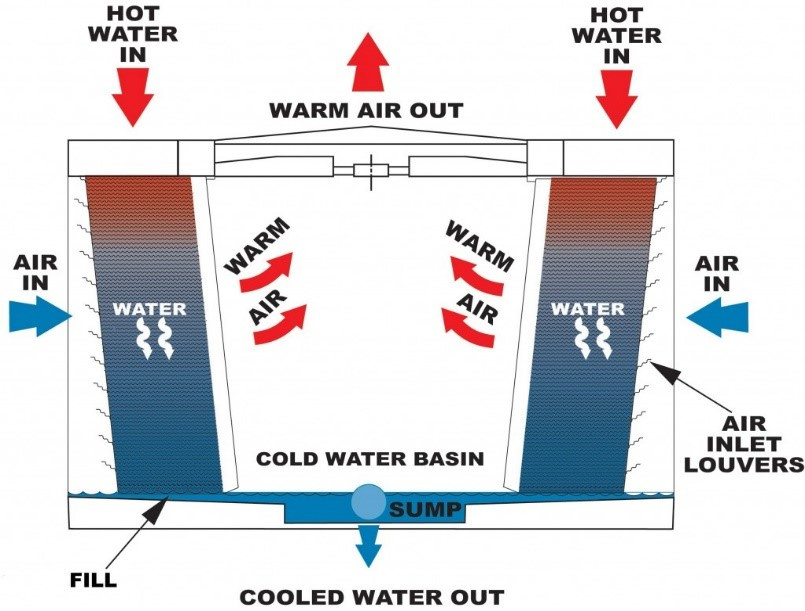

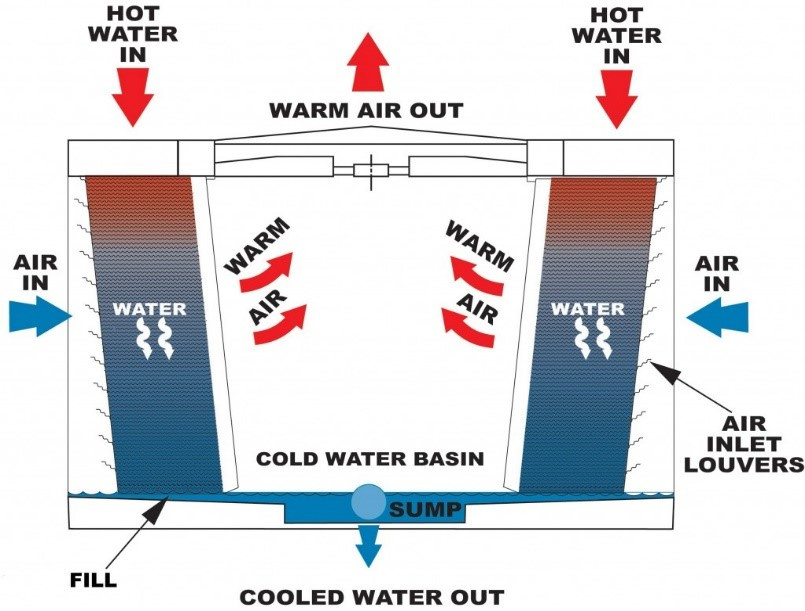

The second form is adiabatic cooling, which is often called evaporative. To cool the data center, a low-power fan delivers air to the irrigated surfaces, evaporating some of the water. The resulting air is much colder than that passed by the fan.

Image: Submer

Image: Submer

Another solution for adiabatic cooling is the so-called heat wheel. This wheel is part of the Kyoto cooling system used in Switzerland. The 9 MW hybrid plant uses the cooled water of a closed adiabatic dry fan connected to a water-cooling device with compressors.

The wheel is a large, slowly rotating aluminum disc, which has come to the data center industry from industrial air conditioning. Instead of introducing outside air directly into the server air, it mixes outside air with exhaust air, forming heat exchange. The wheel makes from 3 to 10 revolutions per minute and requires a minimum of energy to turn.

It is argued that this type of cooling requires only 8–25% of the power consumed during mechanical cooling. According to an independent report , a wheel-based system installed in Montana, USA, reduces the cost of cooling costs to 5 cents per dollar spent on energy.

As for the water form of natural cooling, in its real performance it illustrates the principle of operation of the Green Mountain data center, which is located in one of the fjords in Norway. The reservoir provides uninterrupted water supply at a temperature of 8 ° C, which is optimal for data center cooling systems.

The Microsoft Natick project, which implements the idea of an underwater data center, is aimed at a deeper exploitation of water resources. It is assumed that at the bottom of the ocean at a depth of 50 to 200 meters will be placed capsules containing several thousand servers. The interior of the unit consists of standard racks with heat exchangers that transfer heat from air to water.

Then the liquid enters the heat exchangers outside the capsules, which, in turn, redirect heat to the ocean. During the experiment, the prototype was immersed for 105 days off the coast of California. Water temperature ranged from 14 to 18 ° C. The cost of cooling was significantly lower in comparison with mechanical methods of cooling.

A similar concept is being developed by Google, but scientists from the IT giant are proposing to place data centers on barges. According to Nautilus Data Technologies, which is developing a similar technology, this approach saves up to 30% of energy due to natural cooling.

Another embodiment of the concept of operating “natural coolers” is underground data centers, as well as data centers based in caves of natural origin. In comparison with the idea of diving servers under water, underground objects have an advantage in terms of access to equipment. As examples of the implementation of a cave in the city of Tampere, Finland, which rents Aiber Networks.

Also worth noting is the Iron Mountain data center located in a former mine in Pennsylvania at a depth of 67 meters. It is argued that the constant temperature of the object is maintained at 11 ° C in a natural way - this provides "one of the lowest energy efficiency indicators [as of 2014]".

Based on the principle of immersion in a liquid, the 2bm Iceotope system covers the electronics with non-conductive coolant, the heat from which is transmitted to the radiator. Water passes through the rack and can be reused for other purposes, such as central heating. According to 2bm, this system allows you to save up to 40% of the total cost of maintaining the data center.

In 2014, Data Center Knowledge also submitted a report on the optimization of the cooling process of the data center of the US National Security Agency through the immersion of servers in petroleum oils. This approach also eliminated cooling with the help of fans.

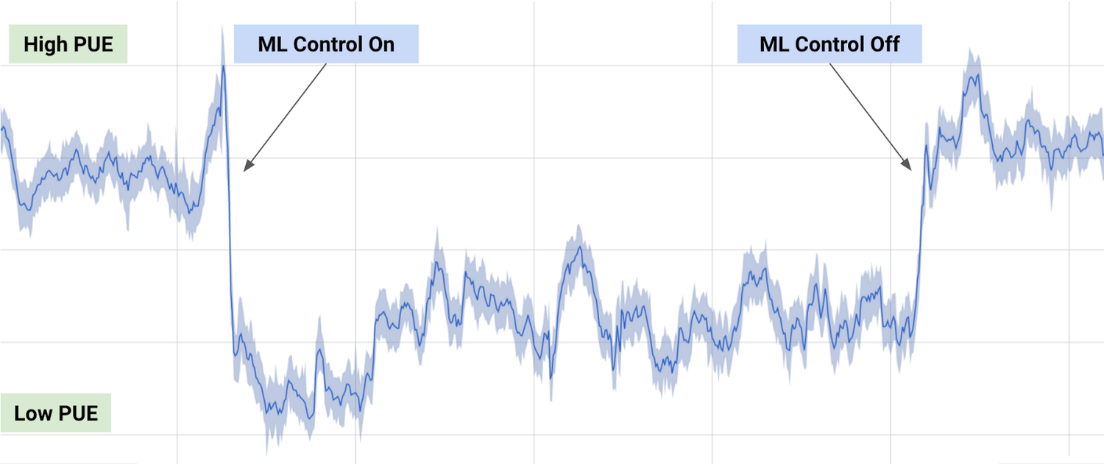

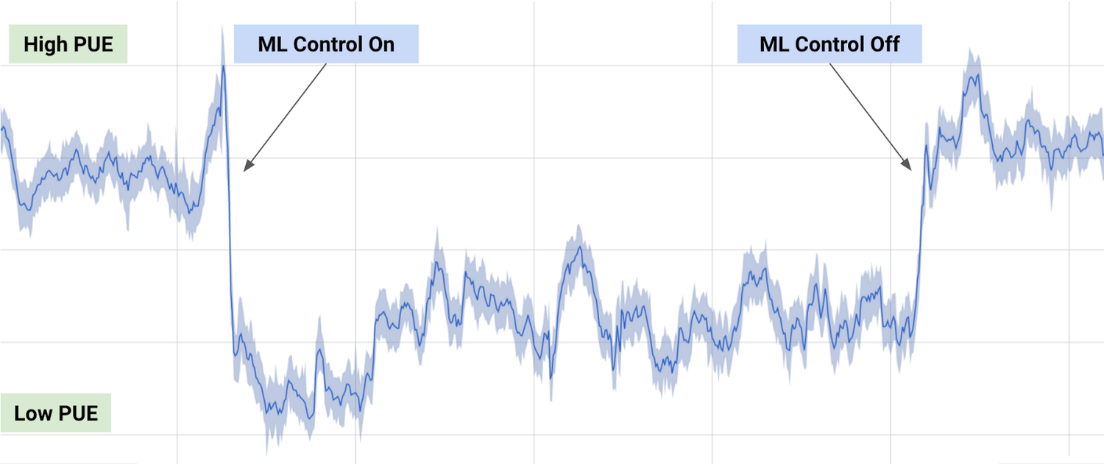

In 2014, Google acquired the company DeepMind, specializing in the development of artificial intelligence systems. A series of tests of the corporation AI was associated with the assessment of the energy efficiency of its own data centers. According to the study, Google was able to achieve a reduction in energy consumption in terms of cooling by 40%.

For this, data collected by thousands of sensors and thermal sensors in data centers were used to train neural networks. The networks learned to predict the temperature and pressure in the data center in the next hour and took the necessary actions to ensure the specified temperature thresholds. Optimization results are presented in the following graph.

Source: DeepMind

Source: DeepMind

The method is in the early stages of development and is implemented as part of a project known as DIMENSION. A group of scientists from Cardiff and Sheffield universities created a laser on a silicon substrate, capable of operating 100 thousand hours at temperatures up to 120 ° C.

According to scientists, this technology will allow to combine two areas: electronics and photonics. Lasers with direct modulation on a silicon substrate provide a tremendous speed of data transmission in electronic systems and, although not directly related to the cooling of the data center, can reduce the cost of electricity.

The focus on optimizing energy-intensive data center systems has led to the emergence of various concepts that may become a reality in the future. One such example is the project of a 65-story data center in Iceland, which, according to architects, is a “giant data tower”, reaching 50 meters in height and containing hundreds of thousands of servers serviced by “clean” energy. Given the proximity of the selected location to the Arctic Circle, the building will be provided with the means for natural cooling.

However, a more promising direction in the deployment of innovative systems is the combination of free cooling solutions with the re-use of the energy generated by the operation of data centers. For example, in December last year, the British telecommunications company aql officially opened a new data center that sends “waste” heat to the district heating system serving local residential and commercial buildings.

Alfonso Capozzoli (Alfonso Capozzoli) argues that the collection and reuse of waste heat produced by IT equipment is the next step in an energy efficiency strategy. The implementation of appropriate measures, among other things, can significantly affect the reduction of CO2 emissions. In addition to central heating, absorption cooling, Rankine cycle and water desalination are also considered as possible uses of waste heat.

Thus, the data center industry is currently focused on the use of natural cooling sources, combined with intelligent systems that optimize energy efficiency. Concepts that are currently at the prototype stage can radically change the approach to the cooling methods used in the future.

The industry is gradually moving in this direction, making energy efficiency a priority across all aspects of data center operation. As a result, classic server rooms with traditional cooling systems are becoming an increasingly rare choice for companies, and the reasons are economic: an IEEE report from the first quarter of 2016 on how energy consumption is distributed among data center components found that cooling systems account for 50% of the energy consumed.

In response, companies have begun looking for new methods and solutions for cooling data centers, and these are the subject of this article.


/ photo by Rob Fahey CC

Mark Mills’s study “The Cloud Begins with Coal” concludes that, by an average estimate, the information and communication technology (ICT) ecosystem consumes about 1,500 TWh of electricity a year, roughly 10% of global electricity generation.

According to Intel, electricity prices have risen by about 5.5% a year since 2002. For every dollar spent on running hardware, organizations spend about $0.50 on electricity and cooling.

Image: Uptime Institute
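Intel's two figures lend themselves to a quick back-of-the-envelope calculation. The Python sketch below assumes the 5.5% rate stays constant every year (real prices fluctuate) and uses a hypothetical $1M hardware budget; the function names are illustrative only.

```python
def cost_multiplier(years: float, annual_growth: float = 0.055) -> float:
    """Factor by which the electricity price grows after `years` of compound growth.

    Assumes a constant annual growth rate (5.5% per the Intel figure).
    """
    return (1.0 + annual_growth) ** years


def energy_and_cooling_spend(hardware_spend: float, ratio: float = 0.50) -> float:
    """Electricity-and-cooling spend implied by the ~$0.50-per-hardware-dollar ratio."""
    return hardware_spend * ratio


if __name__ == "__main__":
    # 14 years of growth, e.g. from 2002 to 2016 (illustrative horizon).
    print(f"Price multiplier after 14 years: {cost_multiplier(14):.2f}x")
    # Hypothetical $1M hardware budget.
    print(f"Energy and cooling spend: ${energy_and_cooling_spend(1_000_000):,.0f}")
```

At a constant 5.5% a year, the price roughly doubles in about 13 years.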

To keep data center temperatures from rising, enterprises often arrange racks into hot and cold aisles, with separate ducts for hot and cold air. Airflow management and containment systems capture the hot exhaust air, pass it through air-conditioning units, and deliver the cooled air directly to the air intakes of the server equipment.
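Why containment pays off can be seen from the sensible-heat balance for air, V = P / (ρ · c_p · ΔT): the airflow a rack needs is inversely proportional to the temperature rise of the air passing through it. If hot exhaust leaks back into the cold aisle, ΔT shrinks and the fans must move more air for the same load. The sketch below uses assumed textbook air properties and a hypothetical 10 kW rack, and it ignores humidity and fan heat.

```python
# Assumed properties of air at roughly 20 °C and sea-level pressure.
AIR_DENSITY = 1.2            # kg/m^3
AIR_SPECIFIC_HEAT = 1005.0   # J/(kg*K)


def required_airflow_m3s(heat_load_w: float, delta_t_k: float) -> float:
    """Volumetric airflow (m^3/s) needed to remove heat_load_w watts when the
    air warms by delta_t_k kelvin between rack intake and exhaust."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


if __name__ == "__main__":
    rack_w = 10_000  # hypothetical 10 kW rack
    # 12 K: well-separated aisles; 6 K: heavy hot/cold mixing (assumed values).
    for dt in (12.0, 6.0):
        print(f"dT = {dt:4.1f} K -> {required_airflow_m3s(rack_w, dt):.2f} m^3/s")
```

Halving the temperature rise doubles the required airflow, which is the fan energy that good containment avoids.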

Some systems use liquids to absorb heat from the hardware and carry it away; liquids generally do this more efficiently than air. Common approaches include systems in which a liquid circulates through heat sinks in contact with components, and immersion cooling (indirect and direct), in which components are immersed in a non-conductive fluid.
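The advantage of liquids over air comes down largely to volumetric heat capacity: the heat one cubic metre of fluid absorbs per kelvin of temperature rise (density × specific heat). A minimal comparison, using approximate room-temperature property values as assumptions:

```python
# Approximate properties near room temperature (assumed textbook values).
FLUIDS = {
    "air":   {"density": 1.2,   "specific_heat": 1005.0},  # kg/m^3, J/(kg*K)
    "water": {"density": 998.0, "specific_heat": 4182.0},
}


def volumetric_heat_capacity(fluid: str) -> float:
    """Heat absorbed per cubic metre per kelvin of temperature rise, J/(m^3*K)."""
    props = FLUIDS[fluid]
    return props["density"] * props["specific_heat"]


if __name__ == "__main__":
    ratio = volumetric_heat_capacity("water") / volumetric_heat_capacity("air")
    print(f"Water carries ~{ratio:,.0f}x more heat per m^3 per K than air")
```

The ratio comes out at roughly 3,500, so a modest water flow can carry the same heat as a very large volume of air.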

Although water cooling in its traditional form is more economical, it remains a secondary option today and faces a number of obstacles to mass adoption. The following factors slow its spread:

- Physical limitations. Unlike racks, tanks for immersed servers can be accessed only from above, which makes the infrastructure harder to scale.

- Safety. Any maintenance involves contact with a special fluid.

- Deployment cost. Building a new data center designed around water cooling is less laborious than retrofitting existing infrastructure.

- Resource shortage. A large data center needs so much water that the economic and environmental feasibility of water cooling in dry regions is questionable.
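The scale of the water problem can be estimated from the latent heat of vaporization. The sketch assumes the whole heat load is rejected by evaporating water, as in an evaporative cooling tower, and it ignores blowdown, drift and sensible heat transfer, so real consumption will differ; the facility sizes are hypothetical.

```python
# Approximate latent heat of vaporization of water near 20-30 °C (assumed value).
LATENT_HEAT_J_PER_KG = 2.45e6
SECONDS_PER_DAY = 86_400
KG_PER_M3_WATER = 1000.0


def evaporated_water_m3_per_day(heat_load_w: float) -> float:
    """Water evaporated per day if the entire heat load were rejected by
    evaporation alone (no blowdown, drift or sensible heat transfer)."""
    kg_per_s = heat_load_w / LATENT_HEAT_J_PER_KG
    return kg_per_s * SECONDS_PER_DAY / KG_PER_M3_WATER


if __name__ == "__main__":
    for load_mw in (1, 10, 50):  # hypothetical facility sizes
        m3 = evaporated_water_m3_per_day(load_mw * 1e6)
        print(f"{load_mw:>3} MW -> ~{m3:,.0f} m^3 of water per day")
```

Under these assumptions each megawatt of heat evaporates roughly 35 m^3 of water a day.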

Alternative solutions for cooling data centers

Rising energy costs and the drawbacks of classical cooling methods have pushed the data center industry toward innovative solutions, including those based on "natural" cooling.

Data Center Migration

In some regions of the world, the climate makes it possible to save up to 100% of the energy spent on running cooling systems. Examples of such climate zones include northern Europe, Russia, and several areas in the northern United States.

For example, in 2013 Facebook built its first data center outside the United States in Luleå, Sweden, where the average annual temperature is 1.3 °C, and Google invested a billion dollars in a large data center in Finland.
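Whether a site qualifies for such savings is usually judged from local weather data, by counting the hours when outside air is cool enough to use directly. A minimal sketch of that count; the 18 °C threshold and the sample temperatures are made up for illustration, and a real assessment would also consider humidity and the equipment's allowable intake range.

```python
from typing import Iterable


def free_cooling_fraction(hourly_temps_c: Iterable[float],
                          max_outdoor_c: float = 18.0) -> float:
    """Share of hours in which outside air is at or below max_outdoor_c.

    max_outdoor_c is a hypothetical threshold, not a standard value.
    """
    temps = list(hourly_temps_c)
    if not temps:
        raise ValueError("need at least one temperature reading")
    usable = sum(1 for t in temps if t <= max_outdoor_c)
    return usable / len(temps)


if __name__ == "__main__":
    # Made-up sample hours for illustration only, not real climate data.
    cold_site = [-12.0, -5.5, 0.3, 4.1, 9.8, 14.2, 17.9, 21.0]
    warm_site = [12.0, 18.5, 22.4, 26.1, 29.8, 31.0, 24.3, 19.7]
    print(f"cold site: {free_cooling_fraction(cold_site):.0%} of hours")
    print(f"warm site: {free_cooling_fraction(warm_site):.0%} of hours")
```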

A cold climate reduces operating costs because the air and water brought into the data center are already cold. According to Andrew Donohue of the research company 451 Research, natural air cooling lets builders do without mechanical chillers, cutting the capital cost of the facility by up to 40%.

Natural forms of cooling

Natural cooling is a logical continuation of this approach. It comes in three forms: air, adiabatic, and water.

Air-based natural cooling differs from classic air conditioning in that the hot air around the servers is exhausted to the outside (fully or partially) and replaced with cool outside air.
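In the partial case, outside air is blended with hot return air. Assuming ideal mixing of air streams with equal density (humidity ignored), the mixed temperature is T_mix = f · T_out + (1 − f) · T_return, which can be solved for the outside-air fraction f that reaches a target supply temperature. A sketch with hypothetical temperatures:

```python
def mixed_air_temp(t_return_c: float, t_outside_c: float, f: float) -> float:
    """Temperature after ideally mixing a fraction f of outside air with return air."""
    return f * t_outside_c + (1.0 - f) * t_return_c


def outside_air_fraction(t_return_c: float, t_outside_c: float,
                         t_supply_target_c: float) -> float:
    """Outside-air fraction that brings the mix to the target supply temperature,
    clipped to [0, 1]. A result of 1.0 with outside air warmer than the target
    means outside air alone cannot reach the target."""
    if t_outside_c >= t_return_c:
        return 0.0  # outside air would not cool the mix at all
    f = (t_return_c - t_supply_target_c) / (t_return_c - t_outside_c)
    return min(max(f, 0.0), 1.0)


if __name__ == "__main__":
    t_return, target = 35.0, 22.0  # hypothetical hot-aisle and supply temperatures
    for t_out in (-5.0, 10.0, 20.0, 28.0):
        f = outside_air_fraction(t_return, t_out, target)
        print(f"outside {t_out:5.1f} °C -> f = {f:.2f}, "
              f"mix = {mixed_air_temp(t_return, t_out, f):.1f} °C")
```

When f saturates at 1.0 on a warm day, some other form of cooling has to cover the remaining difference.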

Back in 2008, Intel ran a ten-month test to evaluate cooling a data center with outside air alone. The test found no significant increase in server failures caused by fluctuations in temperature and humidity. Temperatures in the machine room varied over a range of about 30 degrees, and only a standard household air filter was used, which removes only large particles from the incoming air.

As a result, the humidity in the data center ranged from 4 to 90%, and the servers were covered with a thin layer of dust. Despite this, the failure rate in the test area was 4.46%, which did not differ much from the 3.83% achieved in the main Intel data center over the same period.
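The two failure rates can be compared in absolute and in relative terms. A short sketch of the arithmetic; the article does not give the number of servers in each group, so this says nothing about statistical significance.

```python
from typing import Tuple


def compare_rates(test_pct: float, baseline_pct: float) -> Tuple[float, float]:
    """Return (difference in percentage points, relative change vs. baseline)."""
    if baseline_pct <= 0:
        raise ValueError("baseline_pct must be positive")
    diff_pp = test_pct - baseline_pct
    return diff_pp, diff_pp / baseline_pct


if __name__ == "__main__":
    # Failure rates quoted in the article: outside-air test area vs. main data center.
    pp, rel = compare_rates(4.46, 3.83)
    print(f"{pp:.2f} percentage points higher ({rel:.1%} relative increase)")
```

The gap is 0.63 percentage points, or about a 16% relative increase.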

The second form is adiabatic cooling, often called evaporative cooling. A low-power fan blows air across wetted surfaces, evaporating some of the water, and the air that comes off them is significantly colder than the air the fan takes in.

Image: Submer
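A direct evaporative cooler can at best bring air down to its wet-bulb temperature. A common textbook model expresses the result through a saturation effectiveness ε: T_out = T_db − ε · (T_db − T_wb). The sketch below assumes ε = 0.85 and hypothetical weather conditions; it shows why the method works best in dry air, where the gap between dry-bulb and wet-bulb temperatures is large.

```python
def evaporative_outlet_temp(t_dry_bulb_c: float, t_wet_bulb_c: float,
                            effectiveness: float = 0.85) -> float:
    """Outlet air temperature of a direct evaporative cooler,
    T_out = T_db - effectiveness * (T_db - T_wb)."""
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    if t_wet_bulb_c > t_dry_bulb_c:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb temperature")
    return t_dry_bulb_c - effectiveness * (t_dry_bulb_c - t_wet_bulb_c)


if __name__ == "__main__":
    # Hypothetical (dry-bulb, wet-bulb) pairs in °C: a dry day and a humid day.
    for t_db, t_wb in ((30.0, 18.0), (30.0, 26.0)):
        print(f"{t_db} °C / wet-bulb {t_wb} °C -> "
              f"{evaporative_outlet_temp(t_db, t_wb):.1f} °C")
```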

Another adiabatic cooling solution is the so-called heat wheel, part of the Kyoto Cooling system used in Switzerland. The 9 MW hybrid installation uses water cooled by closed-circuit adiabatic dry coolers, combined with a compressor-based chiller.

The wheel is a large, slowly rotating aluminum disc that came to the data center industry from industrial air conditioning. Instead of bringing outside air directly into the server room, it passes both outside air and exhaust air through the wheel so that heat is exchanged between the two streams. The wheel turns at 3 to 10 revolutions per minute and needs very little energy to rotate.
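Heat exchange in such a wheel is commonly described with a sensible effectiveness ε: with equal air flows on both sides, the recirculated data hall air leaves at T_supply = T_return − ε · (T_return − T_outside). The sketch assumes balanced flows and ε = 0.75; these are textbook assumptions, not published Kyoto Cooling design data.

```python
def wheel_supply_temp(t_return_c: float, t_outside_c: float,
                      effectiveness: float = 0.75) -> float:
    """Temperature of data hall air after one pass through a sensible heat wheel.

    Assumes equal mass flows of outside and return air. If outside air is not
    cooler than the return air, the wheel is assumed to be stopped and the air
    passes through unchanged (other cooling would have to take over).
    """
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    if t_outside_c >= t_return_c:
        return t_return_c
    return t_return_c - effectiveness * (t_return_c - t_outside_c)


if __name__ == "__main__":
    # Hypothetical: 35 °C hot-aisle air at three outside temperatures.
    for t_out in (0.0, 10.0, 25.0):
        print(f"outside {t_out:4.1f} °C -> supply {wheel_supply_temp(35.0, t_out):.2f} °C")
```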

This type of cooling is said to require only 8–25% of the power consumed by mechanical cooling. According to an independent report, a wheel-based system installed in Montana, USA, brings cooling costs down to 5 cents per dollar spent on energy.

The water form of natural cooling is well illustrated by the Green Mountain data center, located in a fjord in Norway. The fjord provides a continuous supply of water at 8 °C, which is well suited to data center cooling systems.
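How much fjord water such a system needs follows from the heat balance Q = ṁ · c_p · ΔT. A sketch assuming a hypothetical 1 MW load and water returned at 18 °C (a 10 K rise); only the 8 °C intake temperature comes from the text.

```python
WATER_DENSITY = 1000.0        # kg/m^3, approximate
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate


def cooling_water_flow_m3h(heat_load_w: float, t_in_c: float, t_out_c: float) -> float:
    """Water flow (m^3/h) needed to absorb heat_load_w watts while warming
    from t_in_c to t_out_c: m_dot = Q / (c_p * dT)."""
    delta_t = t_out_c - t_in_c
    if delta_t <= 0:
        raise ValueError("t_out_c must be higher than t_in_c")
    kg_per_s = heat_load_w / (WATER_SPECIFIC_HEAT * delta_t)
    return kg_per_s / WATER_DENSITY * 3600.0


if __name__ == "__main__":
    # 8 °C intake (from the text); 1 MW load and 18 °C return are hypothetical.
    print(f"{cooling_water_flow_m3h(1e6, 8.0, 18.0):.1f} m^3/h")
```

Halving the allowed temperature rise doubles the required flow, so a cold intake leaves designers more room to keep flow rates low.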


Microsoft's Project Natick, which implements the idea of an underwater data center, goes further in exploiting water resources. The plan is to place capsules holding several thousand servers on the ocean floor at depths of 50 to 200 meters. Inside, each capsule holds standard racks with heat exchangers that transfer heat from air to water.

The liquid then flows to heat exchangers outside the capsule, which in turn release the heat into the ocean. In the experiment, the prototype spent 105 days submerged off the coast of California, in water at 14 to 18 °C. Cooling costs were significantly lower than with mechanical cooling methods.
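Each heat exchanger in this chain needs some temperature difference (an "approach") to move heat, so the coldest air that can reach the racks is roughly the sea temperature plus the approaches of the outer and inner exchangers. The 3 K approach values below are hypothetical, not Project Natick data.

```python
def coldest_rack_air_c(sea_temp_c: float, outer_approach_k: float = 3.0,
                       inner_approach_k: float = 3.0) -> float:
    """Lowest air temperature achievable inside the capsule when heat flows
    rack air -> internal water loop -> external exchanger -> sea.

    outer_approach_k: sea-to-loop-water temperature difference (assumed).
    inner_approach_k: loop-water-to-air temperature difference (assumed).
    """
    if outer_approach_k < 0 or inner_approach_k < 0:
        raise ValueError("approach temperatures must be non-negative")
    return sea_temp_c + outer_approach_k + inner_approach_k


if __name__ == "__main__":
    # 14-18 °C: the water temperature range reported for the California trial.
    for sea in (14.0, 18.0):
        print(f"sea {sea} °C -> rack air >= {coldest_rack_air_c(sea)} °C")
```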

Google is developing a similar concept, but its engineers propose placing data centers on barges. According to Nautilus Data Technologies, which is developing similar technology, this approach saves up to 30% of energy thanks to natural cooling.

Another way to exploit "natural coolers" is to build data centers underground, including in natural caves. Compared with submerging servers, underground facilities have the advantage of easier access to equipment. One example is a cave in Tampere, Finland, rented by Aiber Networks.

Also worth noting is the Iron Mountain data center, located in a former mine in Pennsylvania 67 meters underground. The facility reportedly maintains a constant temperature of 11 °C naturally, which provides "one of the lowest energy efficiency indicators [as of 2014]".

Alternative liquid-based cooling systems

Built on the principle of liquid immersion, the 2bm Iceotope system bathes the electronics in a non-conductive coolant, which transfers their heat to a heat exchanger. Water flowing through the rack carries the heat away and can then be reused for other purposes, such as central heating. According to 2bm, the system can save up to 40% of the total cost of running a data center.

In 2014, Data Center Knowledge also submitted a report on the optimization of the cooling process of the data center of the US National Security Agency through the immersion of servers in petroleum oils. This approach also eliminated cooling with the help of fans.

Artificial Intelligence Cooling

In 2014, Google acquired DeepMind, a company specializing in artificial intelligence systems. One series of tests of the company's AI involved evaluating the energy efficiency of Google's own data centers. According to the study, Google reduced the energy used for cooling by 40%.

To achieve this, data collected by thousands of sensors in the data centers, including temperature sensors, was used to train neural networks. The networks learned to predict temperature and pressure in the data center over the next hour and took the actions needed to keep temperatures within specified thresholds. The optimization results are shown in the following graph.

Source: DeepMind
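The predict-then-act loop can be illustrated at toy scale. The article says neural networks were used; the sketch below deliberately swaps in a one-variable linear regression that predicts the next-hour temperature change from cooling power, then picks the lowest cooling power whose prediction stays under a threshold. All names, data and thresholds are hypothetical, and this is not DeepMind's method.

```python
from statistics import mean
from typing import List, Sequence, Tuple

# One record: (temperature now °C, cooling power kW, temperature one hour later °C).
Record = Tuple[float, float, float]


def fit_delta_model(history: List[Record]) -> Tuple[float, float]:
    """Least-squares fit of (t_next - t_now) = intercept + slope * cooling_kw."""
    xs = [kw for _, kw, _ in history]
    ys = [t_next - t_now for t_now, _, t_next in history]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("cooling power must vary across the history")
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    return y_bar - slope * x_bar, slope


def choose_cooling_kw(t_now: float, threshold_c: float,
                      model: Tuple[float, float], candidates: Sequence[float]) -> float:
    """Lowest candidate cooling power whose predicted next-hour temperature stays
    at or below threshold_c; falls back to the highest candidate otherwise."""
    intercept, slope = model
    for kw in sorted(candidates):
        if t_now + intercept + slope * kw <= threshold_c:
            return kw
    return max(candidates)


if __name__ == "__main__":
    history = [(24.0, 100.0, 25.0), (25.0, 200.0, 25.0), (25.0, 300.0, 24.0)]
    model = fit_delta_model(history)
    print(choose_cooling_kw(25.0, 24.6, model, [100, 150, 200, 250, 300]))
```

Choosing the lowest cooling power that still satisfies the prediction is what turns a forecast into energy savings; a production system would use far richer models and safety checks.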

Directly modulated laser on a silicon substrate

The method is at an early stage of development and is being pursued as part of a project known as DIMENSION. A group of scientists from the universities of Cardiff and Sheffield created a laser on a silicon substrate that can operate for 100,000 hours at temperatures up to 120 °C.

According to the researchers, this technology will make it possible to combine two fields: electronics and photonics. Directly modulated lasers on a silicon substrate provide very high data transmission rates in electronic systems and, although they are not directly related to data center cooling, they can reduce electricity costs.

Prospects for innovative cooling systems

The focus on optimizing energy-intensive data center systems has produced various concepts that may become reality in the future. One example is a project for a 65-story data center in Iceland, which its architects describe as a "giant data tower" 50 meters tall, housing hundreds of thousands of servers powered by "clean" energy. Because the chosen site is close to the Arctic Circle, the building will be designed for natural cooling.

A more promising direction, however, is to combine free cooling with reuse of the heat produced by data center operation. For example, in December last year the British telecommunications company aql officially opened a new data center that sends its "waste" heat to a district heating system serving local residential and commercial buildings.

Alfonso Capozzoli argues that collecting and reusing the waste heat produced by IT equipment is the next step in an energy efficiency strategy. Among other things, such measures can significantly reduce CO2 emissions. Besides central heating, possible uses of waste heat include absorption cooling, the Rankine cycle, and water desalination.
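The potential of heat reuse can be sized roughly, since almost all the electrical power drawn by IT equipment ends up as heat. The sketch below assumes a hypothetical 2 MW IT load running all year, a 60% share of that heat actually delivered to a heating network, and a hypothetical emission factor for the heat it displaces; none of these figures come from the article.

```python
HOURS_PER_YEAR = 8760


def recoverable_heat_mwh(it_load_mw: float, recovery_fraction: float,
                         utilisation: float = 1.0) -> float:
    """Annual heat (MWh) exported to a heat network, assuming all IT power
    becomes heat and recovery_fraction of it is delivered."""
    for name, value in (("recovery_fraction", recovery_fraction),
                        ("utilisation", utilisation)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    return it_load_mw * utilisation * HOURS_PER_YEAR * recovery_fraction


def avoided_co2_tonnes(heat_mwh: float, kg_co2_per_mwh: float) -> float:
    """CO2 avoided if the exported heat displaces heat that would otherwise
    have been produced at kg_co2_per_mwh (a hypothetical emission factor)."""
    return heat_mwh * kg_co2_per_mwh / 1000.0


if __name__ == "__main__":
    heat = recoverable_heat_mwh(2.0, 0.6)  # hypothetical 2 MW load, 60% recovery
    print(f"{heat:,.0f} MWh/year of heat")
    print(f"~{avoided_co2_tonnes(heat, 200.0):,.0f} t CO2/year avoided")  # hypothetical 200 kg/MWh
```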

The data center industry is therefore focusing on natural cooling sources combined with intelligent systems that optimize energy efficiency. Concepts that are still at the prototype stage could radically change how data centers are cooled in the future.

Source: https://habr.com/ru/post/330338/
