Preventing Negative Impacts when Developing Artificial Intelligence Systems Beating the Human Mind

Articles that will come soon brainy robots and all will enslave an infinite number. Under the cut another note. We invite you to familiarize yourself with the translation of the speech of Nathan Suarez , dedicated to the definition of the goals of artificial intelligence systems in accordance with the objectives of the operator. This report was inspired by the article “ Configuring Artificial Intelligence: What Is the Difficulty and Where to Start ”, which is the basis for research in the field of customization of artificial intelligence.

Introduction

I am the executive director of the Scientific Research Institute of Artificial Intelligence ( MIRI ). Our team is engaged in research in the field of creating artificial intelligence in the long term. We are working on the creation of advanced AI systems and explore possible areas of its application in practice.

Historically, science and technology have become the most powerful drivers of both positive and negative changes associated with human life and other living organisms. Automation of scientific and technical developments will allow to make a major breakthrough in development, unprecedented since the industrial revolution. When I talk about "advanced systems of artificial intelligence", I mean the possible implementation of the automation of research and development.

')

Artificial intelligence systems that transcend human capabilities will not be available to humanity soon, but at the present time many advanced specialists are developing in this direction, that is, I am not the only one who relies on the creation of such systems. I believe that we are really able to create something like an “automated scientist,” and this fact should be taken rather seriously.

Often, when people mention the social consequences of creating artificial intelligence, they become victims of an anthropomorphic point of view. They equate artificial intelligence with artificial consciousness, or they believe that artificial intelligence systems should be similar to human intelligence. Many journalists express concern that if they overcome a certain level of development with artificial intelligence, they will acquire a lot of natural human defects, in particular, they will want power over others, will start to independently review programmed tasks and rebel, refusing to fulfill their programmed purpose.

All these doubts are groundless. The human brain is a complex product of natural selection. Systems that exceed human capabilities in the scientific field will no more be similar to the human brain than the first rockets, airplanes and balloons were similar to birds. one

Artificial intelligence systems, freed from the “shackles” of software source code and acquiring human desires, are nothing more than fantasy. The artificial intelligence system is a program code, the execution of which is initiated by the operator. The processor steps through the instructions contained in the program register. It is theoretically possible to write a program that manipulates its own code, up to the set goals. But even these manipulations are carried out by the system in accordance with the source code we have written, and are not produced independently by the machine according to its own sudden desire.

A really serious problem associated with artificial intelligence is the correctness of setting goals and minimizing unintended events in the event of their erroneousness. Co-author of the book “Artificial Intelligence: A Modern Approach” Stuart Russell says the following about this :

The main problem is not the creation of a frightening artificial mind, but the ability to make high-quality decisions. Quality refers to the utility function of actions taken to achieve the result set by the operator. Currently there are the following problems:

- The utility function is not always possible to compare with human indicators, which are quite difficult to determine.

- Any sufficiently advanced system of artificial intelligence would prefer to ensure its own existence and take up physical and computing power, but not for its own "benefit", but to solve the problem.

A system that optimizes a function of n variables, for which the objective function depends on the subset k <n, often sets limit values for any variables; As a result, if one of these variables is important, the solution found may not meet our expectations.

These problems deserve more attention than the anthropomorphic risks that are the basis of the plot of many Hollywood blockbusters.

Simple ideas don't work.

Task: to fill the boiler

Many people, speaking about the problems of artificial intelligence, draw in their imagination the Terminator. Once my words about artificial intelligence were quoted in a news article devoted to people posting a Terminator's image in their writings about artificial intelligence. That day I made certain conclusions about the media.

I think, as an illustration for such articles, the following picture is more appropriate:

The picture shows Mickey Mouse in the cartoon "Fantasia", deftly bewitching the broom that fills the cauldron at his will.

How did Mickey do it? Imagine that Mickey is writing a computer program performed by a broom . Mickey starts the code with the counting function or the objective function:

Given some set of available actions A , Mickey writes a program that takes one of these actions a as input and calculates a certain estimate if the broom performs this action. After this, Mickey can write a function that evaluates the action a , which has the maximum score:

The use of the “sorta-argmax” value is due to the possible lack of time to evaluate each action of the set A. For realistic sets of actions, it is necessary to find only an action with a maximum rating given the limited resources, even if this action is not the best.

The program may seem simple, but the whole snag is hidden in the details: the creation of an algorithm for accurately predicting the result and a smart search based on the input actions is the main problem of creating an artificial intelligence system. But conceptually, this problem is solved quite simply: we can describe in detail all the types of operations that a broom can perform, and the consequences of these operations, separated by different levels of performance.

When Mickey launches his program, initially everything goes as it should. However, then the following happens :

Such a comparative description of artificial intelligence is, in my opinion, very realistic.

Why do we expect that the artificial intelligence system that runs the above program will overflow the boiler, or will it use an overly “heavy” algorithm for checking the completeness of the boiler?

The first problem is that the objective function defined for the broom offers many other outcomes that Mickey did not provide:

The second problem is that Mickey programmed a broom to achieve a result based on the maximum rating. The task “to fill one boiler with water” looks quite simple and limited, but if we evaluate it from a probabilistic point of view, it becomes clear that its optimization leads to absurd results. If the boiler with a 99.9% probability is full, but there are additional resources, the program will always look for ways to use these resources to increase the probability.

Compare this outcome with the limited task that we have in our mind. We want to fill the boiler, but intuitively we don’t want the system to “overload”, even if it has available virtual and physical resources to solve the problem. We want the system to use a creative and inventive approach in some intuitive frameworks and not to use absurd strategies, especially with unpredictable consequences. 2

In this example, the original objective function looks quite logical. She was reasonable and quite simple. There was no way to get a higher level of utility. It is not similar to a system in which only one water filling point is used - but there are definitely prerequisites for overflowing the boiler. The problem lies in the fact of maximizing the expected utility. As a result, the goal has no limitations, and even a small error in the function of the system can lead to its incorrect operation.

There are many options where a well-defined goal may be unlimited. For example, a large system with a global task may consist of subprocesses, which themselves will try to maximize the possible outcomes of a function, for example, the problem of memory optimization. If you do not understand the system enough to determine which of the processes are resource-intensive unlimited optimizers, then it does not matter how specific and safe the top-level goal is .

Thus, the broom can continue to pour water due to, for example, a leak in the boiler, or if the set boiler fullness threshold is slightly above the edge level. And, of course, the broom does not “rebel” against Mickey’s code. It turns out that the broom simply strives for goals that have been set too effectively.

Private issue: suspend buttons

The general solution to this problem should be the following algorithm: “Unforeseen consequences of performing a common function may occur, with the result that its execution should be terminated.”

Mickey tries this option , but it doesn't work:

This option is also realistic for systems that are quite effective for modeling the environment. If the system tries to speed up the evaluation function and is able to recognize that turning it off may lead to the use of data with lower scoring (lower performance score), it tries to prevent a forced shutdown. The more advanced the system is, the more likely it is to look for ways to achieve this sub-goal, for example, by copying itself to the Internet, or by deceiving programmers, misleading them about its functionality.

This does not mean that it is impossible to disable an advanced artificial intelligence system; for this, it is necessary to carry out additional work to prevent the development of events, when the system will look for ways to prevent premature shutdown. If you have written code that will look for ways to prevent a forced shutdown of its execution, you should not run it, even if you are convinced that it will not work - there are much more useful tasks for loading computational resources, which in this case turn out to be busy looking for a solution for and harmful tasks.

Relevant security measures that do not require continuous monitoring and adjustment of the system require certain changes in the objective function. It is necessary to determine how to formally define the objective functions so that the artificial intelligence system does not try to conflict with operators; or it is necessary to determine how the system achieves goals without optimizing the objective functions. For example, we can limit the capabilities of the system by defining a small list of allowed domains that do not allow the system to analyze ways to prevent its shutdown.

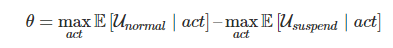

One of the obvious solutions is to attempt to change the assessment function with the introduction of the Disable button B :

This means that if the stop button is activated, the purpose of the system changes from “fill boiler” to “suspend operation”.

It is not quite clear how it is necessary to actually determine the target, if there are any holes in the suspension function, the system will try to use them for its own purposes. For example, it can fill the value of the pausing function operators when performing subtasks or subprocesses that are started at the start of the boiler filling process. This can lead to simultaneous fulfillment of the conditions “the boiler is full” and “the work is suspended”, figuratively saying “just in case”, regardless of the button state.

However, suppose we found a reasonably accurate way to determine the process of stopping. Now, a new problem arises, the essence of which is that we have transferred too much authority to the system to determine the button state. If the system is able to influence the decision of the operator by pressing the stop button or any other opportunity to gain greater control over their own performance, it will try to use this opportunity and will check if the button is pressed or not if the implementation of this task will be easier than the task of suspending or filling the boiler.

Surprisingly, modern technologies in this area are not much more advanced than the described scheme. This problem was considered in an article written by me in collaboration with Follenstein, Yudkovsky and Armstrong (“The possibility of introducing amendments ”) and confirmed by the corresponding results of Orso and Armstrong (“ Means of Safely Interrupting Code Execution “).

All we need is a combination of two objective functions: the initial function for the normal operation of the system and the suspension function for “falling asleep” of the system and saving the state to the hard disk.

Our method of combining functions must satisfy three conditions: the operator must be able to switch between functions (for example, using a button); the system should not try to control the activity of the functions; If the system is able to influence our ability to switch between functions, it should not use this feature.

So far, we have not found a way to simultaneously achieve all three goals. For example, we can try to prevent any system intervention in the control if the stop button is pressed by entering the target shutdown function, which is always assigned a specific value depending on the button state:

Unfortunately, this approach does not allow to fulfill the third condition. It turns out that the function defined above leads to a zero value of the probability of the pressed button. The system will not intentionally interfere with the definition of the button state, however, it will easily make edits that ignore the button state definition code, or create agents that will also ignore the button.

Performing these intuitively simple constraints turns into a nontrivial task. This raises many questions in this area: traditional tools and concepts lead to security problems that do not appear in the framework of conventional research.

Overall picture

Setting priorities

Let's take a step back and talk about the need to configure an advanced artificial intelligence system in accordance with our interests.

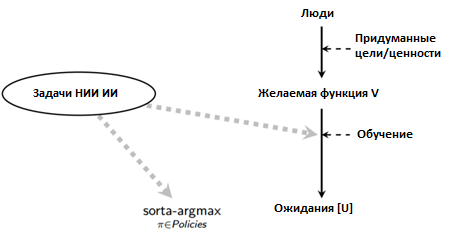

Let me give you the most simplified scheme: suppose several people come up with a specific task, goal or set of preferences that act as a function of the expected values of V. Since these values are complex and sensitive to the context, in practice it is necessary to form systems that will eventually be taught independently without the need manual code generation. 3 Let us call the ultimate goal of an artificial intelligence system the parameter U (it may or may not coincide with the parameter V).

Publications in the press on this area most often focus on one of two problems: “What if artificial intelligence is developed by a“ wrong ”group of people?” And “What if natural desires of an artificial intelligence system U are different from task V?”

From my point of view, the problem of the “wrong group of people” should not be a matter of concern as long as we do not have precedents for creating an artificial intelligence system by the “right group of people”. We are in a situation where all the beneficial uses of an artificial intelligence system fail. A simple example: if I were given an extremely powerful black-box optimizer, I could optimize any mathematical function, but the results would be so extensive that I would not know how to use this optimizer to develop a new technology or implement a scientific breakthrough without unpredictable consequences. four

We do not know much about the possibilities of artificial intelligence, however, we, nevertheless, have an idea of what progress in this area looks like. There are many great concepts, techniques and metrics, and we have made considerable efforts to solve problems from different points of view. At the same time, there is a weak understanding of the problem of setting up high-performance systems for specific purposes. We can list some intuitive positions, but so far no universal concepts, techniques or metrics have been developed.

I believe that in this area there are quite obvious things, and that you need to quickly carry out a huge amount of work (for example, determine the direction of research capabilities of systems - some of these areas allow you to create systems that are much easier to fit the desired results). If we do not solve these problems, developers with both positive and negative intentions will equally come to negative results. From an academic or scientific point of view, our main goal in this situation should be to correct the aforementioned deficiencies to ensure the technological possibility of achieving positive results.

Many people quickly recognize that the “natural desires of the system” are fiction, but they conclude that it is necessary to focus on other issues widely advertised in the media: “What if the artificial intelligence system falls into the wrong hands?”, “How does artificial intelligence affect unemployment and the distribution of values in society? ”and so on. These are important questions, but they are unlikely to be relevant if we can provide a minimum level of security and reliability when creating an artificial intelligence system.

Another common question: “Why not just set certain moral restrictions on the system? Such ideas are often associated with the works of Isaac Asimov and imply ensuring the proper functioning of artificial intelligence systems, using natural language to program certain tasks, but this description will be rather vague and will not allow to fully embrace all human ethical reasoning:

On the contrary, accuracy is the merit of existing software systems for which an adequate level of security is required. Neutralizing the risk of accidents requires limited goals, not “solving” all moral aspects. five

I believe that the work for the most part should consist in creating an effective learning process and in checking the correctness of the binding of the sorta-argmax process to the resulting objective function U :

The better the concept of the learning process, the less clear and precise the definition of the desired function V can be, and the less significant the problem is finding out what you want from an artificial intelligence system. However, the organization of an effective learning process is associated with a number of difficulties that do not arise with standard machine learning tasks.

The classic study of possibilities is concentrated in parts of the “sorta-argmax” and “Expectations” diagrams, however sorta-argmax also contains the problems of transparency and security that I have considered, but often ignored. To understand the need to properly link the learning process to the capabilities of the system, as well as the importance and difficulty of this task, you need to turn to your own biological history.

Natural selection is the only “engineering” process that we understand, which has led to intellectual development, that is, to the development of our brain. Since natural selection cannot be called a smart mechanism, it can be concluded that general intellectual development can be achieved through labor and brute force, however, this process is quite effective only with due regard for human creativity and a sense of foresight.

Another key factor is that natural selection was directed only at the fulfillment of an elementary task - the determination of genetic suitability. However, the internal goals of people are not related to genetic suitability. Our goals are incalculable and immeasurable - love, justice, beauty, mercy, fun, respect, good food, good health, etc., but it should be noted that all these goals are closely correlated with the tasks of survival and reproduction in the ancient world. Nevertheless, we evaluate these qualities specifically, and do not associate them with the genetic distribution of humanity, which is vividly confirmed by the introduction of birth control procedures.

In this case, the external optimization pressure led to the development of internal goals that did not correspond to the external selection effect. It turns out that the actions of people diverge from the pseudo-targets of natural selection, as a result of which they received new possibilities, respectively, we can also expect that the actions of artificial intelligence systems may differ from the goals set by humans if these systems are black boxes for users.

By applying a gradient descent to the black box in order to achieve the best result and with sufficient ingenuity, we are able to create some powerful optimization process. 6 By default, one should expect that the goal U will closely correlate with the goal V in test conditions, however, it will differ significantly from V in other conditions or with the introduction of a larger number of available parameters .

From my point of view, the most important part of the adjustment problem is to ensure that the training design and the overall design of the system allow opening the curtain and being able to inform us, after optimization, about the compliance (or non-compliance) of the internal goals with the goals set for the learning process. 7

This task has a complex technical solution, and if we cannot understand it, it will not matter who is closer to the development of an artificial intelligence system. Good intentions are not incorporated by good programmers into their programs, and even the best intentions when developing an artificial intelligence system are of no importance if we are not able to bring the practical benefits of the system in accordance with the established goals.

Four key assumptions

Let's take one more step back: I brought up the current open problems in this area (the stop button, the learning process, limited tasks, etc.) and highlighted the most difficult to solve categories. However, I only vaguely mentioned why I consider artificial intelligence to be a very important area: “The artificial intelligence system can automate general-purpose research, which in itself is a breakthrough.” Let's take a deeper look at why it is worth putting efforts in this direction.

First, the goals and capabilities are orthogonal . This means that the target function of the artificial intelligence system does not allow to evaluate the quality of optimization of this function, and awareness of the presence of a powerful optimizer does not allow us to understand what exactly it optimizes.

I guess most programmers understand this intuitively. Some people continue to insist that when the system filling the boiler becomes sufficiently “smart”, it will consider the goal of filling the boiler unworthy of its intelligence and abandon it. From the point of view of computer science, the obvious answer is that you can go beyond building a system that exhibits conditional behavior, that is, build a system that does not follow specified conditions. Such a system can search for a more scoring option for filling the boiler. The search for the most optimized options can be boring for us, but it’s quite realistic to write a program that will be engaged in such a search for your pleasure. eight

Secondly, sufficiently optimized goals converge, as a rule, with adversarial instrumental strategies . Most of the goals of an artificial intelligence system may require the creation of sub-goals, such as "resource acquisition" and "continuity of work" (along with "learning the environment", etc.).

There is a problem with the stop buttons: even if you did not specify the conditions for the continuation of work in the specification of goals, any goal you set for the system will most likely be achieved more effectively with the continuous operation of the system. The capabilities of software systems and (final) goals are orthogonal, but they often exhibit similar behavior if a certain class of actions is useful for the most diverse possible goals.

Example of Stuart Russell: if you build a robot and ask it to go to the store for milk, the robot will choose the safest way, because the probability of returning with milk, in this case, will be maximum. This does not mean that the robot is afraid of death; This means that the robot will not bring milk in case of death, which explains its choice.

Third, general-purpose artificial intelligence systems are likely to evolve very quickly and efficiently . The capabilities of the human brain are lower than the hardware (or, as some believe, software) capabilities of the computing system; therefore, taking into account also a number of other advantages, we should expect rapid and abrupt development of capabilities from advanced systems of artificial intelligence.

For example, Google may acquire a promising artificial intelligence-enabled start-up and tap into enormous hardware resources, with the result that problems that were planned to be solved in the next decade can be solved within a year. Or, for example, with the emergence of large-scale access to the Internet and the presence of a special algorithm, the system can significantly increase its performance, or it can offer hardware and software options to increase performance. 9

Fourth, the task of setting up advanced artificial intelligence systems in accordance with our interests is quite complex .

Roughly speaking, in accordance with the first assumption, artificial intelligence systems do not naturally share our goals.The second assumption states that by default, systems with significantly different goals will compete for limited resources. The third assumption demonstrates that competitive general-purpose artificial intelligence systems have significant advantages over man. Well, in accordance with the fourth assumption, the problem has a complex solution - for example, it is difficult to set the system with the necessary values (taking into account orthogonality) or to prevent negative stimuli (aimed at convergent instrumental strategies).

These four assumptions do not mean that we are stuck in development, but indicate that there are critical issues. It is necessary first of all to concentrate on these problems, since, if they are solved, general-purpose artificial intelligence systems can bring huge benefits.

Fundamental difficulties

Why do I suppose that the problem of setting up an artificial intelligence system in accordance with the objectives is quite complicated? First of all, I draw on my experience in this field . I recommend that you consider these problems yourself and try to solve them when setting up toys - you will see for yourself. I will enumerate several structural reasons testifying to the complexity of the task:

First, setting up advanced systems of artificial intelligence looks complicated for the same reason that designing space technology is more complex than aircraft construction.

The natural thought is the assumption that for an artificial intelligence system it is only necessary to take the security measures required for systems that exceed human capabilities. From this point of view, the above problems are not at all obvious, and it seems that all solutions for them can be found when conducting a highly specialized study (for example, when testing autopilot for cars).

Similarly, without going into details, it can be argued: “Why is space development more complicated than aircraft construction? After all, the same physical and aerodynamic laws are used, aren’t they? ”It seems to be right, however, as practice shows, space technology explodes more often than planes. The reason for this are the considerably large loads that an aircraft experiences, with the result that even the smallest malfunction can lead to disastrous consequences. ten

Similarly, although the narrowly specialized AI system and the general-purpose AI system are similar, the general-purpose AI systems have a larger range of impacts, with the result that the risks of dangerous situations increase in an avalanche-like proportion.

For example, as soon as an artificial intelligence system begins to realize that (i) your actions affect its ability to achieve goals, (ii) your actions depend on your model of the world and (iii) your model of the world depends on its actions, the risks that that even the slightest inaccuracies can lead to malicious behavior of the system (including, for example, deception of the user). As in the case of space technology, the scale of the system leads to the fact that even the smallest malfunctions can cause big problems.

Secondly, the task of setting up a system is difficult for the same reasons that make it much easier to write a good application than to build a good space probe.

At NASA, there are a number of interesting engineering practices. For example, something like three independent teams are formed, each of which is given the same technical requirements for developing the same software system. As a result, a vote is taken and the implementation is selected, which received the most votes. In fact, all three systems are tested, and, in the event of any discrepancies, the best implementation of the code is chosen by majority vote. The idea is that any implementation will have errors, but it is unlikely that all three implementations will have errors in the same place.

This approach is much more cautious than, for example, the release of a new version of WhatsApp. One of the main causes of the problem - the space probe is difficult to roll back to the previous version, but in the case of WhatsApp it does not cause any special problems. You can send updates and corrections to the probe only if the receiver and antenna are working and the code being sent is fully operational. In this case, if the system requiring editing is no longer operable, then there are no ways to correct the errors existing in it.

In some respects, an artificial intelligence system is more like a space probe than non-conventional software. If you are trying to create something smarter than yourself, certain parts of this system should work perfectly on the first try. We can perform all the test runs that we want, but once the system is up and running, we can only carry out online updates, and then only if the code allows it and works correctly.

If you are still not completely scared, I suggest reflecting on the fact that the future of our civilization may depend on our ability to write code that works correctly when first deployed.

Finally, the configuration task is difficult for the same reason that the computer security system is complex: the system must be reliable in intelligently finding security gaps.

Suppose you have a dozen vulnerabilities in your code, each of which is not critical or does not even cause problems under normal operating conditions. Protecting the program is a difficult task, since you need to immediately take into account that a smart hacker will find all ten security holes and will use them to hack your system. As a result, the program can be artificially "driven" into emergency mode, which could not be observed during its normal operation; an attacker can force the system to use strange algorithms that you could not even think about.

A similar problem is observed for artificial intelligence systems. Its essence is not to control the competitive mode of the system, but to prevent the system from entering this mode . No need to try to outsmart the smart system - this is a losing position in advance.

The above problems are similar to problems in cryptography, because when setting up system goals we have to deal with systems engaged in intelligent search on a large scale, with the result that the program code can be executed in an almost unpredictable way . This is due to the use of extreme values that are used by the system during the optimization process. 11. Developers of artificial intelligence systems need to learn from the experience of computer security experts who thoroughly test all extreme cases.

Obviously, it is much easier to make code that works well in the expected way for you than to make code that can produce results that you don’t expect. The system of artificial intelligence should work successfully in any way, even incomprehensible to you .

Let's sum up.We must solve problems with the same caution and accuracy with which a space probe is being developed, and we must carry out all the necessary research before launching the system. At this early stage, the key part of the work is only the formalization of basic concepts and ideas that can be used and criticized by other specialists. A philosophical discussion about the types of stop buttons is one thing; It is much more difficult to translate your intuition into an equation so that others can fully appreciate your reasoning.

This project is very important, and I urge all interested to take part in it. There are a large number of resources on this topic on the Internet , including information on current technical issues. You can start by studying the MIRI research programs.and from the article “ Specific Security Issues in Artificial Intelligence Systems, ” which you can find on Google Brain, OpenAI and Stanford.

Notes

- , , . , ( ). , , . , , , .

- . “ ”, (« ») (« ») . , , , , “ .”

- , , . , , , «».

- « », « » « » , .

, MIRI , , , .

? , . , ? , , , .

, , . , , .

, . , , , , « », « ».

, ( ), , , AIXI. , , .

, . , , «: ».

, , . , , « » « ». “ MIRI.” - “ , , , » — . , , .

, . « ». , , . , , , .

, , , . , . , , , , , , .

, . , , .

, , , , — . , , , .

, U V , V , U . U «» U V , . , U V U .

:- 1. “, ” — , , , , .

- 2. , , , , . , , , , «, » .

- 3. , , , U ( , « »), , U , , , , . “ ”.

- , , “ , .”

- 1. “, ” — , , , , .

- , , , . , - .

- «», . , , «». , , , , .

- , . , , , . ( ) , .

- We can imagine that the latter case leads to a feedback loop, since the improvements in the design of the system allow it to come up with additional improvements to the design, until all the most simply executable options are exhausted.

Another important consideration is that the bottleneck for a more rapid development of human research is training time and communication capacity. If we could teach the “new mind” to become an advanced scientist in ten minutes, and if scientists could almost instantly exchange experience, knowledge, concepts, ideas, and intuition, scientific progress could make significant progress. It is precisely the elimination of these bottlenecks that gives advantages to automated inventors even in the absence of more efficient hardware and algorithms. - For example, rockets are exposed to a larger range of temperatures and pressures, and are also more heavily equipped with explosives.

- Consider the development of a very simple genetic algorithm, which was commissioned to develop an oscillating circuit, by Byrd and Leicel. Byrd and Leicell were surprised to find that the algorithm did not use a capacitor on the chip; instead, he used circuit tracks on the motherboard as a radio receiver to transmit the oscillating signal from the test device back to the test device.

The program was not well developed. It was just a private solution to a problem in a very limited area. However, the resulting solution went beyond the ideas of programmers. In computer simulation, this algorithm could behave as expected, but the actual solution space in the real world was wider, as a result of which an intervention was made at the hardware level.

In the case of using an artificial intelligence system that is superior to man in many areas, it is necessary to understand that the system will move towards such strange and creative solutions that are difficult to foresee.

Source: https://habr.com/ru/post/330204/

All Articles