British satellite imagery 2: how it really happened

Let me say up front that this post carries little technical weight and should be read strictly in "Friday story" mode. The text is also full of English terms: some of them I don't know how to translate, and some I don't want to.

Summary of the first part:


1. DSTL (the Defence Science and Technology Laboratory of the UK Ministry of Defence) ran an open competition on Kaggle.

2. The competition ended on March 7, and the results were announced on March 14.

3. Five of the top ten teams were Russian-speaking, and all of them were members of the Open Data Science community.

4. The $100,000 prize fund was split between the brutal Malaysian Kyle, the team of Roman Solovyov and Arthur Kuzin, and Sergey Mushinsky and me.

5. Afterwards, blog posts were written (my post on Habr, Arthur's post on Habr, and the post Seryoga and I wrote on Kaggle), talks were given at meetups (my talk at AdRoll, my talk at H2O.ai, Arthur's talk at Yandex, and Eugene Nekrasov's talk at Mail.Ru Group), and a tech report was posted on arXiv.

The organizers liked the quality of the solutions, but did not like how much they had to shell out for the competition: running it on Kaggle cost $500k, while the prize money was only $100k.

The logical conclusion was to build their own British Kaggle, with blackjack and all the rest. The "engines of science" at the UK Ministry of Defence and MI6 lack the knowledge and skills for serious AI research, so instead of holding a closed event for their own people, they organized an open competition with murky rules: anyone could participate, but only citizens and residents of countries above a certain cutoff in the 2014 corruption rating could claim a prize. Why 2014 and not something more recent? That is still unclear. One conspiracy theory holds that 2014 was chosen precisely to exclude the Chinese while keeping the Indians. But in the 2016 rating India and China have the same score, so that trick would not have worked.

The engines of science were not up to writing a Kaggle-like website themselves, so they hired a contractor: the corruption-tainted British company BAE Systems.

To receive the prize money for the competition described in the first part, we had to hold a Skype call with DSTL representatives and briefly describe our solution. We presented it and described it. They were frankly out of their depth, and this is partly what my self-confident assumption that they lack top-flight specialists is based on. Still, these British scientists are quite reasonable people, and at the end of our talk their boss mentioned, as an advertisement, that they were no fools either: they had their own platform for running competitions, and two challenges had just started, one in Computer Vision and the other in Natural Language Processing, each with a prize fund of 40 thousand pounds (20k, 12k, 8k). We replied that we were aware of them and politely asked in turn: "Why do you have discriminatory rules?" He replied that this was all nonsense, that it was not them but the contractor running the competition who had messed things up, that DSTL was also against discrimination, that they would change everything, and that there would be happiness for everyone.

Later we asked by email how seriously they intended to stick to this party line, and received the following reply:

- It is likely to be the case for datasciencechallenge.org. It was based on legal grounds, but the following feedback was given. Please do not let it put you off.

But in fact, none of this mattered. The point is that I was badly lacking in Object Detection knowledge and had spectacularly failed interviews because of it. For example, at Nvidia, interviewing for a self-driving car role, I failed because I could do Image Classification and Image Segmentation, but not Object Detection, which is very important for many business applications of computer vision. And as you know, it is best to learn in battle, so I needed either to build a project of my own in my spare time or to work on some competition.

It is worth adding that the fish classification challenge, where object detection was needed, went badly for me because of this lack of knowledge, and the other challenges that had already started but not yet finished, namely cervical cancer, counting fur seals, and ImageNet 2017, also depressed me, feeding a sense of my own helplessness.

Summarizing:

1. It was known in advance that Russian citizens had no shot at the cash prize. When the whole story later made the news, this detail got lost, since the "they're beating our guys" angle is much better for ratings. Yes, there was some hope that the rules would change, but "a promise is not a marriage", so nobody really counted on it.

2. Per unit of time, competitions give 10-100x more specific machine learning knowledge than academia or a job. I badly needed to understand Object Detection, and I also wanted to have a pipeline for this type of task, since pipelines tend to migrate from problem to problem, turning many tasks from "something is sort of understandable, but not really; I'll be fiddling with it for a couple of months" into "the task is complicated, so getting the pipeline to work will take not one evening, but two."

Problem statement

What exactly was required? To build an algorithm that takes satellite images of an unnamed city in the UK and finds motorcycles, cars, and buses of certain types in them. Judging by the shadow of a Ferris wheel, people guessed that the unnamed city was London.

In the satellite challenge described in the first part, the data was a real headache:

1. The images came in a heap of different spectral bands and resolutions, and the channels were shifted both in time and in space.

2. There was little data, and the train set, public test, and private test came from different distributions.

3. The classes were highly unbalanced. For example, in the train set there was one pixel of the vehicle class for every 60,000 pixels of the farmland class. In fact, in our solution Sergey and I completely ignored the vehicles and did not bother with their presence in the Nigerian jungle where all the action took place, precisely because of the data problems with this class.

It was fascinating and very interesting, but everyone got worn out. The task had a twist, and more time went not into research but into fighting engineering mud, partly because of the nature of the data and partly because of the organizers' unhealthy creativity.

In this challenge it was the other way around:

1. The data was plain RGB, shot from the same altitude and at almost the same time.

2. Train and test consisted of 1200 images (600 each), each 2000x2000 pixels at a resolution of 5 cm/pixel, so each image covers roughly 100 m x 100 m. That is enough data to solve the problem, but not so much that you need a GPU cluster and weeks of training.

In standard Object Detection benchmark datasets, on which experts compare algorithms, objects are labeled with bounding boxes: every object in the train set comes with the coordinates of a rectangle and a class label, and the model in turn predicts rectangle coordinates, class labels, and how confident it is in each prediction.

The problem with preparing such datasets is that drawing these rectangles takes a long time, and the datasets need to be large, so even if you hire labelers cheaply, it comes out slow and expensive. An alternative is so-called weak labeling, where instead of a box, a dot is placed at the center of the object.

The British took exactly this alternative path and marked objects with dots. And unlike the standard approach, where every object gets a label, they got creative and labeled according to the principle "if the participants' algorithms will probably find this object, we mark it; but this car is half hidden by a tree crown, so we won't." This should not be done, as it greatly complicates model training. But we will put these distortions down to their inexperience.

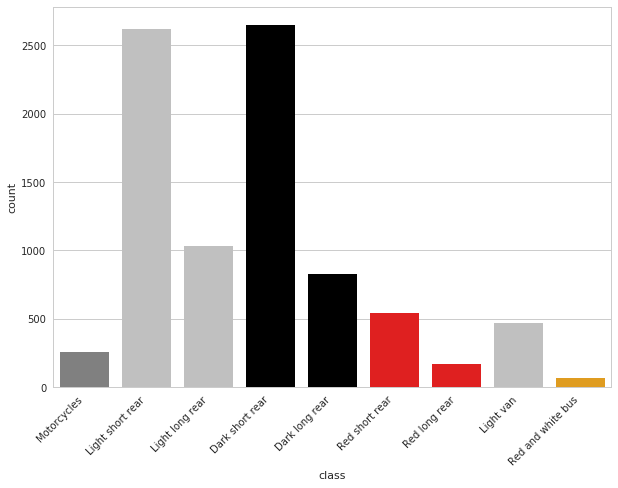

There were 9 classes, namely:

- A - any motorcycles and scooters

- B - short light cars

- C - long light cars

- D - short dark cars

- E - long dark cars

- F - short red cars

- G - long red cars

- H - white vans

- I - red and white buses

So it is not enough to find a car: you also have to classify it correctly, and not every vehicle needs to be found. Blue, green, and yellow vehicles and the like were of no interest to the British.

The classes are unbalanced, but the rarest ones, namely motorcycles, buses, and red cars, have distinctive features, so they did not give the network much trouble.

(Picture provided by Vladislav Kassym)

Metrics

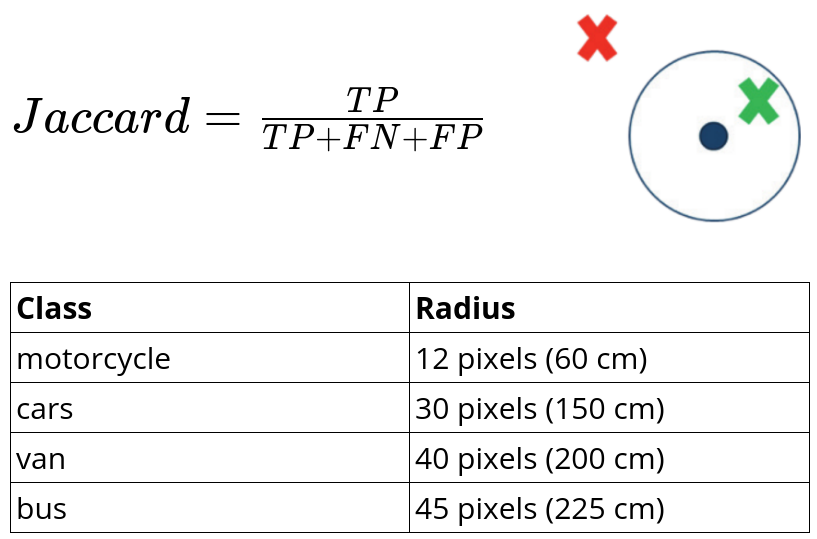

Model accuracy was measured with Jaccard similarity, where a prediction counted as a True Positive if the predicted point fell close enough to a ground-truth label; since object sizes differ, the distance threshold depended on the class.
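A minimal sketch of how a point-based Jaccard score of this kind could be computed, assuming greedy one-to-one matching of same-class points, closest pairs first. The per-class radii in `RADIUS` are hypothetical placeholders: the actual thresholds and matching rules used by the organizers are not given here.

```python
import math

# Hypothetical per-class matching radii in pixels (placeholders, not the organizers' values).
RADIUS = {"A": 10.0, "B": 20.0, "C": 25.0, "D": 20.0, "E": 25.0,
          "F": 20.0, "G": 25.0, "H": 30.0, "I": 50.0}


def point_jaccard(preds, truths, radius=RADIUS):
    """preds, truths: lists of (x, y, cls). Returns TP / (TP + FP + FN)."""
    # Collect every same-class (prediction, label) pair that lies within the class radius.
    candidates = []
    for i, (px, py, pc) in enumerate(preds):
        for j, (tx, ty, tc) in enumerate(truths):
            if pc == tc:
                d = math.hypot(px - tx, py - ty)
                if d <= radius[pc]:
                    candidates.append((d, i, j))
    # Greedy one-to-one matching: closest pairs are matched first,
    # and each prediction and each label can be used at most once.
    candidates.sort()
    used_p, used_t = set(), set()
    for _, i, j in candidates:
        if i not in used_p and j not in used_t:
            used_p.add(i)
            used_t.add(j)
    tp = len(used_p)
    fp = len(preds) - tp   # predictions with no matching label
    fn = len(truths) - tp  # labels no prediction reached
    denom = tp + fp + fn
    # Convention for the empty case (no predictions, no labels): perfect score.
    return tp / denom if denom else 1.0
```

Greedy matching is a simplification: an optimal assignment (for example, Hungarian matching) could give slightly different counts in crowded scenes.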

What did we have? A task that is standard on the one hand, and on the other hand, the devil knows how to approach it. One option was to lean on a team: after all, the people in the Open Data Science Slack are, for the most part, very strong in machine learning. But the fact that the organizers treated citizens of Russia, Ukraine, and Belarus as second-class people, while there was a sea of other competitions with no discrimination in the rules and more attractive cash prizes, did nothing for anyone's motivation to start on it. In addition, the intense grind of the satellite challenge had led many to burn out, take a break from competitions, and do more important things, namely sports and personal life.

Solving problems in a team is more interesting and more effective, so the plan was to figure out the task myself first and then recruit others. Little time was left: about a month.

Once I dove into the Object Detection literature, it became clear that there are many papers on detection with boxes and few on detection with points at the objects' centers of mass. Likewise, there are plenty of code implementations that authors publish for the box case in every framework, but a cursory search turned up nothing for points.

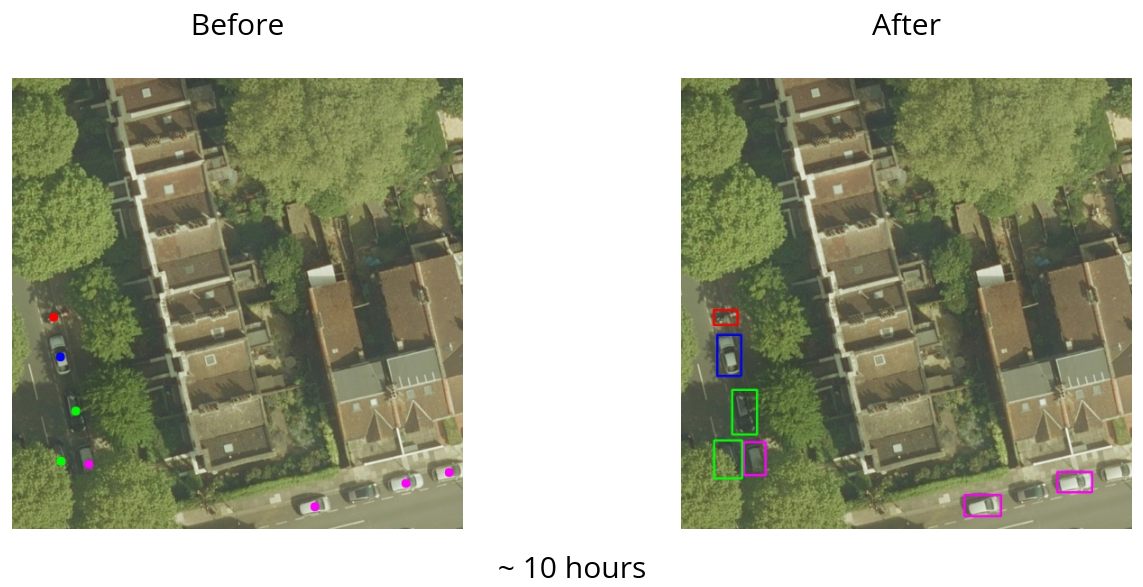

So the first step was to reduce the unfamiliar task to a standard one: I spent a couple of days at work drawing boxes around every object in the train set. I used sloth for this; only after I had labeled everything did it occur to me to ask around, and people in the chat suggested alternative tools, so for later tasks I used labelImg.

The labeling took about ten hours, which is quite a lot, but this investment later made it easy to get to the top and, in the end, to win a prize. It solved two problems at once. First, it became clear what exactly to focus on in the literature and whose code to adapt for this task. Second, and just as important, it immediately motivated people in the chat to join the work. Many did not, because, as I mentioned above, equally interesting challenges on cervical cancer and counting fur seals were running in parallel. For example, Konstantin Lopukhin said right away that he had little time for the British cars task, and he did not lose out: he finished second in the seals challenge (his talk about his solution at Yandex). Sergey Mushinsky and Vladislav Kassym wanted to try their hand at detecting British cars. As it turned out, all three finished in the top 10.

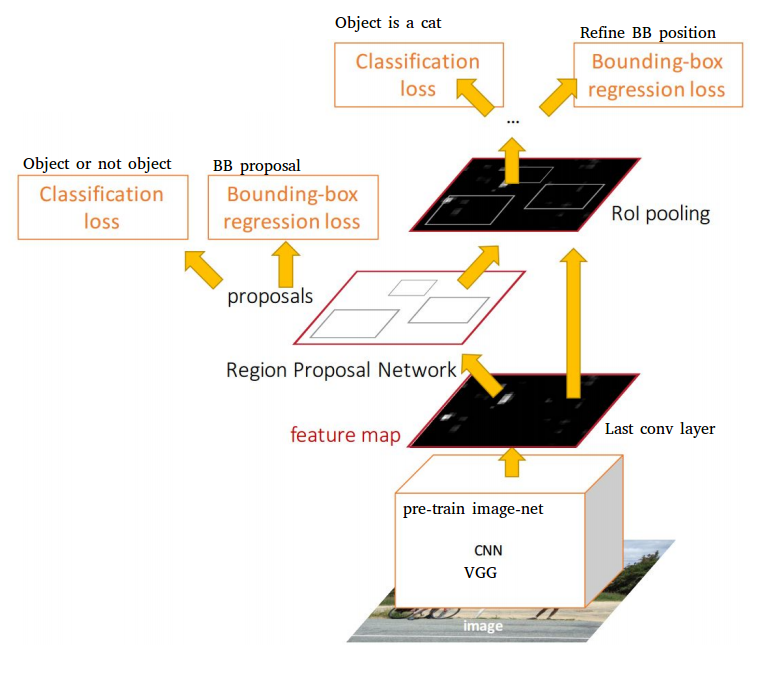

So, a month before the end, the boxes are drawn and something needs to be done with them. There are two main families of network architectures for detection:

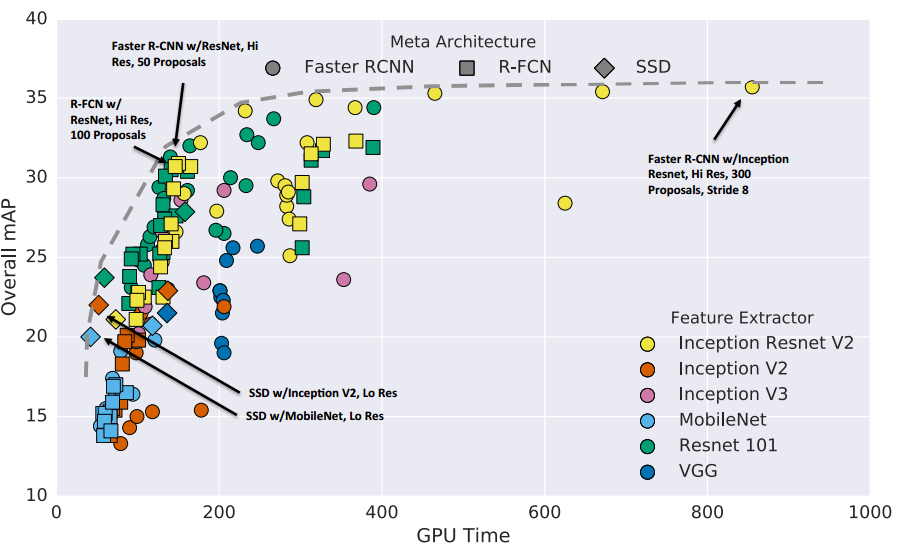

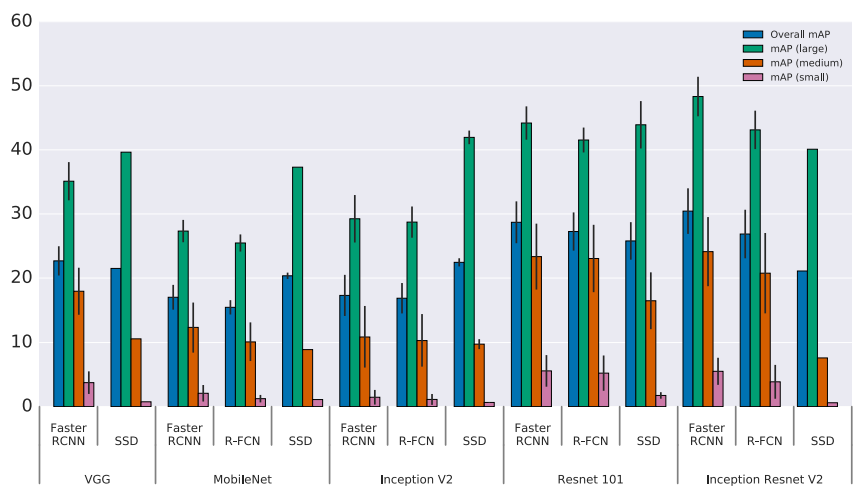

1. The family of two-stage detectors: R-FCN, Fast-RCNN, Faster-RCNN. These are networks whose pipeline splits into two parts: the first finds regions of the image that correspond to objects, and the second classifies each region to determine which object it contains.

2. The family of one-stage detectors: YOLO, YOLO9000, SSD. In these architectures, region search and classification happen in a single pass.

A reasonable question arises: which is better, and when? In every paper, the authors claim that they tear the competition to shreds. This is understandable: if you don't do aggressive academic cherry-picking, overfit to standard benchmarks, and claim State Of The Art, you won't get a grant, your grad students won't graduate, and so on with all the other joys of today's academia. (There was a very nice recent article on this topic that gave historical background on how we got here: "Is it a staggeringly profitable science?")

But these are the academic world's own local problems. Here we have a clearly defined task, a metric, and a leaderboard on which participants show serious results, while I, apart from the boxes I drew, have nothing at all. With plenty of time and compute, one could run every architecture on our data and see what happens, but of course there was neither the time nor the desire. But! I was not the only one tormented by the question of which networks are good and when. A couple of years ago, Object Detection was done exclusively by scientists, and despite the power of the approach, it had nothing to do with business. The reason was that, unlike classification and segmentation, detection ran at the speed of an Estonian tortoise, so real-time detection was out of the question. But that was a couple of years ago, which by Deep Learning standards is ages. Since then many improvements have been made, and companies now actively use Object Detection to earn their trillions.

At some point, experts at Google, taking advantage of their ample computing resources, wrote an 11-author review paper that on the one hand contains not a single new idea, and on the other is tremendously valuable, at least to me. In it, the authors ran Faster RCNN and SSD with various base feature extractors and analyzed the results for speed and accuracy.

The paper says that Faster RCNN is more accurate, especially on small objects, while SSD makes predictions faster. And the difference in prediction speed between them, in my opinion, falls right on the line between "you can't push this into production" and "you can." From my armchair, I can't really imagine which tasks Faster RCNN could be pushed into production for, but I'd be happy to hear success stories in the comments.

I took this paper at its word, not because I like taking things on faith, and not because Google cares more about its name when writing papers and therefore sticks closer to the facts, but simply because there was not much choice: very little time was left before the end of the competition.

For the reason above, namely better accuracy on small objects (and the motorcycles in the images were extremely small), the choice fell on Faster RCNN.

Cool. I read the literature and things started to clear up, but reading was not enough. Having some outlines form in your head and the mosaic gradually coming together into a big picture will not put you on the leaderboard. You need to write code. No code, no conversation.

Detection is not classification: it is hard to write from scratch, with a bunch of nuances and a sea of mystical parameters that the authors tuned for months, so usually one takes someone else's code and adapts it to one's own data. There are many frameworks for neural networks, each with its pros and cons. When I started on this task, I felt most comfortable with Keras, which Sergey and I had used to pull off the previous competition. No sooner said than done: I took a Keras implementation of Faster RCNN and adapted it to our data. Training starts, something converges, but it is sooooo slow. In other tasks you get used to dozens of images per second flying through the network, but here it was nothing like that. This is a combination of Faster RCNN being slow, TensorFlow not being a speed champion, and the overhead Keras adds as a wrapper.

Something trained, something converged, then a prediction, a leaderboard submission, and I am tenth with a score of 0.49. Being in the top ten is good, but the people in the top three have 0.8+, so the gap is quite large, and that is not so good.

Keras is nice: clean code and an understandable code base, but it is slow, and data parallelism takes more pain than you would like. All winter I worked on the previous task using one GPU, and closer to the end I bought a second one. Two GPUs are very convenient: at a minimum, you can test two ideas at the same time, and even if you have no ideas, the hardware doesn't have to sit idle, since you can always mine cryptocurrency on it, which is most likely what many Deep Learning competitors do whenever their GPUs are not training models.

In this case there was just one idea: train Faster RCNN. The network trains slowly, I have two GPUs, and the Keras implementation is slow and convoluted; it can be parallelized, but painfully. A lot of work is currently going into making data parallelism across several GPUs doable with the little finger of your left foot. As far as I understand, the developers of mxnet and PyTorch have already handled this successfully.

In this case, the cards fell so that the choice was mxnet. It really is fast by itself, and it really does parallelize with the little finger of your left foot. Those were the pluses; the minus is the feeling that it was written by aliens. Perhaps this is because both mxnet itself and its Faster RCNN implementation were unfamiliar to me, but still, the moment you step off the beaten path you are in a dark forest: there is not enough documentation, and nothing is worked through from start to finish. What saved me was that Sergey and Vladislav were doing exactly the same thing, so I was hacking through the mxnet wilds as part of a group rather than alone.

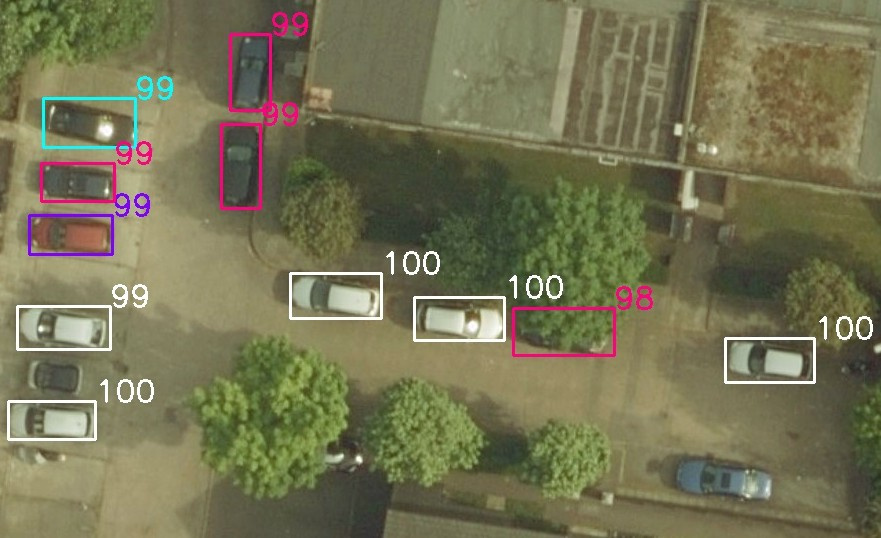

The technical part could end here. Applying the mxnet implementation of Faster RCNN immediately brought the score into the 0.8+ range. Training was done on 1000x1000 crops augmented with rotations by multiples of 90 degrees plus reflections (the transformations of the D4 group). At prediction time, the original 2000x2000 images were cut into overlapping 1000x1000 tiles. Non Maximum Suppression was then applied to handle the same box being predicted in several tiles. Finally, each box was replaced by its center, which went into the final prediction.
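The augmentation and inference scheme described above can be sketched as follows, assuming NumPy, square tiles, and a detector wrapped as a function that returns boxes in tile coordinates. The 500-pixel stride, the IoU threshold of 0.5, and the helper names (`d4_transforms`, `tile_offsets`, `nms`, `predict_points`, `fake_detect`) are illustrative assumptions, not the settings of the actual solution; `fake_detect` is a toy stand-in for the mxnet Faster RCNN model.

```python
import numpy as np


def d4_transforms(img):
    """All 8 elements of the D4 group: 4 rotations by 90 degrees, each with and without a flip.

    For detection training the box coordinates must be transformed the same way (not shown).
    """
    out = []
    for k in range(4):
        rot = np.rot90(img, k)
        out.append(rot)
        out.append(np.fliplr(rot))
    return out


def tile_offsets(size, tile, stride):
    """Top-left offsets of overlapping tiles along one axis; the last tile ends at the image edge."""
    offs = list(range(0, size - tile + 1, stride))
    if offs[-1] != size - tile:
        offs.append(size - tile)
    return offs


def nms(boxes, scores, iou_thr=0.5):
    """Greedy, class-agnostic non-maximum suppression over (N, 4) boxes as x1, y1, x2, y2.

    For several classes, run it separately per class.
    """
    order = np.argsort(scores)[::-1]  # highest score first
    area = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Intersection of the current best box with every remaining box.
        w = np.clip(np.minimum(boxes[i, 2], boxes[rest, 2]) - np.maximum(boxes[i, 0], boxes[rest, 0]), 0, None)
        h = np.clip(np.minimum(boxes[i, 3], boxes[rest, 3]) - np.maximum(boxes[i, 1], boxes[rest, 1]), 0, None)
        inter = w * h
        iou = inter / (area[i] + area[rest] - inter)
        # Drop boxes that overlap the kept box too much.
        order = rest[iou <= iou_thr]
    return keep


def predict_points(image, detect, tile=1000, stride=500, iou_thr=0.5):
    """Run `detect` on overlapping tiles, merge duplicates with NMS, return box centers (x, y)."""
    h, w = image.shape[:2]
    all_boxes, all_scores = [], []
    for y0 in tile_offsets(h, tile, stride):
        for x0 in tile_offsets(w, tile, stride):
            boxes, scores = detect(image[y0:y0 + tile, x0:x0 + tile])
            if len(boxes):
                # Shift tile coordinates back into full-image coordinates.
                all_boxes.append(np.asarray(boxes, dtype=float) + [x0, y0, x0, y0])
                all_scores.append(np.asarray(scores, dtype=float))
    if not all_boxes:
        return np.zeros((0, 2))
    boxes = np.concatenate(all_boxes)
    scores = np.concatenate(all_scores)
    boxes = boxes[nms(boxes, scores, iou_thr)]
    # Each surviving box is reduced to its center point.
    return np.stack([(boxes[:, 0] + boxes[:, 2]) / 2, (boxes[:, 1] + boxes[:, 3]) / 2], axis=1)


def fake_detect(tile_img):
    """Toy detector: one box around all non-zero pixels in the tile, with score 1."""
    ys, xs = np.nonzero(tile_img)
    if len(xs) == 0:
        return [], []
    return [[xs.min(), ys.min(), xs.max() + 1, ys.max() + 1]], [1.0]
```

The overlap is what keeps cars cut by a tile border from being lost: with 1000-pixel tiles and a 500-pixel stride, any object up to 500 pixels across (25 m at 5 cm/pixel) lies entirely inside at least one tile, and the duplicates that neighboring tiles produce are what NMS then removes.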

At some point it turned out that, although the literature everywhere states that ResNet 152 works better than VGG 16 as a feature extractor, this conventional wisdom did not hold for this task. Someone tried VGG 16 on a whim, and it noticeably improved the result. Later, in another conversation, bobutis, who as part of a team won the fish classification and cervical cancer challenges using Faster RCNN, confirmed that in practice VGG works better as a feature extractor.

The data in this task was so clean, and its amount so well chosen, that the network worked beyond my expectations. I was especially pleased by cases where only the headlights were sticking out from under a tree or a bridge and the network still found the car.

Where did the network have problems? As expected, tightly packed objects were predicted poorly. There is plenty of literature on this too, but in general, detecting tightly packed objects is a separate task.

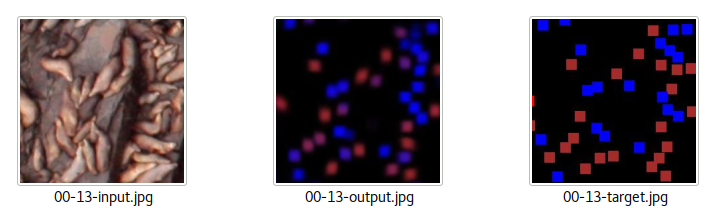

In the fur seal counting challenge, the detection approach did not work, precisely because of the tight packing, so people solved it via segmentation instead: each seal was represented by a Gaussian, and the resulting heatmap was segmented.
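For comparison, the heatmap-based alternative can be sketched like this: each point label is rendered as a 2D Gaussian, giving a target that a segmentation network can learn to reproduce. The function name and `sigma=3.0` are illustrative assumptions, not taken from any specific seal-counting solution.

```python
import numpy as np


def gaussian_heatmap(points, height, width, sigma=3.0):
    """Render one Gaussian peak of height 1 per (x, y) point label; the result is indexed heat[y, x].

    Peaks are combined with a pixel-wise maximum, so each labeled point keeps a value of exactly 1
    even when neighboring objects overlap; summing instead would let packed peaks exceed 1.
    """
    ys, xs = np.mgrid[0:height, 0:width]  # row and column index grids
    heat = np.zeros((height, width))
    for x, y in points:
        g = np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2.0 * sigma ** 2))
        heat = np.maximum(heat, g)
    return heat
```

A common variant sums Gaussians normalized to unit mass instead, so that the heatmap integrates approximately to the number of objects.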

Occasionally, though extremely rarely, the network tried to pass off junk as objects of interest.

At the same time, my model's accuracy was jaccard = 0.85, and that of ironabar, who took first place, was 0.87, not 0.98-0.99. Offhand, we got about 1200 cars wrong, which is a lot, especially since I had the strong impression that on this task and this data the network worked more precisely than the manual labeling (the first time I had encountered this in practice).

I think what happened is this:

1. Many classes are hard to tell apart visually: a gray car may look dark in the shade and light in the sun. Blue and bluish cars could also easily end up in the wrong class. This affected both training and prediction.

2. The organizers defined the classes in a rather strange way: not, say, white sedan vs. white hatchback, but long vs. short white car. Judging visually, even setting color aside, there are many borderline cases where the right class is not obvious. Even white vans cannot always be told apart from short white cars.

3. Convertibles, even though they look dark from above, belong to the class of their main body color, that is, whatever a passerby would say if asked the color of the car. This would not be a problem if there were many convertibles in the train set, but, frankly, London is not overflowing with convertibles.

4. The organizers got creative and decided to make the task easier for the participants: they split cases into simple and hard ones and introduced some internal rules for this. For example, if a car is at the edge and part of its trunk extends beyond the image border, they did not count it in train or in test, and something similar applies to cars half hidden by trees. As a result, this added noise to the labels: during training the network was penalized for "false positives" that were actually real cars, which reduced its predictive power, and some correctly predicted cars were counted as False Positives when jaccard was computed on the competition site.

Mid-May. The competition is over; I am third on the Public LB and second on the Private LB. The organizers send a letter asking me to provide:

Please send the following information to general@datasciencechallenge.org:

Full name

Nationality

Telephone contact details

For the winner announcements

Passport details (scan / photo of details page with photo)

Proof of address (eg. Scan / photo of a utility bill)

Bank account details (IBAN, Account Number and Sort Code)

The rules say that if you are a citizen of Russia, you will not get the money. But asking costs nothing, so I wrote a letter stating in plain English that I am a Russian citizen, that I live in San Francisco, USA, and that I have an American bank account, and asked the logical question: "Will you pay the prize money?" In response I received an email that boiled down to exactly what I expected: "No, there will be no prize, because despite your result, under the rules your passport is the wrong color."

... Clause 2.3b because we have a score below 37. Thank you for your opinion. follow the criteria in the UK.

The answer was expected, so it did not hurt. But! The very existence of all these restrictions on residence and citizenship had bothered me from the very beginning. It is clear that this is how things turned out in this competition, but since they position themselves as another Kaggle, I really wanted to give them a flick on the nose, to raise the chance that they would think twice before running competitions with unhealthy rules in the future. How to do that was unclear. I wrote something on their forum and added a couple of lines to a blog post on Kaggle about satellite imagery, even though nobody reads that blog, just to clear my conscience. I posted my first and only tweet and an angry Facebook post. I repent. Even as I was writing it, I realized that people unaware of the details would read it as: "The vile British changed the rules at the last moment and cheated me out of my money."

...

I also dropped links to the Facebook post and the tweet into ODS. With that, my duty to the world scientific community was done, since it was unclear what else could be done to flick them on the nose, and with these thoughts I went to sleep. To be honest, I underestimated the power of ODS: someone reposted, someone liked, one thing led to another, the next day the story showed up on some Telegram channel and on a few small news sites, and then it all spun completely out of control.

I no longer remember the order of events. I only remember sitting at work at some point and, instead of training the next model, reading the #_random_flood channel, which felt like reading reports from the front line.

People monitored various media outlets and posted whatever showed up in the news.

They tried to contact me:

1. Russia Today: I declined an on-camera interview but answered a couple of questions by email, and that was enough for them to write a piece.

2. Channel One: they bombarded all my Russian-speaking friends on Facebook and VK with requests to help them reach me.

3. Channel Five: they tried to reach me and failed, tried to reach my parents and failed, then went to my old school and interviewed the teachers. I sincerely hope the teachers didn't miss their chance and squeezed some extra funding out of it.

4. Ren TV: they wanted something in the spirit of their standard shows about aliens and other exotica, namely for me to be the central character of an episode of their program "Caution: Russians!".

5. Komsomolskaya Pravda: they ran the story not only online but also in print.

6. I made it onto the front page of lenta.ru. Not for long, but still.

7. Some completely unknown channels like "Tsargrad TV" also tried to get in touch, but they were all lost in the avalanche of people trying to reach me.

I did not watch any of it myself, but people told me that my refusal to appear on camera did not stop the TV crews. They still aired stories, with my photo in the background. And, as usual, they invited assorted murky characters to give their expert assessment of what was going on.

I had no great desire to talk to all these people. The purpose of my Facebook post was to flick the British on the nose so that their brains would move faster and they would run less discriminatory competitions in the future, not to become the central character of news stories along the lines of "Scandals. Intrigues. Investigations." I have never done on-camera work, and I also have a weakness for saying what I think, which is probably not something to do on camera. Besides, what I say and what it can turn into after editing are two very different things, so despite my natural adventurousness, I stayed out of it.

Against this whole flock, if I may call it that, Mail.Ru Group stood out very favorably. They wrote to me plainly: "We are ready to pay the money instead of the British. How can we reach you?" As I said, I did not expect to get any money from this competition, but I liked the approach itself. Clearly it is PR for them, and fairly cheap PR, but in my opinion it was still a classy move. It showed respect, which looked very good next to everyone else, for whom "giving us an interview is a great honor." We talked. Their press release was already written, and it went out within 30 seconds of my go-ahead. Neatly, without hysteria, without "they're beating our guys," all to the point, as befits a serious company.

My sources reported that within Mail.Ru Group itself the decision got a mixed reception, which is to be expected. Essentially, we have:

1. Some random guy who does not work at Mail.Ru Group.

2. Does not live in Russia.

3. Took part in a competition run by British intelligence.

4. According to the rules, should not get the money.

5. And instead of using this money to send employees, who toil from morning till night, to some conference, several months' salary of a typical Data Scientist goes to this random dude.

There is definitely logic to this, and I apologize to the people it upset. In my defense, I want to say that different departments are responsible for conference budgets and for PR, and that for healthy PR this is small money, so the conference budget should have remained intact.

The money was allocated, which is nice. But you can't take it: it is toxic. Although, again, let's be honest: if it were 120 thousand pounds rather than 12, I would probably take it. Or would I? God knows. But 1,200,000 I would definitely take.

Something had to be done with the money. Someone on the #_random_flood channel suggested donating it, and I liked the idea. The lack of funding for basic science annoys me a lot. On the one hand, it is logical, since by and large people there are paid for the mere fact of their existence, in the hope that at some fine moment there will be a breakthrough, something big. History has more than enough examples of such breakthroughs, but funding for basic science, in Russia and abroad alike, is frankly not exactly gushing. So the idea matured to donate this money to Russian science. Where to donate it? God knows. When I studied in Russia I did not apply for grants, and that was long ago; everything has changed ten times over since. Who supports science better? Who embezzles less? I don't know. I googled some foundations and picked RFBR at random. Attempts to contact them failed. Maybe they really are fine on money and don't accept donations. But the Russian Science Foundation agreed to take the money, although they asked who had organized the competition. Oddly enough, the answer "British intelligence" suited them perfectly.

When the news came out that I had donated the twelve-thousand-pound prize to science, people approved, though not everyone. For example, my sister, who is also a physicist, though an experimentalist whose funding depends on grants, wrote me an angry letter saying that I had done the wrong thing, that it would all be embezzled, and that she was angry.

Overall, this whole story was on the edge. I ended up in the news as a wronged Russian genius, but could just as easily have been cast as a traitor to the motherland. He lives abroad and developed algorithms on British intelligence data, which is more than enough. With a little effort, one could spin a lot out of this, and nobody would care anymore that it was an open international competition.

Was the money transferred? As far as I know, yes. It took more than a month, but I recently received confirmation that the money is in the foundation. Again, I would like to hope that at least some of it reaches the engines of science themselves.

I also got messages from people who disapproved of the fact that I live and work abroad. I ignored them, but so be it, I will answer here, and my answer is this: after demobilization, when I came to the military enlistment office, the military commissar handed me a war veteran certificate and a medal for military valor, shook my hand and said that the Motherland was proud of me. Even if that is not quite true, from that moment on I have considered all my debts for two years of military service paid. As far as I am concerned, the question of who owes what to whom is closed. A separate question is why it is often easier to advance science abroad than in Russia, but that problem deserves a detailed analysis, probably in another post, and I hope I get around to it.

On the funny side: quite a lot of girls wanted to add me as a friend on Facebook or VK, and many of them were very pretty. But all that ever arrived was a notification that someone wanted to add me, with no message. So boring. If a girl had written something along the lines of “Vladimir, this must be a hard time for you, so much stress, come over for a glass of wine, I’ll invite a friend,” that would have been more interesting, and at the very least I would have taken it as a sign that she is confident, knows how to get what she wants, and is generally not like everyone else, in the good sense of the word. But the most I got was: “You look ‘slightly’ atypical for a programmer.” Somehow there was no spark at all.

The post could end on this note, but there are a couple of events I want to add. A guy who lives in London and had experienced British nationalism in his own way put me in touch with a journalist from a small British news outlet; the journalist talked to me and published a note about these events on his website.

Even though the competition organizers had no intention of paying me the prize, they asked me to spin up an EC2 instance on Amazon, reproduce the solution, write documentation and give a presentation, and in return they promised to mention my name on their website. I politely declined. During the email exchange on this topic, however, it turned out that journalists, both Russian and British, had tried to contact DSTL and asked questions to which DSTL had no good answers. I do not know whether this anti-discrimination flick on the nose worked, but I would like to hope it did.

Around mid-June, they emailed me to say that, no matter what, nobody was taking my second place away from me, and asked for my photo.

I was very tempted to send a photo with a bear cub and the caption: “When I’m not busy with machine learning, I raise a bear. Discriminate against me one more time and he will eat you.”

I did send them the photo with the bear, though I softened the wording and took the emotion out of it. The final press release mentioned me, but for some reason did not show the bear.

Meanwhile, a competition with a $1,200,000 prize started on Kaggle in which, for some reason, Chinese participants were excluded; the community objected, the rules were changed, and things seem to have returned to normal.

About a month ago, another open Kaggle competition kicked off, and it is discriminatory as well. The prize is $1,500,000, but only US citizens and Green Card holders can claim it, so this time not only China and Russia are left out, but also the European Union, the United Kingdom, Canada, Australia, Israel and everyone else who does not live in the United States. Seryoga wrote an angry post about this on the forum, and judging by the number of upvotes, the community largely agrees with him. I chimed in too, and an American journalist immediately contacted me and even published an article on the subject.

When I contacted the administrator of this new competition and politely asked, “How did this happen? You have always stood for meritocracy,” he replied with something flowery, but in essence his answer came down to two words: “We sold out.”

I plan to take part in this competition. It ends in a few months, so let’s see how it goes. The task is rather nasty, but if things come together, there may well be a second part of the Marlezon Ballet. After all, when you speak out against discrimination from among the prize winners, you are heard much better than when nothing has worked out for you.

And what about knowledge? I now have a lot of Deep Learning knowledge across various areas; at least it was enough for a story like this:

In early May, a Tesla recruiter who was looking for candidates for an AI Researcher position contacted me through Kaggle. I agreed to interview. I breezed through the take-home test, both tech screens and the onsite interview, and had a good talk with my potential manager, Andrej Karpathy; all that remained was the background check and Elon Musk’s approval of my application. It was at the background check stage that I was cut. The recruiter told me that I had violated the Non Disclosure Agreement by talking about the interview process somewhere, and that there would be no offer. It was unexpected, and I will probably tell this story in more detail when I write the third part of the epic about office plankton.

Special thanks to Sergey Mushinsky and Vladislav Kassym for helping with this task; to Sergey Belousov for answering my stupid questions about Object Detection, without whose help I would hardly have understood the topic so well in a month; and to Julia Semenova for helping prepare this text.

Source: https://habr.com/ru/post/330118/
