How a 64k intro is made

An intro to intros

The demoscene is about creating cool things that run in real time (as in "running on your computer"). These are called demos. Some of them are really small, say 64k or less, and these are called intros. The name comes from the presentations that advertised cracked programs (crack intros). So an intro is just a small demo.

I have noticed that many people are interested in demoscene productions but have no idea how demos are actually made. This article is a brain dump and post-mortem of our fresh intro Guberniya. I hope it will be interesting to newcomers and seasoned veterans alike. The article touches on almost all of the techniques used in the demo and should give a good idea of how one is made. I will refer to people by their nicknames, because that is the custom on the scene.

Windows binary: guberniya_final.zip (61.8 kB) (a bit broken on AMD cards)

Guberniya in a nutshell

This is a 64k intro released at the Revision 2017 demoparty. Some numbers:


- C++ and OpenGL, dear imgui for the GUI

- 62976-byte Windows binary, packed with kkrunchy

- mostly raymarching (a variant of ray casting; translator's note)

- group of 6 people

- one artist :)

- done in four months

- ~8300 lines of C++, not counting library code and blank lines

- 4840 lines of GLSL shaders

- ~350 git commits

Development

Demos are usually released at a demoparty, where the audience watches them and votes for a winner. Releasing at a demoparty is a good motivator, because you get a deadline and a passionate audience. In our case it was Revision 2017, a big demoparty traditionally held over the Easter weekend. You can look at some photos to get an idea of the event.

The number of commits per week. The biggest spike is us hacking frantically right before the deadline. The last two bars are changes for the final version, made after the party.

We started working on the demo in early January and released it on Easter in April during the event. You can watch the entire competition if you wish :)

Our team consisted of six people: cce (that's me), varko, noby, branch, msqrt and goatman.

Design and influence

The song was ready at a fairly early stage, so I tried to design the visuals around it. It was clear that we needed something big and cinematic with memorable parts.

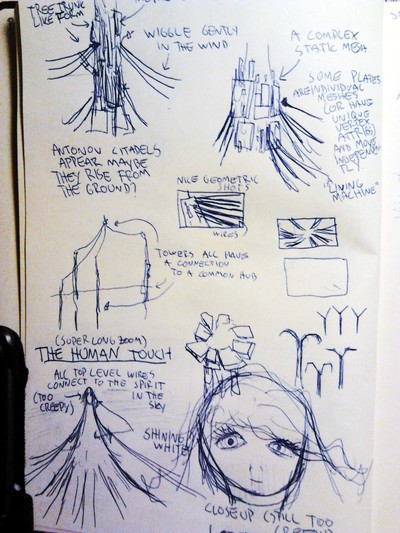

The first visual ideas revolved around wires and their use. I really like the work of Viktor Antonov, so the first sketches are largely copied from Half-Life 2:

The first sketches of the citadel towers and ambitious human characters.

Concept art by Viktor Antonov for Half-Life 2, from Half-Life 2: Raising the Bar.

The similarities are quite obvious. In the landscape scenes I also tried to capture the mood of Eldion Passageway by Anthony Scime.

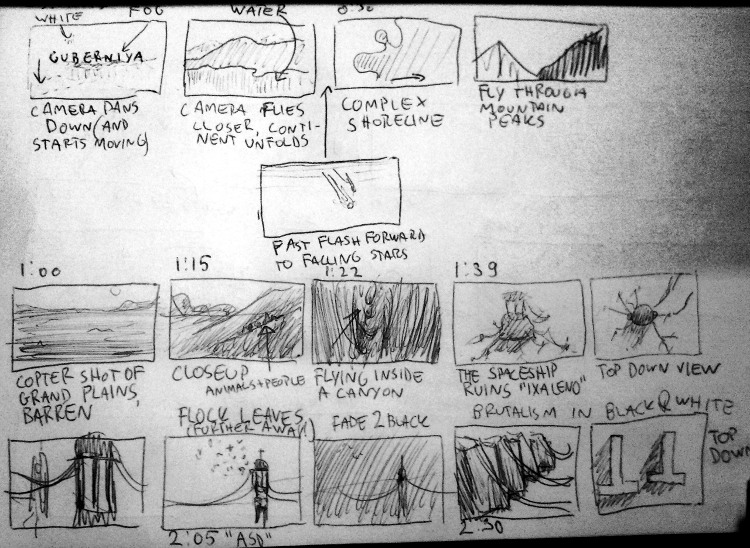

The landscape was inspired by this glorious video about Iceland, and probably also by Koyaanisqatsi. I had big plans for the story, as shown in the storyboard:

This storyboard differs from the final intro. For example, the brutalist architecture was cut.

If I did this again, I would settle for just a couple of mood-setting photos. That means less work and more room for imagination. But at least drawing helped me organize my thoughts.

Ship

The spaceship was designed by noby. It is a combination of numerous Mandelbrot fractals intersected with geometric primitives. The ship's design was left a little unfinished, but we felt it was better not to touch it in the final version.

The spaceship is a raymarched distance field, like everything else.

We had another ship shader that did not make it into the intro. Looking at the design now, it is very cool, and it is a pity there was no place for it.

Spaceship design by branch.

Implementation

We started with the code base of our old intro Pheromone (YouTube). It had basic framing functionality and a library of standard OpenGL functions, along with a file system utility that packs files from a data directory into the executable using bin2h.

Workflow

To build the project we used Visual Studio 2013, because the code did not compile with VS2015. Our standard library replacement did not get along with the updated compiler and produced amusing errors like these:

Visual Studio 2015 did not get along with our code base.

For some reason we still stuck with VS2015 as the editor and just compiled the project with the v120 platform toolset.

Most of my work on the demo looked like this: shaders open in one window, and the end result with console output in the others.

We made a simple global keystroke check that reloads all shaders when it detects the CTRL+S combination:

    // Listen to CTRL+S.
    if (GetAsyncKeyState(VK_CONTROL) && GetAsyncKeyState('S'))
    {
        // Wait for a while to let the file system finish the file write.
        if (system_get_millis() - last_load > 200) {
            Sleep(100);
            reloadShaders();
        }
        last_load = system_get_millis();
    }

It worked really well and made editing shaders in real time much more fun. No need to hook file system events or the like.

GNU Rocket

For animation and direction we used Ground Control, a fork of GNU Rocket. Rocket is a program for editing animation curves; it connects to the demo over a TCP socket. Keyframes are sent when the demo requests them. This is very convenient, because you can edit and recompile the demo without closing the editor and without risking losing the sync position. For the final version, the keyframes are exported to a binary format. However, it has some annoying limitations.
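To make the idea of keyframed tracks concrete, here is a minimal sketch of sampling an animation track between keyframes. It is not Rocket's actual API: the `Key` struct and the `sampleTrack` function are hypothetical names, and only linear interpolation is shown.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical keyframe: a row (time step) and the value stored at that row.
struct Key {
    int row;
    float value;
};

// Sample a track at a fractional row using linear interpolation.
// Keys must be sorted by row; outside the key range the value is clamped.
float sampleTrack(const std::vector<Key>& keys, float row) {
    assert(!keys.empty());
    if (row <= keys.front().row) return keys.front().value;
    if (row >= keys.back().row) return keys.back().value;
    for (std::size_t i = 1; i < keys.size(); ++i) {
        if (row < keys[i].row) {
            const Key& a = keys[i - 1];
            const Key& b = keys[i];
            float t = (row - a.row) / float(b.row - a.row);
            return a.value + t * (b.value - a.value);
        }
    }
    return keys.back().value;
}
```

In a demo, the current row would typically be derived from the music playback position, so every animated parameter stays in sync with the soundtrack.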

Tool

Moving the viewpoint with the mouse and keyboard is very handy for picking camera angles. Even a simple GUI helps a lot when the little things matter.

Unlike some, we did not have a demo tool, so we had to build one as we went. The magnificent dear imgui library makes it easy to add features as needed.

For example, to add a few sliders that control color parameters, all you need is to add these lines to the rendering loop (not to separate GUI code):

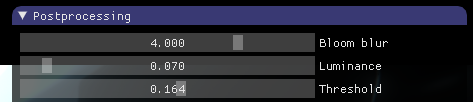

    imgui::Begin("Postprocessing");
    imgui::SliderFloat("Bloom blur", &postproc_bloom_blur_steps, 1, 5);
    imgui::SliderFloat("Luminance", &postproc_luminance, 0.0, 1.0, "%.3f", 1.0);
    imgui::SliderFloat("Threshold", &postproc_threshold, 0.0, 1.0, "%.3f", 3.0);
    imgui::End();

Final result:

These sliders were easy to add.

The camera position can be saved to a .cpp file by pressing F6, so after the next build it is part of the demo. This removes the need for a separate data format and the serialization code that goes with it, but such a solution can also get rather messy.

Making small binaries

The key to a small binary is to throw away the standard library and compress the compiled binary. As a base for our own library implementation we used the Tiny C Runtime Library by Mike_V.

Binary compression is done by kkrunchy, a tool made for exactly this purpose. It works on standalone executables, so you can write your demo in C++, Rust, Object Pascal or anything else. To be honest, size was not much of a problem for us. We did not store much binary data such as images, so there was room to maneuver. I didn't even have to strip comments from the shaders!

Floating point

Floating-point code caused some headaches by generating calls to functions of the nonexistent standard library. Most of them were eliminated by disabling SSE with the /arch:IA32 compiler switch and by removing the calls to ftol with the /QIfist flag, which generates code that does not save the FPU flags for truncation mode. This is not a problem, because you can set the floating-point truncation mode at the start of your program with this code from Peter Schoffhauser:

    // set rounding mode to truncate
    // from http://www.musicdsp.org/showone.php?id=246
    static short control_word;
    static short control_word2;

    inline void SetFloatingPointRoundingToTruncate()
    {
        __asm
        {
            fstcw   control_word                // store fpu control word
            mov     dx, word ptr [control_word]
            or      dx, 0x0C00                  // rounding: truncate
            mov     control_word2, dx
            fldcw   control_word2               // load modified control word
        }
    }

You can read more about these things at benshoof.org.

pow

A call to pow still generates a call to the intrinsic function _CIpow, which does not exist. I could not work out its signature on my own, but I found an implementation in Wine's ntdll.dll, which made it clear that it expects two double-precision numbers in registers. After that it was possible to write a wrapper that calls our own pow implementation:

    double __cdecl _CIpow(void) {
        // Load the values from registers to local variables.
        double b, p;
        __asm {
            fstp qword ptr p
            fstp qword ptr b
        }

        // Implementation: http://www.mindspring.com/~pfilandr/C/fs_math/fs_math.c
        return fs_pow(b, p);
    }

If you know a better way to deal with this, please let us know.

WinAPI

If you can't rely on SDL or something similar, you have to use plain WinAPI to get a window on the screen. If you run into trouble, these may help:

- Example of creating a WinAPI window

- OpenGL initialization example; glext.h and wglext.h are required

Note that in the latter example we load function pointers only for the OpenGL functions that are actually used in the production. It might be a good idea to automate this. Functions are looked up by string identifiers that are stored in the executable, so the fewer functions you load, the more space you save. The Whole Program Optimization option might remove all unused string literals, but we could not use it because of a problem with memcpy.

Rendering techniques

Rendering is done mostly by raymarching, and for convenience we used the hg_sdf library. Iñigo Quilez (from now on simply iq) has written a lot about this and many other techniques. If you have ever visited ShaderToy, you should be familiar with it.
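For readers who have not seen raymarching before, here is a minimal CPU-side sketch of sphere tracing against a signed distance field. The intro does this in GLSL with hg_sdf; the `Vec3` helpers, the single-sphere `sceneSdf` and the iteration limits below are assumptions made purely for illustration.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static Vec3 add(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
static Vec3 mul(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
static float length(Vec3 a) { return std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z); }

// Example scene (an assumption): a unit sphere at the origin.
static float sceneSdf(Vec3 p) { return length(p) - 1.0f; }

// March from `origin` along the unit vector `dir`.
// Returns the distance to the first hit, or -1 if nothing was hit.
float raymarch(Vec3 origin, Vec3 dir, float maxDist = 100.0f) {
    assert(maxDist > 0.0f);
    float t = 0.0f;
    for (int i = 0; i < 128 && t < maxDist; ++i) {
        float d = sceneSdf(add(origin, mul(dir, t)));
        if (d < 1e-4f) return t;  // close enough to the surface
        t += d;                   // the SDF says this step cannot overshoot
    }
    return -1.0f;
}
```

The step size is whatever the distance field reports, which is why empty space is crossed in a few large steps while surfaces are approached carefully.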

In addition, we had the raymarcher output a depth buffer value, so that we could combine the SDFs (signed distance fields) with rasterized geometry and also apply post-processing effects.

Shading

We used standard Unreal Engine 4 shading (here is a large pdf describing it) with a GGX lobe. It is not very noticeable, but it does make a difference in the highlights. From the very beginning we planned to have the same lighting for both raymarched and rasterized shapes. The idea was to use deferred rendering and shadow maps, but that failed completely.

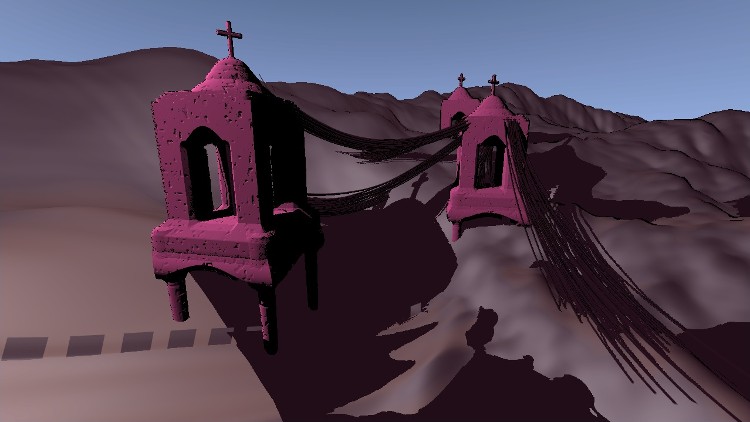

One of the first shadow mapping experiments. Note that both the towers and the wires cast shadows on the raymarched terrain and also intersect it correctly.

It is incredibly hard to render large areas correctly with shadow maps because of the wildly varying screen-to-shadow-map texel ratio and other precision problems. I also had no desire to start experimenting with cascaded shadow maps. On top of that, raymarching the same scene from several points of view is really slow. So we simply decided to scrap the whole unified lighting system. This turned out to be a huge problem later, when we tried to match the lighting of the rasterized wires with the raymarched scene geometry.

Terrain

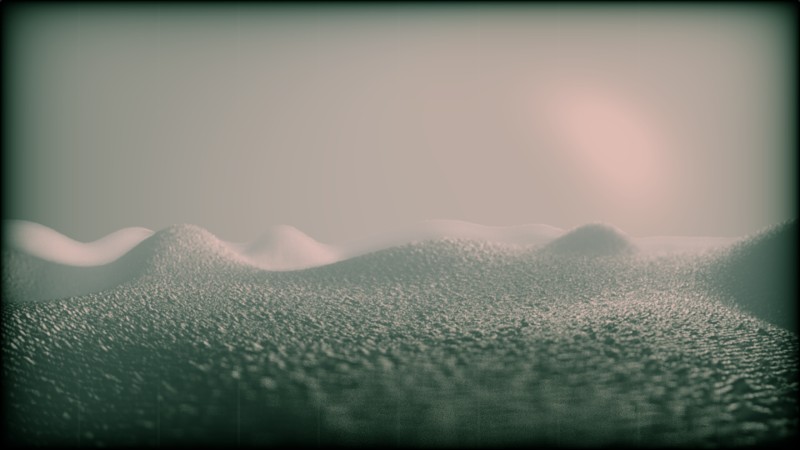

The raymarched terrain is value noise with analytical derivatives [1]. The generated derivatives were of course used for shading, but also to control the ray step size, which speeds up ray traversal over smooth regions, as in iq's examples. If you want to learn more, read the old article about this technique or play with the cool rainforest scene on ShaderToy. The terrain heightmap became more realistic once msqrt implemented exponentially distributed noise.
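As a rough illustration of what "noise with analytical derivatives" means, here is a sketch of 2D value noise that returns its gradient together with its value. It is not the intro's shader code: `latticeHash`, `NoiseSample` and `valueNoise` are hypothetical names, and the hash constants are arbitrary.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

struct NoiseSample { float value, dx, dy; };

// Hypothetical lattice hash mapped to [0, 1); any decent integer hash works.
static float latticeHash(int x, int y) {
    std::uint32_t h = std::uint32_t(x) * 374761393u + std::uint32_t(y) * 668265263u;
    h = (h ^ (h >> 13)) * 1274126177u;
    h ^= h >> 16;
    return float(h & 0xFFFFFFu) / float(0x1000000);
}

// 2D value noise together with its analytical partial derivatives.
NoiseSample valueNoise(float x, float y) {
    float fx0 = std::floor(x), fy0 = std::floor(y);
    int ix = int(fx0), iy = int(fy0);
    float fx = x - fx0, fy = y - fy0;
    assert(fx >= 0.0f && fx <= 1.0f && fy >= 0.0f && fy <= 1.0f);

    // Smoothstep fade u(t) = 3t^2 - 2t^3 and its derivative u'(t) = 6t(1 - t).
    float ux = fx * fx * (3.0f - 2.0f * fx), uy = fy * fy * (3.0f - 2.0f * fy);
    float dux = 6.0f * fx * (1.0f - fx), duy = 6.0f * fy * (1.0f - fy);

    float a = latticeHash(ix, iy), b = latticeHash(ix + 1, iy);
    float c = latticeHash(ix, iy + 1), d = latticeHash(ix + 1, iy + 1);

    // Bilinear blend expanded as a + k1*ux + k2*uy + k3*ux*uy.
    float k1 = b - a, k2 = c - a, k3 = a - b - c + d;
    NoiseSample s;
    s.value = a + k1 * ux + k2 * uy + k3 * ux * uy;
    s.dx = dux * (k1 + k3 * uy);  // d(value)/dx by the chain rule
    s.dy = duy * (k2 + k3 * ux);  // d(value)/dy by the chain rule
    return s;
}
```

Because the gradient falls out of the same corner values, it costs almost nothing extra compared to estimating it with additional noise samples.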

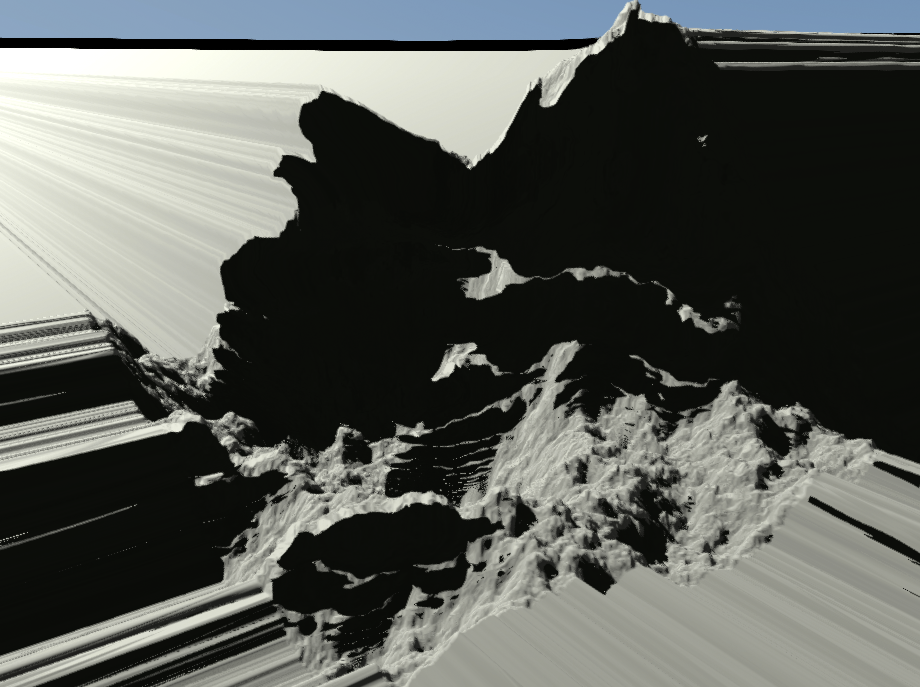

Early tests of my own value noise implementation.

A terrain implementation by branch that we decided not to use. I don't remember why.

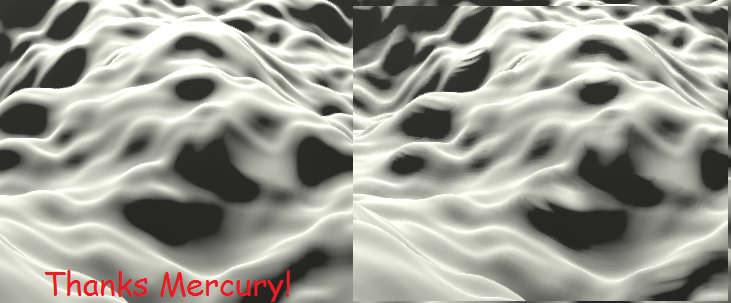

The terrain effect is very slow to compute, because we brute-force the shadows and reflections. The shadows use a small soft shadow hack in which the penumbra size is determined by the shortest distance encountered while marching the shadow ray. They look pretty good in action. We also tried bisection tracing to speed up the effect, but it produced too many artifacts. On the other hand, the raymarching tricks from mercury (another demo group) helped us improve the quality a bit without losing speed.
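The soft shadow hack can be sketched roughly like this. This follows the commonly used formulation where the closest near miss along the shadow ray, scaled by the distance travelled, sets the penumbra; the single-sphere `sceneSdf`, the `k` hardness parameter and the constants are assumptions, not the intro's shader.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Example occluder (an assumption): a unit sphere centered at (0, 2, 0).
static float sceneSdf(Vec3 p) {
    return std::sqrt(p.x * p.x + (p.y - 2.0f) * (p.y - 2.0f) + p.z * p.z) - 1.0f;
}

// Returns 0 for full shadow and 1 for fully lit. `k` controls penumbra hardness.
float softShadow(Vec3 origin, Vec3 dir, float k, float maxDist = 20.0f) {
    assert(k > 0.0f);
    float light = 1.0f;
    float t = 0.02f;  // small offset to avoid self-intersection at the start
    for (int i = 0; i < 64 && t < maxDist; ++i) {
        Vec3 p = {origin.x + dir.x * t, origin.y + dir.y * t, origin.z + dir.z * t};
        float d = sceneSdf(p);
        if (d < 1e-4f) return 0.0f;          // the shadow ray hit an occluder
        light = std::min(light, k * d / t);  // a near miss darkens the result
        t += d;
    }
    return light;
}
```

A ray that passes close to an occluder without hitting it ends up with a value between 0 and 1, which is what produces the penumbra.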

Terrain rendered with enhanced fixed-point iteration (left) compared to conventional raymarching (right). Note the unpleasant ripple artifacts in the picture on the right.

The sky is built with almost the same techniques as described in Behind Elevated by iq, slide 43: just some simple functions of the ray direction vector. The sun writes quite large values into the frame buffer (above 100), which also makes the colors look more natural.

Alley scene

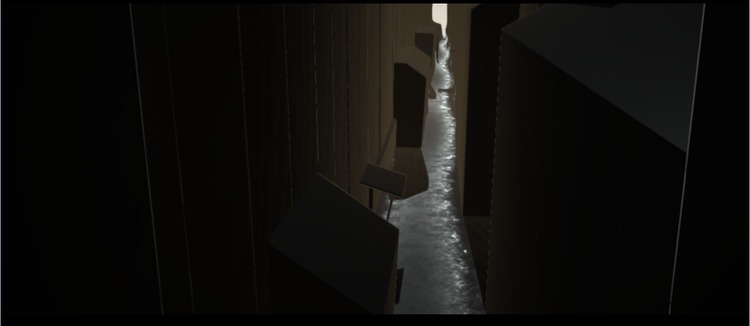

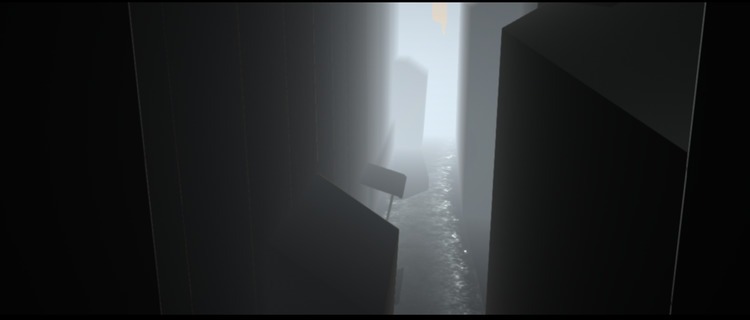

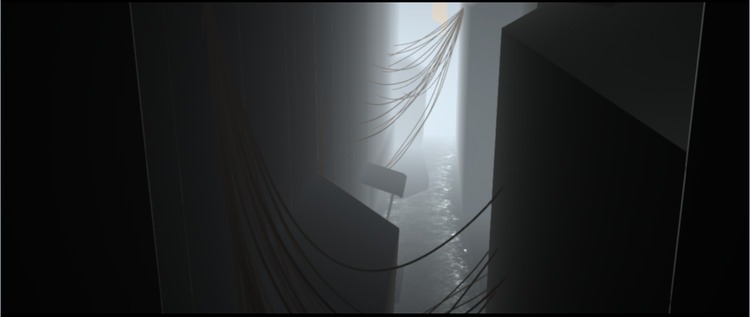

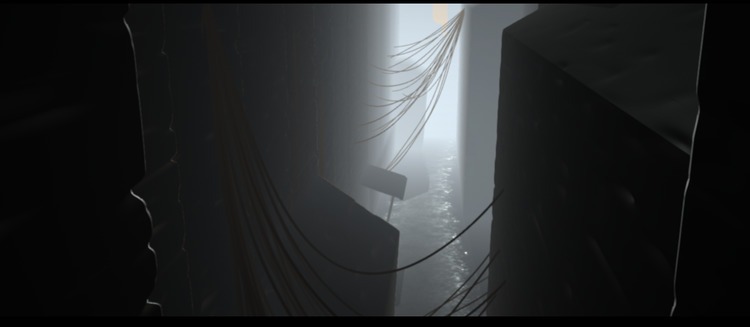

This scene was inspired by the photographs of Fan Ho. Our post-processing effects really made it come together, even though the underlying geometry is fairly simple.

An ugly distance field with some repeated pieces.

Some exponential distance fog added.

The wires make the scene more interesting and lifelike.

In the final version, a bit of noise is added to the distance field to suggest brick walls.

Post-processing adds color grading, bloom, chromatic aberration and lens flares.
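The exponential distance fog mentioned in the captions above is a one-liner in practice. A minimal sketch, assuming a constant fog color and a hypothetical `density` parameter (the intro's actual fog may differ):

```cpp
#include <cassert>
#include <cmath>

struct Color { float r, g, b; };

// Blend `surface` toward `fog` by the factor 1 - exp(-density * distance).
Color applyFog(Color surface, Color fog, float distance, float density) {
    assert(distance >= 0.0f && density >= 0.0f);
    float f = 1.0f - std::exp(-density * distance);  // 0 at the camera, tends to 1 far away
    return {surface.r + (fog.r - surface.r) * f,
            surface.g + (fog.g - surface.g) * f,
            surface.b + (fog.b - surface.b) * f};
}
```

In a raymarcher the `distance` is simply the hit distance returned by the march, so the fog comes almost for free.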

Modeling with distance fields

The B-52 bombers are a good example of SDF modeling. They were much simpler during development, but we polished them for the final release. From a distance they look pretty convincing:

The bombers look good from a distance.

However, they are just a bunch of capsules. Admittedly, it would have been easier to simply model them in some 3D package, but we did not have any suitable tools at hand, so we chose the faster way. Just for reference, here is what the distance field shader looks like: bomber_sdf.glsl.
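To show what "a bunch of capsules" means in distance field terms, here is a small sketch of a capsule SDF and a union of two capsules. The shapes and dimensions in `sdToyPlane` are made up for illustration and have nothing to do with the actual bomber_sdf.glsl.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
static float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Signed distance from p to a capsule: the segment a-b inflated by radius r.
float sdCapsule(Vec3 p, Vec3 a, Vec3 b, float r) {
    Vec3 pa = sub(p, a), ba = sub(b, a);
    assert(dot(ba, ba) > 0.0f);  // the segment must not be degenerate
    // Parameter of the closest point on the segment, clamped to [0, 1].
    float h = std::min(1.0f, std::max(0.0f, dot(pa, ba) / dot(ba, ba)));
    Vec3 q = {pa.x - ba.x * h, pa.y - ba.y * h, pa.z - ba.z * h};
    return std::sqrt(dot(q, q)) - r;
}

// Hypothetical toy "plane": a fuselage and a wing, combined with min() (union).
float sdToyPlane(Vec3 p) {
    float fuselage = sdCapsule(p, {-2.0f, 0.0f, 0.0f}, {2.0f, 0.0f, 0.0f}, 0.3f);
    float wing = sdCapsule(p, {0.0f, 0.0f, -3.0f}, {0.0f, 0.0f, 3.0f}, 0.1f);
    return std::min(fuselage, wing);
}
```

Taking the minimum of two distance fields gives their union, so a complex silhouette can be built up from a handful of such primitives.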

Up close, however, they are actually very simple.

Characters

The first four frames of the goat animation.

The animated characters are simply packed 1-bit bitmaps. During playback the frames are smoothly blended from one to the next. The material was provided by the mysterious goatman.
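A minimal sketch of the idea: unpack two 1-bit frames and crossfade between them. The bit order (most significant bit first) and the function names are assumptions; the intro's actual data layout is not described here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Read pixel `index` from a packed 1-bit frame.
// Assumes the most significant bit of each byte comes first.
static float pixelAt(const std::vector<std::uint8_t>& frame, std::size_t index) {
    assert(index / 8 < frame.size());
    return float((frame[index / 8] >> (7 - index % 8)) & 1u);
}

// Crossfade two packed frames: t = 0 gives frame a, t = 1 gives frame b.
std::vector<float> blendFrames(const std::vector<std::uint8_t>& a,
                               const std::vector<std::uint8_t>& b,
                               std::size_t pixelCount, float t) {
    std::vector<float> out(pixelCount);
    for (std::size_t i = 0; i < pixelCount; ++i) {
        float pa = pixelAt(a, i), pb = pixelAt(b, i);
        out[i] = pa + (pb - pa) * t;  // linear blend of the two frames
    }
    return out;
}
```

At one bit per pixel, a 64x64 frame takes only 512 bytes before compression, which is why this fits comfortably in a 64k budget.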

A goatherd with his friends.

Post-processing

The post-processing effects were written by varko. The pipeline is as follows:

- Apply shading from the G-buffer.

- Calculate the depth of field.

- Extract the bright parts for bloom.

- Perform N separable Gaussian blur passes.

- Calculate fake lens flares and light glare.

- Put it all together.

- Smooth the edges with FXAA (thanks, mudlord).

- Color correction.

- Gamma correction and a light film grain.

The lens flares largely follow the technique described by John Chapman. They were sometimes hard to work with, but the end result delivers.

We tried to use the depth of field effect tastefully.

The depth of field effect (based on the DICE technique) is done in three passes. The first one computes the size of the circle of confusion for each pixel, and the other two each apply two rotated blurs. We also refine the result over several iterations (in particular, by applying several Gaussian blurs) when needed. This implementation worked well for us and was fun to play with.

The depth of field effect in action. The red picture shows the computed circle of confusion for the DOF blur.

Color correction

Rocket has an animated parameter, pp_index, which is used to switch between color correction profiles. Each profile is simply a different branch of a big if-else chain in the final post-processing shader:

    vec3 cl = getFinalColor();

    if (u_GradeId == 1) {
        cl.gb *= UV.y * 0.7;
        cl = pow(cl, vec3(1.1));
    } else if (u_GradeId == 2) {
        cl.gb *= UV.y * 0.6;
        cl.g = 0.0+0.6*smoothstep(-0.05,0.9,cl.g*2.0);
        cl = 0.005+pow(cl, vec3(1.2))*1.5;
    } /* etc.. */

It is very simple, but it works quite well.

Physical modeling

There are two simulated systems in the demo: the wires and a flock of birds. Both were also written by varko.

Wires

Wires add realism to the scene.

The wires are treated as a series of springs. They are simulated on the GPU with compute shaders. We run the simulation in many small steps because of the instability of the Verlet numerical integration method used here. The compute shader also outputs the wire geometry (a series of triangular prisms) to a vertex buffer. Unfortunately, for some reason the simulation does not work on AMD cards.
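A CPU-side sketch of the same idea: a chain of points joined by springs, integrated with Verlet in several substeps per frame. This is an illustration under assumed constants (stiffness, damping, gravity, unit mass), not varko's compute shader, and `stepWire` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point { float x, y, prevX, prevY; };

// Advance a wire (a chain of points joined by springs) by `dt`, split into
// `substeps` smaller steps. Both endpoints stay pinned in place.
void stepWire(std::vector<Point>& wire, float restLength, float dt, int substeps) {
    assert(wire.size() >= 2 && substeps > 0);
    const float stiffness = 200.0f, gravity = -9.81f, damping = 0.99f;  // assumed values
    const float h = dt / float(substeps);
    const std::size_t n = wire.size();
    std::vector<float> ax(n), ay(n);
    for (int s = 0; s < substeps; ++s) {
        // Accumulate accelerations (unit mass): gravity plus Hooke springs.
        for (std::size_t i = 0; i < n; ++i) { ax[i] = 0.0f; ay[i] = gravity; }
        for (std::size_t i = 0; i + 1 < n; ++i) {
            float dx = wire[i + 1].x - wire[i].x, dy = wire[i + 1].y - wire[i].y;
            float len = std::sqrt(dx * dx + dy * dy);
            if (len < 1e-6f) continue;
            float f = stiffness * (len - restLength) / len;
            ax[i] += f * dx;     ay[i] += f * dy;
            ax[i + 1] -= f * dx; ay[i + 1] -= f * dy;
        }
        // Verlet update: x_new = x + (x - x_prev) * damping + a * h^2.
        for (std::size_t i = 1; i + 1 < n; ++i) {
            Point& p = wire[i];
            float nx = p.x + (p.x - p.prevX) * damping + ax[i] * h * h;
            float ny = p.y + (p.y - p.prevY) * damping + ay[i] * h * h;
            p.prevX = p.x; p.prevY = p.y;
            p.x = nx;      p.y = ny;
        }
    }
}
```

With stiff springs, taking the whole frame time in one step tends to overshoot and blow up, which is why the work is split into many small substeps.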

A flock of birds

Birds give a sense of scale.

The flock model consists of 512 birds, of which the first 128 are leaders. The leaders move in a swirling pattern and the rest follow them. I think that in real life birds follow the movements of their nearest neighbors, but this simplification looks good enough. The flock was rendered as GL_POINTs whose size was modulated to give the impression of flapping wings. I think this rendering technique was also used in Half-Life 2.

Music

Usually, music for 64k intros is made with a VST plugin, so that musicians can compose with their usual tools. A classic example of this approach is farbrausch's V2 Synthesizer.

This was a problem. I did not want to use any ready-made synthesizer, but I knew from earlier failed experiments that making my own virtual instrument would take a lot of work. I remembered how much I liked the mood of element/gesture 61%, a demo by branch with an ambient soundtrack prepared with Paulstretch. This gave me the idea of implementing something similar in 4k or 64k.

Paulstretch

Paulstretch is a great tool for really extreme time stretching of music. If you have not heard of it, you should definitely listen to what it can make out of the Windows 98 startup sound. Its internal algorithms are described in this interview with the author, and it is also open source.

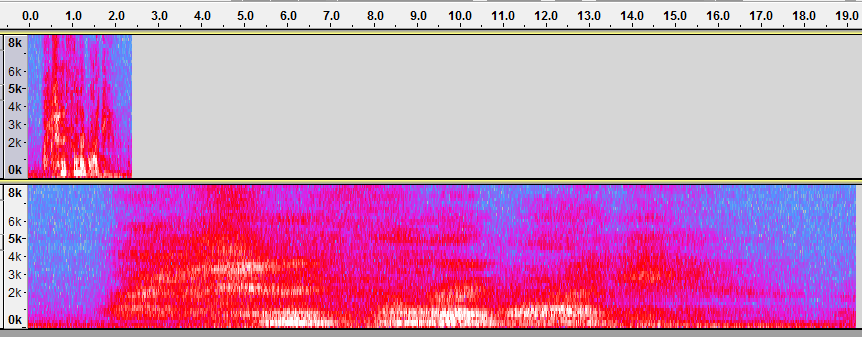

The original sound (top) and the stretched sound (bottom) created using the Paulstretch effect for Audacity. Notice also how the frequencies are spread across the spectrum (vertical axis).

In essence, besides stretching the original signal it also scrambles its phases in the frequency domain, so instead of metallic artifacts you get an unearthly echo. This takes a lot of Fourier transforms, and the original application uses the Kiss FFT library for them. I did not want to depend on an external library, so in the end I implemented a naive discrete Fourier transform on the GPU. It took a long time to get right, but it was worth it. The GLSL shader implementation is very compact and runs fairly fast despite its brute-force nature.
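The two building blocks described above can be sketched on the CPU as follows: a brute-force O(n^2) DFT and a step that keeps each bin's magnitude while replacing its phase with a random one. This is only an illustration of the principle, not the intro's GLSL code; `dft` and `randomizePhases` are hypothetical names, and windowing and overlap-add are left out.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <random>
#include <vector>

using Spectrum = std::vector<std::complex<float>>;

// Brute-force O(n^2) discrete Fourier transform.
// sign = -1 is the forward transform, +1 the (unnormalized) inverse.
Spectrum dft(const Spectrum& in, int sign) {
    assert(sign == 1 || sign == -1);
    const std::size_t n = in.size();
    const float twoPi = 6.28318530718f;
    Spectrum out(n);
    for (std::size_t k = 0; k < n; ++k) {
        std::complex<float> sum(0.0f, 0.0f);
        for (std::size_t j = 0; j < n; ++j) {
            // Reducing k*j modulo n keeps the angle small and accurate.
            float angle = float(sign) * twoPi * float((k * j) % n) / float(n);
            sum += in[j] * std::polar(1.0f, angle);
        }
        out[k] = sum;
    }
    return out;
}

// Keep every bin's magnitude but replace its phase with a random one.
void randomizePhases(Spectrum& bins, std::mt19937& rng) {
    std::uniform_real_distribution<float> phase(0.0f, 6.28318530718f);
    for (auto& b : bins) b = std::polar(std::abs(b), phase(rng));
}
```

For a real-valued audio signal one would normally work on half of the spectrum (or take the real part after the inverse transform), and divide by n when transforming back.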

Tracker module

Now it was possible to churn out reels of ambient drone, given some sensible audio as input. So I decided to use a tried and tested technology: tracker music. It is much like MIDI [2], but packed into a file together with the samples. For example, kasparov by elitegroup (YouTube) uses a module with added reverb. If it worked 17 years ago, why not now?

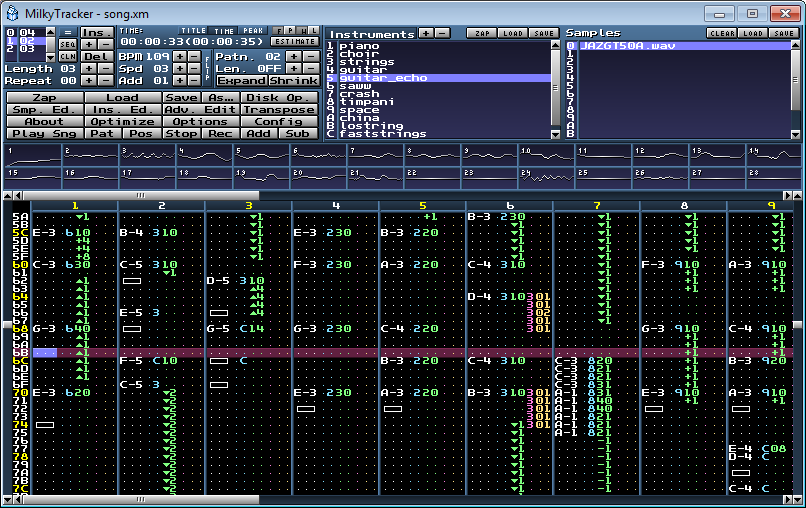

I used gm.dls, the MIDI sound bank built into Windows (again, an old trick), and made a song with MilkyTracker in the XM module format. This format was also used by many MS-DOS demos in the 90s.

I used MilkyTracker to compose the original song. The final module file is stripped of its instrument samples and uses offset and length parameters into gm.dls instead.

The catch with gm.dls is that the Roland instruments from 1996 sound very dated and low-quality. But it turned out that this is no problem at all if you drown them in a ton of reverb! Here is an example in which a short test song plays first, followed by a stretched version:

Surprisingly atmospheric, don't you agree? So yes, I made a song that imitates Hollywood film music, and it turned out great. That is about it for the music side.

Thanks

Thanks to varko for help with some of the technical details of this article.

Additional materials

- Ferris from the group Logicoma shows off his toolchain for making 64k demos

- Be sure to watch Engage first, their entry in the same competition we took part in.

- Source code of some Ctrl-Alt-Test demos

- There is both 4k and 64k code there.

- They also have an intro: H-Immersion

1. You can calculate analytic derivatives for gradient noise: https://mobile.twitter.com/iquilezles/status/863692824100782080

2. My first thought was to simply use MIDI instead of a tracker module, but there does not seem to be a way to simply render a song into an audio buffer on Windows. Apparently this is somehow possible with the DirectMusic API, but I could not figure out how.

Source: https://habr.com/ru/post/330090/
