Conquering the Android Camera2 API with RxJava2 (Part 1)

As you know, RxJava is ideal for two tasks: processing event streams and working with asynchronous methods. In one of my previous posts, I showed how to build a chain of operators that processes a stream of sensor events. Today I want to demonstrate how RxJava can be used to work with an inherently asynchronous API. The API I chose for this is Camera2.

Below is an example of using the Camera2 API, which is still rather poorly documented and little explored by the community. To tame it, we will use RxJava2. The second version of this popular library was released relatively recently, and there are not many examples for it either.

Who is this post for? I hope the reader is an experienced yet still curious Android developer. Basic knowledge of reactive programming (a good introduction is here) and an understanding of marble diagrams are highly desirable. The post will be useful both to those who want to get into the reactive approach and to those who want to use the Camera2 API in their projects. Be warned: there will be a lot of code!

The project sources can be found on GitHub.

Project preparation

Add third-party dependencies to our project.

Retrolambda

When working with RxJava, lambda support is essential; otherwise the code looks just awful. So if you haven't switched to Android Studio 3.0 yet, add Retrolambda to the project.

```groovy
buildscript {
    dependencies {
        classpath 'me.tatarka:gradle-retrolambda:3.6.0'
    }
}

apply plugin: 'me.tatarka.retrolambda'
```

Now you can raise the language level to Java 8, which enables lambda support:

```groovy
android {
    compileOptions {
        sourceCompatibility JavaVersion.VERSION_1_8
        targetCompatibility JavaVersion.VERSION_1_8
    }
}
```

Full instructions.

RxJava2

```groovy
compile 'io.reactivex.rxjava2:rxjava:2.1.0'
```

The current version, full instructions and documentation are available here.

RxAndroid

A useful library when working with RxJava on Android, used mainly for AndroidSchedulers. Repository

```groovy
compile 'io.reactivex.rxjava2:rxandroid:2.0.1'
```

Camera2 API

At one point I took part in a code review of a module written with the Camera1 API, and I was unpleasantly surprised by concurrency problems that were unavoidable because of the API's design. Apparently Google recognized the problem too and deprecated the first version of the API, recommending the Camera2 API instead. The second version is available on Android Lollipop and newer.

First impressions

Google did a good job of fixing its threading mistakes. All operations are performed asynchronously and report their results through callbacks. Moreover, by passing the appropriate Handler, you can choose the thread on which the callback methods are called.

Reference implementation

Google provides the Camera2Basic sample application.

It is a rather naive implementation, but it helps you get started with the API. Let's see whether a reactive approach gives us a more elegant solution.

Steps for taking a snapshot

In short, the sequence of actions for taking a picture is as follows:

- select a device

- open the device

- open a session

- start the preview

- take a picture when the button is clicked

- close the session

- close the device

Device selection

First we need a CameraManager.

```java
mCameraManager = (CameraManager) mContext.getSystemService(Context.CAMERA_SERVICE);
```

This class lets you get information about the cameras in the system and connect to them. There may be several cameras; smartphones usually have two: front and rear.

Get the list of cameras.

```java
String[] cameraIdList = mCameraManager.getCameraIdList();
```

Pretty austere: just a list of string IDs.

Now let's get the characteristics of each camera.

```java
for (String cameraId : cameraIdList) {
    CameraCharacteristics characteristics = mCameraManager.getCameraCharacteristics(cameraId);
    ...
}
```

CameraCharacteristics contains a huge number of keys that describe the camera.

When choosing a camera, the thing you most often look at is which way it faces. To find out, read the value for the key CameraCharacteristics.LENS_FACING.

```java
Integer facing = characteristics.get(CameraCharacteristics.LENS_FACING);
```

The camera can be front-facing (CameraCharacteristics.LENS_FACING_FRONT), rear-facing (CameraCharacteristics.LENS_FACING_BACK) or external (CameraCharacteristics.LENS_FACING_EXTERNAL).

A camera selection function that prefers a given facing might look something like this:

```java
@Nullable
private static String getCameraWithFacing(@NonNull CameraManager manager, int lensFacing) throws CameraAccessException {
    String possibleCandidate = null;
    String[] cameraIdList = manager.getCameraIdList();
    if (cameraIdList.length == 0) {
        return null;
    }
    for (String cameraId : cameraIdList) {
        CameraCharacteristics characteristics = manager.getCameraCharacteristics(cameraId);

        StreamConfigurationMap map = characteristics.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);
        if (map == null) {
            continue;
        }

        Integer facing = characteristics.get(CameraCharacteristics.LENS_FACING);
        if (facing != null && facing == lensFacing) {
            return cameraId;
        }

        // just in case the device doesn't have any camera with the given facing
        possibleCandidate = cameraId;
    }
    if (possibleCandidate != null) {
        return possibleCandidate;
    }
    return cameraIdList[0];
}
```

Great, now we have the id of a camera with the desired facing (or of some other camera, if none with that facing was found). So far it's pretty simple, with no asynchronous actions.
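Because the selection logic is synchronous, it can be checked off-device. Below is a JDK-only sketch of the same rule, not the project's code: CameraInfo is a hypothetical stand-in for the two CameraCharacteristics values the function reads, and a map (a LinkedHashMap, so iteration keeps the getCameraIdList() order) replaces the CameraManager calls. The two constants copy the values of CameraCharacteristics.LENS_FACING_FRONT and LENS_FACING_BACK.

```java
import java.util.Map;

// Hypothetical stand-in for the two CameraCharacteristics values the selector reads.
final class CameraInfo {
    final Integer lensFacing;          // may be null, like characteristics.get(LENS_FACING)
    final boolean hasStreamConfigMap;  // false models SCALER_STREAM_CONFIGURATION_MAP == null

    CameraInfo(Integer lensFacing, boolean hasStreamConfigMap) {
        this.lensFacing = lensFacing;
        this.hasStreamConfigMap = hasStreamConfigMap;
    }
}

final class CameraSelector {
    // Same numeric values as CameraCharacteristics.LENS_FACING_FRONT / LENS_FACING_BACK.
    static final int LENS_FACING_FRONT = 0;
    static final int LENS_FACING_BACK = 1;

    // Mirrors getCameraWithFacing(): returns null only when there are no cameras at all.
    static String select(Map<String, CameraInfo> cameras, int lensFacing) {
        if (cameras.isEmpty()) {
            return null;
        }
        String possibleCandidate = null;
        for (Map.Entry<String, CameraInfo> entry : cameras.entrySet()) {
            CameraInfo info = entry.getValue();
            if (!info.hasStreamConfigMap) {
                continue; // this camera cannot produce output streams, skip it
            }
            if (info.lensFacing != null && info.lensFacing == lensFacing) {
                return entry.getKey(); // exact match on facing
            }
            possibleCandidate = entry.getKey(); // remember a usable fallback
        }
        if (possibleCandidate != null) {
            return possibleCandidate;
        }
        return cameras.keySet().iterator().next(); // last resort: the first id in the list
    }
}
```

For example, on a device that only has a back camera, asking for the front camera still returns the back camera's id.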

Create Observable

Now we get to the asynchronous API methods. We will convert each of them into an Observable using the create method.

openCamera

Before use, the device must be opened with the CameraManager.openCamera method.

```java
void openCamera(String cameraId, CameraDevice.StateCallback callback, Handler handler)
```

To this method we pass the id of the selected camera, a callback for receiving the asynchronous result, and a Handler, if we want the callback methods to be called on that Handler's thread.

Here we meet our first asynchronous method. That's understandable: initializing the device is a long and expensive process.

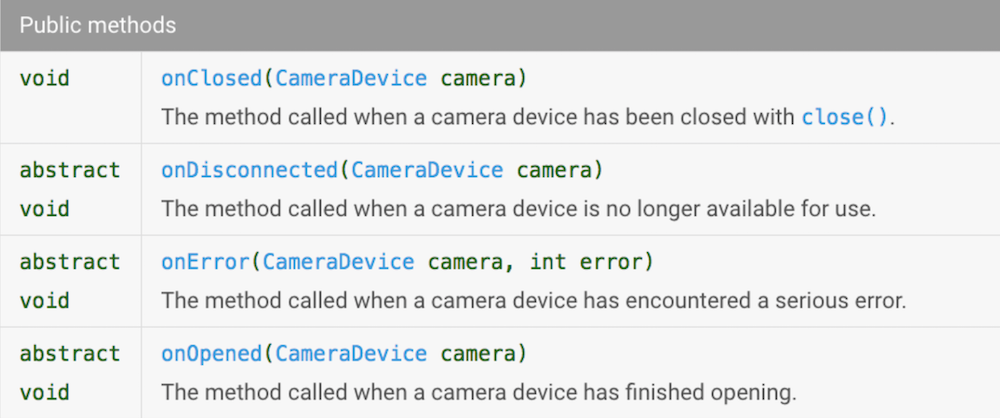

Let's take a look at CameraDevice.StateCallback .

In the reactive world, these methods correspond to events. Let's make an Observable that emits events when the Camera API calls onOpened, onClosed and onDisconnected. To tell these events apart, we create an enum:

```java
public enum DeviceStateEvents {
    ON_OPENED,
    ON_CLOSED,
    ON_DISCONNECTED
}
```

And so that the reactive stream can do something with the device (from here on, by "stream" I mean a chain of reactive operators; not to be confused with Thread), we will include a reference to the CameraDevice in each emitted event. The easiest way is to emit Pair<DeviceStateEvents, CameraDevice>. To create the Observable, we use the create method (remember, we are using RxJava2, so we no longer need to be ashamed of doing this).

Here is the signature of the create method:

```java
public static <T> Observable<T> create(ObservableOnSubscribe<T> source)
```

That is, we need to pass it an object that implements the ObservableOnSubscribe<T> interface. This interface contains only one method:

```java
void subscribe(@NonNull ObservableEmitter<T> e) throws Exception;
```

which is called every time an Observer subscribes to our Observable.

Let's see what ObservableEmitter offers.

```java
public interface ObservableEmitter<T> extends Emitter<T> {
    void setDisposable(@Nullable Disposable d);
    void setCancellable(@Nullable Cancellable c);
    boolean isDisposed();
    ObservableEmitter<T> serialize();
}
```

That's already promising. With setDisposable/setCancellable you can set an action that runs when the subscriber unsubscribes from our Observable. This is extremely useful if creating the Observable opened a resource that has to be closed. We could create a Disposable that closes the device on unsubscribe, but we want to react to the onClosed event instead, so we won't do that.

The isDisposed method lets you check whether anyone is still subscribed to our Observable.

Note that ObservableEmitter extends the Emitter interface.

```java
public interface Emitter<T> {
    void onNext(@NonNull T value);
    void onError(@NonNull Throwable error);
    void onComplete();
}
```

These are the methods we need! We will call onNext every time the Camera API calls onOpened/onClosed/onDisconnected on our CameraDevice.StateCallback, and onError when it calls onError.

Let's apply what we've learned. The method that creates the Observable might look like this (for readability I removed the isDisposed() checks; the full code with all the boring checks is on GitHub):

```java
public static Observable<Pair<DeviceStateEvents, CameraDevice>> openCamera(
        @NonNull String cameraId,
        @NonNull CameraManager cameraManager
) {
    return Observable.create(observableEmitter -> {
        // Requires the CAMERA runtime permission to have been granted
        cameraManager.openCamera(cameraId, new CameraDevice.StateCallback() {
            @Override
            public void onOpened(@NonNull CameraDevice cameraDevice) {
                observableEmitter.onNext(new Pair<>(DeviceStateEvents.ON_OPENED, cameraDevice));
            }

            @Override
            public void onClosed(@NonNull CameraDevice cameraDevice) {
                observableEmitter.onNext(new Pair<>(DeviceStateEvents.ON_CLOSED, cameraDevice));
                observableEmitter.onComplete();
            }

            @Override
            public void onDisconnected(@NonNull CameraDevice cameraDevice) {
                observableEmitter.onNext(new Pair<>(DeviceStateEvents.ON_DISCONNECTED, cameraDevice));
                observableEmitter.onComplete();
            }

            @Override
            public void onError(@NonNull CameraDevice camera, int error) {
                observableEmitter.onError(new OpenCameraException(OpenCameraException.Reason.getReason(error)));
            }
        }, null);
    });
}
```

Super! We just got a little more reactive!
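To see the contract this wrapper sets up without a device, here is a JDK-only model. It is an illustration, not RxJava or Android code: FakeEmitter and DeviceCallbacks are hypothetical stand-ins for ObservableEmitter and CameraDevice.StateCallback, and the Pair payload is dropped. It shows the mapping used above: onOpened emits ON_OPENED, onClosed and onDisconnected emit their event and then complete, onError terminates the stream, and nothing more is delivered after a terminal event (RxJava2 drops a late onNext in the same way and routes a late onError to RxJavaPlugins; the model simply drops both).

```java
import java.util.ArrayList;
import java.util.List;

enum DeviceStateEvents { ON_OPENED, ON_CLOSED, ON_DISCONNECTED }

// Hypothetical, minimal stand-in for ObservableEmitter: records what it receives
// and ignores everything after a terminal event.
final class FakeEmitter<T> {
    final List<T> values = new ArrayList<>();
    Throwable error;
    boolean completed;

    boolean isDisposed() { return completed || error != null; }

    void onNext(T value) { if (!isDisposed()) values.add(value); }

    void onError(Throwable t) { if (!isDisposed()) error = t; }

    void onComplete() { if (!isDisposed()) completed = true; }
}

// Hypothetical stand-in for CameraDevice.StateCallback, wired the same way as openCamera().
final class DeviceCallbacks {
    private final FakeEmitter<DeviceStateEvents> emitter;

    DeviceCallbacks(FakeEmitter<DeviceStateEvents> emitter) { this.emitter = emitter; }

    void onOpened() { emitter.onNext(DeviceStateEvents.ON_OPENED); }

    void onClosed() {
        emitter.onNext(DeviceStateEvents.ON_CLOSED);
        emitter.onComplete(); // a closed device ends the stream
    }

    void onDisconnected() {
        emitter.onNext(DeviceStateEvents.ON_DISCONNECTED);
        emitter.onComplete(); // so does a disconnected one
    }

    void onError(int errorCode) {
        emitter.onError(new IllegalStateException("camera error " + errorCode));
    }
}
```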

As I said before, all Camera2 API methods accept a Handler as one of their parameters. By passing null, we receive the callbacks on the Looper of the current thread. In our case, that is the thread on which subscribe was called, i.e. the main thread.

createCaptureSession

Now that we have a CameraDevice, we can open a CaptureSession. Let's not waste any time!

To do this, we use the CameraDevice.createCaptureSession method. Here is its signature:

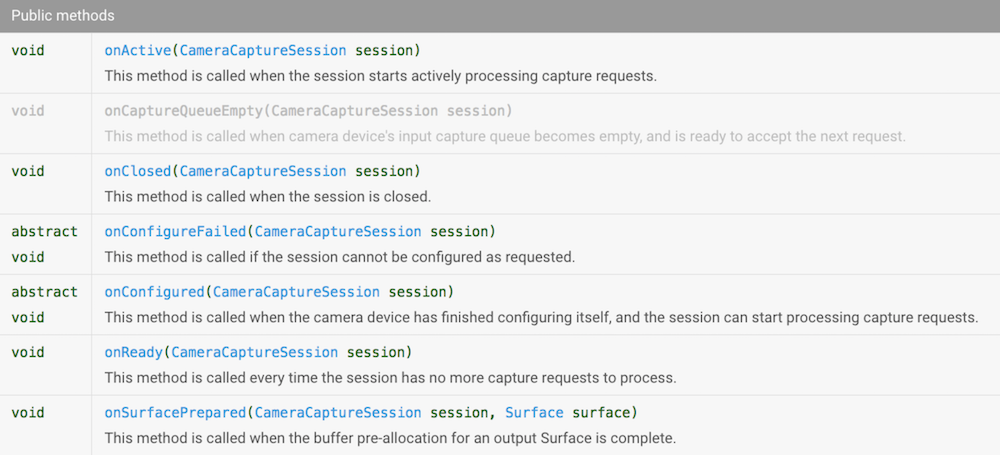

```java
public abstract void createCaptureSession(@NonNull List<Surface> outputs,
        @NonNull CameraCaptureSession.StateCallback callback,
        @Nullable Handler handler) throws CameraAccessException;
```

It takes a list of Surfaces (where to get them is discussed later) and a CameraCaptureSession.StateCallback. Let's see what methods the callback has.

Quite a lot! But we already know how to tame callbacks. Let's create an Observable that emits events when the Camera API calls these methods. To tell them apart, we create an enum.

```java
public enum CaptureSessionStateEvents {
    ON_CONFIGURED,
    ON_READY,
    ON_ACTIVE,
    ON_CLOSED,
    ON_SURFACE_PREPARED
}
```

And to have the CameraCaptureSession object available in the reactive stream, we will emit not just a CaptureSessionStateEvents value but a Pair<CaptureSessionStateEvents, CameraCaptureSession>. Here is what the method creating such an Observable might look like (the checks are again removed for readability):

```java
@NonNull
public static Observable<Pair<CaptureSessionStateEvents, CameraCaptureSession>> createCaptureSession(
        @NonNull CameraDevice cameraDevice,
        @NonNull List<Surface> surfaceList
) {
    return Observable.create(observableEmitter -> {
        cameraDevice.createCaptureSession(surfaceList, new CameraCaptureSession.StateCallback() {
            @Override
            public void onConfigured(@NonNull CameraCaptureSession session) {
                observableEmitter.onNext(new Pair<>(CaptureSessionStateEvents.ON_CONFIGURED, session));
            }

            @Override
            public void onConfigureFailed(@NonNull CameraCaptureSession session) {
                observableEmitter.onError(new CreateCaptureSessionException(session));
            }

            @Override
            public void onReady(@NonNull CameraCaptureSession session) {
                observableEmitter.onNext(new Pair<>(CaptureSessionStateEvents.ON_READY, session));
            }

            @Override
            public void onActive(@NonNull CameraCaptureSession session) {
                observableEmitter.onNext(new Pair<>(CaptureSessionStateEvents.ON_ACTIVE, session));
            }

            @Override
            public void onClosed(@NonNull CameraCaptureSession session) {
                observableEmitter.onNext(new Pair<>(CaptureSessionStateEvents.ON_CLOSED, session));
                observableEmitter.onComplete();
            }

            @Override
            public void onSurfacePrepared(@NonNull CameraCaptureSession session, @NonNull Surface surface) {
                observableEmitter.onNext(new Pair<>(CaptureSessionStateEvents.ON_SURFACE_PREPARED, session));
            }
        }, null);
    });
}
```

setRepeatingRequest

For a live picture from the camera to appear on the screen, you need to continuously receive new images from the device and pass them on for display. The API has a convenient method for this: CameraCaptureSession.setRepeatingRequest.

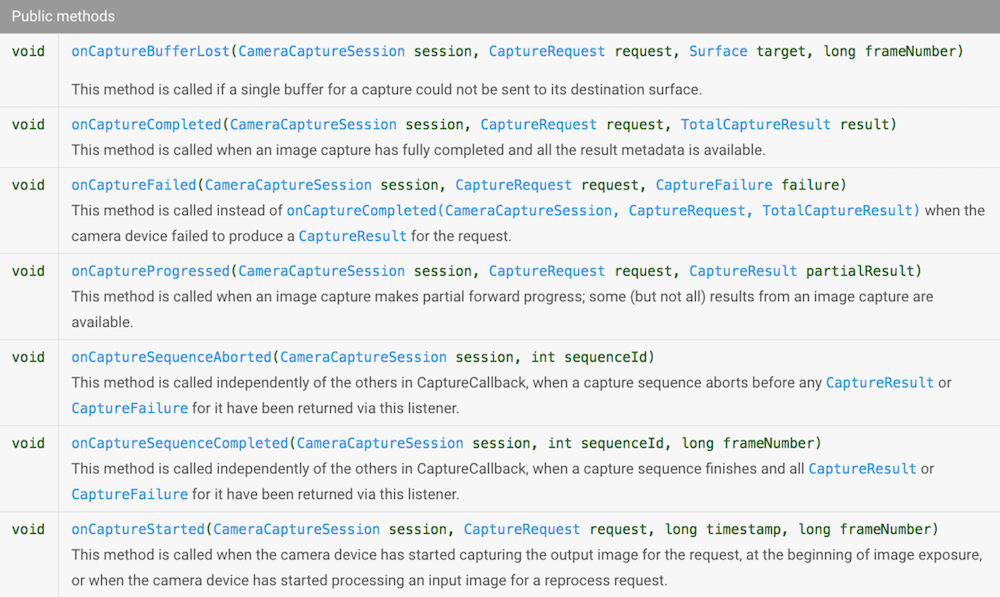

```java
int setRepeatingRequest(@NonNull CaptureRequest request,
        @Nullable CaptureCallback listener,
        @Nullable Handler handler) throws CameraAccessException;
```

We use the technique we already know to make this operation reactive. Let's look at the CameraCaptureSession.CaptureCallback interface.

Again, we want to tell the emitted events apart, so we create an enum.

```java
public enum CaptureSessionEvents {
    ON_STARTED,
    ON_PROGRESSED,
    ON_COMPLETED,
    ON_SEQUENCE_COMPLETED,
    ON_SEQUENCE_ABORTED
}
```

The callback methods receive quite a lot of information that we want to have in the reactive stream, including the CameraCaptureSession, CaptureRequest and CaptureResult, so a plain Pair<> no longer suits us. Let's create a POJO:

```java
public static class CaptureSessionData {
    final CaptureSessionEvents event;
    final CameraCaptureSession session;
    final CaptureRequest request;
    final CaptureResult result;

    CaptureSessionData(CaptureSessionEvents event, CameraCaptureSession session, CaptureRequest request, CaptureResult result) {
        this.event = event;
        this.session = session;
        this.request = request;
        this.result = result;
    }
}
```

We move the creation of the CameraCaptureSession.CaptureCallback into a separate method.

```java
@NonNull
private static CameraCaptureSession.CaptureCallback createCaptureCallback(final ObservableEmitter<CaptureSessionData> observableEmitter) {
    return new CameraCaptureSession.CaptureCallback() {
        @Override
        public void onCaptureStarted(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, long timestamp, long frameNumber) {
        }

        @Override
        public void onCaptureProgressed(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull CaptureResult partialResult) {
        }

        @Override
        public void onCaptureCompleted(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull TotalCaptureResult result) {
            if (!observableEmitter.isDisposed()) {
                observableEmitter.onNext(new CaptureSessionData(CaptureSessionEvents.ON_COMPLETED, session, request, result));
            }
        }

        @Override
        public void onCaptureFailed(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull CaptureFailure failure) {
            if (!observableEmitter.isDisposed()) {
                observableEmitter.onError(new CameraCaptureFailedException(failure));
            }
        }

        @Override
        public void onCaptureSequenceCompleted(@NonNull CameraCaptureSession session, int sequenceId, long frameNumber) {
        }

        @Override
        public void onCaptureSequenceAborted(@NonNull CameraCaptureSession session, int sequenceId) {
        }
    };
}
```

Of all these callbacks, we are interested only in onCaptureCompleted/onCaptureFailed and ignore the rest. If you need them in your projects, they are easy to add.

Now everything is ready to create an Observable .

```java
static Observable<CaptureSessionData> fromSetRepeatingRequest(@NonNull CameraCaptureSession captureSession,
                                                               @NonNull CaptureRequest request) {
    return Observable
            .create(observableEmitter -> captureSession.setRepeatingRequest(request,
                    createCaptureCallback(observableEmitter), null));
}
```

capture

In fact, this step is completely analogous to the previous one, except that we make a single request instead of a repeating one. For this we use the CameraCaptureSession.capture method.

```java
public abstract int capture(@NonNull CaptureRequest request,
        @Nullable CaptureCallback listener,
        @Nullable Handler handler) throws CameraAccessException;
```

It takes exactly the same parameters, so we can reuse the function defined above to create the CaptureCallback.

```java
static Observable<CaptureSessionData> fromCapture(@NonNull CameraCaptureSession captureSession,
                                                  @NonNull CaptureRequest request) {
    return Observable
            .create(observableEmitter -> captureSession.capture(request,
                    createCaptureCallback(observableEmitter), null));
}
```

Surface preparation

The Camera2 API lets you pass, as part of a request, the list of Surfaces that will receive data from the device. We need two Surfaces:

- to display the preview on the screen,

- to capture a snapshot to a JPEG file.

TextureView

To display the preview on the screen, we will use a TextureView. To get a Surface from a TextureView, the recommended approach is the TextureView.setSurfaceTextureListener method: the TextureView notifies the listener when its SurfaceTexture is ready for use.

This time, let's create a PublishSubject that emits events when the TextureView calls the listener methods.

```java
private final PublishSubject<SurfaceTexture> mOnSurfaceTextureAvailable = PublishSubject.create();

@Override
public void onCreate(@Nullable Bundle saveState) {
    mTextureView.setSurfaceTextureListener(new TextureView.SurfaceTextureListener() {
        @Override
        public void onSurfaceTextureAvailable(SurfaceTexture surface, int width, int height) {
            mOnSurfaceTextureAvailable.onNext(surface);
        }

        // The other listener methods are abstract too, so they need (empty) bodies.
        @Override
        public void onSurfaceTextureSizeChanged(SurfaceTexture surface, int width, int height) { }
        @Override
        public boolean onSurfaceTextureDestroyed(SurfaceTexture surface) { return true; }
        @Override
        public void onSurfaceTextureUpdated(SurfaceTexture surface) { }
    });
    ...
}
```

Using a PublishSubject, we avoid possible problems with multiple subscriptions: we set the SurfaceTextureListener once in onCreate and carry on peacefully. A PublishSubject can be subscribed to as many times as you like, and it delivers events to all its subscribers.

The Camera2 API has one subtlety: you cannot set the image size explicitly. Instead, the camera picks one of the resolutions it supports based on the size of the Surface it is given. So we have to resort to a trick: get the list of image sizes the camera supports, choose the one we like best, and then set the buffer size to exactly that size.

```java
private void setupSurface(@NonNull SurfaceTexture surfaceTexture) {
    surfaceTexture.setDefaultBufferSize(mCameraParams.previewSize.getWidth(), mCameraParams.previewSize.getHeight());
    mSurface = new Surface(surfaceTexture);
}
```

Also, if we want the image to keep its proportions, we have to give our TextureView the same aspect ratio. To do this, we extend it and override the onMeasure method:

```java
public class AutoFitTextureView extends TextureView {

    private int mRatioWidth = 0;
    private int mRatioHeight = 0;

    ...

    public void setAspectRatio(int width, int height) {
        mRatioWidth = width;
        mRatioHeight = height;
        requestLayout();
    }

    @Override
    protected void onMeasure(int widthMeasureSpec, int heightMeasureSpec) {
        int width = MeasureSpec.getSize(widthMeasureSpec);
        int height = MeasureSpec.getSize(heightMeasureSpec);
        if (0 == mRatioWidth || 0 == mRatioHeight) {
            setMeasuredDimension(width, height);
        } else {
            if (width < height * mRatioWidth / mRatioHeight) {
                setMeasuredDimension(width, width * mRatioHeight / mRatioWidth);
            } else {
                setMeasuredDimension(height * mRatioWidth / mRatioHeight, height);
            }
        }
    }
}
```

Writing to a file

To save an image from Surface to a file, let's use the ImageReader class.

A few words about choosing the size for the ImageReader. First, it must be one of the sizes supported by the camera. Second, its aspect ratio should match the one we chose for the preview.
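CameraStrategy.getStillImageSize, which initImageReader calls later, is not shown in the article. Here is a JDK-only sketch of one way to apply the two rules above; it is an assumption, not the project's code. Dim is a hypothetical stand-in for android.util.Size, and the list stands in for what StreamConfigurationMap.getOutputSizes(ImageFormat.JPEG) would return. Among the sizes whose aspect ratio exactly matches the preview (compared by cross-multiplication, which avoids floating-point error) it picks the largest, and falls back to the largest size overall.

```java
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-in for android.util.Size.
final class Dim {
    final int width;
    final int height;

    Dim(int width, int height) {
        this.width = width;
        this.height = height;
    }

    long area() { return (long) width * height; }

    @Override
    public String toString() { return width + "x" + height; }
}

final class StillSizeChooser {
    // Largest supported size with exactly the preview's aspect ratio;
    // otherwise the largest supported size. Assumes a non-empty list.
    static Dim choose(List<Dim> supported, Dim preview) {
        Comparator<Dim> byArea = Comparator.comparingLong(Dim::area);
        return supported.stream()
                // w1/h1 == w2/h2  <=>  w1*h2 == h1*w2, in exact integer arithmetic
                .filter(s -> (long) s.width * preview.height == (long) s.height * preview.width)
                .max(byArea)
                .orElseGet(() -> supported.stream().max(byArea).get());
    }
}
```

With a 1440x1080 (4:3) preview this picks the largest 4:3 JPEG size, and with a 1920x1080 preview the largest 16:9 one.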

To be notified by the ImageReader that an image is ready, we use the setOnImageAvailableListener method:

```java
void setOnImageAvailableListener(ImageReader.OnImageAvailableListener listener, Handler handler)
```

The listener we pass in implements just one method, onImageAvailable.

Every time the Camera API writes an image to the Surface provided by our ImageReader, it calls this callback.

Let's make this operation reactive: create an Observable that will generate a message every time ImageReader is ready to provide an image.

```java
@NonNull
public static Observable<ImageReader> createOnImageAvailableObservable(@NonNull ImageReader imageReader) {
    return Observable.create(subscriber -> {
        ImageReader.OnImageAvailableListener listener = reader -> {
            if (!subscriber.isDisposed()) {
                subscriber.onNext(reader);
            }
        };
        imageReader.setOnImageAvailableListener(listener, null);
        // remove the listener on unsubscribe
        subscriber.setCancellable(() -> imageReader.setOnImageAvailableListener(null, null));
    });
}
```

Notice that here we used the ObservableEmitter.setCancellable method to remove the listener when the subscriber unsubscribes from the Observable.
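The install-on-subscribe, remove-on-dispose lifecycle can be modeled in plain Java. This is a hypothetical sketch rather than RxJava internals: ListenerSource stands in for ImageReader (which, like setOnImageAvailableListener, holds at most one listener), and the returned Runnable plays the role of the Disposable whose disposal runs the Cancellable.

```java
import java.util.function.Consumer;

// Hypothetical single-listener source standing in for ImageReader.
final class ListenerSource<T> {
    private Consumer<T> listener;

    void setListener(Consumer<T> listener) { this.listener = listener; }

    boolean hasListener() { return listener != null; }

    // Stands in for the camera writing a new image into the reader's Surface.
    void fire(T value) {
        Consumer<T> current = listener;
        if (current != null) {
            current.accept(value);
        }
    }
}

// Models create() + setCancellable(): subscribing installs the listener, and the
// returned Runnable (our "dispose") removes it again.
final class ListenerSubscription {
    static <T> Runnable subscribe(ListenerSource<T> source, Consumer<T> onNext) {
        source.setListener(onNext);
        return () -> source.setListener(null); // same role as the Cancellable above
    }
}
```

Without the cancellable step, the reader would keep calling a listener whose subscriber is gone, and the listener would keep the subscriber reachable.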

Writing to a file is a lengthy operation; we will make it reactive using the fromCallable method.

```java
@NonNull
public static Single<File> save(@NonNull Image image, @NonNull File file) {
    return Single.fromCallable(() -> {
        try (FileChannel output = new FileOutputStream(file).getChannel()) {
            // For JPEG images, the first plane holds the compressed bytes
            output.write(image.getPlanes()[0].getBuffer());
            return file;
        } finally {
            image.close();
        }
    });
}
```

Now we can describe the following sequence of actions: when a finished image appears in the ImageReader, we write it to a file on a Schedulers.io() worker thread, then switch to the UI thread and notify the UI that the file is ready.

```java
private void initImageReader() {
    Size sizeForImageReader = CameraStrategy.getStillImageSize(mCameraParams.cameraCharacteristics, mCameraParams.previewSize);
    mImageReader = ImageReader.newInstance(sizeForImageReader.getWidth(), sizeForImageReader.getHeight(), ImageFormat.JPEG, 1);
    mCompositeDisposable.add(
            ImageSaverRxWrapper.createOnImageAvailableObservable(mImageReader)
                    .observeOn(Schedulers.io())
                    .flatMap(imageReader -> ImageSaverRxWrapper.save(imageReader.acquireLatestImage(), mFile).toObservable())
                    .observeOn(AndroidSchedulers.mainThread())
                    .subscribe(file -> mCallback.onPhotoTaken(file.getAbsolutePath(), getLensFacingPhotoType()))
    );
}
```

Starting the preview

So, we are thoroughly prepared: we can already create Observables for the main asynchronous actions the application needs. Next comes the most interesting part: wiring up the reactive streams.

To warm up, let's open the camera once the SurfaceTexture is ready for use.

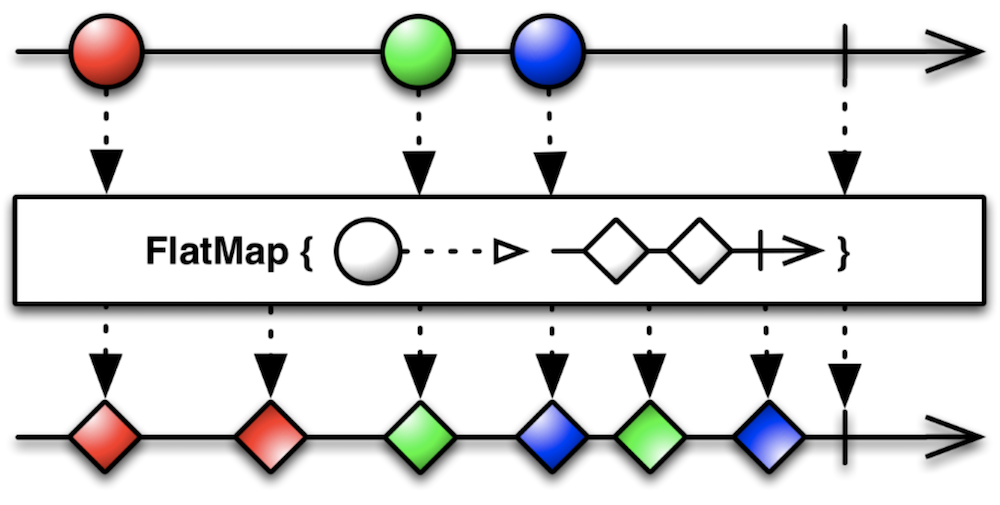

```java
Observable<Pair<CameraRxWrapper.DeviceStateEvents, CameraDevice>> cameraDeviceObservable = mOnSurfaceTextureAvailable
        .firstElement()
        .doAfterSuccess(this::setupSurface)
        .doAfterSuccess(__ -> initImageReader())
        .toObservable()
        .flatMap(__ -> CameraRxWrapper.openCamera(mCameraParams.cameraId, mCameraManager))
        .share();
```

The key operator here is flatMap.

In our case, once the SurfaceTexture is ready, flatMap calls the openCamera function and passes the events of the Observable it returns further down the reactive stream.

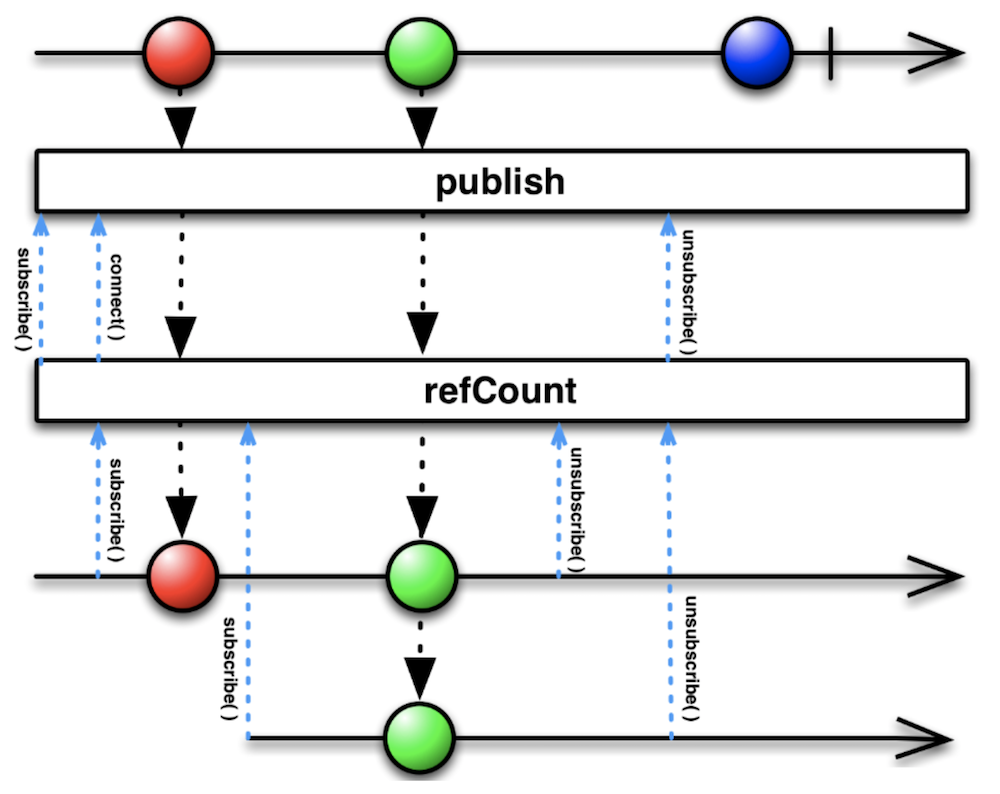

It is also important to understand why the share operator is used at the end of the chain. It is equivalent to publish().refCount().

If you study the marble diagram of share for a while, you will notice that the result is very similar to what a PublishSubject gives us. Indeed, we are solving a similar problem: if we subscribe to our Observable several times, we do not want to reopen the camera each time.
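The refCount() half of share() can be illustrated with a hypothetical JDK-only class. It does not model the publish() half (forwarding each event to all current subscribers); it only shows when the upstream gets connected and disconnected: on the first subscriber, and after the last one leaves.

```java
// Hypothetical model of the refCount() idea: one upstream "connection"
// (opening the camera, in our case) shared by all current subscribers.
final class RefCountedConnection {
    private final Runnable connect;
    private final Runnable disconnect;
    private int subscribers;

    RefCountedConnection(Runnable connect, Runnable disconnect) {
        this.connect = connect;
        this.disconnect = disconnect;
    }

    // Returns a Runnable that plays the role of Disposable.dispose().
    synchronized Runnable subscribe() {
        if (subscribers++ == 0) {
            connect.run(); // first subscriber: connect upstream
        }
        final boolean[] disposed = {false};
        return () -> {
            synchronized (RefCountedConnection.this) {
                if (disposed[0]) {
                    return; // disposing twice has no further effect
                }
                disposed[0] = true;
                if (--subscribers == 0) {
                    disconnect.run(); // last subscriber gone: disconnect upstream
                }
            }
        };
    }
}
```

As with RxJava2's refCount(), a subscriber that arrives after the count has dropped to zero triggers a fresh connection; in our chain that would mean opening the camera again.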

For convenience, let's introduce a couple more Observables.

```java
Observable<CameraDevice> openCameraObservable = cameraDeviceObservable
        .filter(pair -> pair.first == CameraRxWrapper.DeviceStateEvents.ON_OPENED)
        .map(pair -> pair.second)
        .share();

Observable<CameraDevice> closeCameraObservable = cameraDeviceObservable
        .filter(pair -> pair.first == CameraRxWrapper.DeviceStateEvents.ON_CLOSED)
        .map(pair -> pair.second)
        .share();
```

openCameraObservable emits events when the camera has been opened successfully, and closeCameraObservable when it has been closed.

Let's take one more step: after successfully opening the camera, we will open the session.

```java
Observable<Pair<CameraRxWrapper.CaptureSessionStateEvents, CameraCaptureSession>> createCaptureSessionObservable = openCameraObservable
        .flatMap(cameraDevice -> CameraRxWrapper
                .createCaptureSession(cameraDevice, Arrays.asList(mSurface, mImageReader.getSurface()))
        )
        .share();
```

By analogy, we create a couple more Observables that signal that the session has been opened or closed.

```java
Observable<CameraCaptureSession> captureSessionConfiguredObservable = createCaptureSessionObservable
        .filter(pair -> pair.first == CameraRxWrapper.CaptureSessionStateEvents.ON_CONFIGURED)
        .map(pair -> pair.second)
        .share();

Observable<CameraCaptureSession> captureSessionClosedObservable = createCaptureSessionObservable
        .filter(pair -> pair.first == CameraRxWrapper.CaptureSessionStateEvents.ON_CLOSED)
        .map(pair -> pair.second)
        .share();
```

Finally, we can set a repeating request to display the preview.

```java
Observable<CaptureSessionData> previewObservable = captureSessionConfiguredObservable
        .flatMap(cameraCaptureSession -> {
            CaptureRequest.Builder previewBuilder = createPreviewBuilder(cameraCaptureSession, mSurface);
            return CameraRxWrapper.fromSetRepeatingRequest(cameraCaptureSession, previewBuilder.build());
        })
        .share();
```

Now all it takes is previewObservable.subscribe(), and a live picture from the camera appears on the screen!

A small digression. If you inline all the intermediate Observables, you get this chain of operators:

```java
mOnSurfaceTextureAvailable
        .firstElement()
        .doAfterSuccess(this::setupSurface)
        .doAfterSuccess(__ -> initImageReader())
        .toObservable()
        .flatMap(__ -> CameraRxWrapper.openCamera(mCameraParams.cameraId, mCameraManager))
        .filter(pair -> pair.first == CameraRxWrapper.DeviceStateEvents.ON_OPENED)
        .map(pair -> pair.second)
        .flatMap(cameraDevice -> CameraRxWrapper
                .createCaptureSession(cameraDevice, Arrays.asList(mSurface, mImageReader.getSurface()))
        )
        .filter(pair -> pair.first == CameraRxWrapper.CaptureSessionStateEvents.ON_CONFIGURED)
        .map(pair -> pair.second)
        .flatMap(cameraCaptureSession -> {
            CaptureRequest.Builder previewBuilder = createPreviewBuilder(cameraCaptureSession, mSurface);
            return CameraRxWrapper.fromSetRepeatingRequest(cameraCaptureSession, previewBuilder.build());
        })
        .subscribe();
```

And this is enough to show the preview. Impressive, isn't it?

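In fact, this solution has problems with releasing resources, and it cannot take pictures yet. I showed it only so that the whole chain is visible at once; from here on we keep working with the intermediate Observables.

To be able to unsubscribe, we need to keep the Disposable returned by each subscribe call. The most convenient way to do that is a CompositeDisposable.

```java
private final CompositeDisposable mCompositeDisposable = new CompositeDisposable();

private void unsubscribe() {
    mCompositeDisposable.clear();
}
```

Each subscription is registered as mCompositeDisposable.add(...subscribe()), so that a single unsubscribe() call releases all of them. Note that clear() disposes everything added so far but, unlike dispose(), leaves the container usable for new subscriptions.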

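Building a CaptureRequest

Attentive readers will have noticed that we used the createPreviewBuilder method without describing it. Let's see what it contains.

```java
@NonNull
CaptureRequest.Builder createPreviewBuilder(CameraCaptureSession captureSession, Surface previewSurface) throws CameraAccessException {
    CaptureRequest.Builder builder = captureSession.getDevice().createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
    builder.addTarget(previewSurface);
    setup3Auto(builder);
    return builder;
}
```

Here we create a preview request from the TEMPLATE_PREVIEW template, add the preview Surface as its target, and ask for Auto Focus, Auto Exposure and Auto White Balance (the three A's). To do this, we need to set several flags.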
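The flag-setting method that follows calls a contains(int[], int) helper the article does not show. A plausible JDK-only version is sketched here together with the autofocus decision from setup3Auto, so the fallback rules can be checked off-device. The helper body and the name chooseAfMode are assumptions; the two constants copy the values of CaptureRequest.CONTROL_AF_MODE_AUTO and CONTROL_AF_MODE_CONTINUOUS_PICTURE. A null array is treated as "mode not available", since CameraCharacteristics.get returns null for keys a device does not report.

```java
final class ModeUtils {
    static final int AF_MODE_AUTO = 1;               // CaptureRequest.CONTROL_AF_MODE_AUTO
    static final int AF_MODE_CONTINUOUS_PICTURE = 4; // CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE

    // Hypothetical helper matching the contains(modes, mode) calls in setup3Auto().
    static boolean contains(int[] modes, int mode) {
        if (modes == null) {
            return false; // key not reported by the device: treat the mode as unavailable
        }
        for (int m : modes) {
            if (m == mode) {
                return true;
            }
        }
        return false;
    }

    // The AF decision from setup3Auto(); null means "skip AF" (fixed-focus lens).
    static Integer chooseAfMode(Float minFocusDistance, int[] availableAfModes) {
        if (minFocusDistance == null || minFocusDistance == 0) {
            return null;
        }
        return contains(availableAfModes, AF_MODE_CONTINUOUS_PICTURE)
                ? AF_MODE_CONTINUOUS_PICTURE
                : AF_MODE_AUTO;
    }
}
```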

```java
private void setup3Auto(CaptureRequest.Builder builder) {
    // Enable auto-magical 3A run by camera device
    builder.set(CaptureRequest.CONTROL_MODE, CaptureRequest.CONTROL_MODE_AUTO);

    Float minFocusDist = mCameraParams.cameraCharacteristics.get(CameraCharacteristics.LENS_INFO_MINIMUM_FOCUS_DISTANCE);

    // If MINIMUM_FOCUS_DISTANCE is 0, lens is fixed-focus and we need to skip the AF run.
    boolean noAFRun = (minFocusDist == null || minFocusDist == 0);

    if (!noAFRun) {
        // If there is a "continuous picture" mode available, use it, otherwise default to AUTO.
        int[] afModes = mCameraParams.cameraCharacteristics.get(CameraCharacteristics.CONTROL_AF_AVAILABLE_MODES);
        if (contains(afModes, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE)) {
            builder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);
        } else {
            builder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_AUTO);
        }
    }

    // If there is an auto-magical flash control mode available, use it, otherwise default to
    // the "on" mode, which is guaranteed to always be available.
    int[] aeModes = mCameraParams.cameraCharacteristics.get(CameraCharacteristics.CONTROL_AE_AVAILABLE_MODES);
    if (contains(aeModes, CaptureRequest.CONTROL_AE_MODE_ON_AUTO_FLASH)) {
        builder.set(CaptureRequest.CONTROL_AE_MODE, CaptureRequest.CONTROL_AE_MODE_ON_AUTO_FLASH);
    } else {
        builder.set(CaptureRequest.CONTROL_AE_MODE, CaptureRequest.CONTROL_AE_MODE_ON);
    }

    // If there is an auto-magical white balance control mode available, use it.
    int[] awbModes = mCameraParams.cameraCharacteristics.get(CameraCharacteristics.CONTROL_AWB_AVAILABLE_MODES);
    if (contains(awbModes, CaptureRequest.CONTROL_AWB_MODE_AUTO)) {
        // Allow AWB to run auto-magically if this device supports this
        builder.set(CaptureRequest.CONTROL_AWB_MODE, CaptureRequest.CONTROL_AWB_MODE_AUTO);
    }
}
```

To react to the shutter button, we could use the RxBinding library, but we will do something simpler.

```java
private final PublishSubject<Object> mOnShutterClick = PublishSubject.create();

public void takePhoto() {
    mOnShutterClick.onNext(this);
}
```

Now let's describe what should happen on a click. The picture should be taken only while the preview is running, so we combine the clicks with previewObservable using combineLatest.

```java
Observable.combineLatest(previewObservable, mOnShutterClick, (captureSessionData, o) -> captureSessionData)
```

combineLatest emits whenever either source emits, and previewObservable produces an event for every preview frame, so after a click the combined stream would keep firing.

```java
.firstElement().toObservable()
```

Therefore we take only the first combined event.
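The effect of combining combineLatest with firstElement can be illustrated with a small JDK-only model. FirstCombined is hypothetical and single-threaded, uses null to mean "no value yet", and is not how RxJava implements these operators. A click that arrives before any preview frame is held until the first frame comes in, and later frames or clicks are ignored once the capture has been triggered.

```java
import java.util.function.BiFunction;
import java.util.function.Consumer;

// Hypothetical, single-threaded model of combineLatest(a, b, f).firstElement().
final class FirstCombined<A, B, R> {
    private final BiFunction<A, B, R> combiner;
    private final Consumer<R> downstream;
    private A latestA;
    private B latestB;
    private boolean done;

    FirstCombined(BiFunction<A, B, R> combiner, Consumer<R> downstream) {
        this.combiner = combiner;
        this.downstream = downstream;
    }

    void onA(A value) { latestA = value; tryEmit(); } // e.g. a preview frame

    void onB(B value) { latestB = value; tryEmit(); } // e.g. a shutter click

    private void tryEmit() {
        // combineLatest: only once both sides have produced a value;
        // firstElement: only the first combination is passed on.
        if (!done && latestA != null && latestB != null) {
            done = true;
            downstream.accept(combiner.apply(latestA, latestB));
        }
    }
}
```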

```java
.flatMap(this::waitForAf)
.flatMap(this::waitForAe)
```

Then we wait for autofocus and auto exposure to complete.

```java
.flatMap(captureSessionData -> captureStillPicture(captureSessionData.session))
```

And finally, we take the picture. Putting it all together:

```java
Observable
        .combineLatest(previewObservable, mOnShutterClick, (captureSessionData, o) -> captureSessionData)
        .firstElement().toObservable()
        .flatMap(this::waitForAf)
        .flatMap(this::waitForAe)
        .flatMap(captureSessionData -> captureStillPicture(captureSessionData.session))
        .subscribe(__ -> {
        }, this::onError);
```

Let's see what is inside captureStillPicture.

```java
@NonNull
private Observable<CaptureSessionData> captureStillPicture(@NonNull CameraCaptureSession cameraCaptureSession) {
    return Observable
            .fromCallable(() -> createStillPictureBuilder(cameraCaptureSession.getDevice()))
            .flatMap(builder -> CameraRxWrapper.fromCapture(cameraCaptureSession, builder.build()));
}
```

Everything here is familiar: we build a request and pass it to capture. The request is created from the TEMPLATE_STILL_CAPTURE template, targets the ImageReader Surface so that the picture ends up in a JPEG file, gets the same 3A settings as the preview, and carries the JPEG orientation.
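The builder delegates the JPEG rotation to CameraOrientationHelper.getJpegOrientation, which the article does not show. Here is a JDK-only sketch of what such a helper might compute; it is an assumption, not the project's code. It follows the sample formula in the CaptureRequest.JPEG_ORIENTATION reference documentation, after converting the display rotation (Surface.ROTATION_0 to ROTATION_270, i.e. 0 to 3) into the device's clockwise rotation in degrees. Display.getRotation() reports the rotation of the drawn content, which is opposite to the physical rotation, so ROTATION_90 corresponds to a device turned 270 degrees clockwise.

```java
// Hypothetical JPEG orientation helper. Inputs would come from
// CameraCharacteristics.SENSOR_ORIENTATION, LENS_FACING and Display.getRotation().
final class JpegOrientation {
    static int compute(int sensorOrientation, boolean frontFacing, int displayRotation) {
        // Display rotation is opposite to the physical rotation:
        // ROTATION_90 (1) means the device is turned 270 degrees clockwise.
        int deviceOrientation = (360 - displayRotation * 90) % 360;
        if (frontFacing) {
            // Reverse the device orientation for front-facing cameras, as the reference formula does.
            deviceOrientation = -deviceOrientation;
        }
        // Rotation that makes the JPEG upright relative to the device orientation.
        return (sensorOrientation + deviceOrientation + 360) % 360;
    }
}
```

For a back camera with a 90-degree sensor this yields 90 in portrait and 0 or 180 in the two landscape orientations, which agrees with the rotation table used in Google's Camera2Basic sample.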

```java
@NonNull
private CaptureRequest.Builder createStillPictureBuilder(@NonNull CameraDevice cameraDevice) throws CameraAccessException {
    final CaptureRequest.Builder builder;
    builder = cameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_STILL_CAPTURE);
    builder.set(CaptureRequest.CONTROL_CAPTURE_INTENT, CaptureRequest.CONTROL_CAPTURE_INTENT_STILL_CAPTURE);
    builder.set(CaptureRequest.CONTROL_AE_PRECAPTURE_TRIGGER, CameraMetadata.CONTROL_AE_PRECAPTURE_TRIGGER_IDLE);
    builder.addTarget(mImageReader.getSurface());
    setup3Auto(builder);

    int rotation = mWindowManager.getDefaultDisplay().getRotation();
    builder.set(CaptureRequest.JPEG_ORIENTATION, CameraOrientationHelper.getJpegOrientation(mCameraParams.cameraCharacteristics, rotation));
    return builder;
}
```

Finally, the resources have to be released properly. We do this when onPause is called.

```java
Observable.combineLatest(previewObservable, mOnPauseSubject, (state, o) -> state)
        .firstElement().toObservable()
        .doOnNext(captureSessionData -> captureSessionData.session.close())
        .flatMap(__ -> captureSessionClosedObservable)
        .doOnNext(cameraCaptureSession -> cameraCaptureSession.getDevice().close())
        .flatMap(__ -> closeCameraObservable)
        .doOnNext(__ -> closeImageReader())
        .subscribe(__ -> unsubscribe(), this::onError);
```

Here we close the session and wait until it reports that it is closed, then close the camera device and wait for it in turn, and only then close the ImageReader and unsubscribe.
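The shutdown chain is strictly sequential: each step begins only after the previous resource reports that it has closed. For comparison, here is the same ordering expressed with the JDK's CompletableFuture.thenCompose, which plays the role flatMap plays above for single-result steps. This is a swapped-in technique for illustration, not how the project works; AsyncCloseable and its log messages are hypothetical, and each returned future stands in for an onClosed callback (here it completes immediately).

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;

// Hypothetical resource whose close() is asynchronous, like a capture session or a
// camera device that reports completion through onClosed().
final class AsyncCloseable {
    private final String name;
    private final List<String> log;

    AsyncCloseable(String name, List<String> log) {
        this.name = name;
        this.log = log;
    }

    CompletableFuture<Void> close() {
        log.add("close " + name);
        CompletableFuture<Void> closed = new CompletableFuture<>();
        // On a device this would happen later, inside onClosed(); here it is immediate.
        log.add(name + " closed");
        closed.complete(null);
        return closed;
    }
}

final class Shutdown {
    // session -> device -> image reader, each step starting only after the previous one finished.
    static CompletableFuture<Void> closeAll(AsyncCloseable session, AsyncCloseable device, Runnable closeImageReader) {
        return session.close()
                .thenCompose(ignored -> device.close())
                .thenRun(closeImageReader);
    }
}
```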

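Conclusions

We have built the whole cycle: opening the camera, running the preview, taking a picture and releasing the resources.

RxJava turned out to be a good fit for an asynchronous API like Camera2: instead of Callback Hell, we get a chain of operators that describes the camera's behavior. Give it a try!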


Source: https://habr.com/ru/post/330080/
