Rate limiting requests in NGINX

Image: Flickr user Wonderlane

NGINX is great! But its documentation on rate limiting seemed to me, how to put it, somewhat limited. So I decided to write this guide to rate limiting and traffic shaping in NGINX.

We are going to:

- describe the NGINX directives,

- explain NGINX's accept/reject logic,

- visualize how traffic bursts are handled with various settings.

In addition, I created a GitHub repository and a Docker image you can use to experiment and reproduce the tests in this article. It is always easier to learn by doing.

NGINX request rate limiting directives

This article covers ngx_http_limit_req_module, which provides the directives limit_req_zone, limit_req, limit_req_status and limit_req_log_level. The last two let you set the HTTP status code returned for rejected requests and the log level used when requests are rejected or delayed.

What confuses people most is the logic NGINX uses to reject requests.

First, let's look at the limit_req directive, which requires the zone parameter. It also accepts the optional parameters burst and nodelay.

The following concepts are used here:

- zone defines a “bucket”: a shared space in which incoming requests are counted. All requests that fall into the same bucket are counted and limited together. This is what lets you set limits per URL, per IP address, and so on.

- burst is optional. When set, it defines how many requests can be accepted in excess of the base rate limit. Keep in mind that burst is an absolute number of requests, not a rate.

- nodelay is also optional and is used together with burst. We will see below why it is needed.

How does NGINX decide to accept or reject a request?

When you define a zone, you set its rate. For example, 300r/m allows 300 requests per minute, and 5r/s allows 5 requests per second.

Examples of directives:

```nginx
limit_req_zone $request_uri zone=zone1:10m rate=300r/m;
limit_req_zone $request_uri zone=zone2:10m rate=5r/s;
```

It is important to understand that these two zones have the same limit. From the rate parameter, NGINX calculates the request frequency and, from it, the interval after which a new request can be accepted. To do this, NGINX uses an algorithm called the leaky bucket.
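To make the rate-to-interval arithmetic concrete, here is a small Python sketch. The helper name `rate_to_interval` is hypothetical. It only understands the `r/s` and `r/m` forms used in this article and is not part of NGINX.

```python
import re


def rate_to_interval(rate: str) -> float:
    """Convert an NGINX-style rate such as "300r/m" or "5r/s" into the
    number of seconds between two allowed requests (hypothetical helper)."""
    match = re.fullmatch(r"(\d+)r/([sm])", rate)
    if match is None:
        raise ValueError(f"unsupported rate: {rate!r}")
    count, unit = int(match.group(1)), match.group(2)
    seconds_per_unit = 1 if unit == "s" else 60
    return seconds_per_unit / count


print(rate_to_interval("300r/m"))  # 0.2
print(rate_to_interval("5r/s"))    # 0.2
```

Both zones from the example come out to the same 0.2-second interval. That is why NGINX treats them identically.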

For NGINX, 300r/m and 5r/s are the same: it lets one request through every 0.2 s. Think of it this way: every 0.2 seconds NGINX sets a flag that allows one request to be accepted. When a request arrives for this zone, NGINX clears the flag and processes the request. If another request arrives before the timer measuring the time between requests has fired, that request is rejected with status code 503 (the default). If the time has elapsed and the flag is already set, nothing happens.
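The accept/reject rule without burst fits in a few lines of Python. This is a simplified model, not NGINX source code. The "flag" is represented as the earliest time at which the next request may be accepted, and that time only moves forward when a request is accepted. The function name `limit_without_burst` is hypothetical, and arrival times must be sorted.

```python
def limit_without_burst(arrivals, interval):
    """Return True (accepted) or False (rejected) for each sorted arrival time.

    Simplified model: a request is accepted only if at least `interval`
    seconds have passed since the previously *accepted* request.
    """
    next_allowed = float("-inf")  # the "flag" starts out set
    decisions = []
    for t in arrivals:
        if t >= next_allowed:
            decisions.append(True)          # flag was set: accept and clear it
            next_allowed = t + interval     # flag is set again after one interval
        else:
            decisions.append(False)         # too early: rejected (503 by default)
    return decisions


print(limit_without_burst([0.0, 0.1, 0.25, 0.3, 0.5], interval=0.2))
# [True, False, True, False, True]
```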

Do I need rate limiting or traffic shaping?

Now let's talk about the burst parameter. Imagine that the flag described above can take values greater than one. In that case, it represents the maximum number of requests NGINX may let through within a single burst.

This is no longer a “leaky bucket” but a “token bucket”. The rate parameter still defines the time interval between requests, but instead of a true/false token we now have a counter from 0 to 1 + burst. The counter is incremented each time the calculated interval elapses (the timer fires), up to a maximum of 1 + burst. Once again: burst is a number of requests, not a rate.

When a new request arrives, NGINX checks whether a token is available (counter > 0). If not, the request is rejected. Otherwise, the request is accepted and will be processed, and the token is consumed (the counter is decremented by one).
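The token counter described above can be sketched as a small Python class. This follows the article's token-bucket mental model, not NGINX's actual code. Two details are assumptions: the class name `TokenCounter` is hypothetical, and the bucket is assumed to start full.

```python
class TokenCounter:
    """Token-bucket model of limit_req: capacity 1 + burst, refilled by one
    token per interval. Simplified sketch; assumes the bucket starts full."""

    def __init__(self, interval: float, burst: int):
        self.interval = interval
        self.capacity = 1 + burst
        self.tokens = self.capacity   # assumption: start with a full bucket
        self.last_refill = 0.0        # time of the last whole-interval refill

    def _refill(self, now: float) -> None:
        # Add one token for every whole interval that has elapsed (timer ticks).
        ticks = int((now - self.last_refill) // self.interval)
        if ticks > 0:
            self.tokens = min(self.capacity, self.tokens + ticks)
            self.last_refill += ticks * self.interval

    def try_acquire(self, now: float) -> bool:
        """Consume a token if one is available; otherwise reject."""
        self._refill(now)
        if self.tokens > 0:
            self.tokens -= 1
            return True
        return False


bucket = TokenCounter(interval=1.0, burst=3)   # 1r/s, burst=3
print([bucket.try_acquire(0.0) for _ in range(5)])  # [True, True, True, True, False]
print(bucket.try_acquire(1.0))                       # True: one token refilled
```

This class only decides accept or reject. When an accepted request is actually processed is a separate question.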

So if there are unused burst tokens, NGINX accepts the request. But when does it process it?

Say we set the limit to 5r/s. NGINX accepts requests in excess of that rate as long as burst tokens are available, but it delays their processing so that the configured rate is respected. These burst requests are therefore processed with some delay, or they may time out.

In other words, NGINX never exceeds the rate set for the zone. Instead, it queues the additional requests and processes them with a delay.

Here is a simple example: suppose we have a limit of 1r/s and burst=3. What happens if NGINX receives 5 requests at once?

- The first one is accepted and processed.

- Since at most 1 + 3 requests are allowed, one request is immediately rejected with status code 503.

- The three remaining requests are processed one after another, but not instantly. NGINX lets them through at 1r/s, staying within the configured limit, provided no new requests arrive that also consume the quota. Once the queue is empty, the burst counter starts increasing again (the token bucket starts to refill).
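The timing of the 1r/s, burst=3 example can be written as simple arithmetic. The following is a hypothetical helper, `burst_schedule`. It assumes all requests arrive at the same instant, the bucket is full, and no other traffic shares the zone. It returns how many seconds after arrival each request is passed on, or `None` for a rejection.

```python
def burst_schedule(n_requests, burst, interval):
    """Seconds after a simultaneous arrival at which each request is handled,
    or None if it is rejected. Assumes a full bucket and no other traffic."""
    schedule = []
    for i in range(n_requests):
        if i <= burst:                      # 1 regular slot + `burst` queued slots
            schedule.append(i * interval)   # queued requests leave at the zone rate
        else:
            schedule.append(None)           # no token left: rejected immediately
    return schedule


print(burst_schedule(5, burst=3, interval=1.0))
# [0.0, 1.0, 2.0, 3.0, None]
```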

When NGINX is used as a proxy, the services behind it receive requests at 1r/s and know nothing about the traffic spikes the proxy has smoothed out.

So we have just configured traffic shaping: we use delays to absorb bursts of requests and smooth out the flow.

nodelay

nodelay tells NGINX to accept requests within the window defined by burst and to process them immediately, just like regular requests.

As a result, traffic spikes still reach the services behind NGINX, but they are capped at the burst value.
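nodelay removes the delay, but not the refill rate. Burst capacity spent on one spike is only recovered at the configured rate. The sketch below tracks an "excess" value (how far the bucket is ahead of its rate) instead of counting tokens. This is roughly how NGINX's module does its bookkeeping internally, but the code is a simplified model, and `limit_nodelay` is a hypothetical name.

```python
def limit_nodelay(arrivals, rate, burst):
    """Accept/reject decisions for `burst=<burst> nodelay` (simplified model).

    `excess` is how many requests the bucket is ahead of its rate. It drains
    at `rate` requests per second and may never exceed `burst`.
    """
    excess, last = 0.0, None
    decisions = []
    for t in arrivals:
        if last is None:
            candidate = 0.0  # first request seen by this bucket
        else:
            candidate = max(0.0, excess - rate * (t - last) + 1.0)
        if candidate > burst:
            decisions.append(False)        # rejected: burst capacity exhausted
        else:
            excess, last = candidate, t    # accepted and served with no delay
            decisions.append(True)
    return decisions


# Two spikes of 5 requests, 1.5 s apart, with 1r/s and burst=3:
print(limit_nodelay([0.0] * 5 + [1.5] * 5, rate=1.0, burst=3))
# [True, True, True, True, False, True, False, False, False, False]
```

The first spike gets 1 + 3 requests through at once. The second spike, only 1.5 seconds later, gets just one, because the bucket has drained by only 1.5 requests since then.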

Visualizing request rate limits

Since I believe practice helps a lot in remembering anything, I made a small Docker image with NGINX on board. It has endpoints configured with different rate limiting options: a basic limit, a limit with burst, and a limit with burst and nodelay. Let's see how they work.

It uses a fairly simple NGINX configuration (also included in the Docker image; the link is at the end of the article):

```nginx
limit_req_zone $request_uri zone=by_uri:10m rate=30r/m;

server {
    listen 80;

    location /by-uri/burst0 {
        limit_req zone=by_uri;
        try_files $uri /index.html;
    }

    location /by-uri/burst5 {
        limit_req zone=by_uri burst=5;
        try_files $uri /index.html;
    }

    location /by-uri/burst5_nodelay {
        limit_req zone=by_uri burst=5 nodelay;
        try_files $uri /index.html;
    }
}
```

NGINX test configuration with various rate limiting options

In all tests with this configuration, we send 10 requests in parallel.
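To reproduce these tests from a script, here is a minimal Python client sketch that uses only the standard library. The URL, including host port 8080, is an assumption: use whichever port you mapped the container's port 80 to. The helper names `fetch`, `fire` and `summarize` are hypothetical. Threads start almost, but not exactly, simultaneously, so your numbers may differ slightly from the article's.

```python
import time
import urllib.error
import urllib.request
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Assumption: the sandbox container's port 80 is published on localhost:8080.
URL = "http://localhost:8080/by-uri/burst5"


def fetch(url):
    """Send one GET request and return (status_code, seconds_elapsed)."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=30) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:   # 503 responses are raised as HTTPError
        status = err.code
    return status, time.monotonic() - start


def fire(url, n=10):
    """Send n requests in parallel and return the results sorted by latency."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        results = list(pool.map(fetch, [url] * n))
    return sorted(results, key=lambda r: r[1])


def summarize(results):
    """Count responses per status code."""
    return Counter(status for status, _ in results)
```

With the container running, `print(summarize(fire(URL)))` sends the 10 requests. Printing each `(status, elapsed)` pair from `fire(URL)` shows the timing pattern discussed below.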

Let's find out:

- How many requests are rejected because of the rate limit?

- At what rate are the accepted requests processed?

Sending 10 parallel requests to a rate-limited endpoint

10 simultaneous requests to a rate-limited endpoint

Our configuration allows 30 requests per minute, yet 9 out of 10 requests are rejected. If you have read the previous sections carefully, this will not surprise you: 30r/m means that only one request is let through every 2 seconds. In our example, 10 requests arrive at the same time. One is let through, and the other nine are rejected because NGINX sees them before the timer that allows the next request has fired.

I can tolerate small bursts of requests from clients

Good! Then let's add burst=5, which lets NGINX absorb small bursts of requests to this endpoint while still enforcing the zone's rate limit:

10 simultaneous requests to the endpoint with burst=5

What happened here? As expected, burst allowed 5 additional requests to be accepted. This improved the ratio of accepted requests to the total from 1/10 to 6/10 (the rest were rejected). You can also clearly see how NGINX replenishes the token and processes the accepted requests: the outgoing rate is limited to 30r/m, which is one request every 2 seconds.

The response to the first request arrives after 0.02 seconds. The timer fires after 2 seconds, one of the pending requests is processed, and the client receives a response with a total round-trip time of 2.02 seconds. After another 2 seconds the timer fires again and lets the next request through, which comes back with a total round-trip time of 4.02 seconds. And so on.

Thus, the burst argument turns NGINX's rate limiter from a simple threshold filter into a traffic shaper.

My server can handle the extra load, but I still want rate limiting to prevent overload

In this case, the nodelay argument can help. Let's send the same 10 requests to the endpoint with burst=5 nodelay:

10 simultaneous requests to the endpoint with burst=5 nodelay

As expected with burst=5, we get the same ratio of 200 and 503 status codes. But the outgoing rate is no longer limited to one request every 2 seconds. As long as burst tokens are available, incoming requests are accepted and processed immediately. The timer rate still matters, because it determines how fast burst tokens are replenished, but accepted requests are no longer delayed.
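All three test results can be reproduced with one small simulator. This is a simplified model of a single limit_req bucket, written for this article. It uses the same "excess" bookkeeping as NGINX in spirit, but it is not NGINX's code, and `limit_req` here is just a hypothetical Python function name. Rates are expressed in requests per second.

```python
def limit_req(arrivals, rate, burst=0, nodelay=False):
    """Simplified model of one limit_req bucket.

    arrivals: sorted arrival times in seconds; rate: requests per second.
    Returns one entry per arrival: the delay in seconds before the request
    is handled, or None if it is rejected (503 by default in NGINX).
    """
    excess, last = 0.0, None
    outcomes = []
    for t in arrivals:
        if last is None:
            candidate = 0.0  # first request seen by this bucket
        else:
            # Drain at `rate` since the last accepted request, then add this one.
            candidate = max(0.0, excess - rate * (t - last) + 1.0)
        if candidate > burst:
            outcomes.append(None)
            continue
        excess, last = candidate, t
        outcomes.append(0.0 if nodelay else candidate / rate)
    return outcomes


rate = 30 / 60             # 30r/m expressed as requests per second
ten_at_once = [0.0] * 10   # ten requests arriving together, as in the tests

print(limit_req(ten_at_once, rate))                          # /by-uri/burst0
print(limit_req(ten_at_once, rate, burst=5))                 # /by-uri/burst5
print(limit_req(ten_at_once, rate, burst=5, nodelay=True))   # /by-uri/burst5_nodelay
```

The three calls accept 1, 6 and 6 requests respectively. With burst=5, the accepted requests are delayed by 0, 2, 4, 6, 8 and 10 seconds, which matches the 2-second spacing of the measured responses. With nodelay, all six are handled without delay.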

Note: here the zone is keyed by $request_uri, but all the tests work the same way with $binary_remote_addr, which limits the rate per client IP address. You can experiment with these settings using the prepared Docker image.
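The zone key only decides which requests share a bucket. A minimal Python sketch of that idea follows, with one independent burst=0 limiter per key. `KeyedLimiter` is a hypothetical name, and a plain dict stands in for NGINX's shared memory zone. The addresses are documentation examples.

```python
class KeyedLimiter:
    """Hypothetical sketch: one independent burst=0 limiter per zone key
    (for example a URI or a client address)."""

    def __init__(self, interval):
        self.interval = interval
        self.next_allowed = {}  # key -> earliest time its next request is accepted

    def allow(self, key, now):
        if now >= self.next_allowed.get(key, float("-inf")):
            self.next_allowed[key] = now + self.interval
            return True
        return False


limiter = KeyedLimiter(interval=2.0)              # 30r/m
print(limiter.allow("198.51.100.7", 0.0))         # True
print(limiter.allow("198.51.100.7", 0.5))         # False: same key, too soon
print(limiter.allow("203.0.113.9", 0.5))          # True: another key, its own bucket
```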

Let's sum up

Let's try to visualize how NGINX accepts and processes incoming requests depending on rate, burst and nodelay.

To keep things simple, we plot the number of incoming requests (which are then either rejected, or accepted and processed) on a time scale defined by the zone settings and divided into steps equal to the timer interval. The absolute length of each step does not matter. What matters is how many requests NGINX can process in each step.

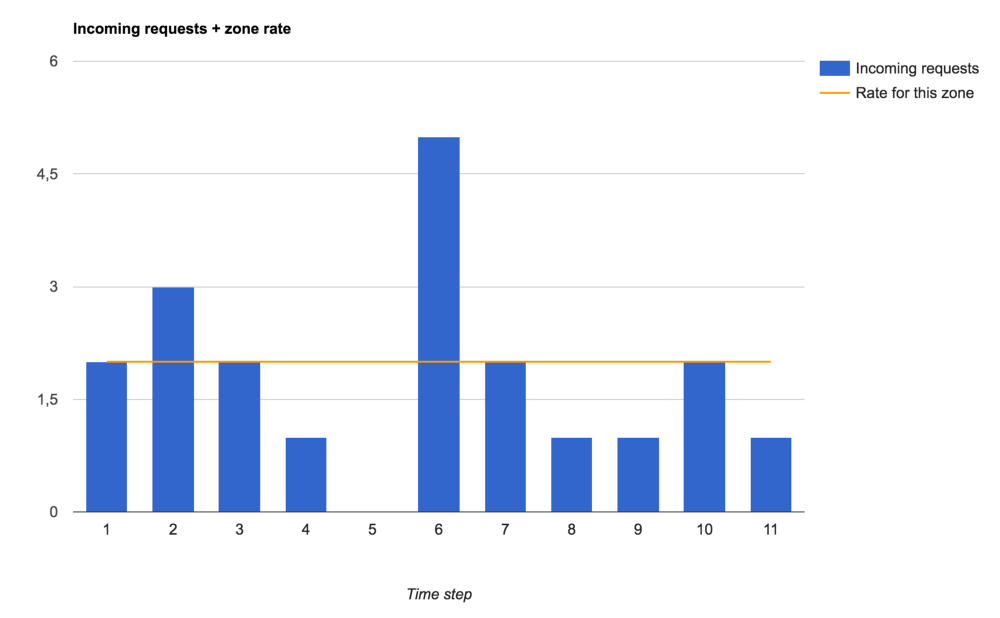

Here is the traffic we will run through the different rate limiting settings:

Incoming requests and the rate limit set for the zone

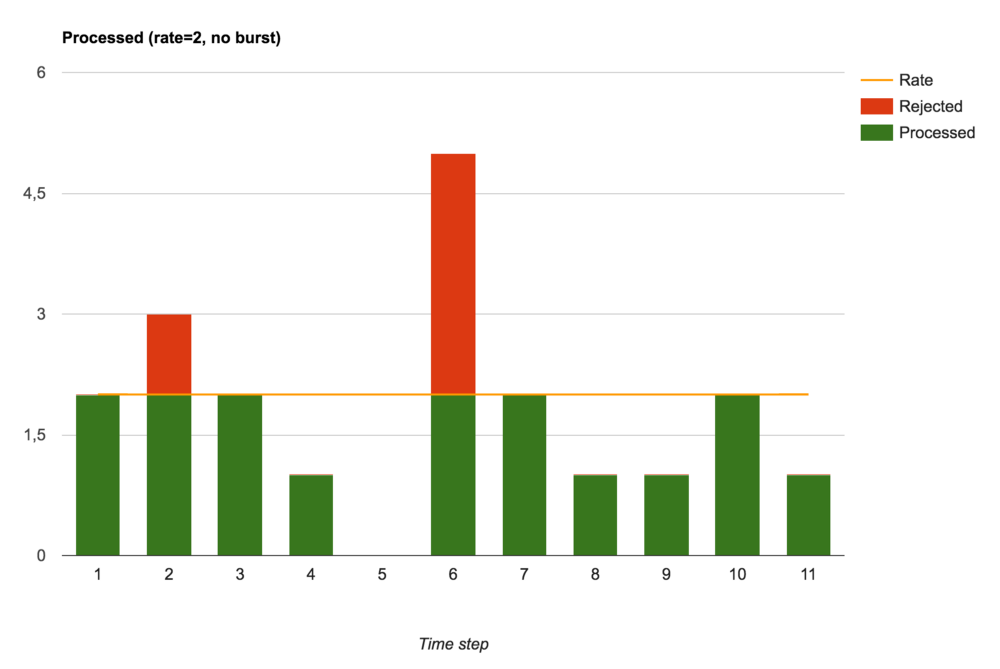

Accepted and rejected requests (no burst)

Without burst (that is, with burst=0), NGINX acts as a strict rate limiter: each request is either processed immediately or rejected immediately.

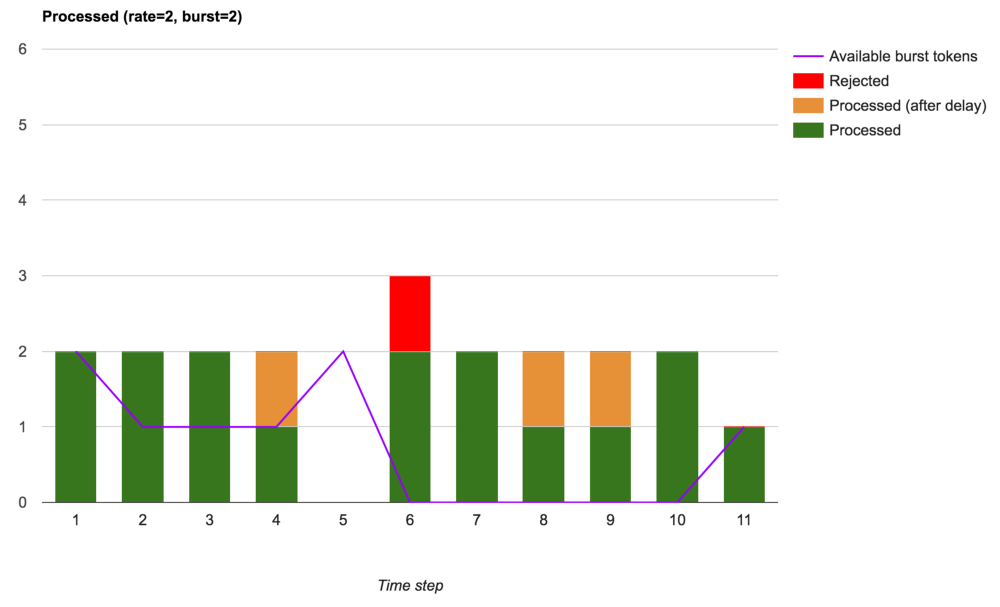

If we want to allow small bursts of traffic, for example to make fuller use of capacity within the set limit, we can add the burst argument. It delays the processing of requests accepted with the available burst tokens:

Accepted, delayed and rejected requests (using burst)

The total number of rejected requests has decreased. Only requests that exceeded the set rate and arrived when no burst tokens were available were rejected. With these settings, NGINX performs full-fledged traffic shaping.

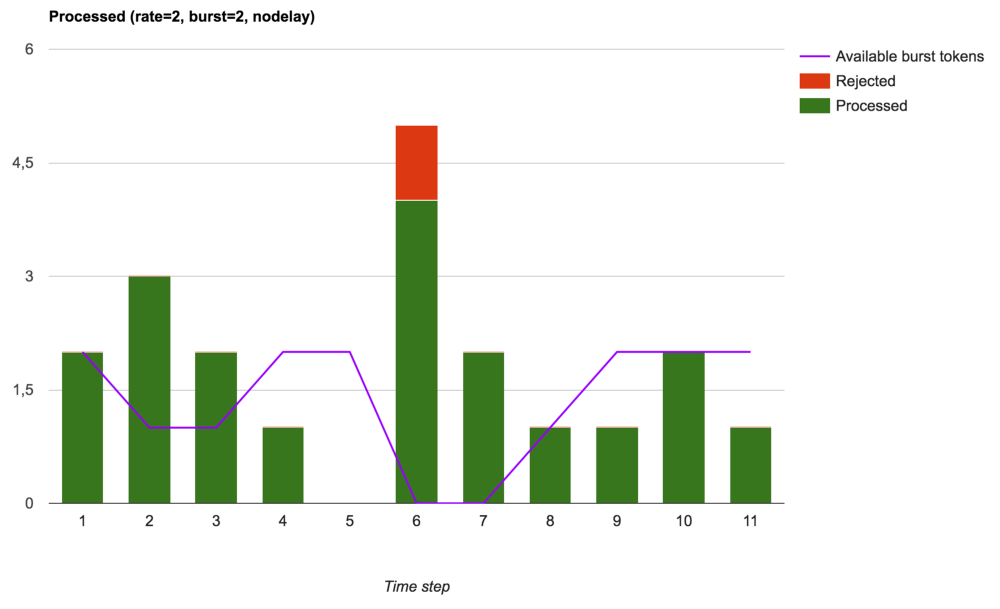

Finally, NGINX can control traffic by capping the size of request bursts (burst) while still letting part of each burst go straight through to its handlers (upstream or local). This makes the outgoing rate less even but lowers latency, provided you can actually handle the additional requests:

Accepted, processed and rejected requests (burst with nodelay)

Experiment with rate limiting

Now, to consolidate your understanding of these concepts, you can study the code, clone the repository and experiment with the prepared Docker image:

https://github.com/sportebois/nginx-rate-limit-sandbox

References:

- Original: NGINX rate-limiting in a nutshell


Source: https://habr.com/ru/post/329876/
