DevOps on Amazon AWS

Using cloud services effectively is one of the main trends transforming many IT companies today. Automating every step from the Git repository to deployment in Development and/or Production, along with the subsequent monitoring and incident response (which can and should also be automated), significantly changes, if not outright cancels, many of the generally accepted ITIL practices. This series of articles on the AWS Code services (CodeCommit, CodeBuild, CodeDeploy, CodePipeline, CodeStar) looks at DevOps processes from the Amazon AWS point of view: how they can be implemented on its services within the Infrastructure as Code (IaC) concept.

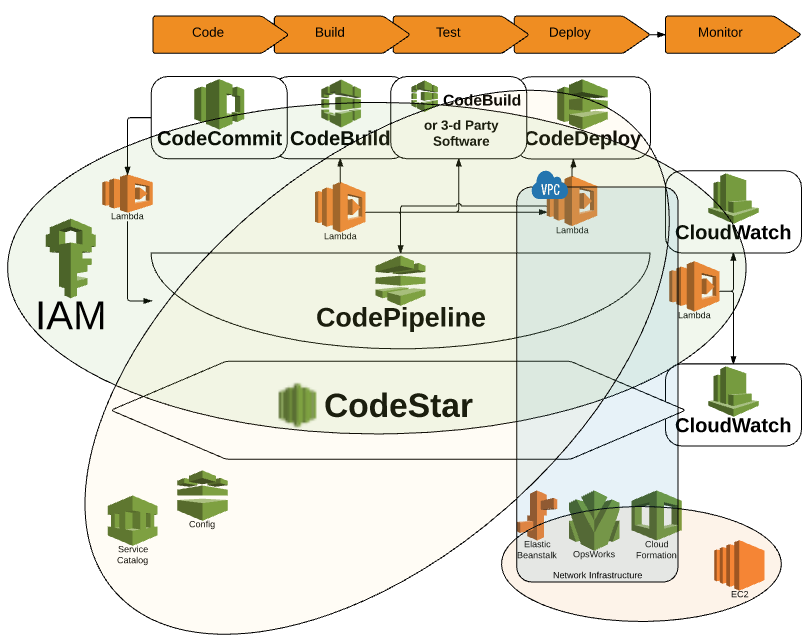

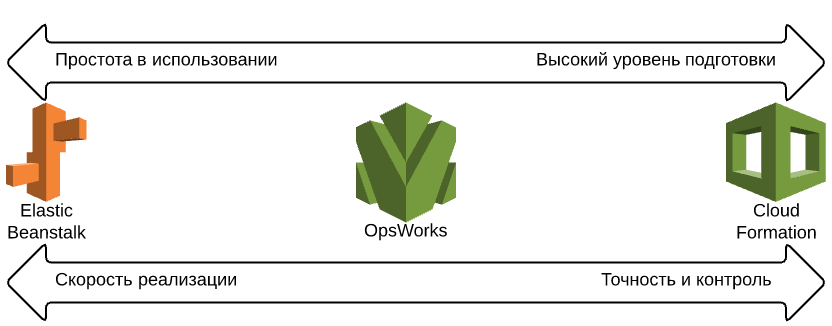

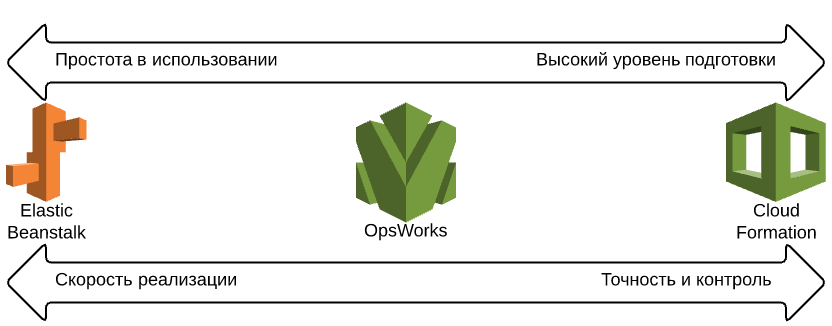

This first article is an overview.

The purpose of this article is neither a DevOps primer nor a banal duplication of material that is already available from the original source. The goal is to present the implementation of DevOps on Amazon AWS services in general form, so that everyone is free to choose the option that suits them. The target readers at least know that Amazon AWS exists and plan to use its capabilities in their work, moving their processes there partially or completely. The number of Amazon AWS services is growing fast and has already passed the round figure of 100, so even those with solid AWS experience may find it useful to learn what is new, or what they may simply have missed and could put to good use in their work.

Not (only) cubes

A few general words that matter, especially to those with little Amazon AWS experience. The cubes on the logo convey the basic idea well: Amazon AWS is a construction set, a collection of services from which everyone can assemble what they need. That same "cube-centricity" can put some people off when the cube (service) they need does not meet their requirements. In that case, remember that the same task can be solved with different services and that many services largely overlap. One service failing to meet your needs is therefore no reason to give up on Amazon AWS; it is a reason to look for a suitable alternative. After all, Amazon AWS services grew primarily out of Amazon's own needs. Amazon has thousands of small teams (they call them two-pizza teams), each free to choose its own way of working (language, OS, structure, protocols, etc.), so the services available on Amazon AWS have to support the same zoo of approaches that already exists inside the company.


This matters especially for the AWS Code services, which are not meant to dictate what you use. On the one hand, they let you integrate with your familiar, existing, well-tuned tools; on the other, they offer their own implementation, which usually makes the most effective use of Amazon AWS functionality.

Amazon AWS DevOps Construction Set

Even well understanding each cube separately, it is not always clear which house can be built from them. Therefore, we will try to assemble these services in one picture in order to get a general idea of what can be assembled from such a constructor. If you select Code- services and correlate them with the usual processes, you get something like this:

Let's briefly go through each of the Code services (a separate article will be devoted to each of them in the following paragraphs):

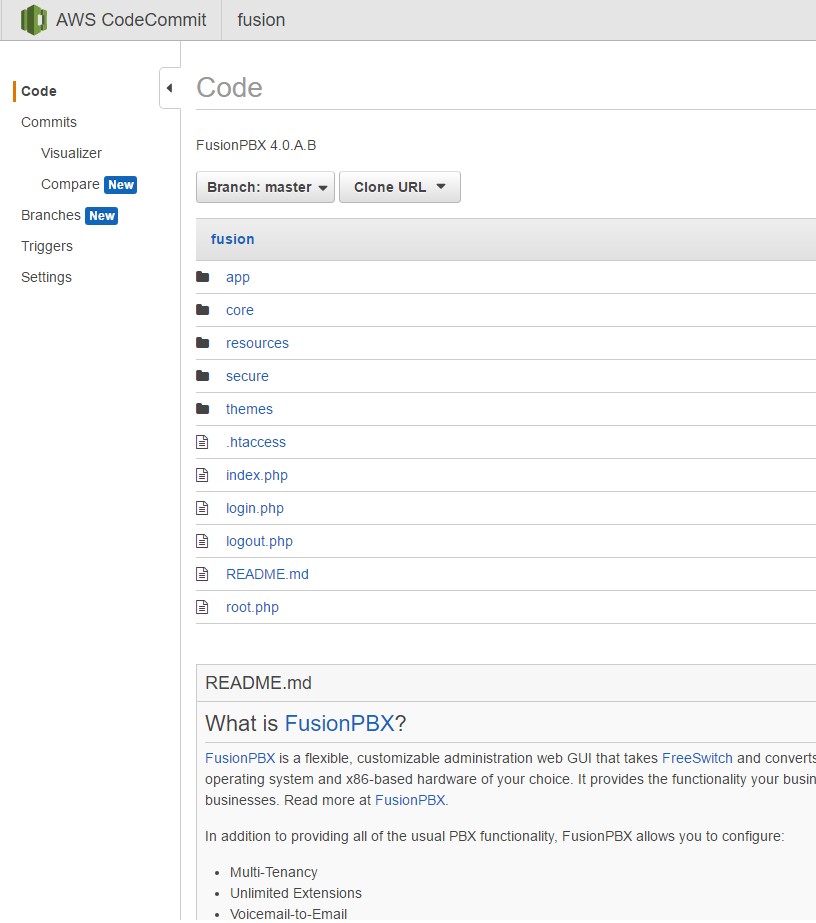

AWS CodeCommit


The most obvious and easily understood service: Amazon's implementation of Git, which mirrors it completely. Working with it (the commands) is no different from working with Git; the point of the service is direct integration into the structure of AWS services, including access control through IAM. The code itself is stored on S3.
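As a rough illustration of what "Git plus IAM" means in practice, the sketch below builds the HTTPS clone URL of a repository and an IAM policy document that allows pulling from and pushing to it. The region, account ID and repository name are hypothetical placeholders, and the helper functions are not part of any AWS SDK; only the URL pattern, the ARN pattern and the `codecommit:GitPull` / `codecommit:GitPush` actions come from AWS itself.

```python
import json


def codecommit_clone_url(region: str, repo: str) -> str:
    """Return the HTTPS clone URL for a CodeCommit repository."""
    return f"https://git-codecommit.{region}.amazonaws.com/v1/repos/{repo}"


def developer_policy(region: str, account_id: str, repo: str) -> dict:
    """Build an IAM policy document allowing pull and push on one repository."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["codecommit:GitPull", "codecommit:GitPush"],
                "Resource": f"arn:aws:codecommit:{region}:{account_id}:{repo}",
            }
        ],
    }


# Hypothetical values, for illustration only.
url = codecommit_clone_url("eu-west-1", "demo-app")
policy = developer_policy("eu-west-1", "123456789012", "demo-app")

print(url)
print(json.dumps(policy, indent=2))
```

The URL is used with an ordinary `git clone`, while the policy document is attached to an IAM user, group or role, which is the part that plain Git hosting does not give you.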

AWS CodeBuild


A build server for projects that have to be built before deployment, for example Java projects. By default it launches an Ubuntu-based container, but you can specify your own Docker image.
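The choice between the default container and your own image can be sketched as the `environment` section of a CodeBuild project definition. This is a minimal sketch: the helper function is hypothetical, the curated image tag `aws/codebuild/standard:7.0` is only one of the Ubuntu-based images AWS publishes and may not be the current one, and the ECR image URI is a made-up example.

```python
from typing import Optional


def build_environment(custom_image: Optional[str] = None) -> dict:
    """Return the environment section of a CodeBuild project definition."""
    base = {"type": "LINUX_CONTAINER", "computeType": "BUILD_GENERAL1_SMALL"}
    if custom_image is None:
        # Curated Ubuntu-based image managed by CodeBuild (tag is an assumption).
        return {
            **base,
            "image": "aws/codebuild/standard:7.0",
            "imagePullCredentialsType": "CODEBUILD",
        }
    # Your own Docker image, pulled with the project's service role.
    return {
        **base,
        "image": custom_image,
        "imagePullCredentialsType": "SERVICE_ROLE",
    }


default_env = build_environment()
custom_env = build_environment(
    "123456789012.dkr.ecr.eu-west-1.amazonaws.com/demo-build:latest"  # hypothetical
)

print(default_env["image"])
print(custom_env["image"])
```

A dictionary of this shape is what the `environment` argument of a project definition expects; everything else about the project (source, artifacts, service role) is left out here.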

Supports Jenkins integration via a plugin.

Besides builds, tests can be run in the same way. Although that is not its main purpose, CodeBuild can be selected as the test provider, which is why AWS CodeBuild also appears at the "Test" stage of the diagram.

AWS CodeDeploy

A service for deploying code. It works through a pre-installed agent that can be configured flexibly for any environment. Its distinguishing feature is that the agent runs not only on Amazon AWS virtual machines but also on "external" ones, which lets you centrally deploy the most diverse software, including on-premises.
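What the agent does on each machine is described by an `appspec.yml` file in the root of the deployed bundle. The sketch below renders a minimal one for a Linux host; the destination directory and the restart script are hypothetical, and the rendering helper is only an illustration, since in a real project the file is normally written by hand.

```python
def render_appspec(destination: str, restart_script: str) -> str:
    """Render a minimal appspec.yml for an EC2 or on-premises Linux host."""
    lines = [
        "version: 0.0",
        "os: linux",
        "files:",
        "  - source: /",  # copy the whole bundle ...
        f"    destination: {destination}",  # ... into this directory
        "hooks:",
        "  AfterInstall:",  # lifecycle event run after the files are copied
        f"    - location: {restart_script}",
        "      timeout: 300",
        "      runas: root",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical paths, for illustration only.
appspec = render_appspec("/var/www/demo-app", "scripts/restart.sh")
print(appspec)
```

The same file works whether the agent runs on an EC2 instance or on a registered "external" server, which is what makes the centralized deployment described above possible.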

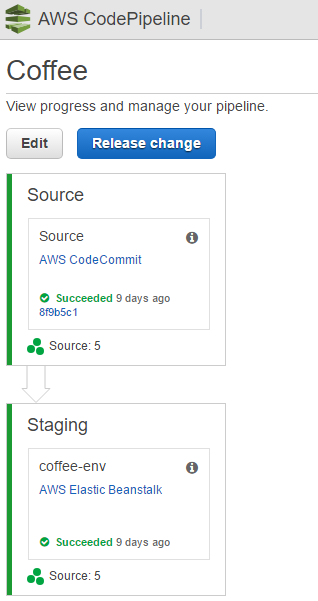

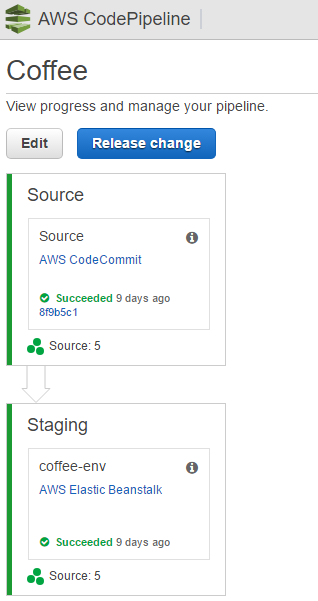

AWS CodePipeline


As the main diagram shows, it interacts with the previous three services, launching them in the right sequence and, in effect, automating the DevOps processes.

It lets you build branching workflows, run third-party services (for example, for testing), execute branches in parallel, and request confirmation (Approval Actions) before the next stage starts. Overall, it is the central tool for organizing DevOps on Amazon AWS.
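To make the stage sequence, the parallel branches and the approval step more concrete, here is a sketch of a pipeline definition in the structure CodePipeline uses. All names (pipeline, role ARN, bucket, repository, build projects, deployment group) are hypothetical, and the `action` helper is just a convenience for this example, not an AWS API.

```python
from typing import Optional


def action(name: str, category: str, provider: str,
           configuration: Optional[dict] = None,
           inputs: tuple = (), outputs: tuple = (),
           run_order: int = 1) -> dict:
    """Build one action declaration for a pipeline stage."""
    return {
        "name": name,
        "actionTypeId": {"category": category, "owner": "AWS",
                         "provider": provider, "version": "1"},
        "configuration": configuration or {},
        "inputArtifacts": [{"name": n} for n in inputs],
        "outputArtifacts": [{"name": n} for n in outputs],
        "runOrder": run_order,
    }


# Hypothetical pipeline: every identifier below is a placeholder.
pipeline = {
    "name": "demo-app-pipeline",
    "roleArn": "arn:aws:iam::123456789012:role/demo-pipeline-role",
    "artifactStore": {"type": "S3", "location": "demo-pipeline-artifacts"},
    "stages": [
        {"name": "Source", "actions": [
            action("Checkout", "Source", "CodeCommit",
                   {"RepositoryName": "demo-app", "BranchName": "master"},
                   outputs=("SourceOutput",)),
        ]},
        {"name": "Build", "actions": [
            action("Compile", "Build", "CodeBuild",
                   {"ProjectName": "demo-app-build"},
                   inputs=("SourceOutput",), outputs=("BuildOutput",)),
        ]},
        {"name": "Test", "actions": [
            # Same runOrder: these two actions run in parallel.
            action("UnitTests", "Test", "CodeBuild",
                   {"ProjectName": "demo-app-unit"}, inputs=("BuildOutput",)),
            action("LintChecks", "Test", "CodeBuild",
                   {"ProjectName": "demo-app-lint"}, inputs=("SourceOutput",)),
        ]},
        {"name": "Approve", "actions": [
            # Manual approval gate before anything reaches production.
            action("ManualApproval", "Approval", "Manual"),
        ]},
        {"name": "Deploy", "actions": [
            action("Release", "Deploy", "CodeDeploy",
                   {"ApplicationName": "demo-app",
                    "DeploymentGroupName": "production"},
                   inputs=("BuildOutput",)),
        ]},
    ],
}

for stage in pipeline["stages"]:
    print(stage["name"], [a["name"] for a in stage["actions"]])
```

Stages run strictly one after another, while actions inside a stage that share a `runOrder` run side by side; the approval stage simply blocks the pipeline until someone confirms.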

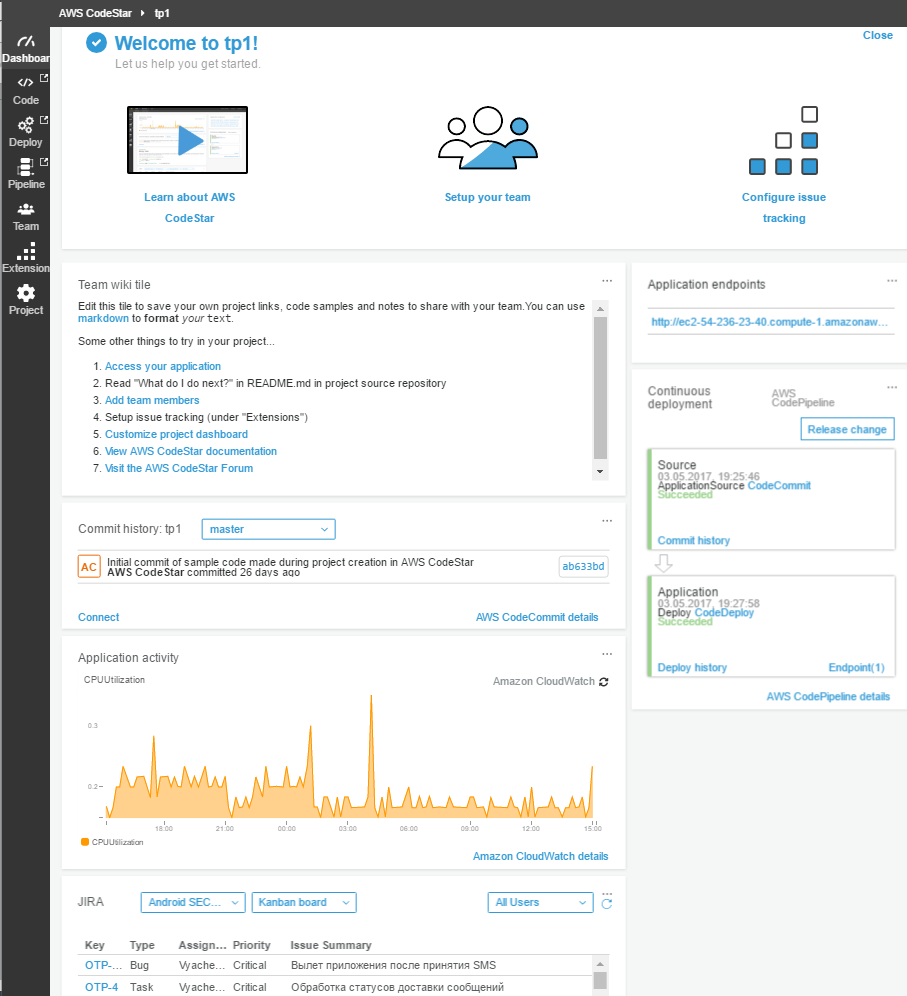

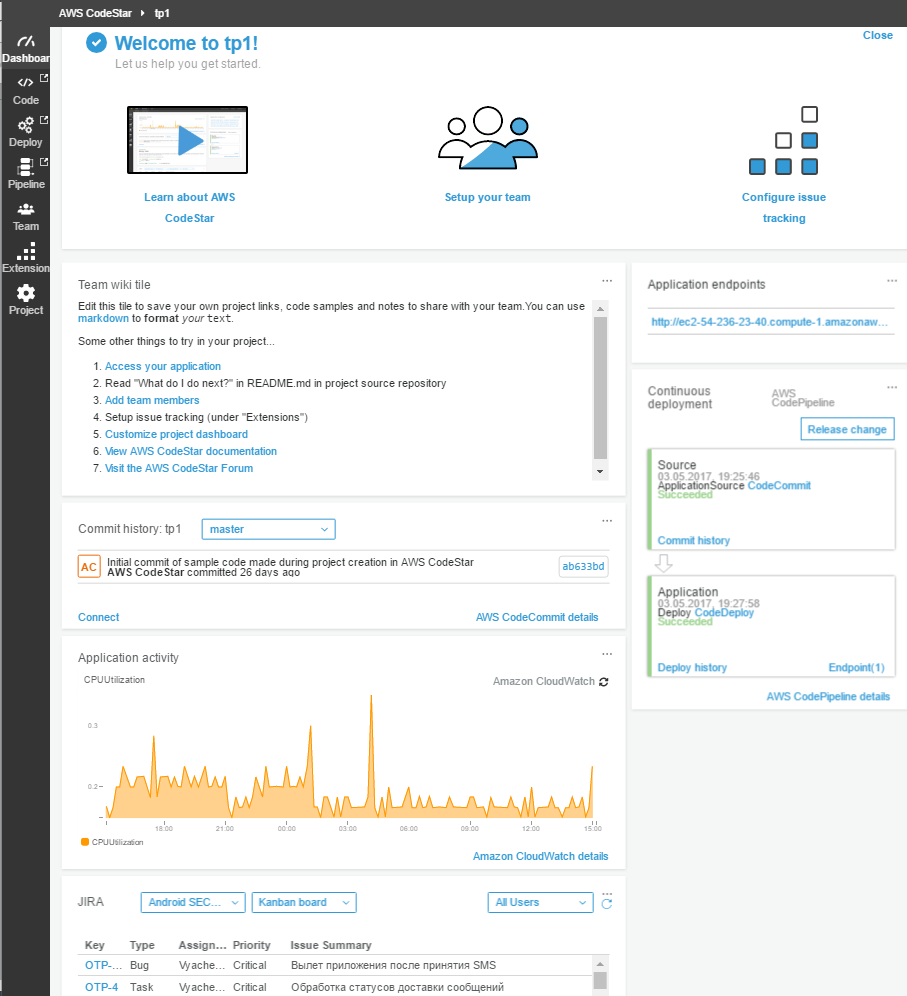

AWS CodeStar


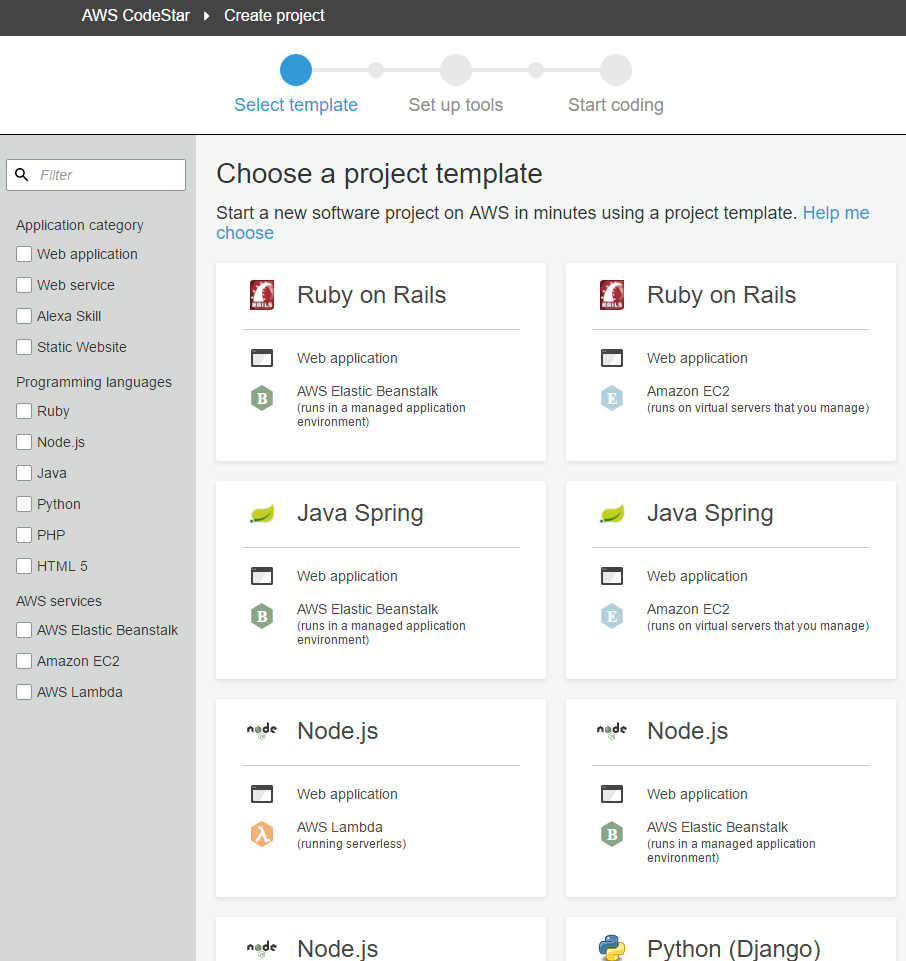

A service that essentially duplicates CodePipeline but is geared toward easy launch and configuration. It achieves this through a wide range of ready-made AWS CodeStar templates (for application and language combinations) and a genuinely convenient dashboard with plug-ins, including some integration with the monitoring service (CloudWatch) and a plug-in for Jira integration.

Amazon AWS IaC Services

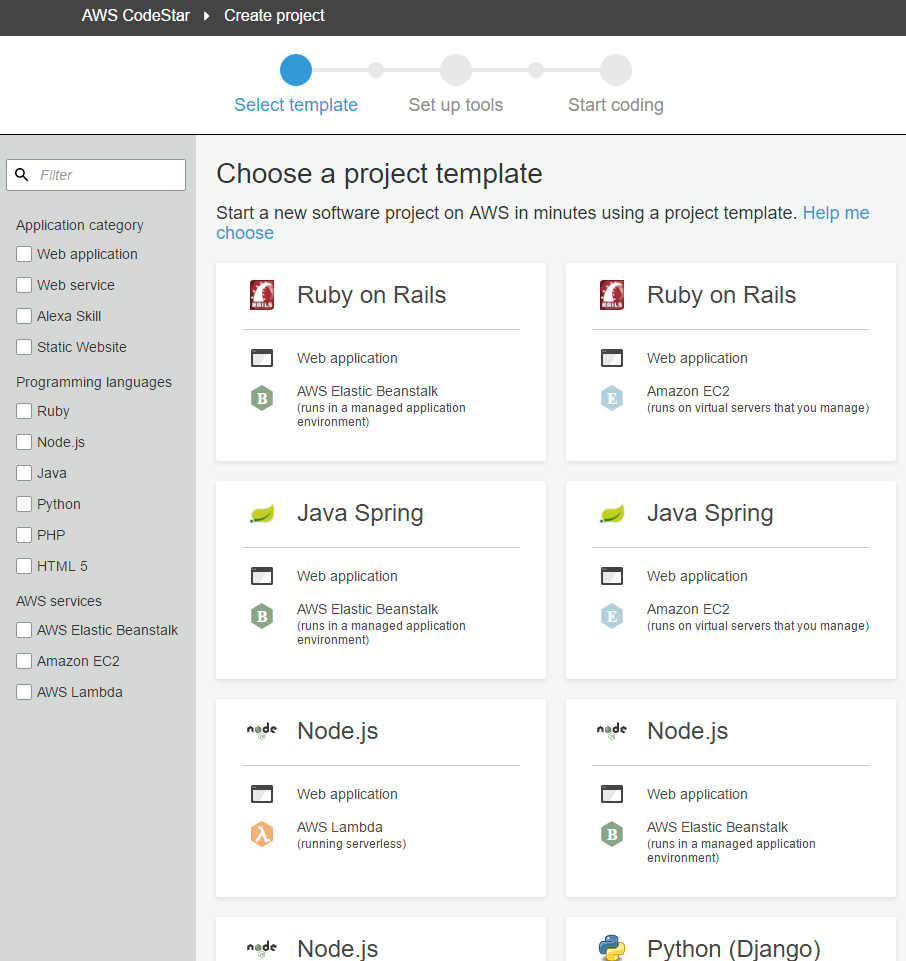

The Amazon AWS services that implement the "Infrastructure as Code" concept are:

- Elastic Beanstalk

- OpsWorks

- CloudFormation

People often ask which one to choose, and why there are three services for the same purpose (deploying infrastructure and applications), all located at the Deploy stage of the main diagram. In short, their use can be summarized as follows:

That is, to get something with standard functionality (for example, a simple site) up quickly, which does not mean badly, Elastic Beanstalk is convenient. For a complex project with many nested components and serious network requirements, you cannot do without CloudFormation. OpsWorks, which is based on Chef, sits somewhere in between.

In reality, however, and precisely on complex projects, a combination of all three is common. CloudFormation brings up the basic infrastructure (VPC, subnets, repositories, the necessary IAM roles for access, etc.) and then launches an OpsWorks stack, which can flexibly configure the internals of the running virtual machines. For the convenience of development, CloudFormation can also bring up a stack for Elastic Beanstalk components. Developers can then use .ebextensions to change some parameters of the running application themselves (the number and type of virtual machines, use of a Load Balancer, etc.) simply by editing a configuration file in the code folder; the changes, including those to the application infrastructure, are applied automatically after a commit.
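The "basic infrastructure" part can be sketched as a tiny CloudFormation template generated from code. This is only an illustration: the logical resource names and CIDR ranges are made up, and a real template for a complex project would also contain routing, IAM roles, the OpsWorks and Elastic Beanstalk resources, and so on.

```python
import json


def base_network_template(vpc_cidr: str, subnet_cidr: str) -> dict:
    """Build a minimal CloudFormation template with one VPC and one subnet."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Base network for a demo project (illustrative sketch)",
        "Resources": {
            "Vpc": {
                "Type": "AWS::EC2::VPC",
                "Properties": {"CidrBlock": vpc_cidr},
            },
            "AppSubnet": {
                "Type": "AWS::EC2::Subnet",
                "Properties": {
                    # Ref ties the subnet to the VPC declared above.
                    "VpcId": {"Ref": "Vpc"},
                    "CidrBlock": subnet_cidr,
                },
            },
        },
        "Outputs": {"VpcId": {"Value": {"Ref": "Vpc"}}},
    }


# Hypothetical address ranges.
template = base_network_template("10.0.0.0/16", "10.0.1.0/24")
print(json.dumps(template, indent=2))
```

Because the template is plain data, it can live in the same repository as the application code and go through the same commit, review and pipeline steps, which is the essence of the approach.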

AWS Lambda

The Lambda service also deserves a mention. It implements the serverless architecture concept; on the one hand it is similar to Elastic Beanstalk and can be brought into the DevOps process through the AWS Code services. On the other hand, AWS Lambda is an excellent (read: mandatory) tool for automating everything on Amazon AWS. Any processes that need to interact with each other can be tied together with Lambda. It can process CloudWatch monitoring results and react to them, for example by restarting a service (virtual machine, cluster) and sending a notification about the problem to the administrator. It is also used alongside DevOps processes, for example to run your own build and test methods and then hand over to Deploy in the usual way. More generally, AWS Lambda lets you implement even very complex logic that the current set of Amazon AWS services does not yet provide.
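The "react to CloudWatch" scenario could look roughly like the handler below. It assumes the alarm is delivered to Lambda through an SNS topic and that the alarm is defined on a metric with an `InstanceId` dimension; the sample event is a trimmed, hand-written approximation of such a notification, not a captured one. The reboot call is injected so the sketch runs locally; in a real function it would be something like `boto3.client("ec2").reboot_instances(InstanceIds=ids)`.

```python
import json
from typing import Callable, List


def instance_ids_from_alarm(event: dict) -> List[str]:
    """Extract instance IDs from CloudWatch alarm notifications sent via SNS."""
    ids = []
    for record in event.get("Records", []):
        message = json.loads(record["Sns"]["Message"])
        if message.get("NewStateValue") != "ALARM":
            continue  # ignore OK and INSUFFICIENT_DATA transitions
        for dim in message.get("Trigger", {}).get("Dimensions", []):
            if dim.get("name") == "InstanceId":
                ids.append(dim["value"])
    return ids


def make_handler(reboot: Callable[[List[str]], None]):
    """Create a Lambda-style handler that reboots the affected instances."""
    def handler(event, context):
        ids = instance_ids_from_alarm(event)
        if ids:
            reboot(ids)
        return {"rebooted": ids}
    return handler


# Local dry run: the list stands in for the real EC2 reboot call.
rebooted: List[str] = []
handler = make_handler(rebooted.extend)

sample_event = {  # hand-written approximation of an alarm notification
    "Records": [{"Sns": {"Message": json.dumps({
        "AlarmName": "demo-status-check-failed",
        "NewStateValue": "ALARM",
        "Trigger": {"Dimensions": [
            {"name": "InstanceId", "value": "i-0123456789abcdef0"},
        ]},
    })}}]
}

result = handler(sample_event, None)
print(result)
```

Notifying the administrator needs no code in this setup, because an e-mail subscription on the same SNS topic receives the same alarm.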

Besides these, other services can take part in DevOps processes. So if you try to draw a general scheme of the services involved, the picture can get rather confusing (the example below is purely illustrative).

Not everything can be shown visually, because global entities such as the AWS IAM access control service permeate almost every component. The other services run on the computing power of the EC2 service. The S3 storage service is used to pass data between many other services. And high-level services such as AWS Service Catalog can provide interaction across different Amazon AWS accounts.

As the individual services are examined in more detail in later articles, this intricate, incomprehensible scheme will resolve into a clear set of tools from which everyone can pick the right one.

To sum up the general scheme of Amazon AWS services that can take part in DevOps processes: the most popular combination is something like CodePipeline / CodeCommit plus Elastic Beanstalk / OpsWorks for Deploy. For a quick look, CodeStar works well. True, using AWS CodeStar costs money (you pay for the AWS resources it provisions), but cost was deliberately left out of consideration here in order to first give a general picture of the choices, since each component can be picked as needed, including partner tools and CI/CD projects such as Jenkins through their plugins.

References:

- Accelerating DevOps Pipelines with AWS: Video, Presentation

Source: https://habr.com/ru/post/329840/
