Container Networking Interface (CNI) - network interface and standard for Linux containers

Last week, the CNCF (Cloud Native Computing Foundation) announced that it had accepted its 10th open source project, CNI (Container Networking Interface). The project's goal is to provide everything needed for standardized management of network interfaces in Linux containers and for flexible extension of their network capabilities. The CNCF explained the need for such a project by the growing use of containerized applications in production, arguing that "just as Kubernetes allows developers to massively launch containers on thousands of machines, these containers on a large scale require network management [and implementing the framework]."

How did CNI come about and what does it offer?

CNI Background

The Container Network Interface (CNI) project originated at CoreOS, the company known for the rkt container engine (recently also donated to the CNCF, along with containerd), the etcd NoSQL store, active work on Kubernetes, and other open source projects related to containers and DevOps. The first detailed demonstrations of CNI took place at the end of 2015 (see the video from October and the presentation from November). From the very beginning, the project was positioned as "the proposed standard for configuring network interfaces for Linux containers", and it was first put to use in rkt. The first third-party users of CNI were Project Calico and Weaveworks, and Kubernetes soon joined them.

The adoption of CNI in Kubernetes deserves special attention. Since the need to standardize network configuration for Linux containers was obvious and increasingly pressing at that time (around 2015), CNI was not the only project of its kind. A competing product, the Container Network Model (CNM) from Docker, was also introduced in 2015. It solved the same problems and got a reference implementation in the form of libnetwork, a library that grew out of the networking code in libcontainer and Docker Engine. The choice made by Kubernetes became an important step in the rivalry between CNI and CNM. In the article "Why Kubernetes doesn't use libnetwork" (January 2016), the developers describe in detail the technical and other reasons behind their decision. In essence (apart from a number of technical issues), it comes down to the following:

CNI is closer to Kubernetes from a philosophical point of view. It is much simpler than CNM, does not require daemons, and its cross-platform capability is at least plausible (the CoreOS rkt container runtime supports it) [... and Kubernetes strives to support different container implementations - translator's note]. Being cross-platform means the ability to use network configurations that work the same way across runtimes (e.g., Docker, Rocket, Hyper). This approach follows the UNIX philosophy of doing one thing well.

Apparently, it was this (not only the choice made by Kubernetes, but also the outside view of technical specialists on the existing implementations) that determined the future of CNI and its acceptance into the CNCF. For comparison, the other competing products from CoreOS and Docker, the rkt and containerd container runtimes, were accepted into the foundation together and at the same time, but this did not happen with CNI and CNM.

The Calico project's view of container networking as of March 2016

A further comparison of CNI and CNM, as of September 2016, can be found in an English-language article on The New Stack.

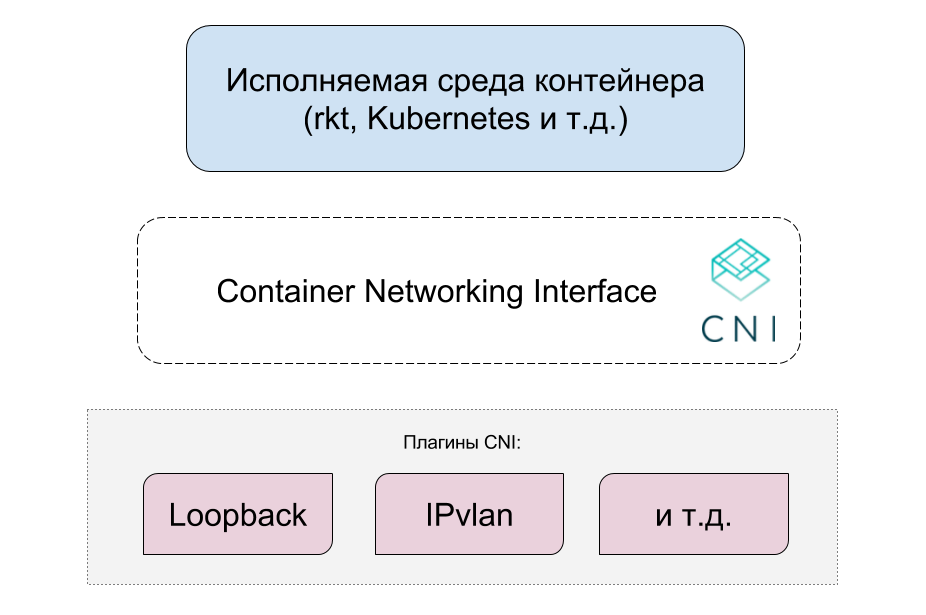

CNI architecture

The authors of CNI chose to write the most minimal specification possible, intended to be a thin layer between the container runtime and the plug-ins. All the actual network functionality is implemented in the plug-ins, and the interaction with them is defined by a schema in JSON format.
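To make the "thin layer" idea concrete, here is a minimal sketch of the plug-in side of that interaction, written in Python for brevity (the official plug-ins are written in Go). It assumes that the operation arrives in the `CNI_COMMAND` environment variable and the network configuration arrives as JSON on STDIN. The function names `handle` and `run_plugin` are hypothetical, and the returned result is deliberately simplified; it is not the full result format from the specification.

```python
import json


def handle(command, config, env):
    """Dispatch one CNI-style call and return a JSON-serializable result."""
    if command == "ADD":
        # A real plug-in would create the interface inside env["CNI_NETNS"] here.
        # The "eth0" fallback is an assumption made only for this sketch.
        return {
            "cniVersion": config.get("cniVersion"),
            "interfaces": [{"name": env.get("CNI_IFNAME", "eth0")}],
        }
    if command == "DEL":
        # A real plug-in would remove the interface; this sketch reports nothing.
        return {}
    raise ValueError("unsupported CNI_COMMAND: %r" % (command,))


def run_plugin(stdin, stdout, env):
    """Read the network configuration from stdin and write the result to stdout."""
    config = json.load(stdin)               # network configuration as JSON
    command = env.get("CNI_COMMAND", "")    # requested operation
    json.dump(handle(command, config, env), stdout)
```

Calling `run_plugin(sys.stdin, sys.stdout, os.environ)` at the bottom of a script would turn this sketch into an executable that a runtime could launch like any other plug-in binary.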

CNI consists of three parts:

1. The specification (see GitHub), which defines an API between the container runtime and network plug-ins: the operations that must be supported (adding a container to a network and removing it from one), the list of parameters, the format of network configurations and of lists of them (stored as JSON), and well-known structures (IP addresses, routes, DNS servers).

CNI network configuration example:

    {
      "cniVersion": "0.3.1",
      "name": "dbnet",
      "type": "bridge",
      "bridge": "cni0",
      "ipam": {
        "type": "host-local",
        "subnet": "10.1.0.0/16",
        "gateway": "10.1.0.1"
      },
      "dns": {
        "nameservers": [ "10.1.0.1" ]
      }
    }

2. Official plug-ins that provide network configurations for different situations and serve as an example of compliance with the CNI specification. They are available in containernetworking/plugins and are divided into 4 categories: main (loopback, bridge, ptp, vlan, ipvlan, macvlan), ipam (dhcp, host-local), meta (flannel, tuning), sample. All are written in Go. (For third-party plug-ins, see the next section of the article.)

3. A library (libcni) that implements the CNI specification (also in Go) for convenient use in container runtimes.
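As a rough illustration of how a runtime might consume such a network configuration, the sketch below parses the example JSON shown earlier and checks for a few top-level keys. It is written in Python rather than Go and does not use libcni. The set of keys treated as required (`cniVersion`, `name`, `type`) is an assumption for this sketch, and the helper name `load_network_config` is hypothetical.

```python
import json

# Keys this sketch treats as mandatory (an assumption, not a full schema check).
REQUIRED_KEYS = ("cniVersion", "name", "type")


def load_network_config(text):
    """Parse a network configuration and verify the assumed required keys."""
    config = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise ValueError("missing required keys: " + ", ".join(missing))
    return config


EXAMPLE = """
{
  "cniVersion": "0.3.1",
  "name": "dbnet",
  "type": "bridge",
  "bridge": "cni0",
  "ipam": {"type": "host-local", "subnet": "10.1.0.0/16", "gateway": "10.1.0.1"},
  "dns": {"nameservers": ["10.1.0.1"]}
}
"""

config = load_network_config(EXAMPLE)
# "type" names the main plug-in to run; "ipam.type" names the address manager.
print(config["type"], config["ipam"]["type"])  # prints: bridge host-local
```

The point of the sketch is that the `type` fields are what tie a configuration to plug-in executables: here `bridge` for the interface and `host-local` for address management.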

All existing code and the specification are published under the free Apache License v2.0.

Trying CNI quickly

You can try CNI without any containers. To do this, download the files from the project repository (and, optionally, the plug-ins you need), compile them with ./build.sh (or download a binary build), and then run a plug-in executable (for example, ./bin/bridge), passing it the arguments it needs through the CNI_* environment variables and the network configuration as JSON data via STDIN (as required by the CNI specification). Details of such an experiment can be found in this article, whose author does something like the following (on an Ubuntu host):

    $ cat > mybridge.conf <<"EOF"
    {
        "cniVersion": "0.2.0",
        "name": "mybridge",
        "type": "bridge",
        "bridge": "cni_bridge0",
        "isGateway": true,
        "ipMasq": true,
        "ipam": {
            "type": "host-local",
            "subnet": "10.15.20.0/24",
            "routes": [
                { "dst": "0.0.0.0/0" },
                { "dst": "1.1.1.1/32", "gw":"10.15.20.1"}
            ]
        }
    }
    EOF

    $ sudo ip netns add 1234567890

    $ sudo CNI_COMMAND=ADD CNI_CONTAINERID=1234567890 \
        CNI_NETNS=/var/run/netns/1234567890 CNI_IFNAME=eth12 \
        CNI_PATH=`pwd` ./bridge <mybridge.conf

    $ ifconfig
    cni_bridge0 Link encap:Ethernet  HWaddr 0a:58:0a:0f:14:01
              inet addr:10.15.20.1  Bcast:0.0.0.0  Mask:255.255.255.0
              inet6 addr: fe80::3cd5:6cff:fef9:9066/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:8 errors:0 dropped:0 overruns:0 frame:0
              TX packets:8 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:536 (536.0 B)  TX bytes:648 (648.0 B)
    …

Additional examples for quickly launching CNI and network plug-ins are presented in the project's README (section "How do I use CNI?").
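The same invocation can be sketched from the runtime's side in Python: build the CNI_* environment, then feed the configuration to the plug-in executable on STDIN. This is only an illustration of the calling convention described above, not how libcni is implemented; the helper names `build_cni_env` and `invoke_plugin` are hypothetical, and the sketch assumes the plug-in prints a JSON result on STDOUT.

```python
import json
import os
import subprocess


def build_cni_env(command, container_id, netns, ifname, plugin_dir):
    """Return a copy of the current environment extended with CNI_* variables."""
    env = dict(os.environ)
    env.update({
        "CNI_COMMAND": command,           # operation, e.g. ADD
        "CNI_CONTAINERID": container_id,  # identifier chosen by the caller
        "CNI_NETNS": netns,               # path to the network namespace
        "CNI_IFNAME": ifname,             # interface name inside the namespace
        "CNI_PATH": plugin_dir,           # where plug-in executables are found
    })
    return env


def invoke_plugin(plugin_path, config, env):
    """Run the plug-in with the config on STDIN and return its parsed output."""
    proc = subprocess.run(
        [plugin_path],
        input=json.dumps(config).encode("utf-8"),
        env=env,
        stdout=subprocess.PIPE,
        check=True,  # raise CalledProcessError if the plug-in exits non-zero
    )
    # Assumption: any output is JSON; empty output is reported as None.
    return json.loads(proc.stdout) if proc.stdout.strip() else None
```

With the files from the shell session in place, a call such as `invoke_plugin("./bridge", config, build_cni_env("ADD", "1234567890", "/var/run/netns/1234567890", "eth12", os.getcwd()))` would mirror the `sudo` command line, and would likewise need root privileges to succeed.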

Third Party Plugins for CNI

One of CNI's main strengths is, of course, its third-party plug-ins, which support a variety of modern networking solutions for Linux containers. Among them:

- Project Calico (L3 virtual network integrated with orchestration tools and cloud platforms);

- Weave (a simple network for multi-host Docker installations);

- Contiv Netplugin (policies / ACL / QoS and other features for containers in multi-host cluster installations);

- Flannel (a network fabric for containers from CoreOS);

- SR-IOV, Cilium (BPF / XDP), Multus (a multi-plug-in for Kubernetes from Intel), VMware NSX, and others.

Unfortunately, there is no catalog or regularly updated list of them, but this should be enough to give a general idea of how widely CNI is used today.

Another indicator of the project's maturity is its integration into existing container runtimes (rkt and Kurma) and container platforms (Kubernetes, OpenShift, Cloud Foundry, Mesos).

Conclusion

The current state of CNI lets us speak not only of the project's "great prospects" but also of its tangible, practical applicability. Acceptance into the CNCF is official recognition by the industry and a guarantee of further development. All this means that it is time to at least get acquainted with CNI, which you will most likely encounter sooner or later.

Source: https://habr.com/ru/post/329830/