Making PNG previews of WebRTC video streams

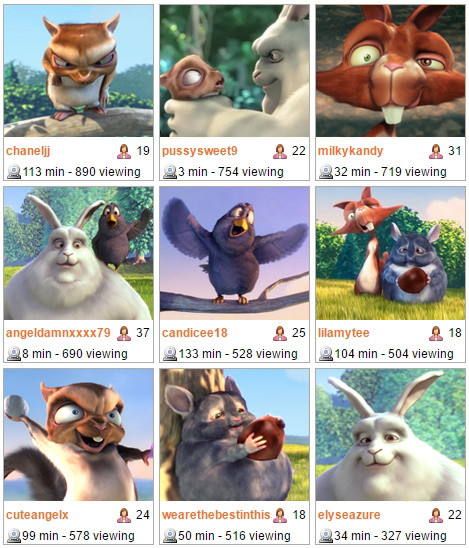

Suppose 10 users are each streaming video from a webcam over WebRTC, and we need to display snapshots (thumbnails) of their streams on a single web page.

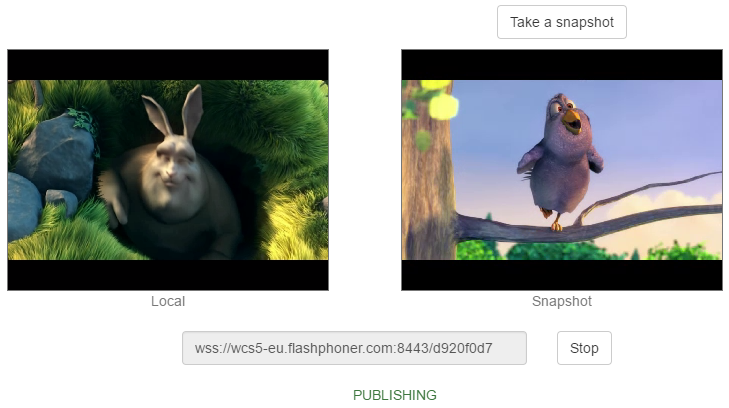

You could simply play the videos instead of showing pictures, but consider the bandwidth: if each stream takes 1 Mbps, playing all ten takes 10 Mbps. Isn't that too much for thumbnails?
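The arithmetic can be sketched as a small script. The 10 streams at 1 Mbps come from the text; the snapshot size (100 KB per PNG) and the polling interval (once a minute) are assumptions chosen only to show the order of magnitude, not measured values.

```javascript
// Compare the downstream bandwidth of live playback with periodic snapshots.
// Only "10 streams at 1 Mbps" comes from the article; the rest is assumed.

function playbackMbps(streamCount, mbpsPerStream) {
  return streamCount * mbpsPerStream;
}

// Average bitrate of fetching one PNG per stream every `intervalSec` seconds.
function snapshotMbps(streamCount, pngKilobytes, intervalSec) {
  const bitsPerSnapshot = pngKilobytes * 1000 * 8;
  return (streamCount * bitsPerSnapshot) / intervalSec / 1e6;
}

const live = playbackMbps(10, 1);         // ten streams at 1 Mbps each
const thumbs = snapshotMbps(10, 100, 60); // assumed: 100 KB PNG, once a minute

console.log(`live playback: ${live} Mbps`);
console.log(`snapshots:     ${thumbs.toFixed(3)} Mbps`);
```

Under these assumptions the snapshots average well under 1 Mbps in total, which is the motivation for requesting single frames instead of playing every stream.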

It would be much better to request a single frame of the video stream from the server, receive it as a PNG, and show that picture in the browser.

First, we implemented downloading PNG images on request:

- An asynchronous request is made: `stream.snapshot();`

- The server saves the image to the file system.

- The user who requested the snapshot gets the URL of the image and can insert it into the page with an HTML tag: `<img src="{url}"/>`

This option worked, but we were not happy with it, mainly because we would have to store the pictures on the server or arrange to upload them to Nginx or Apache for static distribution.

In the end, we decided to encode the PNG in Base64 and send the snapshot to the client in that form. With this approach, no files are served from the server over HTTP: the PNG content is generated and transmitted to the client over WebSockets at the time of the request.
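To make the Base64 step concrete, here is a minimal Node.js sketch of wrapping PNG bytes in Base64 and turning the result into a data URI. It is not the server's actual implementation: the helper names (`toBase64`, `toDataUri`, `base64Length`) are hypothetical, and the 8-byte PNG signature stands in for a real image.

```javascript
// Wrap raw PNG bytes in Base64 and build a data URI that an <img> can display.
// Runs in Node.js; Buffer is a Node.js global. Helper names are hypothetical.

// The 8-byte signature that starts every PNG file, used here as stand-in data.
const pngBytes = Buffer.from([0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a]);

function toBase64(bytes) {
  return Buffer.from(bytes).toString("base64");
}

function toDataUri(base64Png) {
  return "data:image/png;base64," + base64Png;
}

// Padded Base64 emits 4 characters for every 3 input bytes, rounded up.
function base64Length(byteCount) {
  return 4 * Math.ceil(byteCount / 3);
}

const encoded = toBase64(pngBytes);
console.log(toDataUri(encoded));
console.log(encoded.length === base64Length(pngBytes.length));
```

The trade-off is size: Base64 text is about a third larger than the binary PNG, which is usually acceptable for occasional thumbnails.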

Here is a step-by-step description of the process, with pieces of JavaScript code:

1. Alice sends the video stream from her webcam to the server via WebRTC and names it stream1.

```javascript
session.createStream({name:"stream1"}).publish();
```

2. Bob, knowing the name of the stream, can request a snapshot as follows:

```javascript
var stream = session.createStream({name:"stream1"});
stream.snapshot();
```

To receive the snapshot, Bob attaches a STREAM_STATUS.SNAPSHOT_COMPLETE listener, which inserts the Base64 image into an element on the page:

```javascript
stream.on(STREAM_STATUS.SNAPSHOT_COMPLETE, function(stream){
    snapshotImg.src = "data:image/png;base64,"+stream.getInfo();
});
```

The full snapshot example code is available here .

In this example, you can publish a video stream to the server and then take snapshots of it by pressing the Take button. If you wish, you can automate this with a script that takes snapshots of the streams, for example, once a minute.
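Such automation could look roughly like the sketch below. It uses only the calls shown in the snippets above (`createStream`, `on`, `snapshot`, `getInfo`) and assumes they behave as described there. The helper `startSnapshotPolling` and the stand-in `createFakeSession` are hypothetical and exist only so the example runs without a server.

```javascript
// Periodically request snapshots for a list of stream names.
// `session` and `STREAM_STATUS` are passed in and assumed to behave as in the
// article's snippets; startSnapshotPolling itself is a hypothetical helper.
function startSnapshotPolling(session, STREAM_STATUS, names, intervalMs, onSnapshot) {
  function requestAll() {
    names.forEach(function (name) {
      var stream = session.createStream({ name: name });
      // Attach the listener before requesting, so the reply cannot be missed.
      stream.on(STREAM_STATUS.SNAPSHOT_COMPLETE, function (s) {
        onSnapshot(name, "data:image/png;base64," + s.getInfo());
      });
      stream.snapshot();
    });
  }
  requestAll(); // first round immediately
  var timer = setInterval(requestAll, intervalMs);
  return function stop() { clearInterval(timer); };
}

// Stand-in session for trying the helper without a server (hypothetical).
function createFakeSession() {
  return {
    createStream: function (options) {
      var handlers = {};
      var stream = {
        on: function (event, handler) { handlers[event] = handler; return stream; },
        snapshot: function () { handlers["SNAPSHOT_COMPLETE"](stream); },
        getInfo: function () { return "FAKE_" + options.name; }
      };
      return stream;
    }
  };
}

var received = {};
var stop = startSnapshotPolling(
  createFakeSession(),
  { SNAPSHOT_COMPLETE: "SNAPSHOT_COMPLETE" },
  ["stream1", "stream2"],
  60000, // once a minute, as suggested in the text
  function (name, dataUri) { received[name] = dataUri; }
);
stop(); // cancel the timer so this demo exits right away
console.log(received);
```

In a browser, the `onSnapshot` callback would typically assign the data URI to the `src` of the matching `<img>` element. Whether it is better to create a new stream object for each round or reuse one per name depends on the SDK and is left open here.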

On the server side, taking a snapshot requires decoding the video stream. The incoming WebRTC stream is depacketized and decoded, and at that point snapshots can be taken from it. The scheme, with a decoding branch alongside normal playback, works as follows:

For normal playback, streams are not decoded and are passed to the viewer as is. If a snapshot is required, it is taken from the branch of the video stream that goes through decoding.

Snapshots are handled by the WebRTC server Web Call Server 5 and its Web SDK, which provides a JavaScript API for working with snapshots of live broadcasts.

Source: https://habr.com/ru/post/329432/
