Natural Language Processing: How Do You Say It in Russian?

We are surrounded by huge amounts of text in electronic form, holding human knowledge, emotions, and experience. There is also spam posing as useful information, and we need to be able to tell one from the other. People want to communicate with those who do not speak their native language. They also want to control their phones, TVs, and smart homes by voice. All of this drives the demand for, and the rapid development of, Natural Language Processing (NLP) methods.

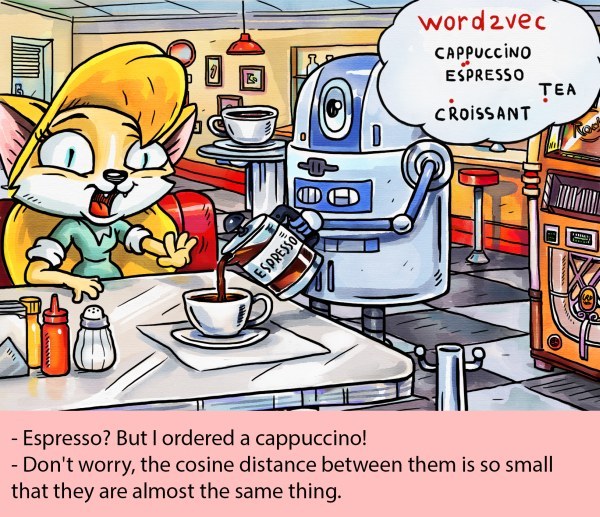

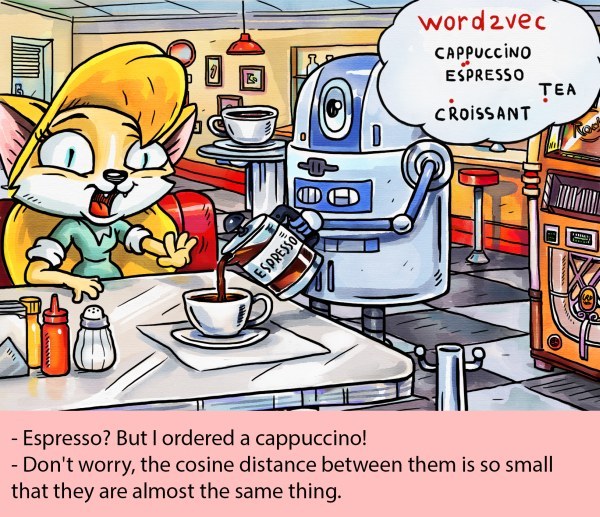

On June 2, my online course “Introduction to Natural Language Processing” launches on the Stepik platform. The format is completely new to me, and this is also the first online course in applied linguistics that focuses on processing Russian and on the data and resources available for it. The course's 10 lectures cover basic linguistic tools and popular applications, and five practical assignments are an important component of the course.

Motivation

When we were preparing the Russian edition of the book “Introduction to Information Retrieval”, many colleagues were skeptical: anyone who really needs it will read the free electronic version in English. After the textbook was published, I taught courses based on it at UrFU, the Yandex School of Data Analysis (ShAD), ITMO, and St. Petersburg State University, and became convinced that having a translation helps a great deal.

There is plenty of NLP learning material online. Coursera alone has hosted three separate courses by renowned researchers in the field (the most recent one by Dragomir Radev, for example). But most of them focus on processing English. For NLP, the need for “localized” learning materials is even more pronounced than for information retrieval. First, every language has features that affect its automatic processing. Second, and more importantly, the methods depend heavily on the available tools and annotated data. Finally, command of the language can strongly influence both the methods being developed and how they are evaluated (for example, I am currently working on automatic humor analysis, and with Russian-language data everything is much more fun than with English ;)

Besides, I had long wanted to try my hand at the MOOC format.

Course structure and content

The course consists of 10 lectures. Four of them are devoted to tools: morphological and syntactic analysis, language models, and modeling word meaning. The other six cover popular applications: information retrieval and question answering, automatic summarization, sentiment analysis, information extraction, and machine translation. Each lecture follows a more or less standard structure: problem statement, description of methods, review of available tools and datasets, and evaluation. The course keeps mathematics and algorithms to a minimum; the emphasis is on methods and their evaluation.

It is very hard to fit every interesting topic into one online course; besides, I do not know every area well enough to include it. For example, I only briefly describe the recent successes of deep learning in language processing tasks. The course does not cover such popular topics as text classification, spelling correction, or dialogue systems. The course has a practical focus, with almost no linguistic theory or in-depth analysis of linguistic phenomena.

Five practical assignments are an important part of the course. Students implement, on their own, methods for morphological analysis, sentiment analysis, automatic document summarization, named entity recognition, and machine translation. Each assignment can be passed with a simple solution (except machine translation, which is an advanced problem marked with an asterisk). I really hope that students will treat simple solutions as a “starting point” for improvements and a gradual approach to the “gold standard”. The course has no deadlines: everyone can choose a comfortable pace.
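To illustrate what a simple passing solution might look like, here is a minimal sketch of a classic extractive summarization baseline that returns the first sentences of a document (the so-called lead baseline). It is a hypothetical example, not the course's reference solution; the function names `split_sentences` and `lead_summary` and the naive regex sentence splitter are assumptions made for illustration.

```python
import re


def split_sentences(text: str) -> list[str]:
    # Naive splitter (an assumption): break after ".", "!" or "?" followed by whitespace.
    # Russian abbreviations such as "т. е." and initials will be split incorrectly.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def lead_summary(text: str, n: int = 2) -> str:
    """Return the first n sentences of the document as its summary."""
    return " ".join(split_sentences(text)[:n])


if __name__ == "__main__":
    doc = ("Курс стартует 2 июня. Он посвящён обработке русского языка. "
           "В курсе пять практических заданий.")
    print(lead_summary(doc, n=2))
```

A baseline like this is easy to improve step by step, for example with a better sentence splitter or a scoring function that picks sentences from anywhere in the text.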

Who is this course for?

The course is aimed at anyone who wants a general understanding of the field and practical skills in solving natural language processing problems. Students should ideally have basic knowledge of linear algebra, probability theory, mathematical statistics, and machine learning, as well as programming skills (needed for the practical assignments). No special linguistic knowledge is required; a school-level understanding is enough. I hope the course will also interest (non-computational) linguists as an overview of automatic language processing methods.

Notes on the preparatory stage

Meaningful conclusions can only be drawn from students' feedback, but for now I can share some impressions of the preparation process.

Few people like the sound of their own voice in a recording, let alone the problems with diction, facial expressions, and gestures that become so obvious. Besides, it seemed to me that I speak very slowly on the recording. Watching the footage vividly reminded me of how, about 20 years ago, I briefly worked on the student radio program SoundTrack and realized I had no talent for it. For perfectionists without acting experience, this is hard. I tried to concentrate on the content and watched the recordings at 1.7x speed.

While recording, I noticed that without a live audience reaction I avoid discussing nuances and subtleties: such details usually relate to my own interests and experience, and I am not sure everyone finds them interesting or useful. This is probably a side effect of mass courses that I had not thought about before. And it is hard to joke to a camera :)

Developing practical assignments with automatic grading was a separate challenge. All assignments in the course are Kaggle-style: given input data, produce output data. For some interesting tasks, we could not find “gold standard” data for automatic checking. Nevertheless, we managed to put together five relevant and realistic assignments (for example, we had to prepare a dedicated dataset for the automatic summarization assignment). Perhaps it would have been better to start preparing the course with the assignments rather than the lectures.
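Kaggle-style checking boils down to comparing a submitted output with a gold standard. As a hypothetical example for the named entity assignment, the sketch below scores predicted entities against gold ones by exact match and reports precision, recall, and F1. The tuple format `(doc_id, start, end, entity_type)` and the function name `score_entities` are assumptions, not the course's actual grader.

```python
def score_entities(gold: set[tuple], predicted: set[tuple]) -> dict[str, float]:
    """Exact-match precision, recall and F1 over sets of entity tuples.

    Each tuple is assumed (hypothetically) to be (doc_id, start, end, entity_type);
    a predicted entity counts as correct only if all four fields match a gold entity.
    """
    true_positives = len(gold & predicted)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    if precision + recall == 0:
        f1 = 0.0
    else:
        f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    gold = {(1, 0, 6, "PER"), (1, 20, 26, "LOC"), (2, 5, 11, "ORG")}
    # The second prediction has the right span but the wrong type, so it does not count.
    predicted = {(1, 0, 6, "PER"), (1, 20, 26, "ORG")}
    print(score_entities(gold, predicted))  # precision 0.5, recall ≈ 0.333, F1 ≈ 0.4
```

Exact matching is the strictest option; a grader could instead give partial credit for overlapping spans, which is a design choice the post does not specify.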

Thanks

The online course was created with the support of a grant from the V. Potanin Foundation. Stepik not only hosted the course but also handled the video recording and editing. Kirill Mishchenko prepared the data for the practical assignments and implemented the automatic grading code. The assignments use data provided by the Russian National Corpus, Yandex, imhonet, and OpenCorpora. The data for the automatic evaluation of summaries were prepared by Ekaterina Pyrozhok, Irina Ermolina, and Mikhail Kazarin. Dmitry Kuznetsov and Olga Annenkova test-solved the assignments. Maxim Khalilov, Alexey Zobnin, Victor Bocharov, and Olga Mitrenina helped me a lot with advice and recommendations while I was preparing the course. Most of the borrowed slides are by Dan Jurafsky and Philipp Koehn. The picture in this article is from @data_monsters. Thank you all very much!

Source: https://habr.com/ru/post/329386/