You measure CPU usage incorrectly.

The metric we call “CPU load” is widely misunderstood. What is “CPU load”? Is it a measure of how busy the processor is? No, it is not. And yes, I am talking about the very same CPU usage that every performance analysis tool shows, from Windows Task Manager to the Linux top command.

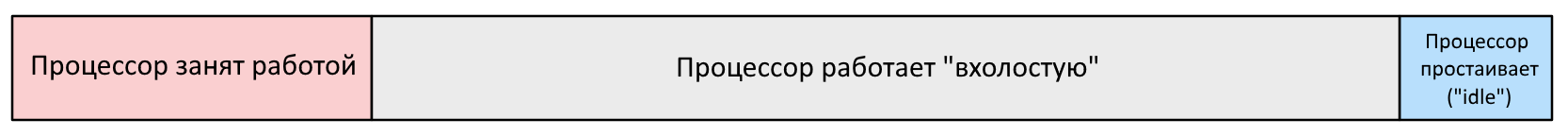

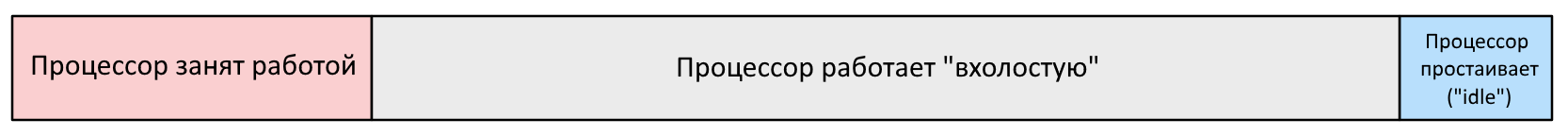

What does “the CPU is 90% loaded” mean? Perhaps you picture a processor that spends 90% of its time doing real work.

In fact, a large part of that “busy” time is spent running idle.

“Running idle” means that the processor is able to execute instructions but does not, because it is waiting for something, for example for data to arrive from main memory. This split between real work and “idle” work is what I see day after day in real applications on real servers. There is a good chance that your program spends its time in much the same way, and you do not know about it.

What does this mean for you? Knowing how much time the processor actually spends executing operations, and how much it merely waits for data, sometimes lets you change your code to reduce the traffic to and from RAM. This matters especially on today's cloud platforms, where autoscaling policies are sometimes tied directly to CPU load, so every extra clock cycle of “idle” work costs real money.

What is the CPU load really?

The metric we call “CPU load” actually means something like “non-idle time”: the amount of time the processor spent in all threads except the special idle thread. The kernel of your operating system (whichever it is) measures this time when it switches context between threads of execution. If execution was switched to a non-idle thread that then ran for 100 milliseconds, the kernel counts those 100 milliseconds as time the CPU spent doing real work in that thread.
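As a rough illustration of “non-idle time”, here is a minimal Python sketch that derives a load figure from two snapshots of the aggregate `cpu` line of Linux's `/proc/stat`. The function names and the sample numbers are invented for this example, and treating only the `idle` column as idle (rather than `idle` plus `iowait`) is an assumption; real tools may differ on that point.

```python
def parse_cpu_line(line):
    """Parse the aggregate 'cpu' line of /proc/stat into tick counters."""
    fields = line.split()
    if fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    # Keep the first eight counters (user .. steal). Guest time is already
    # included in user time, so adding it again would double count.
    return [int(value) for value in fields[1:9]]


def non_idle_fraction(before, after):
    """Share of elapsed ticks that were NOT spent in the idle thread.

    Counter order: user, nice, system, idle, iowait, irq, softirq, steal.
    Assumption: only the 'idle' column (index 3) counts as idle.
    """
    deltas = [b - a for a, b in zip(before, after)]
    total = sum(deltas)
    if total == 0:
        return 0.0
    return (total - deltas[3]) / total


# Two made-up snapshots taken some interval apart (illustrative numbers only).
sample_1 = parse_cpu_line("cpu 1000 0 500 8000 100 0 0 0")
sample_2 = parse_cpu_line("cpu 1800 0 600 8100 100 0 0 0")
utilization = non_idle_fraction(sample_1, sample_2)
print(f"CPU load: {utilization:.0%}")
```

Note that nothing in this calculation can tell whether the non-idle ticks were spent retiring instructions or stalled on memory.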

The metric first appeared in this form together with time-sharing operating systems. The programmer's manual for the computer of the Apollo lunar module (an advanced time-sharing system for its day) gave its idle thread the special name DUMMY JOB, and the engineers compared the number of instructions executed by that thread with the number executed by the worker threads. This gave them an understanding of CPU usage.

So what's wrong with this approach?

Today, processors are much faster than RAM, and waiting for data takes up the lion's share of what we are used to calling “CPU time”. When you see a high CPU utilization percentage in the output of the top command, you may conclude that the processor (that piece of hardware on the motherboard under the heat sink and fan) is the bottleneck, although in reality the bottleneck is a completely different device: the memory modules.

The situation is getting worse over time. For a long time, processor manufacturers increased the speed of their cores faster than memory manufacturers increased access speed and reduced latency. Around 2005, processors running at 3 GHz appeared on the market, and manufacturers turned to increasing the number of cores, hyper-threading, and multi-socket configurations, all of which put even greater demands on the speed of data exchange. Processor manufacturers have tried to ease the problem with larger processor caches, faster buses, and so on. This helped a little, of course, but did not change the situation drastically. Already today, most of the time counted as “CPU load” is spent waiting for memory, and the situation keeps getting worse.

How to understand what the processor actually does

By using hardware performance counters. On Linux, they can be read with perf and similar tools. Here, for example, is a measurement of the entire system over 10 seconds:

    # perf stat -a -- sleep 10

     Performance counter stats for 'system wide':

         641398.723351      task-clock (msec)         #   64.116 CPUs utilized            (100.00%)
               379,651      context-switches          #    0.592 K/sec                    (100.00%)
                51,546      cpu-migrations            #    0.080 K/sec                    (100.00%)
            13,423,039      page-faults               #    0.021 M/sec
     1,433,972,173,374      cycles                    #    2.236 GHz                      (75.02%)
       <not supported>      stalled-cycles-frontend
       <not supported>      stalled-cycles-backend
     1,118,336,816,068      instructions              #    0.78  insns per cycle          (75.01%)
       249,644,142,804      branches                  #  389.218 M/sec                    (75.01%)
         7,791,449,769      branch-misses             #    3.12% of all branches          (75.01%)

          10.003794539 seconds time elapsed

The key metric here is the number of instructions per cycle (insns per cycle, IPC), which shows how many instructions the processor executed on average in each clock cycle. Simply put: the higher this number, the better. In the example above it equals 0.78, which at first glance does not look like such a bad result (useful work was done 78% of the time?). But no: on this processor the maximum possible IPC is 4.0 (this follows from the way modern processors fetch and execute instructions). So our IPC of 0.78 is only 19.5% of the maximum possible instruction execution rate. And on Intel processors starting with Skylake, the maximum IPC is already 5.0.
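The arithmetic behind these figures can be reproduced with a few lines of Python. This is only a sketch: the two counter values are copied from the perf output above, the function names are made up for the example, and the peak of 4.0 is the value the text quotes for this particular processor.

```python
def instructions_per_cycle(instructions, cycles):
    """Average number of instructions retired per clock cycle."""
    return instructions / cycles


def share_of_peak(ipc, max_ipc):
    """Measured IPC as a fraction of the processor's theoretical maximum."""
    return ipc / max_ipc


# Counter values taken from the perf stat output shown in the text.
instructions = 1_118_336_816_068
cycles = 1_433_972_173_374
MAX_IPC = 4.0  # peak quoted in the text for this processor

ipc = instructions_per_cycle(instructions, cycles)
share = share_of_peak(ipc, MAX_IPC)
print(f"IPC = {ipc:.2f}, which is {share:.1%} of the peak")
```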

In the cloud

When you work in a virtual environment, you may not have access to the real performance counters (this depends on the hypervisor and its settings). Here is an article on how it works in Amazon EC2.

Data Interpretation and Response

If your IPC is < 1.0, then congratulations: your application is most likely stalled waiting for data from RAM. In this case your optimization strategy is not to reduce the number of instructions in the code but to reduce the number of memory accesses and make better use of the caches, especially on NUMA systems. On the hardware side (if you can influence it), it makes sense to choose processors with larger caches, and faster memory and buses.

If your IPC is > 1.0, then your application suffers not so much from waiting for data as from executing too many instructions. Look for more efficient algorithms, avoid unnecessary work, and cache the results of repeated operations. Tools for building and analyzing Flame Graphs can be a great way to see what is going on. On the hardware side, you can use faster processors and increase the number of cores.

As you can see, I drew the line at an IPC of 1.0. Where did I get this number? I calculated it for my platform, and if you do not trust my estimate, you can calculate it for yours. To do this, write two applications: one must keep the processor 100% busy executing instructions (without actively accessing large blocks of RAM), and the other, conversely, must actively manipulate data in RAM while avoiding heavy computation. Measure the IPC of each and take the average. That is the approximate turning point for your architecture.
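The calibration and the resulting rule of thumb can be sketched in Python as follows. The function names and the two calibration readings (1.8 and 0.2) are hypothetical values chosen for illustration, not measurements; treating an IPC exactly equal to the threshold as instruction-bound is also an arbitrary choice of this sketch.

```python
def ipc_threshold(ipc_compute_bound, ipc_memory_bound):
    """Turning point: the average of the IPC of the two calibration programs."""
    return (ipc_compute_bound + ipc_memory_bound) / 2


def classify(ipc, threshold=1.0):
    """Rule of thumb from the text: below the threshold, suspect memory stalls."""
    if ipc < threshold:
        return "memory-bound: reduce memory accesses, use caches better"
    return "instruction-bound: reduce the amount of executed code"


# Hypothetical calibration readings (NOT real measurements).
threshold = ipc_threshold(ipc_compute_bound=1.8, ipc_memory_bound=0.2)

for ipc in (0.65, 1.44, 2.14):
    print(f"IPC {ipc:.2f} -> {classify(ipc, threshold)}")
```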

What performance monitoring tools really should show

I believe that every performance monitoring tool should show the IPC value next to the processor load. This is done, for example, in the Linux tiptop tool:

    tiptop -                  [root]
    Tasks:  96 total,   3 displayed                               screen  0: default

      PID [ %CPU] %SYS    P   Mcycle   Minstr   IPC  %MISS  %BMIS   %BUS COMMAND
     3897   35.3  28.5    4   274.06   178.23  0.65   0.06   0.00    0.0 java
     1319+   5.5   2.6    6    87.32   125.55  1.44   0.34   0.26    0.0 nm-applet
      900    0.9   0.0    6    25.91    55.55  2.14   0.12   0.21    0.0 dbus-daemo

Other reasons for the misinterpretation of the term "CPU usage"

Waiting for data from RAM is not the only reason a processor may do its work more slowly. Other factors include:

- Thermal trips: the processor stalls when it overheats

- Turbo Boost, which varies the processor clock frequency

- Changes in the CPU core frequency

- The problem of averages: an average load of 80% over a one-minute measurement period may not look catastrophic, but it can hide bursts of 100%

- Spin locks: the processor is busy executing instructions and shows a high IPC, but the application is actually spinning on locks and doing no real work
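The averaging problem from the list above is easy to demonstrate. The following Python sketch uses a made-up series of per-second load samples (not real measurements) whose one-minute average is 80% even though the processor was saturated for two thirds of that minute.

```python
from statistics import mean

# Made-up per-second load samples for one minute:
# 40 seconds fully saturated, 20 seconds at 40%.
samples = [100] * 40 + [40] * 20

average = mean(samples)
peak = max(samples)
saturated_seconds = sum(1 for load in samples if load >= 100)

print(f"average over the minute: {average}%")
print(f"peak: {peak}%, seconds at 100%: {saturated_seconds}")
```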

Conclusions

CPU load has become a substantially misunderstood metric: it includes the time spent waiting for data from RAM, which can take even longer than executing real instructions. You can determine the actual CPU usage with additional metrics, such as the number of instructions per cycle (IPC). Values below 1.0 indicate that you are limited by the speed of data exchange with memory, and values above 1.0 indicate that the processor is heavily loaded with instructions. Performance measurement tools should be improved to display IPC (or something similar) right next to the CPU load, which would give the user a complete picture of the situation. With all this data in hand, developers can optimize their code in exactly those places where it will bring the greatest benefit.

Source: https://habr.com/ru/post/329206/
