Why constant traffic filtering is a necessity

Filtering encrypted traffic without disclosing encryption keys.

In discussions we often hear that a DDoS mitigation service based on constant traffic filtering is less efficient and more expensive than on-demand filtering.

The arguments in such discussions hardly change over time: the high cost of continuous filtering is weighed against the delay needed to bring a specialist or equipment into the mitigation process when filtering is on demand.

Qrator Labs would like to clarify its position by offering some arguments on how permanent filtering differs from on-demand filtering, and why the first option is in fact the only workable one.

One of the key reasons is that modern attacks develop very quickly: they evolve and grow more complex in real time. The service itself evolves too, as do the site and the application, so the “normal” user behavior observed during the previous attack may no longer be relevant.

With manual filtering, the technical specialists of a DDoS mitigation provider in most cases need time not only to understand what is happening, but also to develop the right strategy and the sequence of specific actions. In addition, such a specialist needs to know exactly when and how the attack vector changes in order to neutralize it effectively at the client's request.

Connecting while under attack is a separate difficulty, mainly because availability has already degraded for all users trying to reach the service. If the attack succeeded and users did not receive the requested resource, they try again, simply by refreshing the page or reloading the application. This makes the attack scenario worse, because it becomes harder to distinguish garbage traffic from legitimate traffic.

Where the mitigation service is actually deployed (in the cloud, physically at the client's site, or in a partner's data center) is often a key requirement for its implementation. Any of these placements allows continuous filtering, automatic or manual, and therefore detection and neutralization of attacks. But the availability of automatic filtering is the key requirement.

Most often, cloud mitigation services filter all incoming traffic, so it becomes fully available for analysis. Physical equipment installed at the network edge, or receiving mirrored traffic, provides almost the same opportunities to monitor and neutralize attacks in real time.

Some vendors recommend analyzing traffic using NetFlow or other derived metrics, which is itself a compromise that worsens results: third-party or derived metrics convey only part of the information about the data, narrowing the options for detecting and neutralizing an attack. Conversely, cloud services are not obliged to analyze 100% of incoming traffic, yet most often they do, because this approach is the best way to build models and train algorithms.

Another disadvantage of using NetFlow as the main analysis tool is that it provides only certain characteristics of data flows, a description of them, but not the flows themselves. You will certainly notice an attack that shows up in the parameters NetFlow reports, but more complex attacks that can only be detected by analyzing the contents of the flow will remain invisible. Attacks on the application layer (L7) are therefore difficult to repel using NetFlow metrics alone, except when the attack is completely obvious at the transport level (because above L4 NetFlow is frankly useless).
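The point about flow descriptions versus flow contents can be illustrated with a minimal sketch. The `FlowRecord` type, the `summarize` and `shape` helpers, the addresses, and the two sample requests are all hypothetical; the record is a simplified subset of the kind of fields a flow export carries, not the actual NetFlow record layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRecord:
    """Hypothetical, simplified flow summary (not the real NetFlow layout)."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: int  # 6 = TCP
    packets: int
    octets: int


def summarize(src_ip, src_port, dst_ip, dst_port, payloads):
    """Describe one TCP connection by counters only; payload contents are dropped."""
    return FlowRecord(
        src_ip, src_port, dst_ip, dst_port, 6,
        packets=len(payloads),
        octets=sum(len(p) for p in payloads),
    )


def shape(record):
    """Everything a flow-based detector sees apart from the source endpoint."""
    return (record.dst_ip, record.dst_port, record.protocol,
            record.packets, record.octets)


# Two different application-layer requests of the same size (assumed examples).
legit_payloads = [b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n"]
bot_payloads = [b"GET /search?q=x HTTP/1.1\r\nHost: example.com\r\n\r\n"]

legit_flow = summarize("192.0.2.10", 40001, "203.0.113.5", 80, legit_payloads)
bot_flow = summarize("198.51.100.7", 40002, "203.0.113.5", 80, bot_payloads)

# The payloads differ, but the flow summaries look the same.
print(legit_payloads != bot_payloads)
print(shape(legit_flow) == shape(bot_flow))
```

A detector that receives only such summaries has nothing to tell these two requests apart, whereas a filter that sees the traffic itself can inspect the request line.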

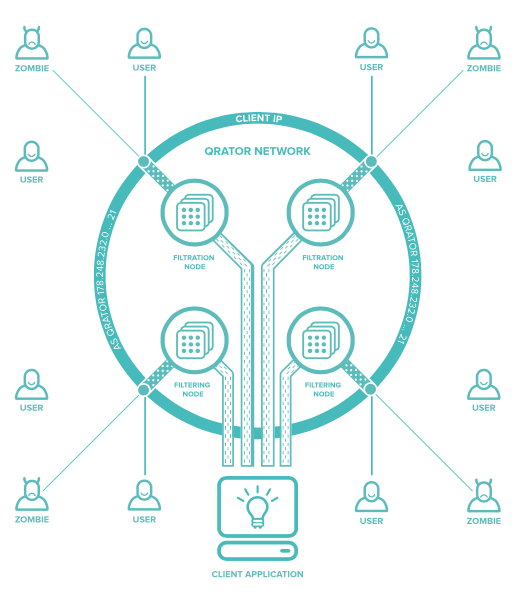

General scheme of connecting to the filtering network.

1. Why do cloud DDoS neutralization service providers offer “constant filtering” even if no attack is currently taking place?

The answer is simple: constant filtering is the most effective way to neutralize attacks. It should be added that physical equipment placed at the client's site is not much different from cloud filtering, the only exception being that the box is switched on and off physically somewhere in the data center. Either way there is a choice to be made: whether to keep the device working always or only when necessary.

Theoretically, filtering can indeed increase network latency, especially if the nearest node is geographically distant while the client needs a local resource and local traffic. But what we see in most cases is a reduction in total latency thanks to faster TCP and HTTPS handshakes on a well-built filtering network with sensible node placement. Hardly anyone will argue that a properly built mitigation network has a better topology (faster and more reliable) than the network it protects.

Saying that reverse proxying limits filtering to the HTTP and HTTPS (SSL) protocols tells only half of the truth. HTTP traffic is an integral and crucial part of complex filtering systems, and reverse proxying is one of the most efficient ways to collect and analyze it.

2. As we know, distributed denial of service attacks can take many forms and change over time, moving away from the HTTP protocol. Why is the cloud in this case better than stand-alone equipment on the client's side?

Individual nodes of a filtering network can be overloaded just as equipment in a rack can. No single hardware box is powerful enough to cope with every attack alone; a complex, comprehensive system is required.

Nevertheless, even the largest equipment manufacturers recommend switching to cloud filtering for the most serious attacks, because their clouds consist of the same equipment organized in clusters, each of which is by default more powerful than a single appliance placed in a data center. In addition, your box works only for you, whereas a large filtering network serves dozens or hundreds of clients; such a network is designed from the outset to process volumes of data an order of magnitude larger in order to neutralize an attack successfully.

Before an attack, it is impossible to say for sure which will be easier to knock out: a stand-alone appliance (CPE) or a node of a filtering network. But consider this: the failure of a single node is always your vendor's problem, whereas a piece of equipment that fails to work as advertised after purchase is your problem alone.

3. A network node acting as a proxy server must be able to obtain content and data from the resource. Does this mean that anyone can bypass the cloud mitigation solution?

If there is no dedicated physical line between you and the security service provider, then yes.

It is true that without a dedicated channel from the client to the mitigation provider, attackers can attack the service's native IP address. Not all providers of such services offer leased lines between themselves and the client at all.

In general, switching to cloud filtering means making the relevant announcements via BGP. In this case, the individual IP addresses of the service under attack are hidden and unreachable for the attacker.

4. Sometimes the ratio between the price of the service and what it costs the provider to deliver it is used as an argument against cloud filtering. How does this compare with equipment located on the client side?

It is safe to say that no matter how small a denial of service attack is, the cloud mitigation provider has to process all of them, even though the internal cost of building such networks is always calculated on the assumption that every attack is intense, large, long and smart. On the other hand, this does not at all mean that the provider loses money by selling clients protection from everything while in practice dealing mainly with small and medium attacks. Yes, the filtering network may spend slightly more resources than in an "ideal" state, but when an attack is neutralized successfully, no one will ask questions. Both the client and the provider will be satisfied with the partnership and will most likely continue it.

Imagine the same situation with on-premises equipment: it costs an order of magnitude more up front, requires skilled hands for maintenance and... it will still have to handle small and rare attacks. When you were planning to buy such equipment, which is not cheap anywhere, did you think about that?

The claim that a separate box, together with a contract for installation, technical support and the salaries of highly qualified engineers, will ultimately be cheaper than buying a suitable plan in the cloud is simply incorrect. The final cost of the equipment and of each hour of its operation is very high, and this is the main reason why DDoS protection and mitigation became an independent business and formed an industry; otherwise we would see an attack protection unit in every IT company.

Given that an attack is a rare event, the solution for neutralizing it must be built accordingly and must be able to neutralize these rare attacks successfully. At the same time, it should cost a reasonable amount, because everyone understands that most of the time nothing terrible happens.

Cloud providers design and build their networks efficiently in order to pool their risks and cope with attacks by distributing traffic between filtering points, which consist of both hardware and software: two parts of a system created with one goal.

Here we are talking about the law of large numbers, familiar from probability theory. It is the reason why Internet service providers sell more channel capacity than they actually possess. Hypothetically, all clients of an insurance company could get into trouble at the same time, but in practice this has never happened. And even though an individual insurance payout can be huge, the insurance business does not go bankrupt every time someone has an accident.
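The risk-pooling argument can be made concrete with a small calculation. This is a minimal sketch under loudly stated assumptions: clients are attacked independently of each other, and the figures (100 clients, a 1% chance that any given client is under attack at a given moment, capacity for 5 simultaneous attacks) are invented for illustration and do not come from any real network.

```python
from math import comb


def prob_more_than(k, n, p):
    """P(X > k) for X ~ Binomial(n, p): more than k of n clients attacked at once."""
    at_most_k = sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))
    return 1.0 - at_most_k


# Illustrative assumptions, not measured data.
clients = 100
p_attack = 0.01           # chance that a given client is under attack right now
provisioned_attacks = 5   # simultaneous attacks the shared network is sized for

p_any = prob_more_than(0, clients, p_attack)
p_overflow = prob_more_than(provisioned_attacks, clients, p_attack)

print(f"P(at least one client under attack) = {p_any:.3f}")
print(f"P(more than {provisioned_attacks} at once)      = {p_overflow:.6f}")
```

Under these assumptions some client is under attack most of the time, yet the shared network sized for only a handful of simultaneous attacks is almost never exceeded. Real attacks are not fully independent, so the sketch shows the direction of the effect rather than a sizing rule.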

People who neutralize denial of service attacks professionally know that the cheapest, and therefore most common, attacks rely on amplifiers and can in no way be characterized as "small."
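A rough arithmetic sketch shows why amplified attacks are rarely small. The request size, response size and attacker uplink below are hypothetical values chosen for illustration, and the helper names are made up for this example.

```python
def amplification_factor(request_bytes, response_bytes):
    """Bandwidth amplification: bytes sent to the victim per byte the attacker sends."""
    return response_bytes / request_bytes


def amplified_rate_bps(attacker_rate_bps, factor):
    """Traffic rate arriving at the victim, assuming every request is answered in full."""
    return attacker_rate_bps * factor


# Hypothetical figures: a 60-byte spoofed query triggers a 3000-byte reply.
factor = amplification_factor(request_bytes=60, response_bytes=3000)

# Hypothetical attacker with a 100 Mbit/s uplink.
victim_rate = amplified_rate_bps(100e6, factor)

print(f"amplification factor: {factor:.0f}x")
print(f"traffic at the victim: {victim_rate / 1e9:.1f} Gbit/s")
```

With these assumed numbers a modest uplink already produces multi-gigabit traffic at the target, which is the sense in which cheap attacks are not small.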

At the same time, even if we accept that equipment bought with a one-time payment will stay on the physical site forever, attack methods will keep evolving. There is no guarantee that yesterday's equipment will cope with tomorrow's attack; that is only an assumption. The substantial investment made in such equipment therefore starts losing value from the moment of installation, not to mention the need for constant maintenance and updates.

To neutralize DDoS, it is important to have a highly scalable solution with high connectivity, which is very difficult to achieve by purchasing a separate box.

When a serious attack occurs, any stand-alone equipment will attempt to signal to the cloud that the attack has begun so that traffic can be distributed across filtering points. What no one mentions is that when the channel is clogged with garbage, there is no guarantee that this message will reach the cloud. And again, switching the data flow takes time.

Therefore, the only real price, apart from money, that a customer can pay for protecting their infrastructure from denial of service attacks is latency, and nothing else. But, as we have said, properly built clouds reduce latency and improve the global availability of the requested resource.

Keep this in mind when choosing between a hardware box and a filtering cloud.

Source: https://habr.com/ru/post/328998/